We write an asynchronous parser and image scraper in Python with a graphical interface

In this article we will create a desktop application that, on request, saves a specified number of pictures to disk. Since there will be many pictures, we will use Python's asynchrony to perform the I/O operations concurrently, and we will see how the requests and aiohttp libraries differ. We will also run the parser and scraper in two additional threads so that long network operations do not freeze the graphical interface.

Instead of a thousand words…

So that you can better understand what I'm talking about, I'll just show you what the end result should be and how it will work:

When starting to build an application, we first define its main classes, each responsible for one strictly defined function and limited to that task only.

GUI classes

We will have a separate GUI class called UI: the main window of the program. This window contains two different frames, and we represent each of them with its own class as well:

The SearchFrame class will be responsible for entering a search query that will be used to search for images.

The ScraperFrame class will display the number of pictures available for download and let the user set the save path, the picture size and the number of pictures. This frame also contains a progress bar for downloading and saving, and an information field.

We implement these classes using the standard Python library – tkinter.

We have decided on the GUI classes. Now we need to figure out exactly how to search for, download and save images.

Class for parsing: PictureLinksParser

Choosing a photo hosting

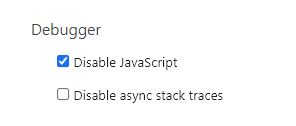

First, we need to choose a photo hosting site where the pictures are stored and analyze exactly how the photos are placed on its pages. To do this, we disable JavaScript in the browser's developer tools and look at how the site behaves.

If all the content disappears when we refresh the page, we are most likely dealing with a single-page application (SPA). Parsing such sites requires more "advanced" Python tools, for example Scrapy with Splash or, even worse, Selenium. Scrapy is a great tool, but not for our case. Remember the KISS principle? So we look for a site where JavaScript has a negligible effect on the content.
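The check above is done by hand in the browser. A rough scripted analogue is to look at the HTML exactly as the server sends it, with no JavaScript executed, and count the pictures in it. The sketch below uses only the standard library's `html.parser`; the two sample pages are hypothetical, and in practice the HTML would come from an HTTP client such as aiohttp.

```python
from html.parser import HTMLParser


class ImgCounter(HTMLParser):
    """Counts <img> tags that carry a non-empty src attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs
        if tag == "img" and dict(attrs).get("src"):
            self.count += 1


def images_in_static_html(html: str) -> int:
    """Returns how many pictures are present before any JavaScript runs."""
    counter = ImgCounter()
    counter.feed(html)
    counter.close()
    return counter.count


# Hypothetical pages: server-rendered content vs. an empty SPA shell
server_rendered = '<div class="photo"><img src="//example.com/a.jpg"></div>'
spa_shell = '<div id="root"></div><script src="app.js"></script>'

print(images_in_static_html(server_rendered))  # 1
print(images_in_static_html(spa_shell))        # 0
```

A count of zero on a page that shows pictures in the browser is a strong hint that the content is rendered by JavaScript.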

My choice fell on flickr.com. The only problem with this site is that it has no pagination when displaying images: new pictures appear as you scroll the feed. Without scrolling, we get fewer than 25 pictures. A little later in the article I will show a rather clever way to get around this limitation.

Selecting a parsing library

One of the simplest HTML parsing libraries for Python is Beautiful Soup, and it is quite sufficient for our task.

Selecting a Web Request Library

Everyone knows the requests library. Its drawback for our task is that it is blocking: while a request is being sent and the response received, the thread that made the call can do nothing else. (This is not caused by the GIL, the global interpreter lock that prevents a Python process from executing more than one bytecode instruction at a time; the GIL is actually released while a thread waits on the network.) You may ask why requests is so widely used, then. For a single web request, the blocking is unnoticeable. But imagine 1000 such requests made one after another: until all of them are completed, the rest of the program waits. Non-blocking libraries were created to solve this problem. One example is aiohttp, which can also send web requests.

And here is one important idea: for a single web request, aiohttp gives us no advantage over requests. aiohttp only pays off when we execute many web requests concurrently.
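The difference can be illustrated without any network access. In this sketch `asyncio.sleep` stands in for waiting on a server (an assumption for illustration): awaiting the fake requests one by one behaves like a loop of blocking `requests.get` calls, while `asyncio.gather` lets all of them wait at the same time, as concurrent aiohttp requests would.

```python
import asyncio
import time


async def fake_request(delay: float) -> float:
    """Stands in for a network request: waits without blocking the loop."""
    await asyncio.sleep(delay)
    return delay


async def run_sequentially(n: int, delay: float) -> float:
    start = time.perf_counter()
    for _ in range(n):
        await fake_request(delay)  # each request waits for the previous one
    return time.perf_counter() - start


async def run_concurrently(n: int, delay: float) -> float:
    start = time.perf_counter()
    # all n coroutines are waiting at the same time
    await asyncio.gather(*(fake_request(delay) for _ in range(n)))
    return time.perf_counter() - start


seq = asyncio.run(run_sequentially(10, 0.1))
conc = asyncio.run(run_concurrently(10, 0.1))
print(f"sequential: {seq:.2f} s, concurrent: {conc:.2f} s")
```

With ten requests of 0.1 s each, the sequential version takes about one second and the concurrent one about 0.1 s; with a single request the two would be the same.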

The PictureLinksParser class makes only one web request, to retrieve the HTML document. But since another class will perform many web requests concurrently, we install only aiohttp and use it here as well, rather than adding requests as an extra dependency for a single call. The parsing algorithm is as follows:

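```python
import aiohttp
from bs4 import BeautifulSoup

# PHOTO_CONTAINER and PHOTO_CLASS are project constants defined elsewhere:
# the tag name and CSS class of the page blocks that contain the photos.


class PictureLinksParser:
    # __init__, add_links() and the url attribute are omitted here.

    def parse_html_to_get_links(self, html: str) -> None:
        """Parses HTML and adds links to the array."""
        soup = BeautifulSoup(html, 'lxml')
        box = soup.find_all(PHOTO_CONTAINER, class_=PHOTO_CLASS)
        for tag in box:
            img_tag = tag.find('img')
            src_value = img_tag.get('src') if img_tag is not None else None
            if not src_value:
                continue  # skip containers without a usable picture
            # src is expected to be scheme-relative ("//host/path")
            self.add_links('https:' + src_value)

    async def get_html(self) -> None:
        """Downloads HTML with pictures links."""
        # The session manages connections; both async context managers
        # close their resources correctly, even if an exception occurs.
        async with aiohttp.ClientSession() as session:
            async with session.get(self.url) as response:
                html = await response.text()
                self.parse_html_to_get_links(html)
```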

This is where the first disadvantage of aiohttp compared to requests shows up. requests has a simple interface: you call get() with the page address and receive the page. In aiohttp, we first create a client session, the runtime environment for making HTTP requests and managing connections, and we use asynchronous context managers so that the session and the response are opened and closed correctly.

Conclusions:

If the program makes only one request (obtaining a token, fetching one web page), use the requests library (or reuse aiohttp if the project already depends on it, as we do here).

If the program needs to execute many requests concurrently, use aiohttp (or another non-blocking library).

Class for scraping pictures: PictureScraperSaver

Once the PictureLinksParser class has collected a set of picture links, we need to visit these addresses and save the pictures to disk.

Sets in Python: set()

This is a standard data type that everyone knows. A set is a very fast collection built on a hash table, and it contains only unique elements. A peculiarity of our photo hosting is that when you repeat a request, the HTML document sometimes contains completely new links that were not in the previous response. Because we store the links in a set, repeating a request with the same keyword simply adds the new links: the size of the set grows by exactly the number of new unique links, while duplicates are ignored. This is how we get around the limit on the number of pictures per page mentioned earlier.
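A minimal sketch of this idea, assuming a hypothetical `fetch_batch()` function that performs one request with the same keyword and returns the links it found: repeat the request and merge every batch into a set until enough unique links are collected or the attempts run out. The batches and URLs below are placeholders, not real Flickr responses.

```python
from typing import Callable, Iterable


def collect_links(fetch_batch: Callable[[], Iterable[str]],
                  wanted: int, max_attempts: int) -> set[str]:
    """Repeats the same request and accumulates unique links in a set."""
    links: set[str] = set()
    for _ in range(max_attempts):
        links.update(fetch_batch())  # duplicates are silently ignored
        if len(links) >= wanted:
            break
    return links


# Hypothetical responses: each repeated request returns partly new links
batches = iter([
    ["https://example.com/1.jpg", "https://example.com/2.jpg"],
    ["https://example.com/2.jpg", "https://example.com/3.jpg"],
    ["https://example.com/3.jpg", "https://example.com/4.jpg"],
])
result = collect_links(lambda: next(batches), wanted=4, max_attempts=5)
print(len(result))  # 4
```

The `max_attempts` cap is a design choice of this sketch: if the site stops returning new links, the loop still ends.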

Executing web requests concurrently

So we have a lot of links. Now we need to go through them and save the pictures to disk. I implemented it as follows (for better readability, I did not use a list comprehension):

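```python
import asyncio
import logging
from http import HTTPStatus

from aiohttp import ClientSession


class PictureScraperSaver:
    # __init__ (links_array, total_requests, save_path, picture_name,
    # refresh_rate, callback, completed_requests) is omitted here.

    async def _save_image(self, session: ClientSession, url: str) -> None:
        """Asynchronously downloads the image and saves it on disk."""
        try:
            response = await session.get(url)
            if response.status == HTTPStatus.OK:
                image_data = await response.read()
                # No await between naming the file and incrementing the
                # counter below, so concurrent coroutines get unique names
                pic_file = f'{self.picture_name}{self.completed_requests}'
                with open(f'{self.save_path}/{pic_file}.jpg', 'wb') as file:
                    file.write(image_data)
                logging.info(f'Picture saved successfully: {url}')
            else:
                logging.error(
                    f'Error while processing picture {url}: {response.status}'
                )
        except Exception as e:
            # `response` is not used here: it does not exist if get() failed
            logging.exception(f'Error while downloading {url}: {e}')

        self.completed_requests += 1
        # Report progress every refresh_rate pictures and once at the end
        if (self.completed_requests % self.refresh_rate == 0
                or self.completed_requests == self.total_requests):
            self.callback(self.completed_requests, self.total_requests)

    async def _make_requests(self) -> None:
        """Concurrently sends URL links to perform."""
        async with ClientSession() as session:
            reqs = []
            for _ in range(self.total_requests):
                # set.pop() removes and returns an arbitrary element
                current_link = self.links_array.pop()
                reqs.append(self._save_image(session, current_link))
            await asyncio.gather(*reqs)
```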

Let's start with the _make_requests coroutine. We submit for concurrent execution only as many images as were specified in the graphical interface (the self.total_requests attribute). The pop() method of a set removes and returns an arbitrary element, which we pass to _save_image for downloading and saving. Then asyncio.gather runs all of these coroutines concurrently, downloading the images from the corresponding URLs.

The _save_image coroutine is even simpler. We request the picture URL and check that the response status is 200 (HTTPStatus.OK). Then we save the received bytes to the specified path using the standard open function in binary write mode. Events are logged at every stage, and every refresh_rate pictures (and once at the end) the callback reports progress.
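To see how gather() and the refresh_rate callback interact without touching the network, here is a sketch in which a hypothetical FakeScraper class mirrors the attributes used above but "downloads" by sleeping. Because all coroutines run in a single thread, completed_requests is incremented without a lock, and the callback fires every refresh_rate pictures plus once at the end.

```python
import asyncio


class FakeScraper:
    """Hypothetical stand-in for PictureScraperSaver without real I/O."""

    def __init__(self, links, total_requests, refresh_rate, callback):
        self.links_array = set(links)
        self.total_requests = total_requests
        self.refresh_rate = refresh_rate
        self.callback = callback
        self.completed_requests = 0

    async def _save_image(self, url: str) -> None:
        await asyncio.sleep(0.01)  # simulated download
        self.completed_requests += 1
        if (self.completed_requests % self.refresh_rate == 0
                or self.completed_requests == self.total_requests):
            self.callback(self.completed_requests, self.total_requests)

    async def _make_requests(self) -> None:
        reqs = []
        for _ in range(self.total_requests):
            reqs.append(self._save_image(self.links_array.pop()))
        await asyncio.gather(*reqs)


progress = []
scraper = FakeScraper(
    links=[f"https://example.com/{i}.jpg" for i in range(20)],
    total_requests=7,
    refresh_rate=3,
    callback=lambda done, total: progress.append((done, total)),
)
asyncio.run(scraper._make_requests())
print(progress)  # [(3, 7), (6, 7), (7, 7)]
```

Only 7 of the 20 links are consumed, matching the quantity chosen in the GUI; the other 13 stay in the set.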

Moving the parser and scraper to additional threads

The problem is that parsing and scraping may involve long operations that block the graphical interface. In practice, while 1000 requests are being executed, the window stops responding, and the operating system may offer to kill the process, considering it hung.

To prevent this, we move the I/O work out of the GUI thread: we create two additional threads and run a separate asyncio event loop in each of them.

What we have:

main thread: GUI

additional thread #1: parser

additional thread #2: scraper

All that remains is to make the parser and scraper classes thread-safe. Two asyncio methods take care of this:

The loop.call_soon_threadsafe method takes a regular Python function (not a coroutine) and, in a thread-safe way, schedules it to run on the next iteration of the event loop.

The asyncio.run_coroutine_threadsafe function accepts a coroutine and a target event loop, submits the coroutine to that loop in a thread-safe way, and immediately returns a concurrent.futures.Future object through which the result of the coroutine can be obtained.
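Here is a minimal sketch of both methods, using one background thread instead of the application's two (the mechanics are the same). The coroutine `fake_parse` and the helper `start_background_loop` are hypothetical names for illustration; the real code is in the repository.

```python
import asyncio
import threading


def start_background_loop() -> tuple[asyncio.AbstractEventLoop, threading.Thread]:
    """Creates a new event loop and runs it forever in a daemon thread."""
    loop = asyncio.new_event_loop()
    thread = threading.Thread(target=loop.run_forever, daemon=True)
    thread.start()
    return loop, thread


async def fake_parse(query: str) -> list[str]:
    """Hypothetical stand-in for the parser coroutine."""
    await asyncio.sleep(0.05)  # simulated network I/O
    return [f"https://example.com/{query}/{i}.jpg" for i in range(3)]


loop, worker = start_background_loop()

# 1. From the main (GUI) thread, submit a coroutine to the worker's loop
future = asyncio.run_coroutine_threadsafe(fake_parse("cats"), loop)
links = future.result(timeout=5)  # a real GUI would poll future.done()

# 2. Schedule a plain function (not a coroutine) on the worker thread
ran_on_worker = threading.Event()


def mark_if_on_worker() -> None:
    if threading.current_thread() is worker:
        ran_on_worker.set()


loop.call_soon_threadsafe(mark_if_on_worker)
ran_on_worker.wait(timeout=5)

# 3. Stop the loop from the main thread and clean up
loop.call_soon_threadsafe(loop.stop)
worker.join(timeout=5)
loop.close()
print(len(links), ran_on_worker.is_set())  # 3 True
```

Blocking on `future.result()` is done here only to keep the example short; in a tkinter window it would freeze the interface just like a blocking request.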

View full application code

Since the article does not cover every detail of the application, I advise you to look at the full code in my GitHub repository.

In the repository description you will also find a link to an .exe version of the program, so you can try it out.

Prospects for use

You can safely use the program code in your own projects. For example, you might build an online service that returns a zip archive of pictures on request. Such a service should not need a powerful server: the threads and event loops all run inside a single process and share its memory, and since the work is I/O-bound, a single processor core is likely to be enough.

The application was tested manually on Windows 11 and Ubuntu 22.04.