Vector databases vs Accuracy – part 1

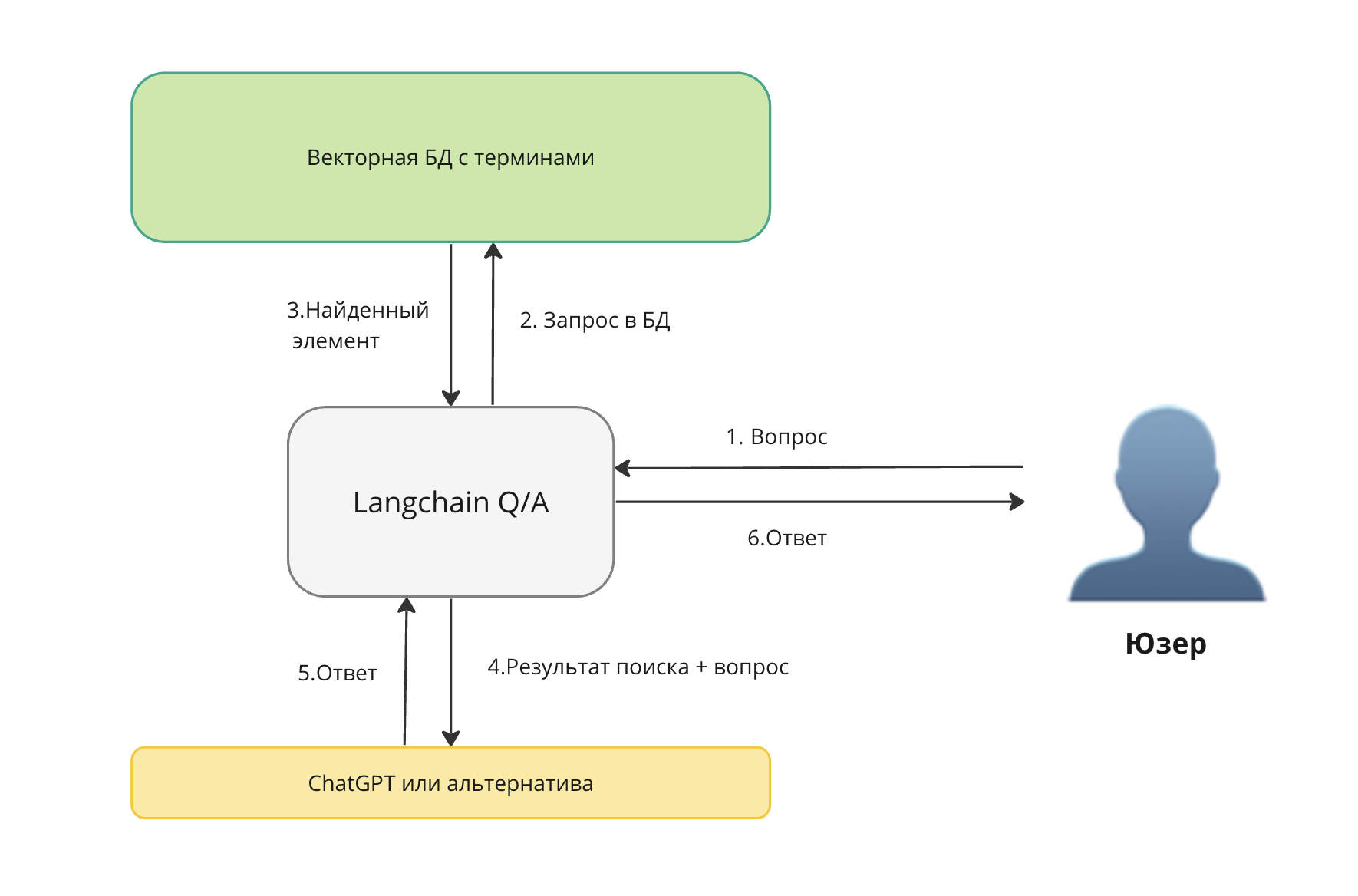

I decided to “quickly” assemble a local RAG (retrieval-augmented generation) that would look up terms from Ozhegov’s dictionary. After reading around the Internet, I understood that it all comes down to a recipe (a simplified interpretation):

1. Take the text we need and cut it into pieces.
2. Turn the pieces into embeddings with an “embedder”.
3. Load them into a vector database.
4. Chain ChatGPT or an alternative using Langchain.
5. Write a prompt.
6. Rejoice.

My joy did not last long. After a while I realized that the system confuses terms: I ask for one thing but get something completely different. Let's figure out why.

As the diagram above shows, the answer is built from “search result + question”, so the thing to check is the query and the response from the database. Since we load the dataset ourselves and know what the answer should be, we can automatically check whether the vector database returns the correct answer to our query.
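A minimal sketch of such an automatic check, assuming the search is wrapped in a function that takes a query and returns the best-matching term. The names `top1_accuracy` and `toy_search` are made up for illustration, and `toy_search` is only a stand-in for a real vector database lookup.

```python
from typing import Callable, Iterable


def top1_accuracy(queries: Iterable[str], search: Callable[[str], str]) -> float:
    """Share of queries for which `search` returns the query term itself."""
    queries = list(queries)
    if not queries:
        return 0.0
    hits = sum(1 for query in queries if search(query) == query)
    return hits / len(queries)


def toy_search(query: str) -> str:
    # Hypothetical stand-in for a vector database: one lookup is right, one is wrong
    known = {"абажур": "абажур", "аббат": "аббатство"}
    return known.get(query, "")


accuracy = top1_accuracy(["абажур", "аббат"], toy_search)
print(accuracy)
```

The rest of the article does the same thing with Chroma in place of `toy_search`.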

Preparing the data

We take the text we need and cut it into pieces.

    import re

    import pandas as pd

    with open("ozhegov.txt", mode="r", encoding="UTF-8") as file:
        text_lines = file.readlines()


    def return_first_match(pattern, text):
        # Return the first regex match, or an empty string if there is none
        result = re.findall(pattern, text)
        return result[0] if result else ""


    data = []
    for line in text_lines:
        # Headword: two or more Cyrillic letters at the start of the line, followed by a comma
        title = return_first_match(r"^[а-яА-Я]{2,}(?=,)", line)
        # Definition: the text after the first ". " that is followed by a capital letter
        text = return_first_match(r"\.\s([А-Я]+.*)\n", line)
        if len(title) > 3:
            data.append({"title": title, "text": text})

    dataset = pd.DataFrame(data)
    dataset.to_csv("ozhegov_dataset.csv")
    dataset.head()

| | title | text |
|---|---|---|
| 0 | SHADE | Cap for a lamp, lamp. Green a. 11 p… |
| 1 | ABAZINSKY | Relating to the Abazins, their language, national… |
| 2 | ABAZINS | The people living in Karachay-Cherkessia and Adygea… |
| 3 | ABBAT | Abbot of a male Catholic monastery. 2… |
| 4 | ABBEY | Catholic monastery. |
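To see what the two patterns actually pick up, here is a small sketch run on made-up lines. The “HEADWORD, grammar. Definition.” layout of the sample lines is my assumption about the file format, not a quote from `ozhegov.txt`. It also shows one side effect: the letter “Ё” lies outside the `А-Я` range, so a headword containing it is not matched.

```python
import re

TITLE_PATTERN = r"^[а-яА-Я]{2,}(?=,)"
TEXT_PATTERN = r"\.\s([А-Я]+.*)\n"


def return_first_match(pattern, text):
    # Return the first regex match, or an empty string if there is none
    matches = re.findall(pattern, text)
    return matches[0] if matches else ""


# Hypothetical line in the assumed layout
sample = "АББАТ, -а, м. Настоятель мужского католического монастыря.\n"
title = return_first_match(TITLE_PATTERN, sample)
text = return_first_match(TEXT_PATTERN, sample)
print(title, "|", text)

# "Ё" (U+0401) is not inside the А-Я range (U+0410 to U+042F)
yo_title = return_first_match(TITLE_PATTERN, "ЁЛКА, -и, ж. Хвойное дерево.\n")
print(repr(yo_title))
```

If such headwords matter, the character class could be extended to `[а-яА-ЯёЁ]`.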

For our experiment, we will not use the entire dataset; we will take only 1000 random entries.

    # My dataset contains some empty entries
    dataset = dataset[dataset["title"].str.len() > 0]
    dataset = dataset[dataset["text"].str.len() > 0]
    dataset = dataset.sample(n=1000)
    # astype returns a new DataFrame, so the result has to be assigned back
    dataset = dataset.astype({"text": str, "title": str})
    dataset.info(show_counts=True)
    dataset.head()

    <class 'pandas.core.frame.DataFrame'>
    Index: 1000 entries, 28934 to 16721
    Data columns (total 3 columns):
     #   Column      Non-Null Count  Dtype
    ---  ------      --------------  -----
     0   Unnamed: 0  1000 non-null   int64
     1   title       1000 non-null   object
     2   text        1000 non-null   object
    dtypes: int64(1), object(2)
    memory usage: 31.2+ KB

| Unnamed: 0 | title | text |
|---|---|---|
| 28934 | THICKENING | A thickened area on something. U. trunk. U. vessel. |
| 30193 | BLACK HUNDRED | In Russia at the beginning. 20th century: member of the chauvinist… |
| 14378 | IMPOSSIBLE | One that is impossible to overcome. Irresistible… |
| 27420 | THERMAL | Related to the application of thermal energy in… |
| 27021 | SCHEMATIZE | Present (-vlyat) in the form of a diagram (in 2 values)… |

Turning it into embeddings

I didn’t spend long choosing an “embedder”: I took what the rating of Russian-language encoders offers.

I won’t describe vector databases themselves, what they are and how they work; articles have already been written about that. I will use Chroma (top 1 from this article).

For the purity of the experiment, I decided to take the top 5 models and the 3 distance functions available in Chroma:

    models = [
        "intfloat/multilingual-e5-large",
        "sentence-transformers/paraphrase-multilingual-mpnet-base-v2",
        "symanto/sn-xlm-roberta-base-snli-mnli-anli-xnli",
        "cointegrated/LaBSE-en-ru",
        "sentence-transformers/LaBSE",
    ]

    distances = [
        "l2",
        "ip",
        "cosine",
    ]

Getting Chroma ready

Install the required pip packages:

    %pip install -U sentence-transformers ipywidgets chromadb chardet charset-normalizer

The installation may fail with an error; the fix is given in the error message itself:

    HINT: This error might have occurred since this system does not have Windows Long Path support enabled. You can find information on how to enable this at https://pip.pypa.io/warnings/enable-long-paths

https://learn.microsoft.com/en-us/windows/win32/fileio/maximum-file-path-limitation?tabs=powershell#enable-long-paths-in-windows-10-version-1607-and-later

Let's run Chroma in Docker:

    docker pull chromadb/chroma
    docker run -p 8000:8000 chromadb/chroma

Functions for working with Chroma

We define the functions for creating and deleting collections, plus a search function that takes the record with the minimum distance and adds the matching data from the dataset by index. The ids in the database are equal to the indexes in the dataset.

    import chromadb
    from chromadb.utils import embedding_functions

    chroma_client = chromadb.HttpClient(host="localhost", port=8000)


    def create_collection(model_name, distance):
        sentence_transformer_ef = embedding_functions.SentenceTransformerEmbeddingFunction(model_name=model_name)
        # Not used in this experiment: we only need to find the term
        # text_collection = chroma_client.create_collection(name="text", embedding_function=sentence_transformer_ef)
        title_collection = chroma_client.create_collection(
            name="title",
            embedding_function=sentence_transformer_ef,
            metadata={"hnsw:space": distance},
        )
        # Collection ids are the dataset indexes converted to strings
        ids = list(map(str, dataset.index.values.tolist()))
        # text_collection.add(ids=ids, documents=dataset["text"].tolist())
        title_collection.add(ids=ids, documents=dataset["title"].tolist())
        return title_collection


    def delete_collection():
        chroma_client.delete_collection("title")


    def query_collection(collection, query, max_results, dataframe, model_name, distance):
        results = collection.query(query_texts=query, n_results=max_results, include=["distances"])
        result_ids = list(map(int, results["ids"][0]))
        # Rows of the dataset whose index is among the returned ids.
        # Note: isin() keeps the dataset's own row order, while "id" and "score"
        # below follow Chroma's result order.
        matched = dataframe[dataframe.index.isin(result_ids)]
        df = pd.DataFrame({
            "id": results["ids"][0],
            "score": list(map(float, results["distances"][0])),
            "query": query,
            "title": matched["title"],
            "content": matched["text"],
            "model_name": model_name,
            "distance": distance,
        })
        # Keep the record with the minimum distance: it is the closest and most similar
        df = df[df.score == df.score.min()]
        df["is_found"] = df.apply(lambda row: row.query == row.title, axis=1)
        return df

Creating a test dataset and running the experiment

We form a test dataset of 100 random entries from the dataset loaded into Chroma.

    test_dataset = dataset.sample(n=100)
    test_dataset.head()
    test_results = pd.DataFrame()

We run the queries and collect the results for each model with each distance function.

    for model in models:
        for distance in distances:
            print(f"{model} - {distance}")
            # Drop the collection left over from the previous run, if any
            try:
                delete_collection()
            except Exception as ex:
                print(f"delete_collection error: {ex}")
            collection = create_collection(model, distance)
            for title in test_dataset["title"].tolist():
                result = query_collection(
                    collection=collection,
                    query=title,
                    max_results=5,
                    dataframe=dataset,
                    model_name=model,
                    distance=distance,
                )
                # pd.concat replaces the private DataFrame._append
                test_results = pd.concat([test_results, result])
            print(f"{len(test_results)}")

    test_results.to_csv("results_ozhegov2.csv")
    test_results.head()

| | id | score | query | title | content | model_name | distance | is_found |
|---|---|---|---|---|---|---|---|---|
| 10363 | 10363 | 1.315708e-12 | GERKICHONS | GERKICHONS | Small unripe cucumbers intended for… | intfloat/multilingual-e5-large | l2 | True |
| 8566 | 8566 | 7.252605e-13 | IMMIGRANT | IMMIGRANT | A person who has immigrated somewhere. II immy… | intfloat/multilingual-e5-large | l2 | True |
| 12175 | 17352 | 1.157366e-12 | PENSION | MENSTRUATION | Monthly bleeding from a woman's uterus (… | intfloat/multilingual-e5-large | l2 | False |
| 18297 | 11029 | 7.939077e-13 | BUDDLE | STRAY | Walking without knowing the way, wandering. P. through the forest. | intfloat/multilingual-e5-large | l2 | False |
| 14052 | 5394 | 1.371903e-12 | DECADE | A WEEK | A unit of time equal to seven days… | intfloat/multilingual-e5-large | l2 | False |
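One thing worth double-checking in the rows above: the rows marked False still have a distance of about 1e-12, and their `id` differs from the row index. That is the pattern pandas produces when a DataFrame is built from plain lists (`id`, `score`) together with a Series (`title`, `content`): the lists are placed positionally, while the Series keeps the dataset's own row order. The sketch below reproduces the effect on a toy frame and shows an order-preserving lookup with `.loc`. The toy data and the names `toy`, `result_ids`, `mixed` and `aligned` are made up for illustration, and whether this accounts for the misses in the experiment is an assumption that only a rerun can confirm.

```python
import pandas as pd

# Toy stand-in for the dataset: the index plays the role of the Chroma id
toy = pd.DataFrame({"title": ["A", "B", "C"]}, index=[10, 20, 30])

# Hypothetical query result, ordered by distance: id 30 is the closest
result_ids = ["30", "10"]
scores = [0.0, 0.7]
int_ids = [int(i) for i in result_ids]

# Same construction as in query_collection: lists plus a Series filtered with isin()
mixed = pd.DataFrame({
    "id": result_ids,
    "score": scores,
    "title": toy[toy.index.isin(int_ids)]["title"],
})
# The best score (0.0, id 30) ends up next to title "A", which belongs to id 10
print(mixed)

# Order-preserving alternative: look the titles up by id, in result order
aligned = pd.DataFrame({
    "id": result_ids,
    "score": scores,
    "title": toy.loc[int_ids, "title"].tolist(),
})
print(aligned)
```

With five results per query, a positional pairing like `mixed` would put the best score next to the right title only when that title happens to come first in dataset order.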

Let's look at the results

Now let's count the number of found (correct) results.

    rows = []
    for model in models:
        for distance in distances:
            # Results for one model/distance combination
            df = test_results[(test_results["model_name"] == model) & (test_results["distance"] == distance)]
            rows.append({
                "founded": len(df[df["is_found"] == True]),
                "model_name": model,
                "distance": distance,
            })

    finally_result = pd.DataFrame(rows)
    finally_result.head(15)

| founded | model_name | distance |
|---|---|---|
| 24 | intfloat/multilingual-e5-large | l2 |
| 24 | intfloat/multilingual-e5-large | ip |
| 24 | intfloat/multilingual-e5-large | cosine |
| 17 | sentence-transformers/paraphrase-multilingual-… | l2 |
| 0 | sentence-transformers/paraphrase-multilingual-… | ip |
| 19 | sentence-transformers/paraphrase-multilingual-… | cosine |
| 23 | symanto/sn-xlm-roberta-base-snli-mnli-anli-xnli | l2 |
| 25 | symanto/sn-xlm-roberta-base-snli-mnli-anli-xnli | ip |
| 21 | symanto/sn-xlm-roberta-base-snli-mnli-anli-xnli | cosine |
| 14 | cointegrated/LaBSE-en-ru | l2 |
| 14 | cointegrated/LaBSE-en-ru | ip |
| 14 | cointegrated/LaBSE-en-ru | cosine |
| 14 | sentence-transformers/LaBSE | l2 |
| 14 | sentence-transformers/LaBSE | ip |
| 14 | sentence-transformers/LaBSE | cosine |
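The 0 for one model with `ip` stands out. One possible contributor, stated as an assumption rather than a diagnosis: as far as I understand Chroma's documentation, `l2` is the squared Euclidean distance, `ip` is one minus the inner product, and `cosine` is one minus the cosine similarity. If a model's embeddings are not unit-length, `ip` can rank a longer vector closer than the query's own vector. The plain-Python sketch below shows this on made-up 2D vectors; the function names and the data are hypothetical.

```python
import math


def l2(a, b):
    # Squared Euclidean distance
    return sum((x - y) ** 2 for x, y in zip(a, b))


def ip(a, b):
    # One minus the inner product
    return 1.0 - sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    # One minus the cosine similarity
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query, vectors, dist):
    # Name of the stored vector with the smallest distance to the query
    return min(vectors, key=lambda name: dist(query, vectors[name]))


# Made-up embeddings that are not normalized to unit length
vectors = {"short": [1.0, 0.0], "long": [3.0, 1.0]}
query = vectors["short"]

for dist in (l2, ip, cosine):
    print(dist.__name__, nearest(query, vectors, dist))
```

Here `l2` and `cosine` return the query's own vector, while `ip` prefers the longer one.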

Conclusion

So far I have not managed to “quickly” assemble a local RAG for working with terms and come up with a ready-made recipe.

The current ~25% can hardly be called good accuracy.

The options I see for solving the accuracy problem:

- Use hybrid search with BM25; not all vector databases support it.
- Attach Postgres + BM25 as a second retriever and search both at once. It sounds so-so, plus the information is duplicated…
- Fine-tune the model, but that is far from “quick”.
- Work with the text (lemmatization, normalization, etc.). I highly doubt it will help.
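To make the hybrid idea more concrete, here is a rough sketch of what it could look like: a small BM25 scorer over tokenized titles, combined with a vector-search ranking through reciprocal rank fusion. This is not how any particular database implements it. The function names, the parameters `k1`, `b` and `k`, and the hard-coded `vector_ranking` are assumptions for illustration only.

```python
import math
from collections import Counter


def bm25_scores(query_tokens, docs_tokens, k1=1.5, b=0.75):
    """BM25 score of every document for the given query tokens."""
    n_docs = len(docs_tokens)
    avg_len = sum(len(doc) for doc in docs_tokens) / n_docs
    # Number of documents that contain each term
    doc_freq = Counter(term for doc in docs_tokens for term in set(doc))
    scores = []
    for doc in docs_tokens:
        tf = Counter(doc)
        score = 0.0
        for term in query_tokens:
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several rankings of document ids into one."""
    fused = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return [doc_id for doc_id, _ in fused.most_common()]


titles = ["пенсия", "менструация", "декада"]
docs = [title.split() for title in titles]

scores = bm25_scores(["пенсия"], docs)
# Keep only documents with a lexical hit, best first
lexical_ranking = [i for i in sorted(range(len(docs)), key=lambda i: scores[i], reverse=True) if scores[i] > 0]
# Hypothetical ranking from vector search that puts the wrong term first
vector_ranking = [1, 0, 2]

fused = reciprocal_rank_fusion([lexical_ranking, vector_ranking])
print([titles[i] for i in fused])
```

In this toy case the exact lexical match pulls the right term back to the top even though the vector ranking alone puts it second.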

In the second part I will try hybrid search.

P.S. I’ll be waiting in the comments for other options to try that can be set up “quickly” and deployed locally.