Once again about the performance of streams in Java

From time to time I observe or even get embroiled in a debate about performance art in Java streaming. It is common knowledge that streaming is a compromise between performance and convenience. However, I did not find a sane set of benchmarks that would show exactly how slow (or fast) the streams are. So I decided to write these benchmarks myself.

What was checked and how

I measured the performance of several typical use cases for the forEach loop: applying a function to a set, summing, filtering, and grouping. Generally routine tasks. The same problems were solved through streams.

I didn’t have to think long about choosing a tool for the benchmark. JMH – a battle-tested solution developed under the auspices of the OpenJDK team. The framework is very flexible and easy to use.

Full test code can be found at Github.

The benchmarks were run on a 4-core Java 21 virtual machine with the following JVM parameters: -Xms2G -Xmx2G. Operations were performed on sheets of size 1000, 10,000,100,000, 1,000,000. For each case, both sequential and parallel streams were used.

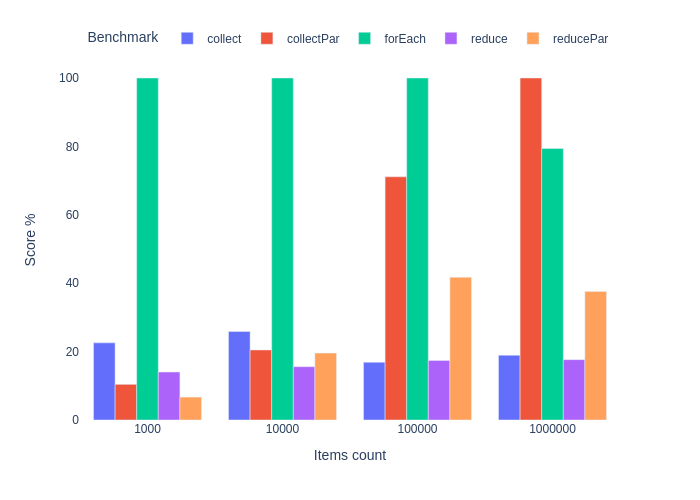

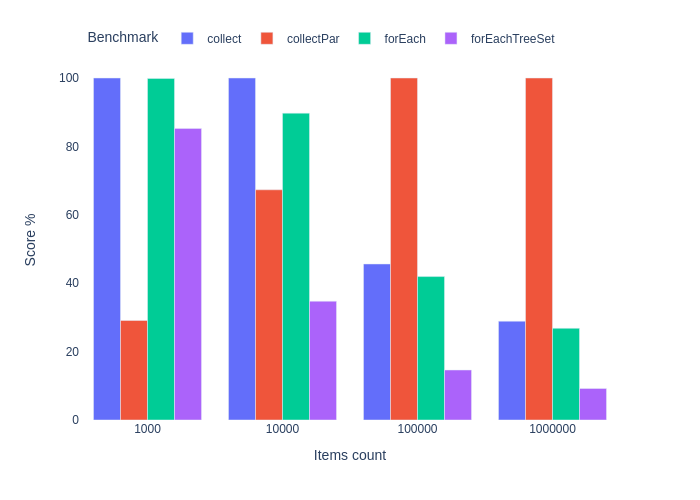

The results are plotted as percentages of the best result within the sample size. The graphs are normalized such that, for a given list size, the best result among the various implementations is found and each result is divided by it. The best result is 100%. A score of 50% means that this implementation is half “slower” than the best in this test.

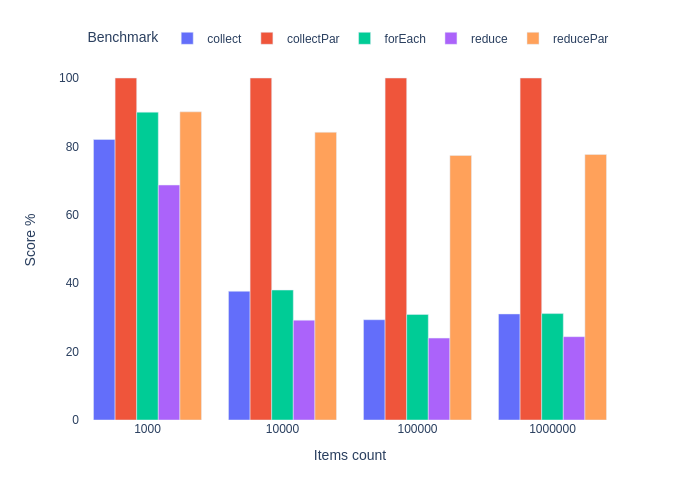

Summation of integers

Code

@State(Scope.Benchmark)

public static class Params {

@Param({"1000", "10000", "100000", "1000000"})

public int size;

public List<Integer> items;

@Setup

public void setUp() {

items = IntStream.range(0, size)

.mapToObj(i -> i)

.collect(Collectors.toList());

}

}

@Benchmark

public int forEach(Params params) {

int res = 0;

for (Integer item : params.items) {

res += item;

}

return res;

}

@Benchmark

public int collect(Params params) {

return params.items.stream()

.collect(Collectors.summingInt(i -> i));

}

@Benchmark

public int collectPar(Params params) {

return params.items.parallelStream()

.collect(Collectors.summingInt(i -> i));

}

@Benchmark

public int reduce(Params params) {

return params.items.stream()

.reduce(0, Integer::sum);

}

@Benchmark

public int reducePar(Params params) {

return params.items.parallelStream()

.reduce(0, Integer::sum);

}

This was expected. We have a winner in forEach, but its advantage decreases as the collection size increases. Especially in comparison with parallel streaming. Approach withreduce the slowest and even parallelization does not save the situation.

If your program for some reason revolves around summing integers, then give preference to the classics. If this sounds sarcastic, that's because it is. In practice, all the gains in performance are lost in the world of REST APIs, microservices and event driven architectures. If we replace Stream.collect() on forEach in a rest service that sums up integers and adds the result to a database, then we may gain some tenths of microseconds that will be lost due to serialization, deserialization, transmission over the wire, access to the database, etc.

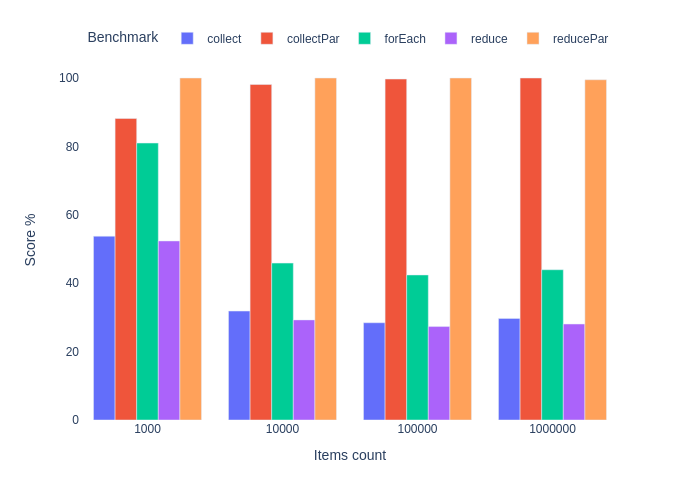

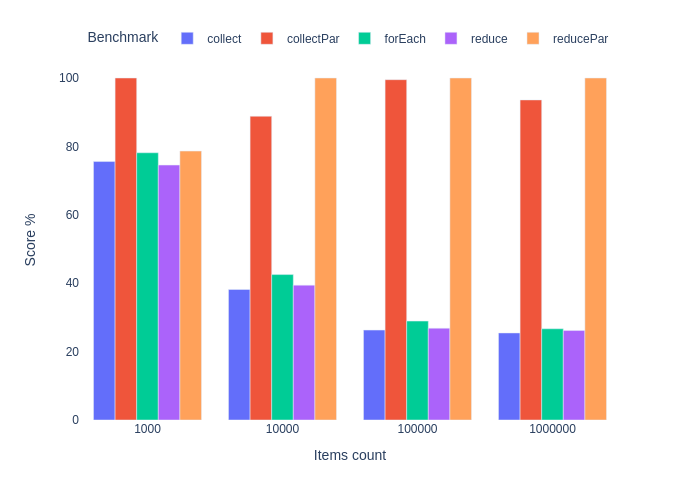

Calculation and summation

Let's look at a slightly more realistic example. Let's perform a mathematical operation on a collection of randomly generated floating point numbers and add the result.

Code

@State(Scope.Benchmark)

public static class Params {

@Param({"1000", "10000", "100000", "1000000"})

public int size;

public List<Double> items;

@Setup

public void setUp() {

Random random = new Random();

items = random.doubles(size).mapToObj(i -> i)

.collect(Collectors.toList());

}

}

private static double calculate(double value) {

return Math.sqrt(Math.sin(Math.log(value)));

}

@Benchmark

public Double forEach(Params params) {

Double res = 0d;

for (Double item : params.items) {

res += calculate(item);

}

return res;

}

@Benchmark

public Double collect(Params params) {

return params.items.stream()

.map(DoubleCalculationBenchmark::calculate)

.collect(Collectors.summingDouble(i -> i));

}

@Benchmark

public Double collectPar(Params params) {

return params.items.parallelStream()

.map(DoubleCalculationBenchmark::calculate)

.collect(Collectors.summingDouble(i -> i));

}

@Benchmark

public Double reduce(Params params) {

return params.items.stream()

.map(DoubleCalculationBenchmark::calculate)

.reduce(0d, Double::sum);

}

@Benchmark

public Double reducePar(Params params) {

return params.items.parallelStream()

.map(DoubleCalculationBenchmark::calculate)

.reduce(0d, Double::sum);

}

Well, surprise. Result forEach plus or minus is the same as for a sequential stream. Parallel streaming takes the lead on large collections and works 3-5 times faster. This is explained quite simply.

This was not visible in the previous benchmark, because… The larger part of the program is executed sequentially, the worse the program can be parallelized. Cm. Amdahl's law. This is just a case of summation. Adding two numbers is a very fast operation, so we spent more time parallelizing and then synchronizing the result. Especially in the case of small collections.

The same principle resulted in slightly better performance of the function reduce in this benchmark. This higher-order function accepts side-effect-free binary associative functions that operate on immutable objects. Double.sum() falls under this definition. If we fulfill this contract, then the combination of results is perfectly parallelized and does not require synchronization.

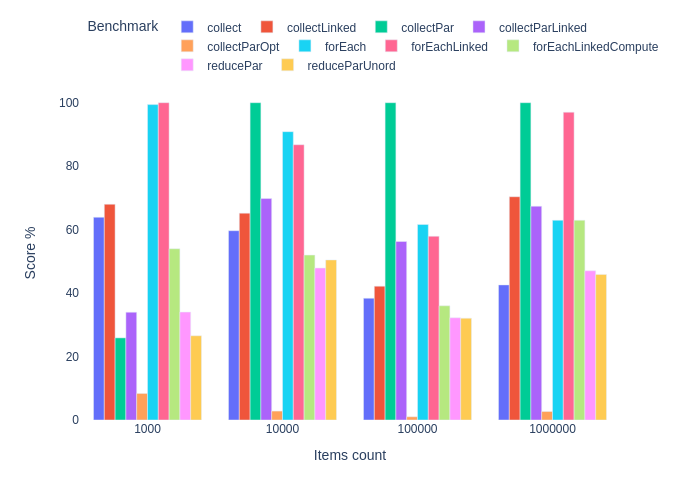

Grouping

Grouping using streams is truly concise and visual, so it is widely used in everyday tasks. The result is influenced by many factors: the stream can be sequential or parallel, ordered or unordered. We have several options for selecting a collector, or we can even use Stream.reduce() instead of Stream.collect() . We can also use various auxiliary data structures and containers where the grouping results will be placed. That's why there's so much code below.

The actual task of the benchmark: take a sheet of randomly generated floating point numbers and group the result by the result of division by 10, rounded to the nearest integer. Result type – Map<Long, List<Double>>

Code

static final double DIVISOR = 100.0;

@State(Scope.Benchmark)

public static class Params {

@Param({"1000", "10000", "100000", "1000000"})

public int size;

public List<Double> items;

@Setup

public void setUp() {

Random random = new Random();

items = random.doubles(size)

.mapToObj(i -> i)

.collect(Collectors.toList());

}

}

@Benchmark

public Map<Long, List<Double>> forEach(Params params) {

Map<Long, List<Double>> res = new HashMap<>();

for (Double item : params.items) {

Long key = Math.round(item / DIVISOR);

List<Double> list = res.get(key);

if (list != null) {

list.add(item);

} else {

list = new ArrayList<>();

list.add(item);

res.put(key, list);

}

}

return res;

}

@Benchmark

public Map<Long, List<Double>> forEachLinked(Params params) {

Map<Long, List<Double>> res = new HashMap<>();

for (Double item : params.items) {

Long key = Math.round(item / DIVISOR);

List<Double> list = res.get(key);

if (list != null) {

list.add(item);

} else {

list = new LinkedList<>();

list.add(item);

res.put(key, list);

}

}

return res;

}

@Benchmark

public Map<Long, List<Double>> forEachLinkedCompute(Params params) {

Map<Long, List<Double>> res = new HashMap<>();

for (Double item : params.items) {

res.compute(Math.round(item / DIVISOR), (k, v) -> {

if (v == null) {

LinkedList<Double> list = new LinkedList<>();

list.add(item);

return list;

}

v.add(item);

return v;

});

}

return res;

}

@Benchmark

public Map<Long, List<Double>> collect(Params params) {

return params.items.stream()

.collect(Collectors

.groupingBy(n -> Math.round(n / DIVISOR)));

}

@Benchmark

public Map<Long, List<Double>> collectLinked(Params params) {

return params.items.stream()

.collect(Collectors.groupingBy(

n -> Math.round(n / DIVISOR),

Collectors.toCollection(LinkedList::new)));

}

@Benchmark

public Map<Long, List<Double>> collectPar(Params params) {

return params.items.parallelStream()

.collect(Collectors

.groupingBy(n -> Math.round(n / DIVISOR)));

}

@Benchmark

public Map<Long, List<Double>> collectParLinked(Params params) {

return params.items.parallelStream()

.collect(Collectors.groupingBy(

n -> Math.round(n / DIVISOR),

Collectors.toCollection(LinkedList::new)));

}

@Benchmark

public Map<Long, List<Double>> collectParOpt(Params params) {

return params.items.parallelStream()

.unordered()

.collect(Collectors.groupingByConcurrent(

n -> Math.round(n / DIVISOR),

Collectors.toCollection(LinkedList::new)));

}

@Benchmark

public io.vavr.collection.HashMap<Long, io.vavr.collection.List<Double>> reducePar(Params params) {

return params.items.parallelStream()

.reduce(

io.vavr.collection.HashMap.empty(),

(m, n) -> {

Long key = Math.round(n / DIVISOR);

return m.put(key, m.get(key)

.map(l -> l.prepend(n))

.getOrElse(() -> io.vavr.collection.List.of(n)));

},

(l, r) -> l.merge(r, io.vavr.collection.List::prependAll));

}

@Benchmark

public io.vavr.collection.HashMap<Long, io.vavr.collection.List<Double>> reduceParUnord(Params params) {

return params.items.parallelStream()

.unordered()

.reduce(

io.vavr.collection.HashMap.empty(),

(m, n) -> {

Long key = Math.round(n / DIVISOR);

return m.put(key, m.get(key)

.map(l -> l.prepend(n))

.getOrElse(() -> io.vavr.collection.List.of(n)));

},

(l, r) -> l.merge(r, io.vavr.collection.List::prependAll));

}

Among successive implementations forEach slightly faster than streams, grouping the result into Map . Usage LinkedList in theory it can improve the result, but not for parallel streams. In fact, there is a serious performance penalty when using Collectors.toCollection(LinkedList::new).

For testing Stream.reduce() on parallel streams we must use immutable structures. Regular HashMap – mutable, so they rush to help Map And List from the library Vavr. True, without much success. To be honest, I expected more from reduce on parallel streams, but then I realized that the expectations were groundless. U Map from Varv function get And put have constant complexity, but we have to call both of them every time, because no convenient function Map.compute() as in the standard API.

I would not like to draw significant conclusions regarding the reasons for the results achieved. The collected data is not enough. The question “to stream or not to stream” seems to have the following answers. If we care about microsecond-level performance, we should use serial forEach or parallel Stream.collect() . And we'd better stop trying to prematurely optimize if we're not going to test it in real-world scenarios.

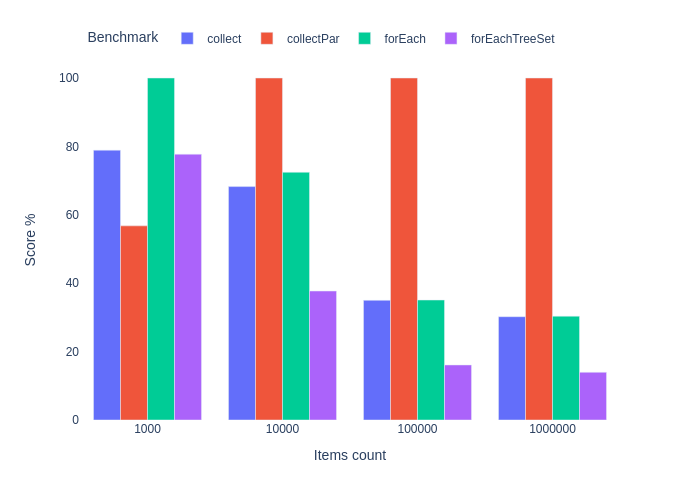

Filtering and sorting

Another use case for streams is filtering, sorting and selecting unique elements from a collection. Of course, not always everything is together. The benchmark takes random numbers, filters them by falling between the minimum and maximum values, selects unique values and sorts them in natural order.

Code

static final double MIN = 0.0;

static final double MAX = 10.0;

@State(Scope.Benchmark)

public static class Params {

@Param({"1000", "10000", "100000", "1000000"})

public int size;

public List<Double> items;

@Setup

public void setUp() {

Random random = new Random();

List<Double> values = random.doubles(500, MIN, MAX + 5)

.mapToObj(i -> i)

.collect(Collectors.toList());

items = random.ints(size, 0, values.size())

.mapToObj(values::get)

.collect(Collectors.toList());

}

}

@Benchmark

public Collection<Double> forEach(Params params) {

Set<Double> set = new HashSet<>();

for (Double item : params.items) {

if (item > MIN && item < MAX) {

set.add(item);

}

}

List<Double> res = new ArrayList<>(set);

Collections.sort(res);

return res;

}

@Benchmark

public Collection<Double> forEachTreeSet(Params params) {

Set<Double> set = new TreeSet<>();

for (Double item : params.items) {

if (item > MIN && item < MAX) {

set.add(item);

}

}

return set;

}

@Benchmark

public Collection<Double> collect(Params params) {

return params.items.stream()

.filter(n -> n > MIN && n < MAX)

.distinct()

.sorted()

.collect(Collectors.toList());

}

@Benchmark

public Collection<Double> collectPar(Params params) {

return params.items.parallelStream()

.filter(n -> n > MIN && n < MAX)

.distinct()

.sorted()

.collect(Collectors.toList());

}

We see a familiar pattern: the longer the collection, the more effective parallel streams are. An attempt to optimize sorting through TreeSet was not successful.

Conclusion

It looks like we can do without revelations. Streams in different situations can be both faster and slower than imperative cycles. And I'm really glad that the streams are generally good. At least in the area of daily tasks in the enterprise. If I were forced to stop using streaming due to poor performance, I would miss this beautiful and visual abstraction.

One point left unnoticed. All parallel streams use the same ForkJoinPool (unless you asked pool obviously – approx. lane). Everyone has heard that this is why we need to be careful about the massive use of parallel streams – but what would the numbers say?

Update

In the comments I was asked about the impact of the JIT compiler on performance forEach. Below are the results of benchmark runs with the JIT compiler turned off (JVM parameter -Xint)