Using HashiCorp Vault to Store Secrets

Hello everyone, my name is Sergey Andryunin.

In this article, I would like to share our experience with Vault, the secret store from HashiCorp.

I’ll talk about how our company uses this storage, omitting the details of installation and scaling. We will not cover monitoring or fault tolerance, and we will also skip disaster recovery. Each of these deserves a separate article.

Here we will focus on access to secret data and on the authorization methods Vault offers.

For those who do not like to read a lot of text, here are the links to the materials from the article right away: one and two.

Content

Authorization using the AppRole method

Introduction

Before describing the different authorization mechanisms and their pros and cons, let’s first talk about why such a store is needed at all.

Most web applications and services today have either already switched to a microservice architecture or are actively migrating to one. Instead of one monolithic configuration file, we get a large number of small ones (one or even several per service), and their contents must be stored as safely as possible. Sometimes a service is configured through environment variables rather than a file. Either way, each service has its own settings for connecting to databases, external APIs, message queues, caches and other systems. On top of that, there are other parameters we would like to keep secret, for example a salt (an additional input to a hash function) or the keys used to sign JWT tokens. In short, we have a huge number of values to store, preferably so that outsiders cannot access them. This is where the product from HashiCorp comes to the rescue.

Meet HashiCorp Vault

In short, for those who do not know, Vault is a store for secret data of any kind. It supports various authorization mechanisms and access policies, which let us differentiate access rights to our data. Besides storing secrets, Vault can be configured as a PKI server. Data can be accessed through the web interface, from the command line with the client, and through the API, even with curl requests from your homemade bash scripts. Regardless of how the data is accessed, all of Vault’s authorization methods are supported. And there are quite a few of them, namely:

AppRole

AliCloud

AWS

Azure

Cloud Foundry

GitHub

Google Cloud

JWT/OIDC

Kerberos

Kubernetes

LDAP

Oracle Cloud

Okta

Radius

TLS

Tokens

Username and password

As you can see, there is something for every taste. For those who want more details, here is a link to the documentation.

In this article, we will briefly touch on only a few of them and dwell on one in more detail. A little further on, you will understand why.

Authorization methods

Most often, we log in to the web interface either with an access token or with a username and password, which can be stored in Vault itself or on a RADIUS or LDAP server.

Log in to Vault using a username and password:

$ vault login -method=userpass username=admin password=strongPASS

The same, but using curl:

$ curl --request POST --data '{"password": "strongPASS"}' http://vault_addr/v1/auth/userpass/login/admin

When using the vault CLI tool, authorization is most often done with a token, which is stored in the special environment variable VAULT_TOKEN.

$ export VAULT_ADDR='http://10.10.10.10:8200/'

$ export VAULT_TOKEN=<token>

$ vault login [token=<token>]

In the last command, the token argument is optional, provided that VAULT_TOKEN is set in the environment.

Requests through the API, whether from an application or from the curl utility, use the same token. Alternatively, we can use our account to generate a new short-lived token and use that instead. All details on working with tokens can be found here.
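The curl login shown earlier can also be reproduced from application code. The sketch below uses only the Python standard library: it builds the same userpass login request, extracts the token from the response body, and attaches it to later requests in the X-Vault-Token header. The helper names and the sample values are illustrative assumptions; the response field auth.client_token follows the Vault HTTP API.

```python
import json
import urllib.request


def build_userpass_login(vault_addr: str, username: str, password: str) -> urllib.request.Request:
    """Build the POST request for /v1/auth/userpass/login/<username>."""
    url = f"{vault_addr.rstrip('/')}/v1/auth/userpass/login/{username}"
    body = json.dumps({"password": password}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )


def extract_client_token(response_body: bytes) -> str:
    """Pull the client token out of a Vault login response."""
    return json.loads(response_body)["auth"]["client_token"]


def with_token(request: urllib.request.Request, token: str) -> urllib.request.Request:
    """Attach the token in the X-Vault-Token header, which Vault accepts for API calls."""
    request.add_header("X-Vault-Token", token)
    return request


def login(vault_addr: str, username: str, password: str) -> str:
    """Perform the login against a live Vault server and return the token."""
    req = build_userpass_login(vault_addr, username, password)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return extract_client_token(resp.read())
```

Keeping request construction separate from the network call makes the helpers easy to check without a running server; only login() actually talks to Vault.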

Another way to interact with the API is authorization through AppRole. To authorize in Vault this way, we need to know our RoleID and SecretID. This is much more secure than a login and password or an access token.

Authorization using the AppRole method

A loose translation of the documentation reads:

The AppRole authorization method allows machines or applications to authenticate using roles defined by Vault. This authentication method is focused on automated workflows (machines and services) and is less useful for human operators.

An AppRole is a set of Vault policies and login restrictions that must be met in order to obtain a token with those policies. An AppRole can be created for a specific machine, or even for a specific user on that machine, or for a service distributed across machines. The credentials required for a successful login depend on the restrictions set on the AppRole.

Vault Configuration

In order to start using this method, we must first set up our repository. To do this, run a few simple commands.

Before starting work with the storage, let’s configure the settings for connecting to it.

$ export VAULT_ADDR=http://10.10.10.10:8200

$ export VAULT_TOKEN=your_token_here

Enable the desired authorization method.

$ vault auth enable approle

Then we add the test data that we will work with from here on.

$ vault kv put secrets/demo/app/service db_name="users" username="admin" password="passw0rd"

You can use the root token to configure this authorization method further, but that is unsafe and not recommended. It is good practice to create a separate account for each engineer or, for example, to enable LDAP authorization.

In order for the user to perform further configuration steps, the following policy must be assigned to the user:

# Mount the AppRole auth method
path "sys/auth/approle" {
  capabilities = [ "create", "read", "update", "delete", "sudo" ]
}

# Configure the AppRole auth method
path "sys/auth/approle/*" {
  capabilities = [ "create", "read", "update", "delete" ]
}

# Create and manage roles
path "auth/approle/*" {
  capabilities = [ "create", "read", "update", "delete", "list" ]
}

# Write ACL policies
path "sys/policies/acl/*" {
  capabilities = [ "create", "read", "update", "delete", "list" ]
}

# Write test data
# Set the path to "secrets/data/demo/*" if you are running `kv-v2`
path "secrets/demo/*" {
  capabilities = [ "create", "read", "update", "delete", "list" ]
}

This can be done through the web interface or the command line; we will not dwell on it now. In our example, we perform all actions with the root token. Note that you should not do this in production environments, and the root token itself should be kept as far from prying eyes as possible.
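The kv-v2 remark in the policy comment points at a detail that is easy to trip over: with version 2 of the KV engine, the CLI path secrets/demo/app/service is served by the API, and matched by policies, at secrets/data/demo/app/service. Below is a minimal sketch of this mapping, assuming the engine is mounted at secrets/ as in this article. The helper names are illustrative, and the nested data.data layout is the kv-v2 read response shape.

```python
import json


def kv2_api_path(cli_path: str, mount: str = "secrets") -> str:
    """Map a `vault kv` CLI path to the kv-v2 data path used by policies and the HTTP API."""
    prefix = mount.strip("/") + "/"
    if not cli_path.startswith(prefix):
        raise ValueError(f"{cli_path!r} is not under mount {mount!r}")
    return f"{prefix}data/{cli_path[len(prefix):]}"


def extract_kv2_data(response_body: bytes) -> dict:
    """Return the key/value pairs from a kv-v2 read response.

    kv-v2 nests the secret under data.data; data.metadata holds version info.
    """
    return json.loads(response_body)["data"]["data"]
```

A read of the demo secret would then go to /v1/ followed by kv2_api_path("secrets/demo/app/service").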

Before creating the role itself, we need to define an access policy for it, that is, what the role may do in Vault and with which capabilities. In our case, let’s allow reading the previously created secret.

$ vault policy write -tls-skip-verify app_policy_name - <<EOF
# Read-only permission on secrets stored at 'secrets/demo/app/service'
path "secrets/data/demo/app/service" {
  capabilities = [ "read" ]
}
EOF

Next, we need to create the role with which we will read the secrets:

$ vault write -tls-skip-verify auth/approle/role/my-app-role \
    token_policies="app_policy_name" \
    token_ttl=1h \
    token_max_ttl=4h \
    secret_id_bound_cidrs="0.0.0.0/0","127.0.0.1/32" \
    token_bound_cidrs="0.0.0.0/0","127.0.0.1/32" \
    secret_id_ttl=60m \
    policies="app_policy_name" \
    bind_secret_id=false

We verify that the role was created successfully with the following command:

$ vault read -tls-skip-verify auth/approle/role/my-app-role

Then we get the role-id. We will need this parameter to authorize in Vault.

$ vault read -tls-skip-verify auth/approle/role/my-app-role/role-id

Next is a short excerpt from the documentation, loosely translated.

The RoleID is an identifier that selects the AppRole against which the other credentials are evaluated. When authenticating against the login endpoint, the RoleID is always a required argument (passed as role_id). By default, RoleIDs are unique UUIDs, which allows them to serve as secondary secrets alongside the other credential information. However, they can be set to particular values to match introspected information about the client (for example, the client’s domain name).

Now let’s look at the parameters that we need to create a role.

role_id – A required credential at the login endpoint. The constraints of the AppRole that role_id points to are applied to the login.

bind_secret_id – Whether a secret_id must also be presented at the login endpoint. If the value is true, Vault Agent will not be able to log in with the role_id alone. The parameter is set when creating the role.

Additionally, you can configure other restrictions on the AppRole. For example, secret_id_bound_cidrs allows logins only from IP addresses that belong to the CIDR blocks configured on the AppRole.
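To make the CIDR restriction concrete, here is a small sketch of the kind of check it implies: a client address is accepted only if it falls into at least one of the configured blocks. This is an illustration written with Python’s ipaddress module, not Vault’s own implementation, and the function name and sample addresses are assumptions.

```python
import ipaddress
from typing import Iterable


def ip_allowed(client_ip: str, bound_cidrs: Iterable[str]) -> bool:
    """Return True if client_ip belongs to at least one of the CIDR blocks."""
    addr = ipaddress.ip_address(client_ip)
    return any(
        addr in ipaddress.ip_network(cidr, strict=False)
        for cidr in bound_cidrs
    )
```

With the blocks 0.0.0.0/0 and 127.0.0.1/32 every IPv4 client passes, which is why such a wide block is only suitable for a demo. With 192.168.17.0/24 and 127.0.0.1/32, a client at 10.244.0.15 is rejected.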

The AppRole API documentation can be read here.

Usage

There are two ways to authorize. The first is to pass the full set of parameters required for authorization and receive either a token or a temporary wrapping token that is then exchanged for the main token. As a rule, the wrapping token is made short-lived: it only has to live long enough to, for example, deploy the application to the server and obtain the main token. The application then keeps the main token in memory and uses it, by which time the wrapping token is no longer valid. The second option is trickier and safer, and it is the one we use in our company. Let’s consider both options in more detail.

Option 1

RoleID is equivalent to the username and SecretID is equivalent to the corresponding password. The application needs both to log into the Vault. Naturally, the next question is how to securely deliver these secrets to the client.

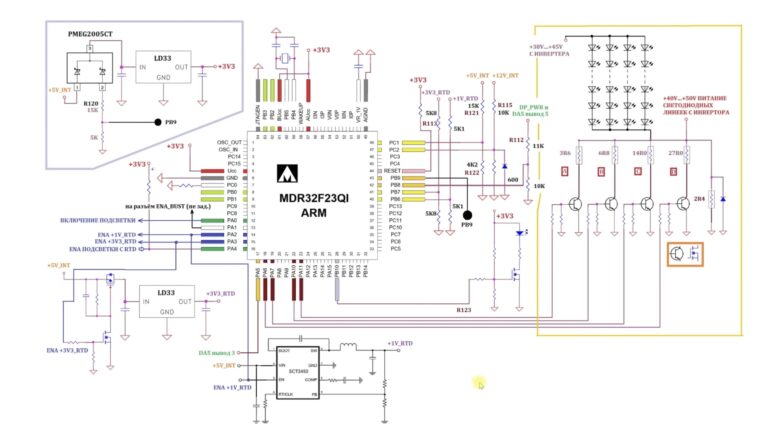

For example, Ansible can be used as a trusted way to deliver the RoleID to the environment where the application is running. The figure, taken from the official documentation, clearly shows the whole process.

To get a wrapping token that will be used to request the secret-id, run the command:

$ vault write -wrap-ttl=600s -tls-skip-verify -force auth/approle/role/my-app-role/secret-id

The resulting wrapping token must then be placed in a file on the file system of the virtual machine where our application runs, at the path the application expects. The token can be used only once. At startup, the application reads the token and uses it to get the secret-id. With the secret-id, the application can request data from Vault as long as the TTL of the secret_id has not expired. This method suits the case where we fill in the application’s initial configuration at launch, for example by populating a Config structure with values using the Singleton pattern.

The recommendations state that the wrapping token should live no longer than the deployment of the application takes. Secret-ids can have a long lifetime, but the Vault developers do not recommend this because of the high memory consumption and the load created when expired tokens are cleaned up. The best recommendation is to use a short lifetime and renew it regularly, which avoids expensive authorization operations and the issuance of new tokens.
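The Option 1 flow (wrapping token, then secret-id, then login) can be sketched with the standard library alone. This is an illustration under stated assumptions: the helper names are made up, and the endpoints sys/wrapping/unwrap and auth/approle/login are taken from the Vault HTTP API. The unwrap call is authenticated with the wrapping token itself, which is why that token works only once.

```python
import json
import urllib.request


def build_unwrap_request(vault_addr: str, wrapping_token: str) -> urllib.request.Request:
    """POST /v1/sys/wrapping/unwrap, using the wrapping token as the client token."""
    url = f"{vault_addr.rstrip('/')}/v1/sys/wrapping/unwrap"
    return urllib.request.Request(
        url, data=b"", method="POST",
        headers={"X-Vault-Token": wrapping_token},
    )


def extract_secret_id(unwrap_body: bytes) -> str:
    """The unwrapped response is the original secret-id response."""
    return json.loads(unwrap_body)["data"]["secret_id"]


def build_approle_login(vault_addr: str, role_id: str, secret_id: str) -> urllib.request.Request:
    """POST /v1/auth/approle/login with the RoleID and SecretID pair."""
    url = f"{vault_addr.rstrip('/')}/v1/auth/approle/login"
    body = json.dumps({"role_id": role_id, "secret_id": secret_id}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )
```

An application would send the first request once at startup, keep the secret-id in memory, and send the second request to obtain its token.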

Option 2

Instead of obtaining a secret-id yourself, you can use Vault Agent, which, knowing only the role-id, can log in and obtain an access token. The agent also takes over renewing this token. It can hand over both a wrapped token and a ready-to-use one.

Let’s prepare the agent configuration, vault-agent.hcl. In this demonstration configuration, we enable writing the received tokens to disk. As a result, we get two files: a wrapped token and a regular token ready for use. Note that writing tokens to disk is not required.

pid_file = "./pidfile"

auto_auth {
  method "approle" {
    mount_path = "auth/approle"
    config = {
      role_id_file_path = "./roleID"
      secret_id_response_wrapping_path = "auth/approle/role/my-app-role/secret-id"
    }
  }

  sink {
    type = "file"
    wrap_ttl = "30m"
    config = {
      path = "./token_wrapped"
    }
  }

  sink {
    type = "file"
    config = {
      path = "./token_unwrapped"
    }
  }
}

vault {
  address = "https://10.10.10.10:8200"
}

Launch Vault Agent.

$ vault agent -tls-skip-verify -config=vault-agent.hcl -log-level=debug

After the agent starts, two files appear on the file system according to our configuration. The first file is in JSON format and contains the wrapped token data. The second contains the token ready to be used for requests. The agent takes care of renewing these tokens.

This configuration makes sense when our application constantly reads data from or writes data to Vault, and when we want to protect our data as much as possible.
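If the application reads the token from the file sink rather than from memory, it should pick up the new value whenever the agent rewrites the file. Here is a minimal sketch of such a reader. It assumes the unwrapped sink file (./token_unwrapped in the configuration above) holds just the token text; the class name is hypothetical.

```python
import os


class SinkToken:
    """Cache the token from a Vault Agent file sink and reload it when the file changes."""

    def __init__(self, path: str) -> None:
        self.path = path
        self._mtime_ns = None
        self._token = None

    def get(self) -> str:
        """Return the current token, re-reading the file only if its mtime changed."""
        mtime_ns = os.stat(self.path).st_mtime_ns
        if mtime_ns != self._mtime_ns:
            with open(self.path, encoding="utf-8") as fh:
                self._token = fh.read().strip()
            self._mtime_ns = mtime_ns
        return self._token
```

Calling get() before each request keeps the application in step with the agent without restarting it.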

From theory to practice

As an example, we will configure a demo application and an nginx server. For the sake of brevity, I’ll omit some of the details, but here is a link to the repository where you can see everything yourself.

For speed, we will deploy Vault in a Kubernetes cluster. You can use Minikube or Docker Desktop for Mac by enabling the Kubernetes Cluster option there. All the necessary manifests are located in the repository at the link above in the manifests folder.

You can also run vault on your work machine. To do this, you need to download the binary file and run it.

We write demo data to Vault.

$ vault kv put secrets/demo/app/nginx responseText="Hello from Vault"

We create a policy.

$ vault policy write -tls-skip-verify nginx_conf_demo - <<EOF
# Read-only permission on secrets stored at 'secrets/demo/app/nginx'
path "secrets/data/demo/app/nginx" {
  capabilities = [ "read" ]
}
EOF

We create a role.

$ vault write -tls-skip-verify auth/approle/role/nginx-demo \
    token_policies="nginx_conf_demo" \
    token_ttl=1h \
    token_max_ttl=4h \
    secret_id_bound_cidrs="0.0.0.0/0","127.0.0.1/32" \
    token_bound_cidrs="0.0.0.0/0","127.0.0.1/32" \
    secret_id_ttl=60m \
    policies="nginx_conf_demo" \
    bind_secret_id=false

We get the role-id.

$ vault read -tls-skip-verify auth/approle/role/nginx-demo/role-id

The manifests are in the demo folder. The nginx folder contains everything needed for our example to work. There is also an app folder with a demo application written in Go that reads the secrets prepared in the first, theoretical part.

I will just focus on explanations of the nginx manifests.

As we can see from the deployment, we have multiple containers.

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-autoreload
  labels:
    role: nginx-reload-test
...
      initContainers:
        - name: init-nginx-config
          image: vault:1.9.0
          imagePullPolicy: IfNotPresent
...
      containers:
        - name: nginx
          image: nginx:1.21.4-alpine
          imagePullPolicy: IfNotPresent
...
        - name: vault-agent-rerender
          image: vault:1.9.0
          imagePullPolicy: IfNotPresent
...
      volumes:
        - name: vault-nginx-template
          configMap:
            name: vault-nginx-template
        - name: vault-agent-config
          configMap:
            name: vault-agent-configs
        - name: nginx-rendered-conf
          emptyDir:
            medium: Memory

The init container generates the initial configuration by reading the secret string and writing it into the nginx configuration file. The nginx container reads this configuration file and returns the secret string to the browser. The third container runs Vault Agent, and this is where all the magic happens.

Here is the most interesting part of the config:

template {
  source      = "/etc/vault/config/template/nginx/nginx.conf.tmpl"
  destination = "/etc/vault/config/render/nginx/nginx.conf"
  command     = "ps ax | grep 'nginx: maste[r]' | awk '{print $1}' | xargs kill -s HUP"
}

template_config {
  static_secret_render_interval = "1m"
}

Here we tell the agent to check the secret in Vault for changes once a minute. You can do it less often or more often, depending on your tasks. Whenever the config is rendered, the agent runs the given command, which sends the HUP signal to the nginx master process. The master, in turn, starts new workers with the new configuration and gracefully shuts down the old ones. In this way nginx gently rereads its configuration.

Now, if we change our phrase in Vault, the agent overwrites the config and forces nginx to reread it and return the new data.
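The render-then-reload cycle can be illustrated with a short sketch. It is not how Vault Agent is implemented, and the agent’s real templates use their own template syntax rather than Python’s string.Template; the function names here are assumptions. The idea it shows is the same, though: render the template, rewrite the file only when the result differs, and signal nginx only in that case.

```python
import os
import signal
import tempfile
from string import Template


def render_if_changed(template_text: str, values: dict, dest_path: str) -> bool:
    """Render the template and rewrite dest_path only if the result differs.

    Returns True when the file was (re)written, i.e. when a reload is needed.
    """
    rendered = Template(template_text).substitute(values)
    try:
        with open(dest_path, encoding="utf-8") as fh:
            if fh.read() == rendered:
                return False
    except FileNotFoundError:
        pass  # first render: nothing to compare against
    # Write to a temporary file and rename it, so nginx never sees a half-written config.
    directory = os.path.dirname(os.path.abspath(dest_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w", encoding="utf-8") as fh:
        fh.write(rendered)
    os.replace(tmp_path, dest_path)
    return True


def reload_nginx(master_pid: int) -> None:
    """Ask the nginx master process to reload its configuration (Unix only)."""
    os.kill(master_pid, signal.SIGHUP)
```

A polling loop would call render_if_changed once per interval and call reload_nginx only when it returns True.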

As an experiment with access from a specific CIDR, you can redefine the role the agent logs in with while your application is running. For example, like this:

$ vault write -tls-skip-verify auth/approle/role/nginx-demo \
    token_policies="nginx_conf_demo" \
    token_ttl=1h \
    token_max_ttl=4h \
    secret_id_bound_cidrs="192.168.17.0/24","127.0.0.1/32" \
    token_bound_cidrs="192.168.17.0/24","127.0.0.1/32" \
    secret_id_ttl=60m \
    policies="nginx_conf_demo" \
    bind_secret_id=false

Here we replace the 0.0.0.0/0 subnet with 192.168.17.0/24 or any other subnet in which your application is not running. After the role is changed, the agent will no longer be able to log in to Vault and receive data. To check this, change the value of the secret again and look at the agent logs. There you will see a message stating that authorization is not possible.

Conclusions

What is the secret here? When creating the role, we set the secret_id_bound_cidrs and token_bound_cidrs parameters, which limit the subnets from which a secret-id and a token can be obtained and used. In other words, Vault rejects requests from other networks, and the data cannot be accessed from there. To go all the way, we also set bind_secret_id=false when creating the role, which allows logging in to Vault without passing a secret-id. Vault will take care of it for us.

Thus, to authorize our application we need only one parameter, the role-id. It is not a secret in itself: provided that you have configured the role correctly and limited the subnets from which it can be used, you can survive its leak without changing anything in your system.

I would like to add one important detail. To access Vault through AppRole with only the role-id, you need to authorize through Vault Agent. At the time of writing, the Go library for accessing Vault did not support this method and still required a secret-id.

Consequently, there are architectural aspects of building applications that this article does not cover. In brief, the agent can populate environment variables for the application, which then works with them. The second option is that the application itself obtains an access token during deployment by means of a wrapped token and then reads the secrets from Vault on its own, keeping the secret-id in memory and using it as many times as needed.

It follows from the above that the simplest secure method of authorization in Vault is a properly configured AppRole. This is the method we use in our company to retrieve data from Vault. From there, depending on the circumstances, we can save or pass the secret access token to applications, generate configuration files or set environment variables for applications.

If you liked the article and want a continuation, for example on how to create and work with a PKI, how to monitor and scale a cluster, or how to perform backup and restore, support us with a like and be sure to let us know in the comments.

Do you have any questions about implementing this authorization method? Feel free to leave them in the comments. If you think another way is better, share it there as well: it will be useful for readers to know several ways of working with secrets.