Testing Vault stability using Gremlin

Chaos engineering is an approach to testing application resilience. Roughly speaking, we deliberately break something in a system to see how it will behave, and from this experiment we draw useful conclusions about reliability and vulnerabilities.

We translated an article on how to apply this approach to HashiCorp Vault, a secrets management system.

What is HashiCorp Vault

HashiCorp Vault is an identity-based secrets management and encryption system. A secret is anything you want to restrict access to, such as API encryption keys, passwords, and certificates.

HashiCorp Vault Architecture

Vault supports a multi-server mode, in which multiple Vault servers run together to ensure high availability. High availability (HA) mode is enabled automatically when you use a storage backend that supports it.

When running in HA mode, Vault servers have two states: standby and active. Only one instance is active at any time, and all other instances are hot standbys. Only the active server processes requests, while standby servers redirect all requests to the active one. If the active server is sealed, goes down, or loses network connectivity, one of the standby servers becomes active. The Vault service can continue to operate as long as a majority of the servers (a quorum) remain online. Read more about performance standby nodes in the Vault documentation.
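The majority rule above can be made concrete with a little arithmetic. The sketch below is not part of Vault. It assumes quorum means a strict majority of voting nodes, and the function names are hypothetical.

```python
def quorum_size(voting_nodes: int) -> int:
    """Smallest number of voting nodes that forms a strict majority."""
    if voting_nodes < 1:
        raise ValueError("a cluster needs at least one voting node")
    return voting_nodes // 2 + 1


def failure_tolerance(voting_nodes: int) -> int:
    """Number of voting nodes that can be lost while a majority remains."""
    return voting_nodes - quorum_size(voting_nodes)


# Print cluster size, quorum size, and tolerated failures for a few sizes.
for nodes in (1, 3, 4, 5):
    print(nodes, quorum_size(nodes), failure_tolerance(nodes))
```

Under this rule, a three-node cluster tolerates the loss of one node, and adding a fourth node does not raise that number. Only a fifth node does.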

What is chaos engineering?

Chaos engineering is the practice of finding reliability risks in systems by deliberately introducing faults. This practice helps identify weaknesses in systems, services, and architecture before an actual failure occurs. It can improve availability, lower mean time to resolution (MTTR) and mean time to detect (MTTD), and reduce both the number of bugs that reach the product and the number of failures. For teams that frequently conduct chaos engineering experiments, availability can reach 99.9%.
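To put the 99.9% figure in perspective, an availability target can be converted into a downtime budget. This is a minimal sketch of that arithmetic. The function name and the choice of a 365-day year are assumptions made for illustration.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: int = 365) -> float:
    """Downtime budget, in minutes, implied by an availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


# Budget for a full year and for a 30-day month at 99.9% availability.
print(round(allowed_downtime_minutes(99.9), 1))
print(round(allowed_downtime_minutes(99.9, period_days=30), 1))
```

At 99.9%, the yearly budget is about 525.6 minutes, or under nine hours of downtime.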

When you conduct chaos engineering experiments, you:

increase system performance and stability;

identify blind spots in monitoring, observability, and alerting;

check the stability of the system in case of failure;

study how systems cope with various failures;

help the engineering team prepare for real failures;

improve the architecture for handling failures.

You can learn more about chaos engineering practices and tools in Slurm's article or by watching the webinar.

Chaos engineering and Vault

Because Vault stores and processes sensitive application secrets, it can become a target for attackers. If all Vault instances fail, applications that receive secrets from Vault will be unable to function. Any breach or unavailability of Vault could result in serious damage to an organization's business, reputation, and finances. Here are the main types of threats for Vault:

code and configuration changes that affect application performance;

loss of the leader node;

loss of quorum in the Vault cluster;

unavailability of the primary cluster;

high load on Vault clusters.

To mitigate these risks, teams need to test and verify the resiliency of Vault. This is where chaos engineering comes to the rescue. Let's look at experiments that use Gremlin, a chaos engineering platform.

The goal of chaos engineering

Despite the name, the goal of chaos engineering is not to create chaos but to reduce it: ultimately, you identify problems and fix them. Chaos engineering is not random or uncontrolled testing. It is a methodical approach, so every experiment should be planned and carefully thought through. You must understand when and how to stop an experiment, and how to monitor health checks and the state of your systems.

Remember that chaos engineering is not an alternative to unit tests, integration tests, or performance benchmarking. It complements them and can be carried out in parallel. For example, running chaos engineering experiments and performance tests at the same time can help identify problems that only arise under load. This increases the likelihood of detecting reliability problems that may arise in production.

Five stages of chaos engineering

A chaos engineering experiment consists of five main stages:

Creating a Hypothesis. A hypothesis is an educated guess about how your system will behave under certain conditions, that is, the expected reaction to a certain type of failure. For example, if Vault loses the leader node in a three-node cluster, Vault must continue to respond to requests and another node must be elected leader. When forming a hypothesis, start small and focus on one part of your system. This makes it easier to test that specific part without affecting others.

Definition of Steady State. The steady state of a system is its performance and behavior under normal conditions. Determine the metrics that best indicate the reliability of your system and track them under normal conditions. This is the baseline against which you will compare the results of the experiment. Examples of steady state indicators include vault.core.handle_login_request and vault.core.handle_request. Additional key metrics can be found here.

Creating and conducting an experiment. At this stage, you determine the parameters of the experiment. How will you test your hypothesis? For example, when testing the response time of the Vault application, you can simulate a slow connection by adding latency.

At this stage, you also define the conditions under which you will abort the experiment. For example, if the Vault application's latency exceeds the thresholds set for the experiment, stop it immediately. Note that an aborted experiment is not a failed experiment. It simply means that you have identified a reliability risk.

Once you have defined your experiment and abort conditions, you can set up the experiment in Gremlin.

Track results. During the experiment, track key metrics for your application. See how they compare to the baseline and draw conclusions about the test results. For example, if a “black hole” experiment in your Vault cluster causes a rapid increase in CPU usage, the response timing configured for API requests may be too aggressive. Or the web application might start returning HTTP 500 errors to users instead of clear error messages. In both cases, these are undesirable results that need to be addressed.

Make changes and improvements. After analyzing the results and comparing the indicators, fix the problem. Make the necessary changes to the application or system, deploy the changes, and then verify that the changes fixed the problem by repeating the process. This way you will gradually increase the stability of the system. This is a more efficient approach than trying to make large changes to the entire application at once.
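The steady-state, abort-condition, and tracking stages can be sketched in a few lines. This is an illustration rather than anything Gremlin or Vault provides. The class, the three-standard-deviation band, the metric names other than vault.core.handle_request, and all sample values are assumptions.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class SteadyState:
    """Baseline for one metric, recorded under normal conditions."""
    metric: str
    baseline_mean: float
    baseline_stdev: float

    @classmethod
    def from_samples(cls, metric: str, samples: list[float]) -> "SteadyState":
        # stdev() needs at least two samples.
        return cls(metric, mean(samples), stdev(samples))

    def deviates(self, value: float, tolerance: float = 3.0) -> bool:
        """True when value is more than `tolerance` deviations from the baseline."""
        return abs(value - self.baseline_mean) > tolerance * self.baseline_stdev


def breached(observed: dict[str, float], limits: dict[str, float]) -> list[str]:
    """Abort conditions: metrics above their limit, or missing altogether.

    A missing metric counts as a breach, on the assumption that losing
    visibility during an experiment is itself a reason to stop.
    """
    return [name for name, limit in limits.items()
            if name not in observed or observed[name] > limit]


# Hypothetical baseline samples and abort limits.
baseline = SteadyState.from_samples(
    "vault.core.handle_request", [2.0, 2.5, 1.5, 2.0, 3.0, 1.0])
limits = {"latency_p99_ms": 500.0, "error_rate": 0.05}

print(baseline.deviates(2.4))
print(baseline.deviates(9.0))
print(breached({"latency_p99_ms": 750.0}, limits))
```

Keeping the baseline comparison separate from the abort check reflects the distinction made above: a deviation is a finding to analyze afterwards, while a breached abort condition is a reason to halt the experiment immediately.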

Implementation

This section describes four experiments to test the Vault cluster. Before you can perform these experiments, you will need:

Experiment 1: the impact of losing a leader node

In the first experiment, you will test whether Vault can continue to respond to requests if the leader node becomes unavailable. If the active server is sealed, goes down, or loses network connectivity, one of the standby Vault servers becomes the active instance. You will use a black hole experiment to block network traffic to and from the leader node, and then monitor the health of the cluster.

Hypothesis

If Vault loses a leader node in a three-node cluster, then Vault must continue to respond to requests and another node must become the leader.

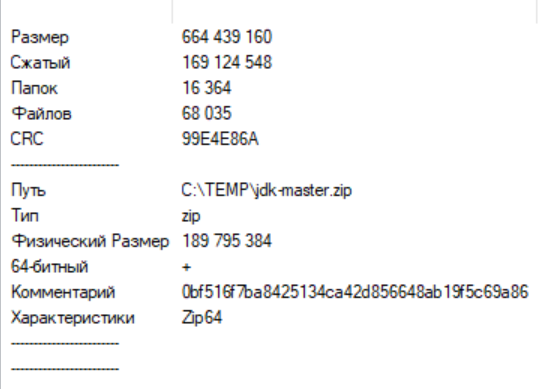

Determining Steady State Using a Monitoring Tool

Our steady state is based on three indicators:

the sum of all requests processed by Vault;

vault.core.handle_login_request;

vault.core.handle_request.

The graph below shows that the number of requests fluctuates around 20 thousand, while handle_login_request and handle_request fluctuate between 1 and 3.

Conducting an experiment

In this experiment, a “black hole” experiment is run against the leader node for 300 seconds (5 minutes). Black hole experiments block network traffic to and from a host and are well suited to simulating a range of network failures, including misconfigured firewalls and network hardware failures. Five minutes is enough time to measure the impact and observe Vault's response.

In the screenshot you can see the current status of the experiment in Gremlin:

Observation

In this experiment, Datadog is used to track metrics. The graphs below show that Vault keeps responding to requests with negligible impact on throughput. This means that a Vault standby node has become the leader node:

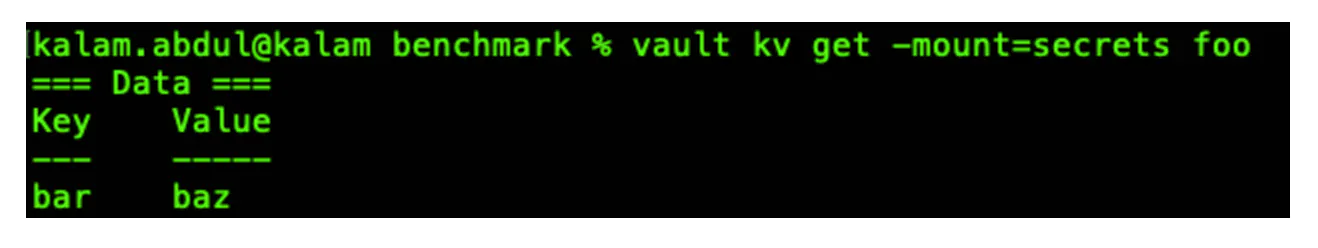

You can verify this by listing the nodes in the cluster with the vault operator raft list-peers command.
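The leader check can also be scripted. The sketch below assumes the JSON layout printed by `vault operator raft list-peers -format=json`, with the peers under `data.config.servers`. The sample document, node names, and addresses are invented for illustration and are not output from the experiment.

```python
import json
from typing import Optional

# Hypothetical sample in the assumed shape of the command's JSON output.
SAMPLE_OUTPUT = """
{
  "data": {
    "config": {
      "servers": [
        {"node_id": "vault-0", "address": "10.0.0.10:8201", "leader": false, "voter": true},
        {"node_id": "vault-1", "address": "10.0.0.11:8201", "leader": true, "voter": true},
        {"node_id": "vault-2", "address": "10.0.0.12:8201", "leader": false, "voter": true}
      ]
    }
  }
}
"""


def raft_servers(raw_json: str) -> list[dict]:
    """Return the list of Raft peers from the command's JSON output."""
    return json.loads(raw_json)["data"]["config"]["servers"]


def current_leader(raw_json: str) -> Optional[str]:
    """Return the node_id of the leader, or None if no peer reports as leader."""
    for server in raft_servers(raw_json):
        if server.get("leader"):
            return server["node_id"]
    return None


def leader_changed(before: str, after: str) -> bool:
    """True when a different node leads after the experiment than before it."""
    return current_leader(before) != current_leader(after)


print(current_leader(SAMPLE_OUTPUT))
```

Capturing the output once before the experiment and once after it, then passing both to `leader_changed`, gives a simple pass/fail signal for the hypothesis.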

Improving Cluster Resilience

Based on these results, no immediate changes are required, but there is room to expand the scope of this test. What happens if two nodes fail? Or all three? If this is a real concern for your team, try repeating the experiment with more nodes taken offline. You can also try scaling the cluster to four nodes instead of three and see how that changes your results. Remember that Gremlin has a Halt button that stops the current experiment if something unexpected happens. Keep the abort conditions in mind and don't be afraid to stop the experiment if they are met.

Experiment 2: Impact of Quorum Loss

The next experiment tests whether Vault can continue to respond to requests without quorum. To do this, a “black hole” experiment disconnects two of the three nodes from the network. In this scenario, Vault will not be able to add or remove a node or commit additional log entries. This guide from HashiCorp describes the steps required to restore the cluster, and this experiment will help test them.

Hypothesis

If Vault loses quorum, it should stop responding. However, by following the guide, we should be able to restore the cluster in a reasonable time.

Vault Steady State Definition

In this experiment, the steady state is simply whether Vault is responsive. To check, we will read a key from Vault.
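The article checks responsiveness by reading a key. As a complementary probe, one could also query Vault's /v1/sys/health endpoint and interpret its HTTP status code. The sketch below maps the endpoint's default status codes to node states. The function names, the base URL parameter, and the timeout are assumptions, and `probe` is shown only as one possible way to fetch the code.

```python
import urllib.error
import urllib.request
from typing import Optional

# Default status codes returned by Vault's /v1/sys/health endpoint.
HEALTH_STATES = {
    200: "initialized, unsealed, and active",
    429: "unsealed and standby",
    472: "disaster recovery secondary",
    473: "performance standby",
    501: "not initialized",
    503: "sealed",
}


def describe_health(status_code: Optional[int]) -> str:
    """Translate a health status code into a readable node state."""
    if status_code is None:
        return "unreachable"
    return HEALTH_STATES.get(status_code, "unknown")


def is_active(status_code: Optional[int]) -> bool:
    """Only an active node answers the health check with 200 by default."""
    return status_code == 200


def probe(base_url: str, timeout: float = 2.0) -> Optional[int]:
    """Return the health status code, or None if the node cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/v1/sys/health", timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # Non-2xx codes such as 429 or 503 arrive as HTTPError.
        return err.code
    except (urllib.error.URLError, OSError):
        return None


print(describe_health(429))
```

A node cut off by a black hole experiment would show up here as unreachable from the probing host, which is a different signal from a reachable node that reports itself as a standby.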

Conducting an experiment

In Gremlin, run another black hole experiment, this time targeting two of the cluster nodes.

Observation

Now that the nodes are down, the Vault cluster has lost quorum. Without quorum, reads and writes cannot occur within the cluster. When trying to get the same key, an error is returned.

Restoration and improvements

Follow this guide from HashiCorp to recover a cluster that has lost two of its three Vault nodes. To do this, you convert it into a single-node cluster. It will take a few minutes to get the cluster back online, but this works as a temporary measure.

A long-term solution may be to implement a multi-data center deployment where you can replicate data. This will improve performance and provide disaster recovery (DR). HashiCorp recommends using DR clusters to avoid downtime and meet service level agreements (SLAs).

Experiment 3: Testing How Vault Handles Latency

The next experiment tests Vault's ability to handle high-latency, low-bandwidth network connections. You add latency to the leader node of your cluster and then monitor the request metrics. This will give you an idea of how latency affects Vault functionality.

Hypothesis

Introducing a delay on the cluster leader node should not cause application timeouts or cluster failures.

Determining key performance indicators (KPIs) from the monitoring tool

This experiment uses the same Datadog metrics as the first experiment: vault.core.handle_login_request and vault.core.handle_request.

Conducting an experiment

This time, use Gremlin to add latency. Instead of running a single experiment, create a Scenario, which lets you run multiple experiments in sequence. Gradually increase the latency from 100 ms to 200 ms over 4 minutes, with 5-second breaks between experiments. (This post on Gremlin's blog explains how the latency attack works.)
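The ramp described above can be planned before it is entered into the tool. The sketch below only computes a schedule and does not call Gremlin. The article does not state how many steps were used, so the five-step split is an assumption, as are the function and parameter names.

```python
def latency_ramp(start_ms: float, end_ms: float, steps: int,
                 total_seconds: float, pause_seconds: float) -> list[tuple[float, float]]:
    """Split a latency ramp into equal steps.

    Returns (latency_ms, run_seconds) pairs. The pauses between steps are
    counted inside total_seconds, so each step runs for the remaining time
    divided evenly.
    """
    if steps < 2:
        raise ValueError("a ramp needs at least two steps")
    run_seconds = (total_seconds - pause_seconds * (steps - 1)) / steps
    if run_seconds <= 0:
        raise ValueError("pauses leave no time for the experiments")
    increment = (end_ms - start_ms) / (steps - 1)
    return [(start_ms + i * increment, run_seconds) for i in range(steps)]


# Assumed split: 5 steps from 100 ms to 200 ms within 4 minutes, 5 s pauses.
for latency_ms, run_seconds in latency_ramp(100, 200, steps=5,
                                            total_seconds=240, pause_seconds=5):
    print(f"{latency_ms:.0f} ms for {run_seconds:.0f} s")
```

With these assumed values, each step adds 25 ms and runs for 44 seconds. Four pauses of 5 seconds bring the total to the stated 4 minutes.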

Observation

In our test, the experiment caused some delays in response time, especially at the 95th and 99th percentiles, but all requests were successful. More importantly, our cluster is stable, as evidenced by the key metrics below:

Improving Cluster Resilience

To make the cluster even more resilient, add non-voting nodes to it. A non-voting node has all of Vault's data but does not count toward quorum calculations. Non-voting nodes can be combined with performance standby nodes to add read scalability to the cluster. This is useful when a large volume of read operations is required. This way, if one or two nodes fail, additional standby nodes can take over and maintain performance.

Experiment 4: Testing How Vault Handles Out of Memory

This final experiment tests Vault's ability to handle reads when memory is low.

Hypothesis

If memory is consumed on the leader node of a Vault cluster, applications should switch to reading from the performance standby nodes. This should not affect performance.

Defining indicators from a monitoring tool

For this experiment, the graphs below show telemetry collected directly from the Vault nodes, specifically the memory allocated and used by Vault.

Conducting an experiment

Try a memory experiment: consume 99% of the Vault node's memory for 5 minutes. This pushes the leader node to its limit and holds it there until the experiment ends or is aborted.

Observation

In this example, the leader node continued to run, and although there were slight delays in response time, all requests were successful. This can be seen in the graph below. Thus, our cluster tolerates high memory load well.

Improving Cluster Resilience

As in the previous experiment, you can use non-voting nodes and performance standby nodes to add computing capacity to the cluster as needed. These nodes add memory but do not affect the quorum calculation.

How to build a culture of using chaos engineering

Teams tend to think about reliability in terms of technology and systems, but there's another side to it: reliability starts with people. To start building a culture of reliability, you need to teach application developers, SREs, incident responders, and other team members to think proactively about reliability.

In a culture of reliability, every member of the organization works to maximize the availability of services, processes and people, reduce the risk of downtime and achieve rapid response to incidents. This culture ultimately focuses on one goal: providing the best possible customer experience. Here are some general tips for building a culture of reliability:

1. Introduce other teams to the concept of chaos engineering.

2. Show the value of chaos engineering to your team (you can use the results of these experiments as evidence).

3. Encourage teams to focus on reliability early in the software development life cycle, not just at the end.

4. Create a culture within the team that encourages experimentation and learning rather than assigning blame for incidents.

5. Implement new chaos engineering tools and methods.

6. Use chaos engineering to regularly test systems and processes, automate experiments, and conduct organized team reliability events (often called “Game Days”).

If you want to learn the practices of chaos engineering, come to Slurm for the Chaos Engineering course. You'll learn how to formulate hypotheses and run experiments on your own using ChaosBlade and ChaosMesh, as well as other tools like Gremlin.