How to organize the process of testing hypotheses in a team and save several tens of millions of rubles

Article author: Dmitry Kurdyumov

He has taken part in Agile transformations at some of the largest companies in Russia (Alfa Bank, MTS, X5 Retail Group) and has international experience at a startup abroad.

Hypothesis testing within a product team is an integral part of a successful business. It lets you make informed decisions based on facts rather than assumptions, reduce risk, and increase the likelihood that the product succeeds in the market. At today's rapid pace of development, where user requirements change constantly, hypothesis testing lets the team adapt to change quickly and keep innovating, which makes this topic relevant and necessary for companies.

In this article, I want to share a case study of how we set up the process of testing hypotheses in one product company.

Below is a detailed guide to what the process looks like, which you can use as a starting point. If you need detailed advice or help with implementation, feel free to contact me directly on Telegram.

What does the process look like?

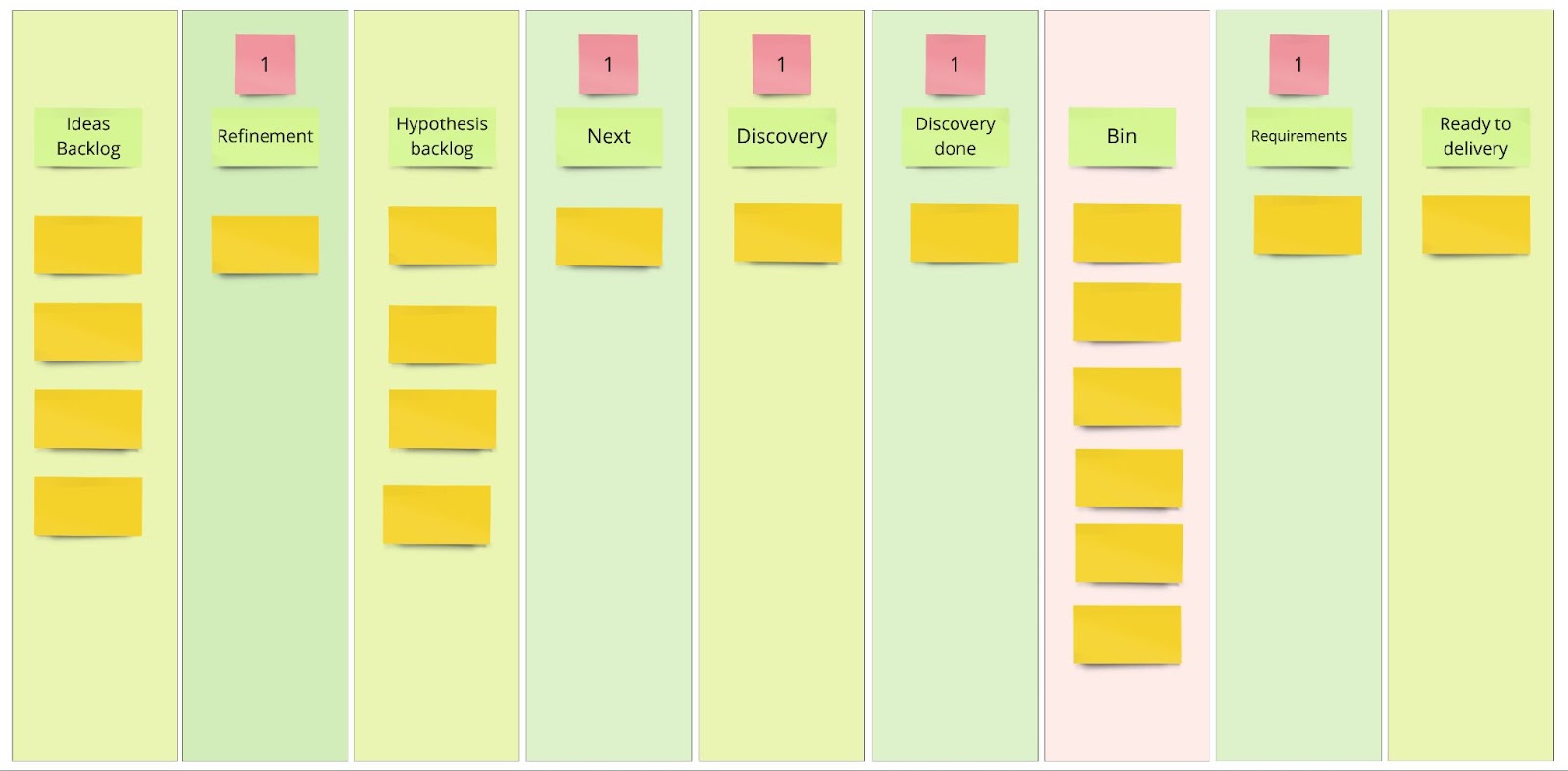

We visualized the process of testing hypotheses on a Kanban board (see the picture above), which we built in Jira. The result was a hypothesis backlog for the domain, with separate views for each unit and product team.

Each team member can put an idea into the Ideas Backlog stage.

At the next stage, the product manager must turn any idea into a product hypothesis. Well-described ideas have a much higher chance of getting into the backlog for review.

How to describe an idea?

Describe your idea in as much detail as possible. Try to structure the description so that it meets the SMART criteria. Any information is useful.

Answers to the following questions will help us a lot.

Specific.

What’s the idea? What change needs to be made in the product?

What result do we expect to get?

Which customer segment is targeted by the change? Who will benefit?

Measurable.

How will we measure the result?

What metrics will we use to track progress towards achieving the goal?

What value of the metric should we achieve in order to consider the goal achieved?

Achievable.

Why do we believe that the goal of the idea is achievable?

What will help you achieve it?

What will stop you from achieving it?

Relevant.

What problem are we solving?

How does the idea fit into the company’s strategy?

What are the strategic goals?

If possible, provide links to materials confirming the value and validity of the idea.

Time-bound.

Are there any time limits? What are they subject to?

What is the desired timeline for the implementation of the idea?

Important! The specified time frames are not a product team commitment. The specific deadlines for testing the hypothesis and the timing of implementation are determined by the team at the next steps of the process.

Source. Optionally, indicate the source of the idea and attach a link to the source from the database.

For example:

UX research

Insight from the data

Team brainstorm

Opinion of a stakeholder or team member

Customer service

User feedback

Analysis of released projects and tested hypotheses

Market, trends, media

Refinement stage

The product manager selects one idea from the ideas backlog for clarification and development.

Having finished refining the hypothesis, the product manager takes the next idea into work.

How to formulate a hypothesis?

Check if the title is correct.

The title should be as concise as possible, answer the question “What needs to be done?”, and reflect the essence of the hypothesis.

Formulate a hypothesis.

Format: If

Example:

Initial formulation of the idea: Offer the user additional accessories when buying a smartphone.

Hypothesis: If we offer the user the option to add accessories to the cart along with the selected smartphone, then we will increase the average order value by X%.

Rework and expand the original idea statement so that the hypothesis is as clear as possible.

Some questions to help develop the idea:

What needs to change in the product?

What result do we expect to get?

Which customer segments are targeted by the change? What will be the coverage? (This does not always need to be filled in.)

What problem are we solving, or what goal are we pursuing?

Why do we believe that the problem exists and is important, or that the goal is relevant? Provide all links to insights and materials confirming the problem.

Why do we think that the goal is achievable? What will make it achievable?

How do we measure the result? What additional metrics, informational metrics and countermetrics will we take into account?

Are there any time limits and what are they caused by?

In the Hypothesis card in Jira, we built in the following template, which is filled in automatically when a new hypothesis is created:

Stage Hypothesis backlog

Based on the collected knowledge about the hypothesis, it should be evaluated.

Evaluation is carried out collectively, by closed voting. The final score is the average of the votes.

The following people take part in the voting:

Members of the discovery team.

The author of the idea.

Relevant experts who will help evaluate the hypothesis more accurately (developers, stakeholders, cross-functions).

The responsible product manager must ensure that the hypothesis is evaluated by everyone needed for an accurate evaluation.

If the estimates are widely spread, people understand the hypothesis differently, and this is a signal to sync up or refine it further.

The estimate can and should be reviewed regularly as new knowledge about the hypothesis becomes available.

After the Refinement stage, the hypotheses need to be evaluated using ICE (Impact, Confidence, Ease).

Result: The team has an estimated backlog and understands which hypotheses will go to the next stage.

The company's strategy and focus change over time, and new inputs appear. Therefore, estimates need to be revised. We revised ours once a quarter.
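The voting described in this section can be sketched in a few lines of Python. This is only an illustration, not the exact calculation used in the case: the 1 to 10 scale, the multiplication of the three averaged components, the `spread_threshold` value, and the function name `ice_from_votes` are all assumptions.

```python
from statistics import mean, pstdev

COMPONENTS = ("impact", "confidence", "ease")


def ice_from_votes(votes, spread_threshold=2.0):
    """Average closed votes per ICE component and flag widely spread ones.

    votes: one dict per voter with scores for 'impact', 'confidence', 'ease'.
    The 1-10 scale and the threshold are assumptions, not part of the case.
    """
    # The final estimate of each component is the average of the votes.
    averages = {c: mean([v[c] for v in votes]) for c in COMPONENTS}
    # Population standard deviation is used as a simple measure of spread.
    spreads = {c: pstdev([v[c] for v in votes]) for c in COMPONENTS}
    # Assumption: ICE score = Impact x Confidence x Ease.
    score = averages["impact"] * averages["confidence"] * averages["ease"]
    # A wide spread means people read the hypothesis differently.
    needs_sync = [c for c in COMPONENTS if spreads[c] > spread_threshold]
    return {"score": score, "averages": averages, "needs_sync": needs_sync}


votes = [
    {"impact": 8, "confidence": 6, "ease": 4},
    {"impact": 6, "confidence": 6, "ease": 6},
]
result = ice_from_votes(votes)
print(result["score"], result["needs_sync"])
```

If `needs_sync` is not empty, the hypothesis goes back for discussion or refinement before its score is used for prioritization.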

Stage Next

An important stage in the selection of hypotheses for work. The team meets once a week for Discovery Sprint planning and selects the next hypothesis to work on, based on ICE priority and general discussion. The limit for this column is 1 hypothesis at a time.

Also at this meeting, the team decides how it will test the hypothesis and forms a test plan by decomposing the hypothesis into the tasks needed to test it.

It is important to make sure at the meeting that there are answers to all of the following questions (some of them can be worked out BEFORE planning):

What does the experience of the test and control group look like?

What users are we testing on? (Which users and at what point become part of the experiment).

What is the sample size of users for the experiment?

How long will the experiment last?

How do we measure change? Are the metrics sufficient? Expand the set if necessary.

Clearly and unambiguously formulate what will count as success for the test and what will not.
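For the sample size question, a rough estimate can be made before planning. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the function name, the default significance level (0.05) and power (0.8), and the example rates are assumptions for illustration, and other metrics need other formulas.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate users needed in EACH group of a two-sided A/B test
    that compares two conversion rates (normal approximation)."""
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)          # desired test power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical example: baseline conversion 10%, hoping to reach 12%.
print(sample_size_per_group(0.10, 0.12))
```

Dividing the result by the expected daily traffic per group gives a first estimate of how long the experiment has to run.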

Discovery Stage and Hypothesis Testing Tools

This is one of the most important stages: the hypothesis is tested and the experiment is launched.

The main task of the Discovery Team at this stage is to confirm or disprove the hypothesis as accurately as possible with as few resources as possible.

Think about how you will test the hypothesis.

It is clear that the best test of a hypothesis is a working product and real numbers. But it is not always advisable to use the entire arsenal of methods for verification.

Your task is to find the fastest and cheapest way without the involvement of development (or with minimal involvement of it).

When choosing a set of tools, be guided by an understanding of the cost of error and the cost of lost profits.

For example, testing a hypothesis should definitely not take longer or cost more than implementing the task in production.

Some possible verification tools:

Calculation of the economic model;

Hallway tests of a prototype;

Customer surveys;

Fake feature in an A/B test;

Pre-sales;

Growth hack;

Qualitative and quantitative UX tests (first click, comprehension test, unmoderated test, etc.);

MVP;

And many others.

The final design of the experiment can combine several ways to test the hypothesis.

Run the experiment!

It is important not to change the conditions of the experiment while it is running: this may affect the correctness of the results and the honesty of the conclusions.

Summarize the experiment

Have you met the criteria for success?

Was the experiment carried out correctly?

What new insights did we gain from the experiment? Write them down in your insights database.

Share the results with one of your colleagues, ask for a review of the experiment and conclusions.

Stage Discovery done

Decide on next steps.

As a result, the hypothesis either turns into a task and moves to the next stage, Requirements, or is closed as unconfirmed or no longer relevant and moves to the Bin stage.

At this stage we also had a mandatory criterion: a review by other product managers. At the end of the Discovery Sprint, we held a Sprint Review meeting at which each product manager presented the results of their experiment and their conclusions, and then the other product managers and members of the Discovery teams asked questions. This helped a lot to share knowledge and experience and to ask each other the right questions.

Stage Requirements

If the hypothesis was confirmed, then at this stage the product manager formulated a solution and described the boundaries of the MVP. User story mapping was often used for this: it helped to decompose the task into user steps and shape the MVP of the solution. Design mockups were also prepared here. These were not final versions, but drafts that could then be brought to PBR (grooming) with the team.

Stage Bin

At any stage of the process, we can abandon the hypothesis and move it to the Bin status with an appropriate comment and justification.

Stage Ready to Delivery

Confirmed hypotheses are stored in the development backlog. Here, an ICE assessment is carried out according to the same principle as described above. Then, during development planning, the task at the top of the backlog is taken next.

Metrics

In order to track the effectiveness of the process, we have implemented a number of metrics.

Key success metric for the Discovery process:

The percentage of green tests after feature development. What is a green test? After a feature is developed, it goes through an A/B test. If the version with the new feature shows an increase in metrics, the test is green; if there is no growth, the test is gray; if the metrics drop, the test is red.
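The color rule can be sketched as a small function. This is one possible reading rather than the exact rule from the case: it assumes the metric is a conversion rate, treats "no growth" as "no statistically significant difference" in a two-proportion z-test, and uses a hypothetical function name and a significance level of 0.05.

```python
from math import sqrt
from statistics import NormalDist


def classify_ab_test(control_conversions, control_users,
                     test_conversions, test_users, alpha=0.05):
    """Return 'green', 'gray' or 'red' for an A/B test on a conversion rate."""
    p_control = control_conversions / control_users
    p_test = test_conversions / test_users
    # Pooled conversion rate under the "no difference" assumption.
    pooled = ((control_conversions + test_conversions)
              / (control_users + test_users))
    std_error = sqrt(pooled * (1 - pooled)
                     * (1 / control_users + 1 / test_users))
    if std_error == 0:
        return "gray"  # no variation at all, nothing to compare
    z = (p_test - p_control) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided test
    if p_value >= alpha:
        return "gray"  # the difference is within noise
    return "green" if z > 0 else "red"


# Hypothetical numbers: 10% conversion in control, 12% in the test group.
print(classify_ab_test(1000, 10000, 1200, 10000))
```

The key Discovery metric is then the share of "green" results among all features that went through such a test.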

We need Discovery processes to increase confidence that a feature is worth building, so that after development the percentage of green tests is higher. That is why we started building Discovery processes.

But you cannot affect the percentage of green tests immediately. To track progress, you need counter-metrics through which you can influence the percentage of green tests, for example:

Number of hypotheses tested per period

Average speed of hypothesis testing

The ratio of confirmed hypotheses to discarded ones

These metrics were analyzed at every retrospective after the Discovery Sprint. We looked at the dynamics and how the process works for us, how the counter-metrics change and how the target metric depends on them.
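The three metrics listed above are easy to compute from an export of the board. The sketch below assumes a hypothetical record structure (`HypothesisRecord`) rather than any real Jira API, and interprets the average testing speed as the average number of days from start to finish of a test.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class HypothesisRecord:
    """Hypothetical export of one tested hypothesis from the board."""
    title: str
    started: date
    finished: date
    confirmed: bool


def discovery_metrics(hypotheses, period_start, period_end):
    """Compute the process metrics for hypotheses finished within a period."""
    in_period = [h for h in hypotheses
                 if period_start <= h.finished <= period_end]
    confirmed = sum(1 for h in in_period if h.confirmed)
    discarded = len(in_period) - confirmed
    durations = [(h.finished - h.started).days for h in in_period]
    return {
        "tested": len(in_period),
        "avg_days_to_test": sum(durations) / len(durations) if durations else None,
        "confirmed_to_discarded": confirmed / discarded if discarded else None,
    }


# Illustrative records, not data from the case.
records = [
    HypothesisRecord("Accessories in cart", date(2024, 1, 8), date(2024, 1, 13), True),
    HypothesisRecord("Pre-order button", date(2024, 1, 15), date(2024, 1, 22), False),
    HypothesisRecord("New onboarding", date(2024, 2, 1), date(2024, 2, 10), False),
]
print(discovery_metrics(records, date(2024, 1, 1), date(2024, 3, 31)))
```

Running this per sprint or per quarter gives the dynamics to discuss at the retrospective.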

How do Discovery processes save money?

Discovery processes improve confidence that a feature is worth building. With a successful Discovery process, the share of tasks that deliver an effect grows, so you save the money that would otherwise be spent on wasted features.
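The reasoning can be turned into back-of-the-envelope arithmetic. The sketch below is a deliberately simplified model: it assumes that every feature whose test is not green counts as wasted spend, and all numbers in the example are invented for illustration, not taken from the case.

```python
def wasted_spend(features_built, avg_feature_cost, green_share):
    """Money spent on features that did not show growth in an A/B test."""
    if not 0 <= green_share <= 1:
        raise ValueError("green_share must be between 0 and 1")
    return features_built * avg_feature_cost * (1 - green_share)


def discovery_savings(features_built, avg_feature_cost,
                      green_share_before, green_share_after):
    """Difference in wasted spend before and after introducing Discovery."""
    before = wasted_spend(features_built, avg_feature_cost, green_share_before)
    after = wasted_spend(features_built, avg_feature_cost, green_share_after)
    return before - after


# Invented example: 40 features a year at 2 million rubles each,
# share of green tests growing from 25% to 50%.
print(discovery_savings(40, 2_000_000, 0.25, 0.50))
```

Substitute your own number of features, average cost per feature, and share of green tests to get an estimate for your team.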

If you liked the article, join me at my free webinar, where we look at cases and examples of Kanban systems. You can also subscribe to my Telegram channel and come for a consultation.