Texthero pandas guide

Lightweight Natural Language Processing (NLP)

I'm always on the lookout for new tools to simplify my natural language processing workflow. When I came across a short video clip showing off Texthero's functionality, I knew I had to give it a try. Texthero is designed as a pandas wrapper, which makes preprocessing and analyzing text in pandas Series easier than ever. I immediately pulled up the documentation, opened my laptop, and downloaded a couple thousand discussion threads from Reddit to test the new library.

Note: Texthero is still in beta! There may be bugs, and its processing pipelines may change. I found a bug in the wordcloud functionality and reported it. It should be fixed in the next update!

Texthero overview

I'll go into much more detail when working through the code, but in brief, Texthero is broken down into four functional modules:

Preprocessing: The preprocessing module is all about efficiently cleaning pandas text Series. Under the hood, it mostly uses regular expressions (regex).

Natural Language Processing (NLP): The NLP module contains a few core functions, such as named entity recognition and noun chunk extraction. It uses spaCy under the hood.

Representation: The representation module is used to create vector representations of text with various algorithms. It also includes Principal Component Analysis (PCA) and k-means. It uses scikit-learn for TF-IDF and Count, and embeddings are loaded pre-computed from language models.

Visualization: The visualization module is used to display scatter plots of the representations or to generate word clouds. It currently has only a few functions and uses Plotly and WordCloud.

Check out the documentation at texthero.org ("Text preprocessing, representation and visualization from zero to hero") for a complete list of features.

Dependencies and data

Thanks to pip, installation was easy, but I ran into a pandas version conflict when trying to install it in my environment alongside Apache Airflow. It also took a while to install in a fresh environment, because it relies on a lot of other libraries on the backend, and it downloads a few additional items the first time it is imported.

I also use PRAW to pull data from Reddit.

```python
# Install Texthero (the leading ! runs the command from a Jupyter notebook)
!pip install texthero

import praw
import pandas as pd
import texthero as hero

from config import cid, csec, ua  # Credentials for PRAW
```

Note that the first time you import Texthero, it downloads some NLTK and spaCy resources.

Getting the data

I'm pulling data from the Teaching subreddit to see if we can identify any themes around concerns about returning to school in the fall in COVID-stricken America.

# Создание соединения с reddit

reddit = praw.Reddit(client_id= cid,

client_secret= csec,

user_agent= ua)

# Список для конверсии фрейма данных

posts = []

# Возврат 1000 новых постов из teaching

new = reddit.subreddit('teaching').new(limit=1000)

# Возврат важных атрибутов

for post in new:

posts.append([post.title, post.score, post.num_comments, post.selftext, post.created, post.pinned, post.total_awards_received])

# Создание фрейма данных

df = pd.DataFrame(posts,columns=['title', 'score', 'comments', 'post', 'created', 'pinned', 'total awards'])

# Возврат 3-х верхних рядов из фрейма данных

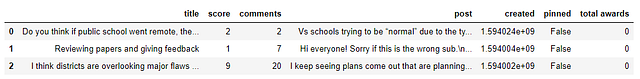

df.head(3)By using PRAW you can easily extract data from Reddit and load it into a pandas frame.

Here I receive 1000 new posts from teaching. df.head(3) will output a data frame something like this:
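The loop above only reads a handful of attributes from each submission, so the conversion to a DataFrame can be isolated in a small function and tried without Reddit credentials. This is a minimal sketch, not part of the original code: `posts_to_df` is a hypothetical helper, and the `SimpleNamespace` records are made-up stand-ins that only mimic the PRAW attributes used here.

```python
from types import SimpleNamespace

import pandas as pd

# Attributes read from each PRAW submission, and the column names used in this article
ATTRS = ['title', 'score', 'num_comments', 'selftext',
         'created', 'pinned', 'total_awards_received']
COLUMNS = ['title', 'score', 'comments', 'post',
           'created', 'pinned', 'total awards']


def posts_to_df(submissions):
    """Hypothetical helper: build a DataFrame from any iterable of submission-like objects."""
    rows = [[getattr(sub, attr) for attr in ATTRS] for sub in submissions]
    return pd.DataFrame(rows, columns=COLUMNS)


# Made-up stand-ins for PRAW submissions so the function can run offline
fake_posts = [
    SimpleNamespace(title='First post', score=10, num_comments=2, selftext='Hello',
                    created=1596240000.0, pinned=False, total_awards_received=0),
    SimpleNamespace(title='Second post', score=3, num_comments=0, selftext='',
                    created=1596243600.0, pinned=False, total_awards_received=1),
]

df = posts_to_df(fake_posts)
print(df.head(3))
```

With a live connection, the same helper would be called as `posts_to_df(reddit.subreddit('teaching').new(limit=1000))`.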

Preprocessing with Texthero

The real highlight of Texthero is how simple it makes preprocessing. Can't remember regex syntax? Texthero has your back! Just call hero.clean() and pass it a DataFrame column (a pandas Series):

```python
df['clean_title'] = hero.clean(df['title'])
```

When you call clean(), the following seven functions are applied by default:

- fillna(s): replaces missing values with empty strings;
- lowercase(s): converts all text to lowercase;
- remove_digits(s): removes standalone blocks of digits;
- remove_punctuation(s): removes all punctuation (!"#$%&'()*+,-./:;<=>?@[\]^_`{|}~);
- remove_diacritics(s): removes all accent marks from strings;
- remove_stopwords(s): removes all stop words;
- remove_whitespace(s): removes extra whitespace, leaving a single space between words.
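To see roughly what those seven steps do to a Series, here is a minimal approximation built from plain pandas string methods. It is a sketch under assumptions, not Texthero's implementation: `approx_clean` and `strip_accents` are hypothetical names, punctuation is replaced with spaces, and the stop word set is a tiny made-up sample rather than Texthero's default list.

```python
import string
import unicodedata

import pandas as pd

# Hypothetical tiny stop word set; Texthero ships a much larger default list
STOPWORDS = {'the', 'a', 'to', 'in', 'of', 'and', 'for'}

# Translation table that maps every ASCII punctuation character to a space
PUNCT_TO_SPACE = str.maketrans(string.punctuation, ' ' * len(string.punctuation))


def strip_accents(text: str) -> str:
    # Decompose accented characters, then drop the combining marks
    decomposed = unicodedata.normalize('NFKD', text)
    return ''.join(ch for ch in decomposed if not unicodedata.combining(ch))


def approx_clean(s: pd.Series) -> pd.Series:
    """Rough approximation of the default cleaning steps, in the same order."""
    s = s.fillna('')                                          # fillna
    s = s.str.lower()                                         # lowercase
    s = s.str.replace(r'\b\d+\b', ' ', regex=True)            # remove_digits (standalone blocks)
    s = s.map(lambda t: t.translate(PUNCT_TO_SPACE))          # remove_punctuation
    s = s.map(strip_accents)                                  # remove_diacritics
    s = s.map(lambda t: ' '.join(w for w in t.split() if w not in STOPWORDS))  # remove_stopwords
    s = s.str.replace(r'\s+', ' ', regex=True).str.strip()    # remove_whitespace
    return s


result = approx_clean(pd.Series(['Back to School in 2020!', None, 'Café   rules']))
print(result.tolist())
```

Note that the digit pattern only matches digits standing on their own, so a token like "covid19" would keep its digits.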

Custom cleaning

If the default pipeline doesn't do what you need, you can easily create your own custom cleaning pipeline. For example, if I want to keep the stop words and stem the remaining words, I can comment out remove_stopwords and add texthero.preprocessing.stem() to the pipeline:

from texthero import preprocessing

# Создание настраиваемой процедуры чистки

custom_pipeline = [preprocessing.fillna

, preprocessing.lowercase

, preprocessing.remove_digits

, preprocessing.remove_punctuation

, preprocessing.remove_diacritics

#, preprocessing.remove_stopwords

, preprocessing.remove_whitespace

, preprocessing.stem]

# Передача custom_pipeline аргументу процедуры

df['clean_title'] = hero.clean(df['title'], pipeline = custom_pipeline)

# Передача custom_pipeline аргументу процедуры

df['clean_title'] = hero.clean(df['title'], pipeline = custom_pipeline)

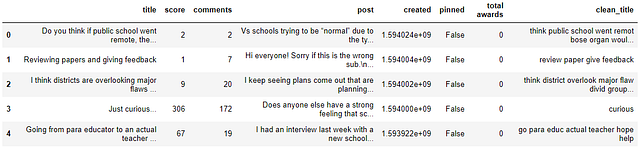

df.head()note that custom_pipeline is a list of preprocessing functions. Check out the documentation for a complete list!

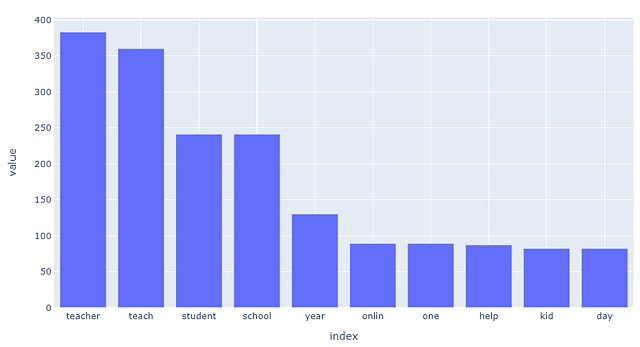
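A pipeline is just an ordered list of functions that each take a Series and return a Series, with every function receiving the previous one's output. The sketch below reproduces that idea with `functools.reduce`, assuming clean() applies the list in order, as the pipeline argument suggests. `apply_pipeline`, `lowercase`, `strip_ws`, and `drop_plural_s` are hypothetical stand-ins, and `drop_plural_s` is a deliberately naive substitute for a real stemmer such as `preprocessing.stem`.

```python
from functools import reduce

import pandas as pd


def apply_pipeline(s: pd.Series, pipeline) -> pd.Series:
    """Apply each function in `pipeline` to the Series, in list order."""
    return reduce(lambda acc, func: func(acc), pipeline, s)


# Hypothetical Series -> Series steps standing in for texthero.preprocessing functions
def lowercase(s: pd.Series) -> pd.Series:
    return s.str.lower()


def strip_ws(s: pd.Series) -> pd.Series:
    # Collapse runs of whitespace and trim the ends
    return s.str.replace(r'\s+', ' ', regex=True).str.strip()


def drop_plural_s(s: pd.Series) -> pd.Series:
    # Naive: drop a trailing "s" from any word longer than one letter
    return s.str.replace(r'\Bs\b', '', regex=True)


titles = pd.Series(['  Teachers   AND Students '])
result = apply_pipeline(titles, [lowercase, strip_ws, drop_plural_s])
print(result.tolist())
```

Because each step only depends on the previous output, commenting a function out of the list (as with remove_stopwords above) simply skips that step.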

Checking popular words

Checking the top words takes just one line of code, and I like to do it to see whether there are more words I should add to the stop words list. Texthero doesn't have built-in bar charts yet (the only chart it offers is a scatter plot), so I'll use Plotly Express to visualize the top words in a bar chart.

```python
import plotly.express as px

# Count word frequencies in the cleaned titles and keep the 10 most common
tw = hero.visualization.top_words(df['clean_title']).head(10)

# Plot the counts as a bar chart
fig = px.bar(tw)
fig.show()

tw.head()
```
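If you'd rather count words without Texthero, a rough equivalent for whitespace-separated text is a split, explode, and value count in pandas. This is a hedged sketch: `top_words` here is a hypothetical helper, Texthero's own function may tokenize differently, and the sample titles are made up.

```python
import pandas as pd


def top_words(s: pd.Series) -> pd.Series:
    """Count how often each whitespace-separated word appears across the Series."""
    # split() gives one list per row, explode() gives one word per row,
    # and value_counts() sorts the counts in descending order
    return s.str.split().explode().value_counts()


titles = pd.Series(['teacher school fall', 'school reopen', 'teacher union school'])
tw = top_words(titles).head(10)
print(tw)
```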

Adding new stop words

Stemming the words and adding "'" (note that it shows up in the top words between teachers and students) to the stop words should surface more unique words. Stemming is already in the custom pipeline, but the stop words still need to be added. They can be added to the default set by taking the union of the two sets:

```python
from texthero import stopwords

default_stopwords = stopwords.DEFAULT

# Add the custom stop words to the default set
custom_stopwords = default_stopwords.union(set(["'"]))

# Call remove_stopwords and pass it the custom_stopwords set
df['clean_title'] = hero.remove_stopwords(df['clean_title'], custom_stopwords)
```

Note that custom_stopwords is passed to hero.remove_stopwords(). I'll re-plot the top words and check the results!

The results look a little better after applying stemming and the additional stop words!
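Since `stopwords.DEFAULT` supports `.union()`, it behaves as a set: the call returns a new set with the extra entries and leaves the default untouched. The sketch below shows the same idea on a tiny made-up default set, with a simple whitespace-based `remove_stopwords` stand-in. Both are hypothetical; Texthero's own remove_stopwords may tokenize differently.

```python
import pandas as pd

# Hypothetical tiny default set; texthero's stopwords.DEFAULT is far larger
default_stopwords = {'the', 'to', 'and'}

# union() returns a new set; default_stopwords itself is not modified
custom_stopwords = default_stopwords.union(set(["'"]))


def remove_stopwords(s: pd.Series, words: set) -> pd.Series:
    """Hypothetical helper: drop whitespace-separated tokens found in `words`."""
    return s.map(lambda text: ' '.join(tok for tok in text.split() if tok not in words))


titles = pd.Series(["teacher ' s back to school", 'help the kids and parents'])
cleaned = remove_stopwords(titles, custom_stopwords)
print(cleaned.tolist())
```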

Building a pipeline

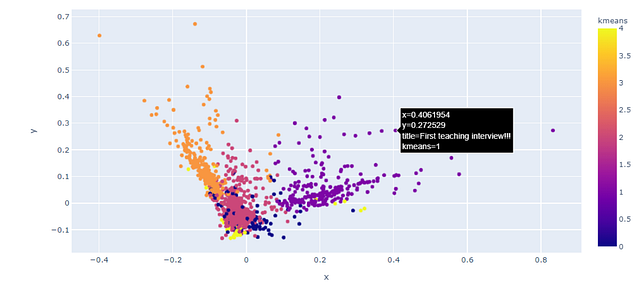

Thanks to pandas' .pipe(), chaining Texthero module components together is very easy. To visualize the titles, I'm going to use Principal Component Analysis (PCA) to compress the vector space. I'm also going to run K-means clustering to add color. Remember that Texthero takes a Series as input and returns a Series as output, so I can assign the output to a new column in the DataFrame.
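Conceptually, `s.pipe(f).pipe(g)` is just `g(f(s))`, which is why functions that take and return a Series chain so neatly. The minimal sketch below illustrates this with hypothetical numpy stand-ins, `simple_tfidf` and `simple_pca`, rather than Texthero's own functions: it uses an unnormalized, unsmoothed TF-IDF and PCA via singular value decomposition, and, like the pca column in this article, stores one list of coordinates per row. The sample titles are made up.

```python
import numpy as np
import pandas as pd


def simple_tfidf(s: pd.Series) -> pd.Series:
    """Hypothetical stand-in for hero.tfidf: one TF-IDF vector (a list) per row."""
    docs = s.str.split()
    vocab = sorted({word for doc in docs for word in doc})
    position = {word: i for i, word in enumerate(vocab)}

    # Raw term counts: one row per document, one column per vocabulary word
    counts = np.zeros((len(docs), len(vocab)))
    for row, doc in enumerate(docs):
        for word in doc:
            counts[row, position[word]] += 1

    # Unsmoothed inverse document frequency: ln(N / df) + 1
    doc_freq = (counts > 0).sum(axis=0)
    idf = np.log(len(docs) / doc_freq) + 1
    return pd.Series([vec.tolist() for vec in counts * idf], index=s.index)


def simple_pca(s: pd.Series, n_components: int = 2) -> pd.Series:
    """Hypothetical stand-in for hero.pca: project row vectors onto the top principal components."""
    X = np.array(s.tolist())
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, sorted by decreasing variance
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    coords = X_centered @ Vt[:n_components].T
    return pd.Series([point.tolist() for point in coords], index=s.index)


titles = pd.Series(['school reopen fall', 'teacher union school',
                    'remote learning fall', 'teacher remote learning'])

# .pipe(f) calls f(series), so this chain equals simple_pca(simple_tfidf(titles))
coords = titles.pipe(simple_tfidf).pipe(simple_pca)
print(coords)
```

One design note on the Texthero code that follows: each `.pipe(hero.tfidf)` call computes TF-IDF from scratch, so the two chains compute it twice. Storing the TF-IDF result in its own column and piping that into both hero.pca and hero.kmeans would avoid the repeated work.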

```python
# Add PCA values to the DataFrame to use as visualization coordinates
df['pca'] = (
    df['clean_title']
    .pipe(hero.tfidf)
    .pipe(hero.pca)
)

# Add K-means cluster labels to the DataFrame
df['kmeans'] = (
    df['clean_title']
    .pipe(hero.tfidf)
    .pipe(hero.kmeans)
)

df.head()
```

PCA and K-means clustering were applied with just a few lines of code! Now the data can be visualized with hero.scatterplot():

```python
# Generate the scatter plot
hero.scatterplot(df, 'pca', color='kmeans', hover_data=['title'])
```

Since it uses Plotly, the scatter plot is interactive, as you'd expect, and you can zoom in as needed. Now it's time to explore the visualized results and see what insights can be gleaned!
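hero.scatterplot() reads both coordinates from the list-valued pca column. If you want to plot with another tool, a minimal sketch (assuming each pca entry is a two-element list, as in this article) splits it into ordinary numeric columns. The frame below is a made-up stand-in with the same column layout, and `pca_x`/`pca_y` are hypothetical column names.

```python
import pandas as pd

# Made-up frame shaped like the article's: 'pca' holds [x, y] lists
df = pd.DataFrame({
    'title': ['Post A', 'Post B'],
    'pca': [[0.5, -1.0], [-0.5, 1.0]],
    'kmeans': [0, 1],
})

# Expand the two-element lists into separate numeric columns, aligned on the index
xy = pd.DataFrame(df['pca'].tolist(), index=df.index, columns=['pca_x', 'pca_y'])
df = df.join(xy)
print(df)
```

The new columns can then be passed to, for example, `px.scatter(df, x='pca_x', y='pca_y', color='kmeans', hover_data=['title'])`.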

Final thoughts

Although Texthero is still in beta, I see a promising future for it and hope it gets the love it deserves. The library makes cleaning and preparing text in pandas DataFrames a breeze. I'd like to see a few more visualization options added, but the scatter plot is a great start.

Full code

Thank you for reading. Here's the full code:

```python
import praw
import pandas as pd
import plotly.express as px
import texthero as hero
from texthero import preprocessing, stopwords

from config import cid, csec, ua  # Credentials for PRAW

# Create a connection to Reddit
reddit = praw.Reddit(client_id=cid,
                     client_secret=csec,
                     user_agent=ua)

# List used to build the DataFrame
posts = []

# Fetch up to 1000 new posts from r/teaching
new = reddit.subreddit('teaching').new(limit=1000)

# Keep the attributes of interest
for post in new:
    posts.append([post.title, post.score, post.num_comments, post.selftext,
                  post.created, post.pinned, post.total_awards_received])

# Build the DataFrame and show the first 3 rows
df = pd.DataFrame(posts, columns=['title', 'score', 'comments', 'post',
                                  'created', 'pinned', 'total awards'])
df.head(3)

# Custom cleaning pipeline
custom_pipeline = [preprocessing.fillna,
                   preprocessing.lowercase,
                   preprocessing.remove_digits,
                   preprocessing.remove_punctuation,
                   preprocessing.remove_diacritics,
                   preprocessing.remove_stopwords,
                   preprocessing.remove_whitespace,
                   preprocessing.stem]

df['clean_title'] = hero.clean(df['title'], pipeline=custom_pipeline)
df.head()

# Extend the default stop words and remove them
default_stopwords = stopwords.DEFAULT
custom_stopwords = default_stopwords.union(set(["'"]))
df['clean_title'] = hero.remove_stopwords(df['clean_title'], custom_stopwords)

# Plot the 10 most frequent words
tw = hero.visualization.top_words(df['clean_title']).head(10)
fig = px.bar(tw)
fig.show()

# PCA coordinates and K-means clusters
df['pca'] = (
    df['clean_title']
    .pipe(hero.tfidf)
    .pipe(hero.pca)
)

df['kmeans'] = (
    df['clean_title']
    .pipe(hero.tfidf)
    .pipe(hero.kmeans)
)

hero.scatterplot(df, 'pca', color='kmeans', hover_data=['title'])
```

To apply the cleaning to every column of a DataFrame instead:

```python
import pandas as pd
import texthero

df = pd.read_excel('data.xlsx')

# Fill missing values first; otherwise astype(str) turns NaN into the string 'nan'
df = df.fillna('').astype(str)


# Function applied to each column
def clean_column(column):
    return texthero.clean(column)


# Apply the function to each column of the DataFrame
df = df.apply(clean_column)
df
```
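Because every column is converted to strings and cleaned, numeric columns get treated as text too. A variant that only cleans the non-numeric columns is sketched below. `simple_clean` is a hypothetical stand-in for `texthero.clean` (it only fills missing values, lowercases, and collapses whitespace) so the example runs without Texthero, and the data is made up.

```python
import pandas as pd


def simple_clean(column: pd.Series) -> pd.Series:
    """Hypothetical stand-in for texthero.clean: lowercase and collapse whitespace."""
    return (column.fillna('')
            .astype(str)
            .str.lower()
            .str.replace(r'\s+', ' ', regex=True)
            .str.strip())


df = pd.DataFrame({
    'title': ['  Back To  School ', None],
    'body': ['Hello   World', 'Remote   LEARNING'],
    'score': [10, 3],
})

# Clean only the non-numeric columns; numeric columns are left as-is
text_cols = df.select_dtypes(exclude='number').columns
df[text_cols] = df[text_cols].apply(simple_clean)
print(df)
```

Swapping `simple_clean` for `texthero.clean` gives the same column-wise behavior with Texthero's full default pipeline.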