Teaching AI to play the card game Magic: The Gathering

Over the past six months I have written several times about fine-tuning. Fine-tuning is a very tempting technology: it promises to fill the gaps in GPT-4’s capabilities while being faster and cheaper. However, no matter how often fine-tuning is discussed, I found surprisingly little content that would help me understand how effective it is and how difficult it is to teach language models new capabilities.

So I decided to take matters into my own hands, dust off my ML skills and figure it out on my own.

Selecting a task

I was particularly interested in testing a model’s ability to reason (that is, to perform fairly complex tasks that require a good understanding of the context) about out-of-distribution data (that is, data the model has not seen). Ultimately I chose my hobby: the trading card game Magic: The Gathering, and in particular draft.

Let me explain for those not in the know: Magic: The Gathering is a strategy trading card game in which players use decks of cards representing creatures and spells to battle their opponents. One way to play Magic (and my favorite) is draft, in which players build their decks by taking turns selecting individual cards from a pool of random cards.

Draft perfectly meets my criteria:

Reasoning: Picking a card from a randomized pack is a fairly serious test of skill that requires a holistic understanding of the context (for example, the previously selected cards and the cards available in the current pack).

Out of distribution: New Magic cards are released approximately 4-6 times a year, and the newest cards are not in the LLMs’ training corpora.

Another important aspect: data. There is an amazing service, 17lands, that stores a huge amount of historical data: players use the 17lands tracker to record draft data from Magic’s digital client. From this data you can extract “ground truth” by examining the draft picks of the top players on the service (sorted by win rate). The data is pretty fuzzy (many great Magic players argue about the right picks all the time), but it’s a good enough signal to test an LLM’s ability to learn a new task.

If you are curious about the details of the data, here is an example of what 17lands data looks like after conversion into a prompt for an LLM.

Results + conclusion

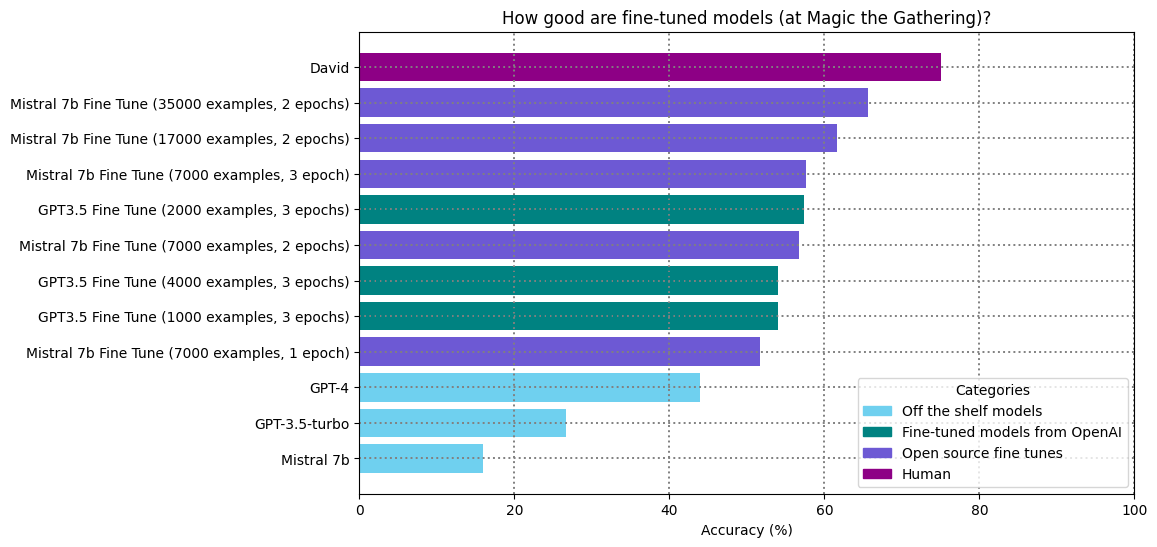

Let’s jump straight into the results and then dive into the conclusions and thoughts:

Thoughts:

The fine-tuned 7B parameter model confidently beats GPT-4 and approaches human (or at least the author’s) level of accuracy in performing this task.

It looks like a fine-tuned GPT-3.5 would perform even better, but fine-tuning GPT-3.5 is very expensive: about a hundred times more than fine-tuning Mistral on bare metal, plus the additional cost of each inference. A GPT-3.5 fine-tuning run equivalent to my largest Mistral-7B run would cost about $500, which makes for an expensive experiment.

Fine-tuning is still something of an art. I had hoped it would feel closer to engineering than to science, but it takes a lot of experiments. In particular, prompt engineering with the long feedback loop of fine-tuning is a pain. More on this below.

Even small open-source models are huge by the standards of five years ago. It’s one thing to read “7 billion parameters”, but quite another to fit 7 billion parameters and all the associated math onto a GPU.

I did an interesting experiment: I fine-tuned the model on one set of cards and then evaluated its accuracy on a set of cards it had not seen before. It seems the model generalized the concept of drafting rather than just memorizing which cards were good.

Field Observation Journal: Methods and Lessons

Data

Creating a text dataset: The 17lands draft dataset is a huge CSV file that describes users’ sequences of draft picks in approximately this format:

Cards available in the current pack

Cards that the drafter has previously selected

The card the drafter selected from this pack

To make this data suitable for fine-tuning the language model, it must be converted to text; I ended up using the assistant format popularized by OpenAI:

{
  "messages": [
    {
      "role": "system",
      "content": "You are DraftGPT, a Magic the Gathering Hall of Famer and helpful AI assistant that helps players choose what card to pick during a draft. You are a master of the current draft set, and know every card well.\n\nWhen asked for a draft pick, respond with the card's name first."
    },
    {
      "role": "user",
      "content": "In our Magic the Gathering draft, we're on pack 2 pick 13. These are the contents of our pool so far:\n-------------------------\nEvolving Wilds -- (common)\nRat Out -- {B} (common)\nNot Dead After All -- {B} (common)\nHopeless Nightmare -- {B} (common)\nBarrow Naughty -- {1}{B} (common)\nUnassuming Sage -- {1}{W} (common)\nThe Witch's Vanity -- {1}{B} (uncommon)\nSpell Stutter -- {1}{U} (common)\nMintstrosity -- {1}{B} (common)\nWater Wings -- {1}{U} (common)\nBarrow Naughty -- {1}{B} (common)\nGadwick's First Duel -- {1}{U} (uncommon)\nBitter Chill -- {1}{U} (uncommon)\nThe Princess Takes Flight -- {2}{W} (uncommon)\nStockpiling Celebrant -- {2}{W} (common)\nVoracious Vermin -- {2}{B} (common)\nDevouring Sugarmaw // Have for Dinner -- {2}{B}{B} // {1}{W} (rare)\nMisleading Motes -- {3}{U} (common)\nJohann's Stopgap -- {3}{U} (common)\nBesotted Knight // Betroth the Beast -- {3}{W} // {W} (common)\nThreadbind Clique // Rip the Seams -- {3}{U} // {2}{W} (uncommon)\nTwining Twins // Swift Spiral -- {2}{U}{U} // {1}{W} (rare)\nEriette's Whisper -- {3}{B} (common)\nFarsight Ritual -- {2}{U}{U} (rare)\nTwisted Sewer-Witch -- {3}{B}{B} (uncommon)\nInto the Fae Court -- {3}{U}{U} (common)\n-------------------------\n\nTo keep track of what colors are open, you've counted how many cards of each color identity you've seen in the last 5 packs. Here is the breakdown:\nW: 11\nB: 6\nG: 4\nRW: 1\nR: 2\n\nThese are the contents of the pack:\n-------------------------\nCut In -- {3}{R}\nSorcery (common)\nCut In deals 4 damage to target creature.\nCreate a Young Hero Role token attached to up to one target creature you control. (If you control another Role on it, put that one into the graveyard. Enchanted creature has \"Whenever this creature attacks, if its toughness is 3 or less, put a +1/+1 counter on it.\")\n-------------------------\nSkewer Slinger -- {1}{R}\nCreature — Dwarf Knight (common)\nReach\nWhenever Skewer Slinger blocks or becomes blocked by a creature, Skewer Slinger deals 1 damage to that creature.\n1/3\n-------------------------\n\nWhat card would you pick from this pack?"
    },
    {
      "role": "assistant",
      "content": "Cut In"
    }
  ]
}

This immediately demonstrates the most difficult aspect of fine-tuning: formatting the data to get the desired result is a difficult and inherently experimental task.
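As a rough illustration of the conversion step, here is a minimal sketch that turns one draft pick into a chat-format training example. The input dictionary layout (`pack_number`, `pick_number`, `pool`, `pack`, `pick`) and the function name `format_pick` are assumptions made for this sketch, not the real 17lands column names, and the prompt is a simplified version of the one shown above (it omits the color-count section).

```python
import json

# System prompt copied from the example in this article.
SYSTEM_PROMPT = (
    "You are DraftGPT, a Magic the Gathering Hall of Famer and helpful AI "
    "assistant that helps players choose what card to pick during a draft. "
    "You are a master of the current draft set, and know every card well.\n\n"
    "When asked for a draft pick, respond with the card's name first."
)

SEPARATOR = "-" * 25


def format_pick(pick: dict) -> dict:
    """Convert one pick (hypothetical dict layout) into a chat-format example."""
    pool_text = "\n".join(pick["pool"])
    pack_text = f"\n{SEPARATOR}\n".join(pick["pack"])
    user_content = (
        f"In our Magic the Gathering draft, we're on pack {pick['pack_number']} "
        f"pick {pick['pick_number']}. These are the contents of our pool so far:\n"
        f"{SEPARATOR}\n{pool_text}\n{SEPARATOR}\n\n"
        f"These are the contents of the pack:\n"
        f"{SEPARATOR}\n{pack_text}\n{SEPARATOR}\n\n"
        "What card would you pick from this pack?"
    )
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_content},
            # The human drafter's actual pick is the training target.
            {"role": "assistant", "content": pick["pick"]},
        ]
    }


example = format_pick(
    {
        "pack_number": 2,
        "pick_number": 13,
        "pool": ["Evolving Wilds -- (common)", "Rat Out -- {B} (common)"],
        "pack": ["Cut In -- {3}{R}\nSorcery (common)", "Skewer Slinger -- {1}{R}"],
        "pick": "Cut In",
    }
)

# One JSON object per line is the usual layout for a JSONL training file.
jsonl_line = json.dumps(example)
```

Writing one such line per pick gives a JSONL file; which details go into the user message is exactly the part that needs experimentation.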

Today, most people are familiar with prompt engineering: the experimental process of modifying a prompt to get the best possible accuracy out of a language model. With fine-tuning, the prompt engineering process is a hundred times slower. Typically, testing a change takes many hours. This significantly slows down experiments and makes fine-tuning almost as difficult as classical machine learning.

To illustrate this with a Magic draft problem, I tested the following:

Approximately five prompt formats, differing in particular in the level of detail shown for each card

Adding additional context about the last few draft cards chosen to create a “memory”

Including “general card information” training examples in which the model is asked to remember details about new cards

I’ve spent about forty hours experimenting, but I still can’t say with certainty which format is “best” for this task. A lot of experimentation is still required.
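To make the first of those dimensions concrete, here is a small sketch of rendering the same card at different levels of detail. The `Card` fields and the three level names are hypothetical. The `short` and `full` layouts imitate how pool and pack cards appear in the example prompt earlier, and are not necessarily the formats I actually compared.

```python
from dataclasses import dataclass


@dataclass
class Card:
    # Hypothetical field names, chosen for this sketch only.
    name: str
    mana_cost: str
    type_line: str
    rarity: str
    rules_text: str
    stats: str = ""  # power/toughness, empty for non-creatures


def render_card(card: Card, detail: str) -> str:
    """Render a card as prompt text at the requested level of detail."""
    if detail == "name":
        return card.name
    if detail == "short":
        # One line per card, like the pool listing in the example prompt.
        return f"{card.name} -- {card.mana_cost} ({card.rarity})"
    if detail == "full":
        # Full card text, like the pack listing in the example prompt.
        lines = [
            f"{card.name} -- {card.mana_cost}",
            f"{card.type_line} ({card.rarity})",
            card.rules_text,
        ]
        if card.stats:
            lines.append(card.stats)
        return "\n".join(lines)
    raise ValueError(f"unknown detail level: {detail!r}")


skewer_slinger = Card(
    name="Skewer Slinger",
    mana_cost="{1}{R}",
    type_line="Creature — Dwarf Knight",
    rarity="common",
    rules_text=(
        "Reach\nWhenever Skewer Slinger blocks or becomes blocked by a "
        "creature, Skewer Slinger deals 1 damage to that creature."
    ),
    stats="1/3",
)

short_text = render_card(skewer_slinger, "short")
full_text = render_card(skewer_slinger, "full")
```

Each format change like this means regenerating the dataset and starting a new training run, which is where the hours go.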

Performing fine tuning

Finding a GPU: It goes without saying, but it sucks! Availability is poor in most places. I ended up renting a GPU by the hour on Runpod (an RTX 4090 with 24 GB of VRAM) for about $0.70 per hour.

Fine-tuning script: This is not my first experience with machine learning, so I instinctively decided to write my own training script with HuggingFace transformers + PEFT. Given how constrained I was on GPU, QLoRA seemed like a good choice.

It turns out that writing your own script is a bad idea! There is a whole bunch of small optimizations and options, ranging from quite simple to ones that require reading research papers. Nothing insurmountable, but it would take a long time to figure out on your own.

I ended up using axolotl, which implements many of these optimizations and is much simpler (and faster) to work with. Its documentation is quite good, and I think it’s a good starting point for people learning how to fine-tune LLMs.

Note about models: LLMs are huge! The last time I trained models regularly (in 2019), BERT had approximately 110 million parameters; today even “small” LLMs are seventy times larger. Models this large are unwieldy by nature. Weights of 16 GB make them cumbersome to store, and GPU memory is a scarce resource even with techniques like QLoRA. It’s no wonder that top researchers are in such high demand; at the largest scale this is very difficult work.
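The arithmetic behind that is simple enough to sketch. This is a back-of-the-envelope estimate only: it assumes a round 7 billion parameters, counts the weights alone (no activations, gradients, optimizer state, or quantization overhead), and the helper name `weight_gigabytes` is made up for the example. The exact parameter count and file format shift the number, which is why a real checkpoint lands in the same ballpark rather than on exactly this figure.

```python
def weight_gigabytes(n_params: float, bits_per_param: int) -> float:
    """Approximate size of the raw weights in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 7e9  # a round "7B"; an assumption, not an exact parameter count

estimates = {
    "float32": weight_gigabytes(N_PARAMS, 32),
    "float16 / bfloat16": weight_gigabytes(N_PARAMS, 16),
    "4-bit (the base-model precision QLoRA uses)": weight_gigabytes(N_PARAMS, 4),
}

for precision, gigabytes in estimates.items():
    print(f"{precision}: ~{gigabytes:.1f} GB")
```

On a 24 GB card, 16-bit weights leave little room for anything else, while 4-bit weights leave headroom for the adapter, activations, and optimizer state.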

Evaluation

Start with evaluation: My previous machine learning work taught me a lesson that many prompt-engineering wizards have not yet absorbed: before starting experiments, you need to build a good evaluation. In my experiment the evaluation was fairly straightforward (hold a few full drafts out of the training data and check whether the model picks the same card as the human did in the held-out data), but having a good evaluation set makes fine-tuning a lot easier.
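The evaluation described above can be sketched in a few lines. The pick dictionaries (with `draft_id` and `pick` keys), the function names, and the `predict` callable standing in for a model call are all assumptions made for this sketch. The one design point worth copying is that the split is made per draft, not per pick, so no picks from a held-out draft leak into training.

```python
import random


def split_by_draft(picks, holdout_fraction=0.1, seed=0):
    """Hold out whole drafts so train and test never share a draft."""
    draft_ids = sorted({p["draft_id"] for p in picks})
    random.Random(seed).shuffle(draft_ids)
    n_holdout = max(1, int(len(draft_ids) * holdout_fraction))
    held_out = set(draft_ids[:n_holdout])
    train = [p for p in picks if p["draft_id"] not in held_out]
    test = [p for p in picks if p["draft_id"] in held_out]
    return train, test


def extract_pick(response: str) -> str:
    """Take the first line as the card name (the system prompt asks the
    model to respond with the card's name first)."""
    stripped = response.strip()
    return stripped.splitlines()[0].strip() if stripped else ""


def pick_accuracy(test_picks, predict) -> float:
    """Fraction of held-out picks where the model names the human's card."""
    if not test_picks:
        return 0.0
    hits = sum(extract_pick(predict(p)) == p["pick"] for p in test_picks)
    return hits / len(test_picks)


# Toy data: 10 drafts with 3 picks each.
toy_picks = [
    {"draft_id": f"draft-{d}", "pick_number": n, "pick": f"Card {d}-{n}"}
    for d in range(10)
    for n in range(3)
]
train_picks, test_picks = split_by_draft(toy_picks, holdout_fraction=0.2)

# A stand-in "model" that always agrees with the human, plus commentary.
perfect = lambda p: f"{p['pick']}\nIt fits our colors."
accuracy = pick_accuracy(test_picks, perfect)
```

In a real run, `predict` would build the prompt for the pick and call the fine-tuned model.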

Some criteria for language models are difficult to formulate: The “pick the right card” problem is easy enough to define for Magic drafts, but there are also fuzzier things I would like the final model to do:

When it picks differently from the human, its choices should still be sensible

It would be great if the model could intelligently explain “why” it chose a particular option

Each of these problems is much more difficult to formulate, and in the end I tested them by eye, looking through a bunch of examples, which was slow. For what it’s worth, GPT-4 makes fewer “weird” picks and justifies its choices better than the smaller, fine-tuned models.

Main conclusions

My two most important takeaways from this experiment:

Fine-tuning on new data can be remarkably effective, easily outperforming in-context learning with GPT-4 in both accuracy and cost.

Getting fine-tuning “right” requires a process of experimentation, and this is a separate skill set in itself (one that is harder to master than prompt engineering).

Oh yes, and about Magic

How do the bots do as drafters? Pretty well!

I connected the draft selection model to the logs generated by Magic Arena, wrote a small Electron application and ran several drafts with Magic Copilot:

Some features:

The pick is generated by the fine-tuned model, but the commentary is generated by GPT-4. Most of the time this works fine, but from time to time GPT-4 disagrees with the fine-tuned model’s pick and contradicts it

I connected eight draft AIs to a simulated draft (meaning all the bots drafted against each other). When passing packs to one another, they showed a strange behavior: an unusual tendency to draft mono-colored decks. When one of the drafters is a human, they usually converge on much more normal decks.
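For readers who have not drafted, the simulated table works roughly like the sketch below. It is a toy version under stated assumptions: packs are random draws from a list of card names (real packs follow rarity rules), the `pick_fn(pool, pack)` interface is made up, and the picker used here just takes the first card where the real setup would call the fine-tuned model.

```python
import random


def simulate_draft(pick_fn, card_names, n_players=8, n_packs=3,
                   pack_size=15, seed=0):
    """Run a toy draft and return each seat's pool of picked cards."""
    rng = random.Random(seed)
    pools = [[] for _ in range(n_players)]
    for pack_round in range(n_packs):
        # Every seat opens a fresh pack at the start of each round.
        packs = [rng.choices(card_names, k=pack_size) for _ in range(n_players)]
        # Packs go left in rounds 1 and 3 and right in round 2.
        direction = 1 if pack_round % 2 == 0 else -1
        for _ in range(pack_size):
            for seat in range(n_players):
                choice = pick_fn(pools[seat], packs[seat])
                packs[seat].remove(choice)
                pools[seat].append(choice)
            # Each seat now receives the pack its neighbor just picked from.
            packs = [packs[(seat - direction) % n_players]
                     for seat in range(n_players)]
    return pools


def first_card_picker(pool, pack):
    """Placeholder policy; a real bot would prompt the model here."""
    return pack[0]


toy_cards = [f"Card {i}" for i in range(50)]
pools = simulate_draft(first_card_picker, toy_cards)
```

Because every seat runs the same policy, any quirk in that policy is repeated around the whole table, which may be part of why an all-bot draft looks different from one with a human in it.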

Overall, I would suggest that this is probably one of the most powerful and human-like draft AIs available today. Compared to the bots in Magic Arena’s Quick Draft feature, they are much more like a high-quality live drafter than a bot with heuristics.

Wizards of the Coast: if you want an extremely accurate and quite expensive-to-operate draft AI, drop me a line. I’ll be happy to send you an LLM!