Server-side WebRTC channel quality indicator over TCP

Publish and Play

There are two main server-side WebRTC features in video streaming: publishing and playback. When publishing, the video stream is captured from the webcam and travels from the browser to the server. During playback, the stream travels in the opposite direction, from the server to the browser, where it is decoded and played in an HTML5 video element on the device screen.

UDP and TCP

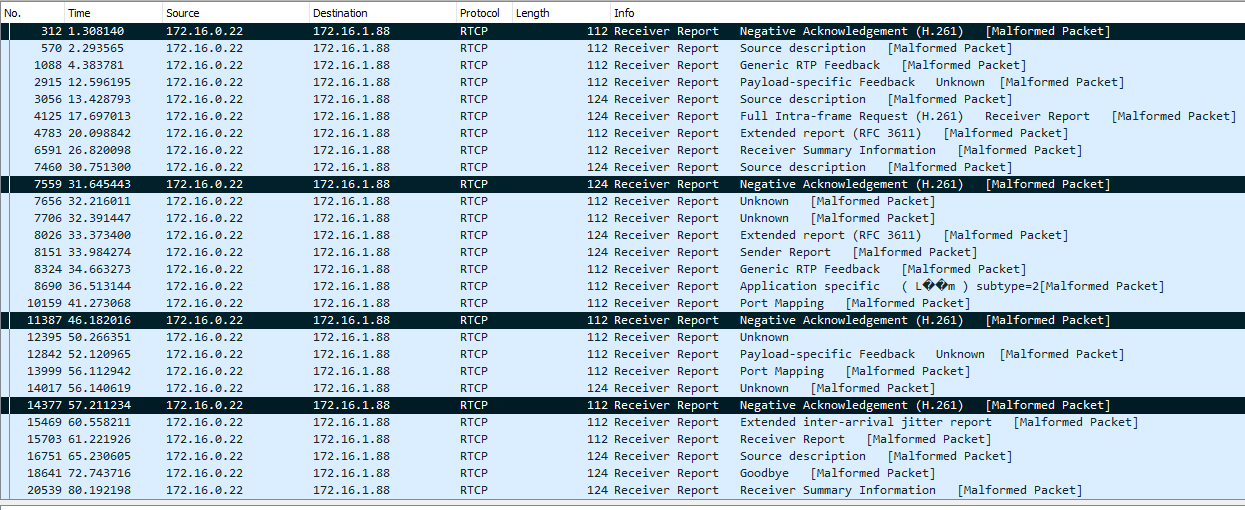

Video can be delivered over either of two transport protocols: TCP or UDP. With UDP, RTCP NACK feedbacks are actively used; they carry information about lost packets. Detecting degradation of a UDP channel is therefore a fairly simple task that comes down to counting NACKs (Negative Acknowledgements). The more NACK and PLI (Picture Loss Indication) feedbacks there are, the more real losses occur and the worse the channel quality is.
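As an illustration of the UDP case, the NACK and PLI counters are exposed by the browser's own WebRTC statistics: `nackCount` and `pliCount` are fields of `outbound-rtp` entries returned by `RTCPeerConnection.getStats()`. The snapshot interface, the `feedbackRate` and `udpQuality` helpers, and the threshold of 5 feedbacks per second below are hypothetical choices for this sketch, not values used by any particular server.

```typescript
// Simplified subset of an "outbound-rtp" video entry from RTCPeerConnection.getStats().
// In a browser it could be collected roughly like this (not runnable outside a browser):
//   (await pc.getStats()).forEach((s) => {
//     if (s.type === "outbound-rtp" && s.kind === "video") snap = { timestamp: s.timestamp, nackCount: s.nackCount, pliCount: s.pliCount };
//   });
interface FeedbackSnapshot {
  timestamp: number; // milliseconds
  nackCount: number; // cumulative NACKs received from the remote side
  pliCount: number; // cumulative PLIs received from the remote side
}

type UdpQuality = "GOOD" | "BAD";

// Hypothetical threshold: NACK + PLI feedbacks per second above which the channel is considered degraded.
const FEEDBACK_RATE_THRESHOLD = 5;

// Feedbacks per second between two consecutive snapshots.
function feedbackRate(prev: FeedbackSnapshot, curr: FeedbackSnapshot): number {
  const seconds = (curr.timestamp - prev.timestamp) / 1000;
  if (seconds <= 0) return 0;
  const feedbacks = (curr.nackCount - prev.nackCount) + (curr.pliCount - prev.pliCount);
  return feedbacks / seconds;
}

function udpQuality(prev: FeedbackSnapshot, curr: FeedbackSnapshot): UdpQuality {
  return feedbackRate(prev, curr) > FEEDBACK_RATE_THRESHOLD ? "BAD" : "GOOD";
}
```

Counting deltas rather than absolute counters matters because the counters are cumulative for the lifetime of the stream. Over TCP, as the next section explains, these counters stay flat even on a bad channel, so this check alone is not enough.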

TCP without NACK

In this article, we are more interested in TCP. When WebRTC runs over TCP, RTCP NACK feedbacks are not sent, and if they are, they do not reflect the real losses, so the channel quality cannot be determined from feedbacks. TCP is a transport protocol with guaranteed delivery. For this reason, when the channel degrades, lost packets are retransmitted at the transport protocol level. Sooner or later they are delivered, but no NACK is generated for these losses, because from the application's point of view nothing was lost. The packets simply arrive late. Late packets are not assembled into video frames and are discarded by the depacketizer, so the user sees a picture full of artifacts, something like this:

The feedbacks will look fine, yet the picture will be full of artifacts. Below are Wireshark traffic screenshots that illustrate the behavior of a published stream on throttled TCP and UDP channels, along with screenshots of Google Chrome statistics. They show that with TCP the NACK metric does not grow, unlike with UDP, even though the channel is in very bad shape.

TCP

UDP

Why stream over TCP if there is UDP?

A reasonable question. The answer: to push high resolutions through the channel. For example, when streaming VR (Virtual Reality), resolutions can start at 4K. A stream of that resolution with a bitrate of about 10 Mbps cannot be pushed through a regular channel without losses: the server emits UDP packets in bursts, they start getting lost in the network, then they are resent, and so on. Large bursts of lost video packets corrupt the video, and in the end the quality becomes poor. For this reason, on general-purpose networks and at high resolutions such as Full HD and 4K, WebRTC over TCP is used for video delivery to eliminate network packet loss at the cost of somewhat higher latency.

RTT to determine channel quality

So there is no metric that reliably tells you the channel is bad. Some developers try to rely on the RTT metric, but it is not available in all browsers and does not give accurate results.

Below is an illustration of how channel quality depends on RTT, according to the callstat project.

REMB Solution

We decided to approach the problem from a slightly different angle. On the server side, a REMB mechanism calculates the incoming bitrate of every incoming stream, computes its deviation from the average and, if the spread is large, asks the browser to lower the bitrate by sending special REMB messages over RTCP. The browser receives such a message and reduces the video encoder bitrate to the recommended value: this is how protection against channel overload and incoming stream degradation works. So a bitrate calculation mechanism is already implemented on the server side. Averaging and spread estimation are done with a Kalman filter, which makes it possible to read the current bitrate at any moment with high accuracy and to filter out strong deviations.
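The article does not publish the server's filter, so the following is only a generic one-dimensional Kalman filter with a random-walk model, the textbook form commonly used to smooth a noisy bitrate series. The class name `ScalarKalman` and the noise variances `q` and `r` are illustrative assumptions; the actual filter and its tuning on the server may differ.

```typescript
// Generic scalar Kalman filter (illustrative, not the server's actual implementation).
// State model:       x_k = x_{k-1} + w,  w ~ N(0, q)  (the true bitrate drifts slowly)
// Measurement model: z_k = x_k + v,      v ~ N(0, r)  (each measured bitrate is noisy)
class ScalarKalman {
  private x: number; // current bitrate estimate, bps
  private p: number; // variance of the estimate
  private readonly q: number; // process noise variance
  private readonly r: number; // measurement noise variance

  constructor(q: number, r: number, initialEstimate: number, initialVariance: number) {
    this.q = q;
    this.r = r;
    this.x = initialEstimate;
    this.p = initialVariance;
  }

  // Feeds one raw bitrate measurement and returns the smoothed estimate.
  update(measurement: number): number {
    // Predict: the estimate stays the same, its uncertainty grows by q.
    this.p += this.q;
    // Correct: the Kalman gain decides how much to trust the new measurement.
    const gain = this.p / (this.p + this.r);
    this.x += gain * (measurement - this.x);
    this.p *= 1 - gain;
    return this.x;
  }
}

// Usage: converge on a steady 1 Mbps stream, then feed one 5 Mbps spike.
const filter = new ScalarKalman(1e8, 1e10, 0, 1e12);
let estimate = 0;
for (let i = 0; i < 50; i++) estimate = filter.update(1e6);
const afterSpike = filter.update(5e6);
console.log(Math.round(estimate), Math.round(afterSpike));
```

Choosing `r` much larger than `q` tells the filter that single measurements are unreliable, so a one-off spike moves the estimate only by a fraction of its size, at the cost of reacting more slowly to a genuine bitrate change.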

The reader will probably ask: “What does knowing the bitrate the server sees on its incoming stream give us?” It tells us the actual bitrate at which the video arrives at the server. To evaluate channel quality, one more component is required.

Outgoing bitrate and why it is important

The statistics of the publishing WebRTC stream show the bitrate at which the video stream leaves the browser. As in the old joke, the admin testing a machine gun says: “On my side the bullets flew out. The problem is on yours.” The idea of the channel quality check is to compare two bitrates: 1) the bitrate the browser sends and 2) the bitrate the server actually receives.

The admin fires bullets and measures how fast they leave on his side. The server measures how fast they arrive on its side. There is one more participant in this story: TCP, a superhero standing between the admin and the server who can hold up bullets at random. For example, it can stop 10 random bullets out of a hundred for 2 seconds and then let them fly again. Just like in The Matrix.

On the browser side, we take the current bitrate from the WebRTC statistics, smooth the graph with a JavaScript implementation of the Kalman filter, and get a smoothed client browser bitrate as output. Now we have almost everything we need: the client bitrate tells us how the traffic leaves the browser, and the server bitrate tells us how the server sees this traffic and at what bitrate it receives it. Obviously, if the client bitrate stays high while the server bitrate starts to sag relative to it, then not all the “bullets” have arrived and the server does not actually see part of the traffic sent to it. From this we conclude that something is wrong with the channel and it is time to switch the indicator to red.
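The comparison described above can be sketched as follows, assuming the server's bitrate samples are already available on the client (how the server reports them is not covered here) and that both series are already smoothed and time-aligned. `bytesSent` and `timestamp` are real fields of `outbound-rtp` stats; `bitrateBps`, `channelIndicator` and the 0.8 delivery ratio are hypothetical names and values chosen for illustration.

```typescript
// Simplified subset of an "outbound-rtp" video entry from RTCPeerConnection.getStats().
// In a browser it could be collected roughly like this (not runnable outside a browser):
//   (await pc.getStats()).forEach((s) => {
//     if (s.type === "outbound-rtp" && s.kind === "video") snap = { timestamp: s.timestamp, bytesSent: s.bytesSent };
//   });
interface SentBytesSnapshot {
  timestamp: number; // milliseconds
  bytesSent: number; // cumulative byte counter
}

// Client outgoing bitrate in bits per second between two consecutive snapshots.
function bitrateBps(prev: SentBytesSnapshot, curr: SentBytesSnapshot): number {
  const seconds = (curr.timestamp - prev.timestamp) / 1000;
  if (seconds <= 0) return 0;
  return (8 * (curr.bytesSent - prev.bytesSent)) / seconds;
}

type ChannelIndicator = "PERFECT" | "BAD";

// Hypothetical threshold: the server must see at least 80% of what the client sends.
const MIN_DELIVERY_RATIO = 0.8;

// Compares smoothed, time-aligned client and server bitrate samples (bps) over a window.
function channelIndicator(clientBps: number[], serverBps: number[], minRatio: number = MIN_DELIVERY_RATIO): ChannelIndicator {
  const n = Math.min(clientBps.length, serverBps.length);
  let sent = 0;
  let received = 0;
  for (let i = 0; i < n; i++) {
    sent += clientBps[i];
    received += serverBps[i];
  }
  if (sent === 0) return "PERFECT"; // nothing was sent, so nothing can be missing
  return received / sent >= minRatio ? "PERFECT" : "BAD";
}
```

Evaluating the ratio over a window of samples rather than a single point keeps the indicator from flickering on momentary dips that the smoothing has not fully removed.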

That's not all

The two graphs are correlated but slightly shifted in time relative to each other. To correlate them fully, the time series must be aligned so that the client and server bitrates are compared at the same point in time on historical data. The desync looks something like this:

And this is what the time-synchronized chart looks like.
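One simple way to find the shift between the two graphs, sketched here under the assumption that both series are sampled at the same fixed interval and that the server series lags behind the client one, is to try every lag up to some maximum and keep the lag with the highest Pearson correlation. `estimateLag`, `alignSeries` and `maxLag` are hypothetical names; the article does not say how the alignment is actually performed.

```typescript
// Pearson correlation of two equally long series.
function pearson(a: number[], b: number[]): number {
  const n = Math.min(a.length, b.length);
  if (n < 2) return 0;
  let meanA = 0;
  let meanB = 0;
  for (let i = 0; i < n; i++) {
    meanA += a[i];
    meanB += b[i];
  }
  meanA /= n;
  meanB /= n;
  let cov = 0;
  let varA = 0;
  let varB = 0;
  for (let i = 0; i < n; i++) {
    const da = a[i] - meanA;
    const db = b[i] - meanB;
    cov += da * db;
    varA += da * da;
    varB += db * db;
  }
  if (varA === 0 || varB === 0) return 0; // a flat series has no shape to match
  return cov / Math.sqrt(varA * varB);
}

// Returns the lag d (in samples) for which server[i + d] best matches client[i].
function estimateLag(client: number[], server: number[], maxLag: number): number {
  let bestLag = 0;
  let bestCorr = -Infinity;
  for (let d = 0; d <= maxLag; d++) {
    const n = Math.min(client.length, server.length - d);
    if (n < 2) break;
    const corr = pearson(client.slice(0, n), server.slice(d, d + n));
    if (corr > bestCorr) {
      bestCorr = corr;
      bestLag = d;
    }
  }
  return bestLag;
}

// Cuts both series so that sample i of each refers to the same moment.
function alignSeries(client: number[], server: number[], lag: number): [number[], number[]] {
  const n = Math.max(0, Math.min(client.length, server.length - lag));
  return [client.slice(0, n), server.slice(lag, lag + n)];
}
```

Correlation compares the shape of the two curves rather than their absolute level, so the lag can be found even while the server is already receiving less than the client sends; the aligned series can then be compared sample by sample.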

Testing

Now all that remains is to test it. We publish a video stream and open the chart of published bitrates on the browser side and on the server side. The graphs match almost perfectly, and the indicator shows PERFECT.

Next, we start degrading the channel. For this you can use free tools such as winShaper on Windows or Network Link Conditioner on macOS, which let you throttle the channel to a given bandwidth. For example, if we know that a 640×480 stream can ramp up to 1 Mbps, we throttle the channel to 300 kbps. Keep in mind that we are working over TCP. We check the result: the graphs show an inverse correlation and the indicator drops to BAD. Indeed, the browser keeps sending data and even raises the bitrate, trying to push a new portion of traffic into the network. This data gets stuck in the network as retransmissions and does not reach the server, so the server shows the opposite picture and reports that the bitrate it sees has dropped. Really bad.
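The effect of throttling can be reproduced with a toy model: a bottleneck of fixed capacity where whatever the sender pushes beyond that capacity waits in the network instead of being dropped, which roughly mimics TCP's guaranteed delivery. This is a deliberate simplification (no congestion control, 1-second ticks), and `simulateBottleneck` is a hypothetical helper, not part of winShaper, Network Link Conditioner or any server.

```typescript
// Toy bottleneck model (an assumption, not a TCP implementation): traffic beyond the
// link capacity is queued in the network and delivered later instead of being lost.
interface Tick {
  sentKbps: number; // what the browser pushes during this 1-second tick
  receivedKbps: number; // what reaches the server during this tick
  queuedKbit: number; // backlog still stuck in the network after this tick
}

function simulateBottleneck(sentKbps: number[], capacityKbps: number): Tick[] {
  let queue = 0; // kbit waiting in the network
  const ticks: Tick[] = [];
  for (const sent of sentKbps) {
    const available = queue + sent;
    const delivered = Math.min(available, capacityKbps);
    queue = available - delivered;
    ticks.push({ sentKbps: sent, receivedKbps: delivered, queuedKbit: queue });
  }
  return ticks;
}

// The browser ramps up toward 1 Mbps while the channel is throttled to 300 kbps.
const ticks = simulateBottleneck([800, 900, 1000, 1000, 1000], 300);
for (const t of ticks) console.log(t.sentKbps, t.receivedKbps, t.queuedKbit);
```

In this run the server never sees more than 300 kbps while the backlog grows every second, so the server receives only about a third of what the browser sends: exactly the gap between client and server bitrates that the indicator is built to detect.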

We ran many tests confirming that the indicator works correctly. The result is a feature that reliably and promptly informs the user about channel problems, both when publishing a stream and during playback (which works on the same principle). Yes, we went to all this trouble for the sake of a green/red PERFECT/BAD light bulb. But practice shows that this indicator is very important: without it, and without a clear picture of the current channel status, the life of an ordinary user of a WebRTC video streaming service can be seriously ruined.

References

WCS 5.2 – media server for developing web and mobile video applications

WebRTC channel quality control documentation for publishing and playback

REMB – server side bitrate management

NACK – control of lost packets from the server side