large overview of tools

Neural networks are rapidly changing the digital marketing landscape, and SEO is no exception. More and more SEOs are turning to the power of artificial intelligence to improve their strategies, automate routine tasks, and achieve higher organic results.

The adoption of neural networks in SEO is not just a fad but an urgent necessity. With growing competition and the ever-increasing complexity of search engine algorithms, traditional optimization methods no longer deliver the same results. To stay on the crest of the wave, SEO specialists need to master new tools and approaches based on machine learning and big data processing.

But how exactly can neural networks help with SEO? What problems can they solve today, and what prospects do they open up for the future? How do you choose the right tools and implement them in your workflow? These questions concern many optimizers, both beginners and experienced professionals.

In this article we will try to answer these questions in detail and provide as much useful information on the topic as possible. We have prepared a large overview of tools that use, at a minimum, machine learning algorithms. Our goal is not just to introduce you to trends at the intersection of SEO and AI, but also to equip you with practical knowledge that you can apply in your work.

Well, let's go! Enjoy reading:)

Neural networks for text content optimization

One of the key applications of neural networks in SEO is the generation and optimization of text content. Traditionally, writing SEO text has been a labor-intensive and time-consuming process that requires professionals to have a good understanding of the principles of relevance, readability, and search algorithms. However, with the development of generative language models such as GPT, this process can be automated and improved to a large extent.

I want to point out a couple of things right away:

Keep in mind that, at the moment, most of the tools described in this article are accessible via VPN and work in English.

In the context of this article, we will talk about the latest models from OpenAI and Anthropic.

Now a short introduction for those who are not very familiar with the basics of neural networks.

One of the most advanced tools in this area is ChatGPT-4, a language model from OpenAI based on the transformer architecture and trained on a huge array of text data. ChatGPT-4 uses a multi-layer neural network with an attention mechanism, which allows the model to take into account the context and generate coherent, relevant and grammatically correct texts based on user-specified parameters.

The Transformer architecture underlying ChatGPT-4 has revolutionized the NLP field. Unlike traditional recurrent neural networks, transformers use a self-attention mechanism, which allows the model to analyze the relationships between all the words in a sequence at once, regardless of how far apart they are. This makes it possible to efficiently process long sequences and capture deep context, which is critical for generating coherent and relevant text.
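To make the self-attention idea more tangible, here is a minimal sketch in plain Python. It is a toy illustration, not how ChatGPT-4 is implemented: the two-dimensional word vectors are made up, and queries, keys and values are all taken to be the raw vectors (real transformers learn separate projections for each).

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    """Scaled dot-product self-attention with Q = K = V = embeddings (an assumption)."""
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for query in embeddings:
        # Compare the current word with every word in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights = softmax(scores)
        # The new representation is a weighted mix of all word vectors.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, embeddings))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for three words.
tokens = ["wireless", "headphones", "review"]
vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

In this toy example "wireless" puts more weight on "headphones" than on "review", simply because their made-up vectors point in a similar direction.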

Another powerful tool is Claude by Anthropic. Claude 3 is trained with the Constitutional AI approach, which guides the model's behavior with a set of ethical and behavioral principles defined by the developers. This allows you to generate texts that are not only high-quality but also safe, consistent, and compliant with given rules and values.

The Constitutional AI concept is an important step in the development of responsible and controlled AI. With built-in ethical guidelines and restrictions, models like Claude 3 are designed to reduce toxicity, misinformation, plagiarism and other objectionable elements in generated content. This is especially important for SEO texts, which must not only attract traffic, but also build trust and brand authority in the eyes of users and search engines.

But how exactly do these models work, and how can they be applied to SEO tasks? Let's take a closer look.

A key feature of ChatGPT-4 and Claude 3 is their ability for few-shot learning – that is, they can adapt to new tasks and generate relevant content based on just a few examples or instructions. This is achieved through the use of query design techniques or prompt engineering – the formation of specially structured text queries that direct the model to solve a specific problem.

Prompt engineering is the art and science of creating effective text queries for language models. A well-composed prompt should clearly describe the desired result, set the necessary parameters and restrictions, and at the same time leave the model room for creativity and adaptation. Mastering prompt engineering skills is the key to effectively using tools like ChatGPT-4 and Claude 3 to solve SEO problems.

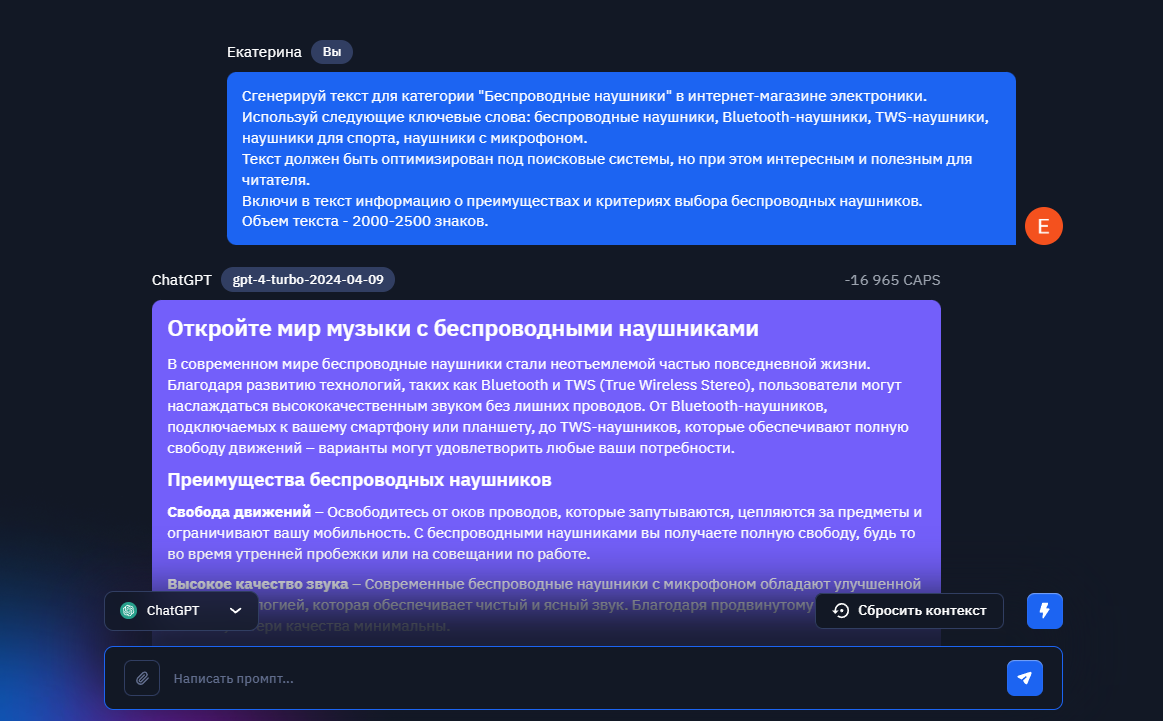

For example, to generate SEO-optimized text for an online store page, we can formulate the following query for ChatGPT-4-Turbo:

"Generate a text for the "Wireless Headphones" category of an online electronics store. Use the following keywords: wireless headphones, Bluetooth headphones, TWS headphones, sports headphones, headphones with a microphone. The text must be optimized for search engines while still being interesting and useful to the reader. Include information about the benefits of wireless headphones and the criteria for choosing them.

Text length: 2000–2500 characters."

Such a request gives the model clear parameters for the desired text: topic, keywords, requirements for optimization and usefulness for the reader, necessary information blocks and length. Based on these instructions, ChatGPT-4-Turbo will generate a unique text that aims to meet the specified criteria and, after an editorial check, is ready for publication on the site.
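If you need such requests for dozens of categories, it may help to assemble them from a template. The sketch below only builds the prompt string and does not call any model API; the function name `build_seo_prompt` and its parameters are hypothetical, chosen to mirror the parts of the request described above.

```python
def build_seo_prompt(category, keywords, required_topics, min_chars, max_chars):
    """Assemble a structured prompt: topic, keywords, quality requirements, length."""
    keyword_list = ", ".join(keywords)
    topic_list = " and ".join(required_topics)
    return (
        f'Generate a text for the "{category}" category of an online electronics store. '
        f"Use the following keywords: {keyword_list}. "
        "The text must be optimized for search engines while still being "
        "interesting and useful to the reader. "
        f"Include information about {topic_list}.\n\n"
        f"Text length: {min_chars}-{max_chars} characters."
    )

prompt = build_seo_prompt(
    category="Wireless Headphones",
    keywords=["wireless headphones", "Bluetooth headphones", "TWS headphones"],
    required_topics=["the benefits of wireless headphones", "the criteria for choosing them"],
    min_chars=2000,
    max_chars=2500,
)
print(prompt)
```

Keeping the template in one place makes it easier to experiment with wording and compare results across categories.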

You can use Claude 3 in a similar way, adding instructions to your query regarding the desired style and tone of the text, as well as ethical restrictions (for example, avoiding mentions of specific brands or not giving medical advice).

In addition to generating texts from scratch, neural networks can also be used to optimize existing content. Tools like Title Generator Tool from SemRush and Headline Analyzer use machine learning algorithms to evaluate and improve titles and meta tags for their appeal to users and relevance to search engines.

Title Generator Tool uses natural language processing and deep learning technologies to analyze the semantics of input text and generate optimized titles. The tool takes into account factors such as title length, keyword inclusion, use of emotional language, and other SEO parameters. This allows you to create headlines that not only attract the attention of users, but also rank well in search results.
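The simplest of these factors, title length and keyword inclusion, can be checked with a few lines of code. This is a rough sketch and not the tool's actual logic; the 60-character limit is a common rule of thumb used here as an assumption, and `check_title` is a hypothetical helper.

```python
def check_title(title, keyword, max_length=60):
    """Return a list of basic issues found in a page title."""
    issues = []
    if len(title) > max_length:
        issues.append(f"title is {len(title)} characters, over the {max_length} limit")
    if keyword.lower() not in title.lower():
        issues.append(f"keyword '{keyword}' is missing")
    elif not title.lower().startswith(keyword.lower()):
        # Purely illustrative preference: keyword near the start of the title.
        issues.append("keyword is not at the start of the title")
    return issues

print(check_title("Wireless Headphones: How to Choose the Best Pair", "wireless headphones"))
print(check_title("Our Catalogue", "wireless headphones"))
```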

CoSchedule's Headline Analyzer, in turn, evaluates the quality of headlines across a range of parameters, including readability, emotion, use of power words, and overall click-through potential. The tool uses machine learning algorithms trained on a large sample of successful headlines to predict their performance and make recommendations for improvement.

To improve the quality and relevance of already written SEO texts, you can use tools such as Hemingway Editor and Grammarly. They analyze the text using NLP techniques and identify problems with readability, grammar, style and tone.

Hemingway Editor uses a set of rules and heuristics to evaluate the complexity and readability of text. It identifies complex sentences, passive constructions, adverbs and other elements that may be difficult to understand, and offers suggestions for simplifying or replacing them. This optimization makes the text easier and more attractive for readers, which has a positive effect on behavioral factors and search rankings.
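A heavily simplified version of such rule-based checks could look like the sketch below. The rules are assumptions made for illustration (a word limit per sentence, "-ly" words as adverbs, a crude pattern for passive voice) and are not Hemingway Editor's real rules.

```python
import re

def readability_report(text, max_words=20):
    """Flag long sentences, likely adverbs and likely passive constructions."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    long_sentences = [s for s in sentences if len(s.split()) > max_words]
    # Crude heuristics: both patterns produce false positives on real text.
    adverbs = re.findall(r"\b\w+ly\b", text, flags=re.IGNORECASE)
    passive = re.findall(
        r"\b(?:is|are|was|were|be|been|being)\s+\w+ed\b", text, flags=re.IGNORECASE
    )
    return {
        "sentence_count": len(sentences),
        "long_sentences": long_sentences,
        "adverbs": adverbs,
        "passive": passive,
    }

report = readability_report("The page was optimized quickly. It loads fast.")
print(report)
```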

Grammarly, in turn, uses advanced machine learning algorithms to identify grammatical, stylistic and punctuation errors in the text. The tool not only offers correction options, but also provides detailed explanations of the rules and recommendations for improving the text. This helps make SEO content higher quality, more professional, and more attractive to users.

In addition, to effectively use language models and NLP algorithms, you need to constantly monitor their development, experiment with different approaches and techniques, and continuously educate yourself. Only in this case can neural networks become a truly powerful weapon in the arsenal of an SEO specialist and help take the quality of text content to a new level.

Application of neural networks for technical SEO

Technical website optimization is one of the most important aspects of SEO. It affects loading speed, ease of navigation, mobile responsiveness and other factors that search engines take into account when ranking. While technical SEO has traditionally been considered an area where AI and neural networks have limited application, recent advances in machine learning are opening up new opportunities to automate and optimize technical processes.

One of the key areas for using neural networks in technical SEO is optimizing website loading speed. A fast website not only improves the user experience, but also has a positive effect on search rankings, especially after the introduction of Google's Page Experience update. Machine learning powered tools such as GTmetrix and Google PageSpeed Insights help identify factors that slow down page loading and provide recommendations for eliminating them.

GTmetrix uses a combination of performance analysis algorithms and heuristics to evaluate site loading speed and identify potential problems. The tool analyzes parameters such as server response time, size and number of HTTP requests, caching and compression efficiency, image optimization and other factors affecting speed. Based on this analysis, GTmetrix generates a detailed report with scores for each parameter and step-by-step optimization recommendations.

Google PageSpeed Insights, in turn, uses machine learning algorithms and user behavior data to evaluate site loading speed and its impact on UX. The tool analyzes both lab data (simulating page loads on standard hardware and connections) and field data (real metrics from Chrome users). This allows you to get a comprehensive assessment of site performance and identify priority areas for optimization.
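The difference between lab and field data is easier to see in a report. The sketch below reads both from a hand-written, heavily abbreviated dictionary. The key names (`lighthouseResult`, `loadingExperience`, `LARGEST_CONTENTFUL_PAINT_MS`) follow what I understand to be the shape of a PageSpeed Insights API v5 response, so treat them as assumptions and check the official reference. The numbers are made up.

```python
def summarize_psi(report):
    """Pull one lab metric and one field metric out of a PSI-style report dict."""
    # Lab data: a simulated page load, scored from 0 to 1.
    lab_score = report["lighthouseResult"]["categories"]["performance"]["score"]
    # Field data: real-user measurements, which may be absent for low-traffic pages.
    field_metrics = report.get("loadingExperience", {}).get("metrics", {})
    lcp = field_metrics.get("LARGEST_CONTENTFUL_PAINT_MS", {})
    return {
        "lab_performance_score": round(lab_score * 100),
        "field_lcp_ms": lcp.get("percentile"),
        "field_lcp_category": lcp.get("category"),
    }

# Hypothetical, abbreviated report; a real response contains far more fields.
sample_report = {
    "lighthouseResult": {"categories": {"performance": {"score": 0.72}}},
    "loadingExperience": {
        "metrics": {
            "LARGEST_CONTENTFUL_PAINT_MS": {"percentile": 2900, "category": "AVERAGE"}
        }
    },
}
print(summarize_psi(sample_report))
```

A page can score well in the lab and still look slow in field data (or the other way round), which is why it is worth looking at both.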

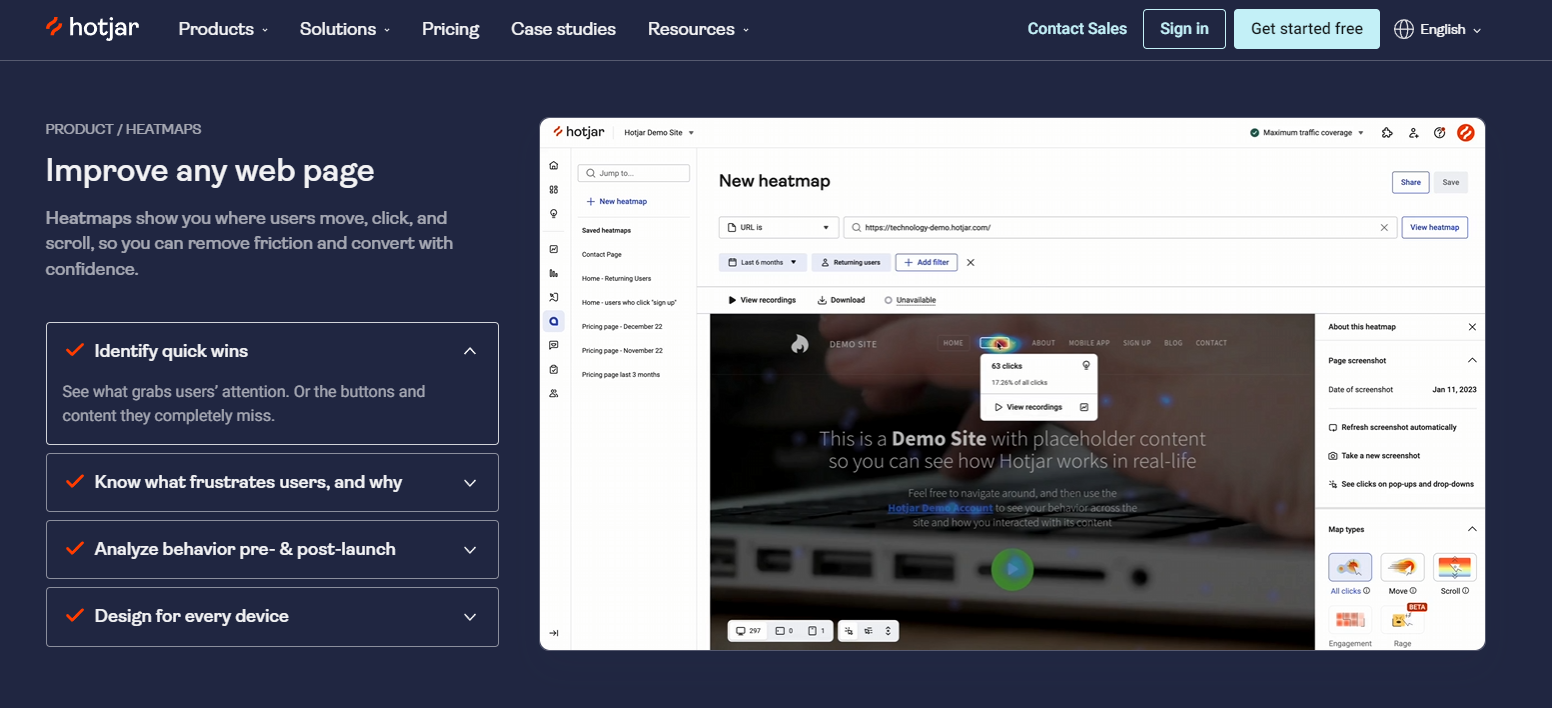

But speed optimization is just one aspect of technical SEO where neural networks can be useful. Another promising direction is improving user experience (UX) based on user behavior analysis. AI-powered web analytics tools such as Hotjar and Crazyegg allow you to track and analyze user interaction with the site, identify problem areas and opportunities for optimization.

Hotjar uses a combination of heat maps of clicks, scrolling and mouse movement, session recordings, surveys and other methods to collect qualitative data about user behavior on the site. Machine learning algorithms then analyze this data, identify patterns, and generate insights to optimize the UX. For example, based on the analysis of heat maps, you can identify unpopular navigation elements or non-obvious call-to-action buttons, and based on session recordings, you can find pages with a high bounce rate and understand the reasons.

Crazyegg offers similar functionality, including heatmaps, session recording, and A/B testing, but with a focus on visual analytics. The tool uses advanced algorithms to create clear reports and visualizations that help you quickly identify problems and opportunities for optimization. For example, the Confetti feature shows every click on a page as a colored dot, segmented by traffic source, device, or other parameters. This allows you to quickly assess which page elements attract the most attention and conversions for different groups of users.

In addition to analyzing user behavior, neural networks can also be used to automate site audits and identify technical errors. AI-powered tools such as Lumar (Deepcrawl) and Screaming Frog SEO Spider help crawl sites, detect problems with indexing, duplicates, redirects, broken links and other technical factors affecting SEO.

Lumar uses advanced crawling and data processing algorithms to scan sites for technical errors and potential SEO issues. The tool can analyze hundreds of thousands of pages, identify patterns and anomalies, and generate detailed reports with recommendations for correction. One of Lumar's key features is its ability to integrate with Google Search Console, Google Analytics, and other tools to correlate technical data with real-world traffic and ranking metrics.

Screaming Frog SEO Spider is a desktop website technical audit tool that uses crawling and data analysis algorithms to identify a wide range of SEO issues. The tool can scan sites for broken links, errors in robots.txt and sitemap.xml, duplicate content, missing meta tags, incorrect titles and many other factors. Screaming Frog SEO Spider also offers integrations with Google Analytics, Google Search Console, and other tools for in-depth analysis of technical and behavioral data.
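To give a feel for what such an audit checks on a single page, here is a small sketch based on Python's standard `html.parser` module. It is not how Screaming Frog or Lumar work internally; it only looks for a missing title and meta description and collects link targets, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

class PageAudit(HTMLParser):
    """Collect the title, meta description and link targets of one HTML page."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.meta_description = None
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data

def audit_page(html):
    """Return basic on-page issues plus the links a crawler would follow next."""
    parser = PageAudit()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing <title>")
    if not parser.meta_description:
        issues.append("missing meta description")
    return {"issues": issues, "links": parser.links}

page = "<html><head><title></title></head><body><a href='/catalog'>Catalog</a></body></html>"
print(audit_page(page))
```

A real crawler would then request each collected link, record its HTTP status to find broken links and redirects, and repeat the same checks on every page.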

Incorporating these tools into your technical SEO workflow allows you to automate many routine tasks, identify and fix problems faster, and make more informed decisions about website optimization.

Neural networks in link promotion

Link promotion, or link building, is one of the key factors in external website optimization, which affects its authority, search visibility and organic traffic. Even though ranking algorithms are becoming more complex and take into account hundreds of different factors, links are still one of the main signals that search engines use to evaluate the quality and relevance of sites.

However, in an era of ever-increasing competition and stricter requirements for link quality, traditional link building methods are becoming less effective and more labor-intensive. Neural networks and machine learning algorithms can automate and optimize many processes associated with building a link profile.

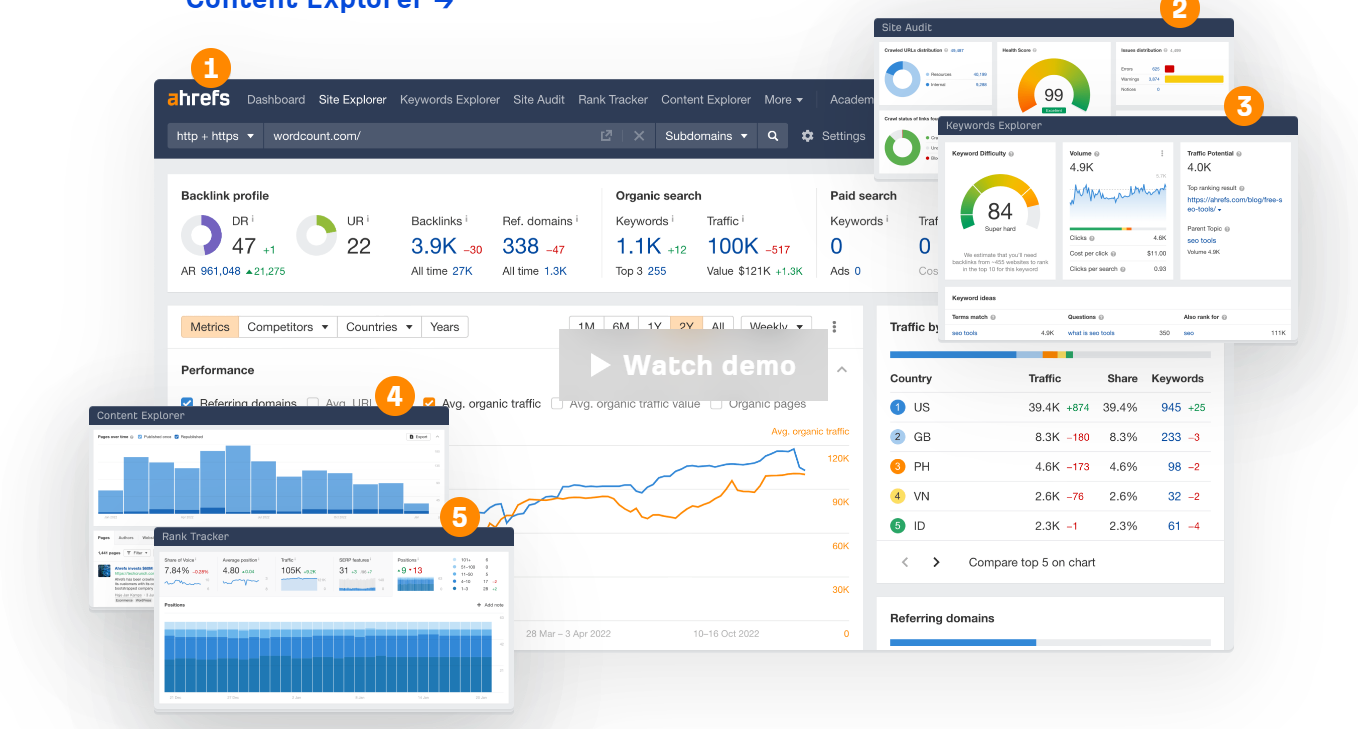

One of the key applications of neural networks in link building is the selection of quality donors for posting links. Machine learning algorithms used in tools such as Majestic, Ahrefs and SEMrush allow you to analyze huge amounts of data about the link profile of sites and identify the most authoritative and relevant resources for obtaining links.

These tools use a combination of various metrics and signals, such as Trust Flow, Domain Rating, Topical Trust Flow, as well as algorithms for analyzing the naturalness of the link profile and identifying potential link manipulation. This allows SEO specialists to quickly find high-quality donor sites that not only convey weight and authority, but also correspond to the topic of the resource being promoted, which is important for the relevance of links.

In addition, neural networks can help optimize the anchor list and link texts. Natural language processing algorithms used in tools such as LinkAssistant and SEO SpyGlass allow you to analyze the context and semantics of link texts, evaluate their relevance and naturalness, and also generate recommendations for optimizing anchors and surrounding content.

These tools can automatically identify over-optimized or unnatural anchors, suggest options for diversification and improvement, and evaluate the overall quality and safety of the link profile. This helps SEOs avoid potential risks associated with low-quality or spammy links and build a more natural and resilient link profile.
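The basic idea behind spotting an over-optimized anchor list can be sketched by counting how often the exact target keyword is used as link text. The 30% threshold below is an arbitrary assumption for illustration, not a value taken from any of the tools mentioned, and `anchor_report` is a hypothetical helper.

```python
from collections import Counter

def anchor_report(anchors, target_keyword, max_exact_share=0.3):
    """Measure the share of exact-match anchors in a backlink anchor list."""
    normalized = [a.strip().lower() for a in anchors]
    if not normalized:
        return {"exact_share": 0.0, "over_optimized": False, "top_anchors": []}
    counts = Counter(normalized)
    exact_share = counts[target_keyword.strip().lower()] / len(normalized)
    return {
        "exact_share": exact_share,
        "over_optimized": exact_share > max_exact_share,
        "top_anchors": counts.most_common(3),
    }

anchors = ["Wireless headphones", "wireless headphones", "wireless headphones",
           "example.com", "this guide"]
print(anchor_report(anchors, "wireless headphones"))
```

Here three of five anchors are exact matches, so the list is flagged and would benefit from more branded and natural-language anchors.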

Another promising area for using neural networks in link building is automating the search for potential donors and the outreach to them. Machine learning algorithms can analyze large volumes of data about sites and their owners, identify the most promising sites for placing links, and automate outreach communication and negotiations.

For example, tools such as Pitchbox and BuzzStream use NLP and data analytics algorithms to automate the search for relevant sites, collect owner contact information, personalize and send outreach emails, and track and analyze campaign results. This allows you to significantly speed up and scale link building processes, as well as increase their efficiency by personalizing communication and focusing on the most promising sites.

Of course, as with other applications of AI in SEO, neural networks and machine learning algorithms cannot completely replace the human factor in link building. Building a high-quality and natural link profile requires not only technical skills and tools, but also creativity, communication skills and a deep understanding of how search engines work.

Data analysis and pattern identification

In modern SEO, data plays an increasingly important role. As search engine algorithms become more complex and take into account hundreds of different factors, traditional optimization methods based on guesswork and intuition are giving way to a data-driven approach based on analyzing large volumes of data and identifying patterns.

And this is where neural networks and machine learning algorithms come into the picture, capable of analyzing gigantic amounts of information, finding non-obvious relationships and generating valuable insights for optimizing SEO strategies. From researching ranking factors and traffic forecasting to identifying hotspots and pain points, AI tools open up new possibilities for making informed, data-driven decisions.

One of the key applications of neural networks in SEO analytics is the study of ranking factors and their impact on a site’s position in search results. Machine learning algorithms used in tools such as DataForSEO and SERPstat allow you to analyze huge amounts of data about search queries, site positions, link profiles and other factors, and identify correlations and patterns.

These tools use techniques such as regression analysis, decision trees, and neural networks to determine which factors have the greatest impact on ranking for specific queries, and how optimizing them can affect a site's rankings. For example, an analysis might show that for a certain niche, having a video on a page or fast loading speed are more important factors than the number of inbound links or keyword density.
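The simplest form of such an analysis is a correlation between one factor and the position in search results. The sketch below computes the Pearson correlation in plain Python on a tiny, entirely made-up data set; real tools work with far more data and more robust methods, and correlation alone does not prove that a factor causes better rankings.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long number lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Illustrative data for five pages ranking for one query (position 1 is best).
positions = [1, 2, 3, 4, 5]
load_time_sec = [1.0, 1.5, 2.0, 2.5, 3.0]
backlinks = [40, 10, 35, 20, 30]

print("load time vs position:", round(pearson(load_time_sec, positions), 2))
print("backlinks vs position:", round(pearson(backlinks, positions), 2))
```

In this made-up sample, slower pages rank lower almost perfectly in step, while the number of backlinks shows only a weak relationship with position.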

Another important application of neural networks in SEO analytics is predicting website traffic and rankings based on historical data and external factors. Machine learning algorithms used in tools such as SE Ranking and Forecast.SEMrush allow you to build predictive models that take into account seasonality, trends, competitor activity and other variables that affect the site’s visibility in search.

These models can help SEOs better understand the potential of different groups of queries, evaluate the possible effect of optimizing certain pages, and set realistic goals for traffic and conversions. Forecasting also allows you to proactively identify potential issues and risks, such as seasonal downturns or increased competition, and adapt your strategy accordingly.
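As a baseline for comparison, a forecast that accounts for seasonality and overall trend can be built without any machine learning. The sketch below repeats the last season's values scaled by the growth between the two most recent seasons. It is a deliberately naive method chosen for illustration, not the approach of any tool mentioned above, and the traffic numbers are made up.

```python
def seasonal_forecast(history, season_length=12, horizon=3):
    """Forecast future periods as 'same period last season' times season-over-season growth."""
    if len(history) < 2 * season_length:
        raise ValueError("need at least two full seasons of history")
    last_season = history[-season_length:]
    previous_season = history[-2 * season_length:-season_length]
    growth = sum(last_season) / sum(previous_season)
    return [round(last_season[i % season_length] * growth) for i in range(horizon)]

# Hypothetical quarterly organic visits for two years (season_length=4).
visits = [100, 120, 80, 100, 110, 132, 88, 110]
print(seasonal_forecast(visits, season_length=4, horizon=2))
```

If a more sophisticated model cannot beat a baseline like this on your historical data, its forecasts probably should not drive planning decisions.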

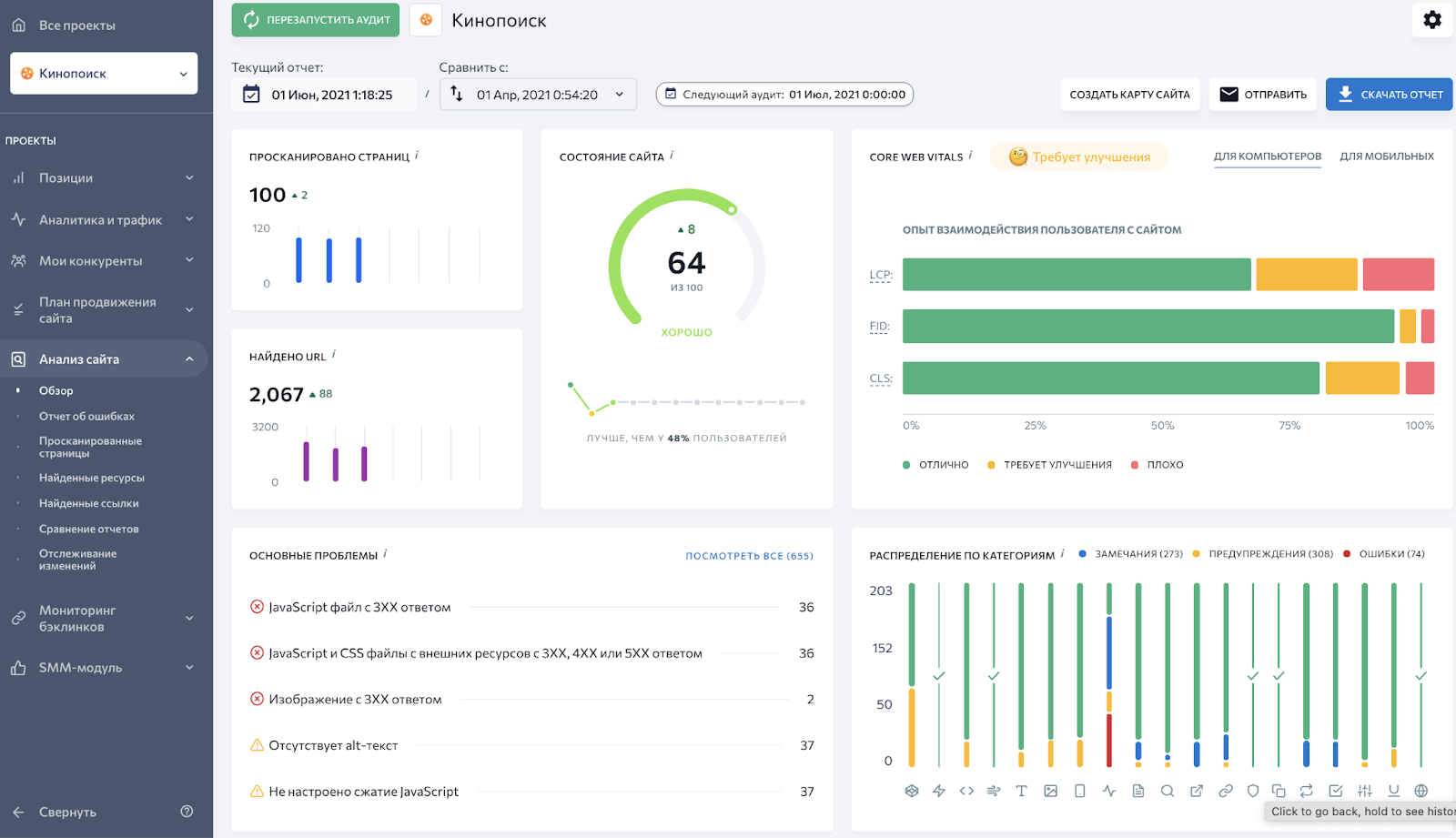

But neural networks can help not only in predicting future results, but also in identifying current problems and growth areas in an SEO strategy. Machine learning algorithms used in tools such as Netpeak Spider and WebSite Auditor allow you to analyze large amounts of data about site structure, content, link profile and other factors, and identify potential problems and opportunities for optimization.

These tools can automatically find issues such as duplicate content, broken links, missing meta tags, unoptimized images, and other technical errors that impact SEO. In addition, they can identify potential growth points, such as under-optimized pages with high traffic potential, opportunities to improve internal linking or expand the semantic core.

Using these insights, SEOs can quickly find and fix problems that are holding them back from growing traffic and rankings, and identify new opportunities to optimize and scale their strategy. And the main thing is to make decisions based on real data, and not guesses and assumptions.

Personalization and increased conversions

In modern digital marketing, personalization plays an increasingly important role. As users become more demanding and spoiled for choice, traditional methods of mass communication are losing their effectiveness. To capture attention, build trust, and motivate action, brands must address each website visitor as an individual, with communication tailored to their unique needs, preferences, and behaviors.

Neural networks can easily adapt to more specific user requests. From dynamic landing page personalization and recommendation systems to predictive analytics and conversion path optimization, AI tools open up new opportunities to increase relevance, engagement and conversions.

One of the key applications of neural networks in personalization is the dynamic adaptation of website content and design to the individual characteristics and behavior of users. Machine learning algorithms used in tools such as Personyze allow you to analyze data about visitors in real time (traffic source, search queries, history of interaction with the site, geolocation, etc.) and, based on this, personalize various elements of the page.

For example, if a visitor comes to a site searching for “buy an iPhone,” the system can automatically tailor the headline, images, and content blocks to that specific need, highlighting benefits and promotions relevant to that product. Or if the algorithm has detected that the user is at the stage of comparing various options, they may be shown a comparison table of characteristics or a cost calculator.

Dynamic personalization can significantly increase the relevance and persuasiveness of communications, which in turn leads to increased conversions and loyalty. According to research, personalized websites generate 20-30% more conversions than generic versions, and also significantly reduce bounce rates.

Another important application of neural networks in personalization is in recommender systems and smart search suggestions that can predict users' needs and intentions and suggest the most relevant products, services or content to them. The machine learning algorithms used in such systems analyze user behavior patterns, past purchases, pages viewed, search queries, and other data to identify patterns and generate personalized recommendations.

A related example from search itself is Google's RankBrain, a machine learning system that helps interpret search queries. RankBrain is designed to better understand the context and intent behind each query and to suggest the most relevant pages. This not only improves the user experience, but also gives SEOs the opportunity to optimize their pages for specific micro-moments and stages of the user journey.

At the level of individual websites and online stores, AI-powered recommendation systems can also significantly improve relevance and conversion rates. For example, algorithms can analyze a user's behavior on a website and suggest additional products or services that they might be interested in based on pages viewed, items added to cart, or even the behavior of similar users. This can increase the average order value, increase engagement, and reduce bounce rates.
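The "behavior of similar users" idea can be illustrated with a very small co-occurrence recommender: products that often appear in the same cart are suggested together. This is a minimal sketch on made-up carts, far simpler than production recommendation systems, and the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations

def build_cooccurrence(baskets):
    """Count how often each ordered pair of products appears in the same basket."""
    pairs = Counter()
    for basket in baskets:
        for a, b in combinations(sorted(set(basket)), 2):
            pairs[(a, b)] += 1
            pairs[(b, a)] += 1
    return pairs

def recommend(item, pairs, top_n=2):
    """Suggest the products most often bought together with the given item."""
    scores = Counter(
        {other: count for (first, other), count in pairs.items() if first == item}
    )
    return [other for other, _ in scores.most_common(top_n)]

# Hypothetical shopping carts.
baskets = [
    ["headphones", "case"],
    ["headphones", "case", "charger"],
    ["headphones", "case"],
    ["case", "cable"],
]
pairs = build_cooccurrence(baskets)
print(recommend("headphones", pairs, top_n=1))
```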

But personalization and increasing conversions are not only about adapting content and recommendations. Neural networks can also help in optimizing conversion paths themselves and UX design based on predictive analytics and identifying user behavior patterns. Machine learning algorithms can analyze big data about how visitors interact with a website (clicks, scrolls, time on page, funnel path, etc.) to identify patterns, obstacles, and churn points.

Based on this information, AI systems can generate recommendations for optimizing site structure, arrangement of elements on the page, design of CTA buttons and forms, and also identify potential usability problems that may reduce conversions. For example, if the algorithm notices that a large percentage of users are leaving the checkout page at the shipping method selection step, this could be a signal to simplify or rethink that step.
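The checkout example comes down to comparing how many users reach each step of the funnel. The sketch below computes the drop-off rate per step and picks the worst one. The step names and numbers are made up, and a real system would also segment by device, traffic source and so on.

```python
def funnel_dropoff(steps):
    """steps: ordered list of (step_name, users_reaching_step). Returns drop-off per step."""
    report = []
    for (name, users), (_next_name, next_users) in zip(steps, steps[1:]):
        rate = 1 - next_users / users if users else 0.0
        report.append((name, round(rate, 3)))
    return report

def worst_step(steps):
    """Name of the step after which the largest share of users leaves."""
    return max(funnel_dropoff(steps), key=lambda row: row[1])[0]

# Hypothetical checkout funnel.
checkout = [("cart", 1000), ("shipping", 800), ("payment", 320), ("done", 300)]
print(funnel_dropoff(checkout))
print("biggest drop-off after:", worst_step(checkout))
```

In this sample most users are lost after the shipping step, which is exactly the kind of signal that suggests simplifying or rethinking that step.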

In addition, predictive analytics based on neural networks can help in audience segmentation and personalization of marketing campaigns. By analyzing data about user demographics, behavior, and preferences, algorithms can identify patterns and cluster audiences into micro-segments with similar characteristics. This allows you to more accurately target advertising, personalize email campaigns and push notifications, and tailor content and offers to each segment.

Examples of successful use of predictive analytics and personalization can be found in e-commerce and streaming giants such as Amazon and Netflix. Their recommendation systems, based on deep big data analysis and machine learning, generate up to 35% of all sales and views, respectively. And personalized Amazon email campaigns achieve 50% higher conversion rates than mass mailings.

Of course, implementing AI into personalization and conversion optimization is a complex and multi-step process that requires not only technical knowledge and tools, but also a deep understanding of your audience and business goals. It is also important to consider ethical and data privacy issues to ensure that personalization does not become intrusive or an invasion of privacy.

Risks, ethics and prospects for using neural networks in SEO

Neural networks and machine learning algorithms open up new horizons for SEO; this is an indisputable fact that is already becoming our reality. On the one hand, I see the huge potential of these technologies – how they can make our lives easier, automate routines and open up new opportunities for optimization. But on the other hand, I cannot ignore the potential risks and ethical dilemmas that AI brings.

Take, for example, the issue of quality and reliability of content generated by neural networks. Yes, modern language models are capable of creating texts that are sometimes difficult to distinguish from those written by humans. But let's be honest – they are still far from perfect and can make factual errors or generate meaningless or contradictory statements. And if SEO specialists mindlessly rely on AI and forget about editing and quality control, there is a risk of filling the Internet with low-quality, misleading content. And this, in turn, can undermine user trust in search engines and websites.

What about privacy and ethical use of data? After all, in order to personalize content and improve user experience, neural networks need a huge amount of personal information about each of us. But are we willing to sacrifice privacy for more relevant recommendations? And how can we be sure that this data will not be used for harm? These are serious questions to which there are no clear answers yet.

However, maybe I'm being too dramatic. After all, despite all the advances AI has made, it is still nowhere near human levels of creativity, empathy, and ethical judgment. And I want to believe that if we learn to use the strengths of neural networks, but not forget about our unique human qualities, we can achieve incredible results.

The future of SEO in the age of neural networks promises to be exciting and challenging. But I believe that with a responsible and creative approach, the synergy of human intelligence and AI can lead to incredible breakthroughs and successes. So let's embrace this opportunity and shape the future of SEO together – hand in hand with neural networks, but with a human at the helm.

Thanks for reading! I hope this material was useful for you 🙂
