DNC is better than LSTM

This article is a summary of the monograph “Analysis and visualization of neural networks with external memory”.

The book describes in detail how several neural network models operate, namely the Neural Turing Machine (NTM), the Differentiable Neural Computer (DNC), and modifications of the DNC. It lists the areas where these models apply, highlights their advantages over the earlier and successful LSTM model, and describes their shortcomings along with ways to eliminate them. It also gives a theoretical justification for the claim that these neural networks with external memory have greater potential than LSTM for solving many problems.

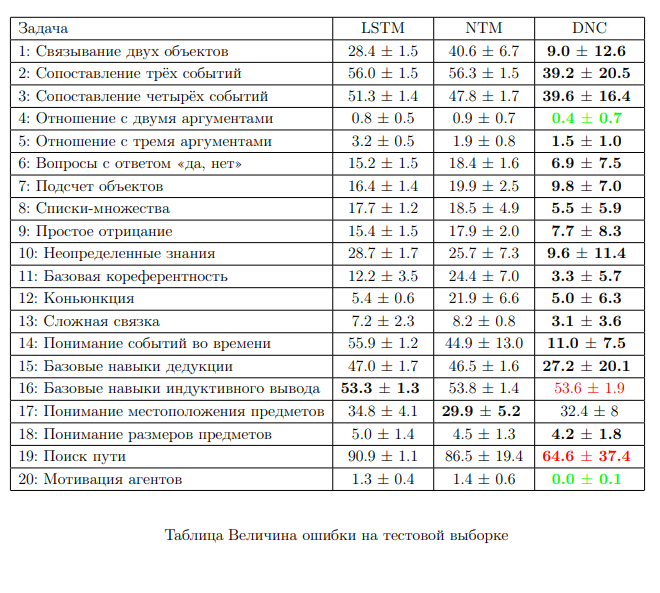

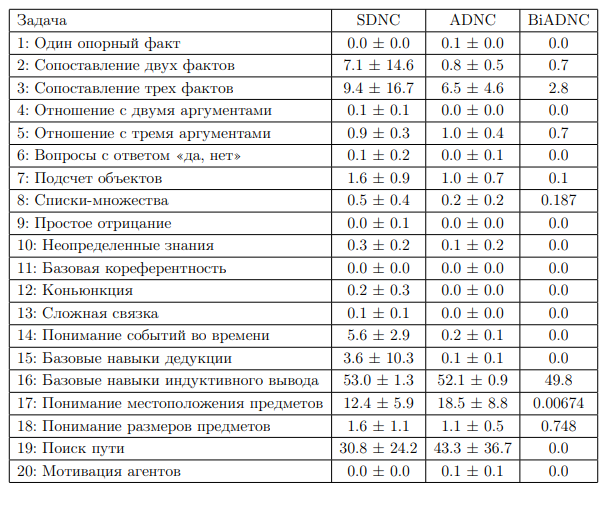

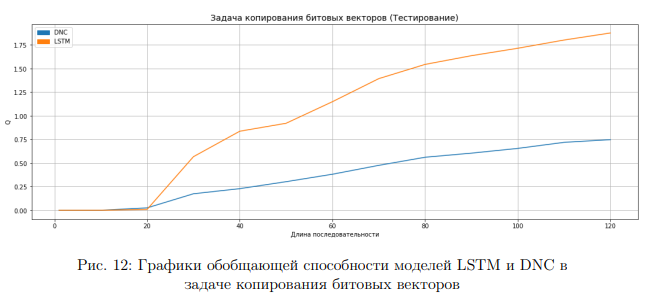

Computational experiments were carried out on two tasks: copying sequences of bit vectors and learning the basic skills of a question-answering system. The results show that neural networks with external memory have more “long-term memory” than LSTMs and generalize better. In some cases, they also train faster than LSTMs.
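To make the copy task concrete, the sketch below generates a single training example: a random sequence of bit vectors, then a delimiter, with the target being the same sequence reproduced after the delimiter. The function name `make_copy_example`, the extra delimiter channel, and the default sizes are assumptions made for illustration and are not taken from the monograph's experimental setup.

```python
import numpy as np

def make_copy_example(seq_len=5, bits=8, rng=None):
    """Build one (inputs, targets) pair for a copy task (hypothetical layout)."""
    rng = np.random.default_rng() if rng is None else rng
    seq = rng.integers(0, 2, size=(seq_len, bits)).astype(np.float32)

    # The input has one extra channel that carries the end-of-sequence delimiter.
    inputs = np.zeros((2 * seq_len + 1, bits + 1), dtype=np.float32)
    inputs[:seq_len, :bits] = seq
    inputs[seq_len, bits] = 1.0  # delimiter: the model should start reproducing

    # The target is empty while the sequence is shown, then equals the sequence.
    targets = np.zeros((2 * seq_len + 1, bits), dtype=np.float32)
    targets[seq_len + 1:] = seq
    return inputs, targets
```

Generalization can then be probed by training on short sequences and evaluating on values of `seq_len` that were never seen during training.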

Introduction

Recurrent neural networks (RNNs) differ from other machine learning methods in that they can process series of events over time or sequential logical chains. They can use their internal memory to process sequences of different lengths. RNNs are applicable to tasks such as handwriting recognition, text analysis, and speech recognition.

In addition, RNNs are known to be Turing-complete and can therefore, in principle, simulate arbitrary procedures. In practice, however, this is not always easy to achieve.

Recurrent neural networks are good at learning from sequential data and at reinforcement learning, but they are very limited in solving problems that involve data structures and variables, and in storing data over long time periods, because they lack long-term memory.

One way to improve standard recurrent networks so that they can solve algorithmic problems is to introduce a large addressable memory. Unlike a Turing machine, the Neural Turing Machine (NTM) is a fully differentiable model that can be trained with variants of gradient descent (e.g., RMSProp), which provides a practical mechanism for learning programs from examples.
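For readers unfamiliar with RMSProp, a minimal sketch of a single update step is given here. It follows the commonly used formulation (a running average of squared gradients that rescales each step). The function name `rmsprop_step` and the default hyperparameters are illustrative assumptions, not the settings used in the monograph.

```python
import numpy as np

def rmsprop_step(theta, grad, v, lr=1e-3, rho=0.9, eps=1e-8):
    """One RMSProp update; v is the running average of squared gradients."""
    v = rho * v + (1.0 - rho) * grad ** 2
    # Each parameter's step is scaled by the recent magnitude of its gradient.
    theta = theta - lr * grad / (np.sqrt(v) + eps)
    return theta, v
```

Because every operation in a model such as the NTM is differentiable, the gradient `grad` can be obtained by backpropagation through both the controller and the memory accesses.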

The NTM model was proposed in 2014. The original work does not describe the functioning of this model in detail. One of the objectives of this thesis is to provide a detailed description of how the Neural Turing Machine operates.

The main factor behind the emergence of neural networks with external memory was the invention of differentiable attention mechanisms.

In 2016, an improved model of a neural network with external memory, called the Differentiable Neural Computer (DNC), was proposed. That work also contained only a brief description of how the model operates.

In 2018, four modifications of the Differentiable Neural Computer were proposed, which improved results on question-answering (QA) tasks. These modifications were based on earlier works.

Today, there is a strong need for new recurrent neural network models that can store large amounts of data and successfully solve question-answering (QA) tasks.

The following requirements are imposed on such neural network models:

the presence of “long-term” trainable memory;

fast training;

the stability of the learning process (training should not depend significantly on the initialization of the parameters);

transparency of decision-making by the model and interpretability of the work of the neural network (an attempt to get away from the “black box” concept);

ability to solve QA tasks;

the model should contain a relatively small number of trainable parameters;

the ability to work with variables, as well as with data structures (for example, with graphs), to solve algorithmic problems.

The object of study of this work is neural networks with external memory, namely the Neural Turing Machine and the Differentiable Neural Computer.

The subject of the study is a detailed analysis of the above neural network models, as well as their applicability to solving problems related to the construction of question-answer systems.

The aim of the work is a detailed theoretical and experimental study of the above neural network models, as well as the development of an application for visualizing the internal processes of a differentiable neural computer.

The following tasks were set in the work:

describe in detail the operation of the Neural Turing Machine, the Differentiable Neural Computer (DNC), and the DNC modifications, and construct the necessary diagrams;

note the advantages and disadvantages of these models;

compare the performance of these models with the earlier and successful LSTM model on two tasks: copying sequences of bit vectors and the bAbI tasks (basic skills of question-answering systems);

develop an application for visualizing the internal processes of a differentiable neural computer model.

General information. Recurrent neural networks.

Recurrent neural networks are a broad class of dynamic state systems. The current state of such systems depends on the current input vector (for example, from the training sample) and on the previous state. Dynamic state is critical because it enables context-sensitive computations: an input vector received by a network at a given moment can change its behavior at a much later moment.
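The dependence of the current state on the current input and the previous state can be sketched as a single step of a plain (vanilla) RNN. This is a minimal illustration. The names `rnn_step` and `run_rnn`, the weight names, and the choice of `tanh` as the nonlinearity are assumptions made for the example.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """New state computed from the current input and the previous state."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev + b_h)

def run_rnn(xs, h0, W_xh, W_hh, b_h):
    """Apply rnn_step over a sequence of any length; returns all states."""
    h, states = h0, []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return np.stack(states)
```

Since each state feeds into the next, an input seen at step `t` can influence the output at any later step, which is what makes the computation context-sensitive.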

Recurrent networks easily handle variable-length structures. They have therefore recently been used in a variety of cognitive tasks, including speech recognition, text generation, handwriting generation, and machine translation.

The most important innovation for recurrent networks was the creation of long short-term memory (LSTM). This architecture was designed to address the vanishing and exploding gradient problem. LSTM mitigates this problem by introducing special gates.
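The gating mechanism of LSTM can be sketched as one step of a standard LSTM cell. This is a minimal NumPy illustration of the common textbook formulation. The function name `lstm_step` and the packing of all four gate weight matrices into a single matrix `W` are assumptions made for brevity.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, X+H); b has shape (4*H,)."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate cell content
    c_t = f * c_prev + i * g     # additive update of the cell state
    h_t = o * np.tanh(c_t)
    return h_t, c_t
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state is carried forward almost unchanged, which is how the cell preserves information, and gradients, over many steps.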

The current stage of development of recurrent neural networks is characterized by the introduction of external memory. External memory addressing is based on recently invented differentiable attention models. A factor in the emergence of such models of attention was the study of human working memory.
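As an illustration of differentiable attention over external memory, here is a minimal sketch of content-based addressing of the kind used in NTM-style models: a key vector is compared with every memory row by cosine similarity, the similarities are turned into weights with a softmax, and the read vector is the weighted sum of all rows. The names `content_read`, `key`, and `beta` (a sharpness parameter) are chosen for this example.

```python
import numpy as np

def content_read(memory, key, beta=1.0):
    """Soft read: attention weights over memory rows by similarity to key."""
    eps = 1e-8
    norms = np.linalg.norm(memory, axis=1) * np.linalg.norm(key) + eps
    sim = (memory @ key) / norms           # cosine similarity for each row
    scores = beta * sim
    scores = scores - scores.max()         # for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    read_vector = weights @ memory         # weighted sum of all rows
    return read_vector, weights
```

Because the read touches every row with a smooth weight instead of selecting a single address, the whole operation is differentiable with respect to both the key and the memory contents.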

The introduction of external memory prunes a significant portion of the search space, since we are now simply looking for an RNN that can process information stored outside of it. This pruning makes it possible to use capabilities of RNNs that were previously out of reach, as shown by the many tasks that the Neural Turing Machine and its successors can learn: copying input sequences after viewing them, emulating N-gram models, implementing question-answering systems, traversing and navigating graphs, finding the shortest path in a graph, and solving reinforcement learning tasks.

Here is a list of names of some neural networks with external memory:

Graph Neural Network

Neural stack machine (neural stack)

Neural queue

Neural deque

Pointer Network

End-to-end memory network

Memory network

Dynamic memory network

Neural map

Neural Turing Machine

Differentiable Neural Computer

The concept of working memory

The concept of working memory is most fully developed in psychology. It explains how tasks involving the short-term manipulation of information are performed. The principle is that a “central executive” focuses attention and performs operations on the data in a memory buffer. Psychologists have extensively studied the capacity limits of human working memory, which are often quantified by the number of pieces of information that can be easily remembered. These limits help explain the constraints on human memory, but in the NTM and Differentiable Neural Computer (DNC) models the size of working memory can be set arbitrarily.

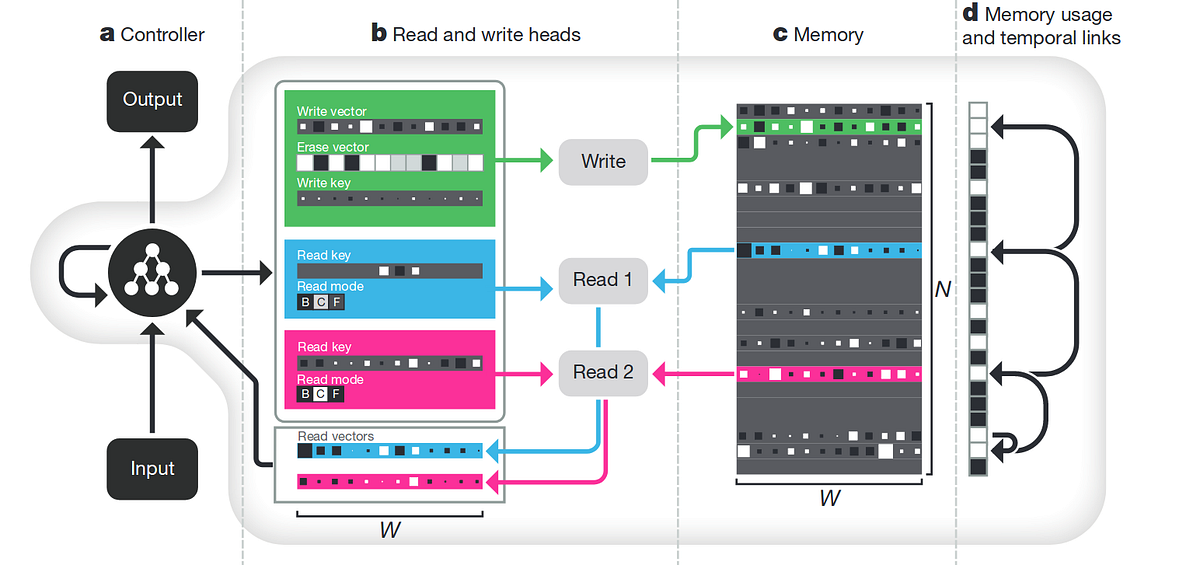

Differentiable Neural Computer

A differentiable neural computer can be trained using either supervised learning or reinforcement learning techniques.

The entire system is differentiable and can therefore be trained end-to-end using variants of gradient descent with backpropagation, allowing the network to learn how to organize external memory in a targeted manner.

A Neural Turing Machine can retrieve information from memory in the order of its slot indices, but not in the order in which the memory slots were written. A Differentiable Neural Computer can also retrieve information in the order of writing.
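Retrieval in write order can be illustrated with a temporal link matrix, the mechanism described for the DNC in the literature: entry `L[i, j]` is close to 1 when slot `i` was written immediately after slot `j`. The sketch below is a simplified NumPy version for a single write head. The function names `update_links` and `forward_weights` are assumptions made for this example.

```python
import numpy as np

def update_links(L_prev, p_prev, w_write):
    """Update the link matrix L and precedence vector p after one write."""
    w_i = w_write[:, None]
    w_j = w_write[None, :]
    # Old links touching the written slots fade; new links point from the
    # slot written now (row i) to the slot written just before (column j).
    L = (1.0 - w_i - w_j) * L_prev + w_i * p_prev[None, :]
    np.fill_diagonal(L, 0.0)               # a slot never links to itself
    # p records how strongly each slot was the most recent one written.
    p = (1.0 - w_write.sum()) * p_prev + w_write
    return L, p

def forward_weights(L, w_read_prev):
    """Shift the previous read focus to the slot that was written next."""
    return L @ w_read_prev
```

For example, after one-hot writes to slots 2, 0, and 1 (in that order), applying `forward_weights` to a read focused on slot 2 moves the focus to slot 0, and applying it again moves the focus to slot 1, regardless of the slot indices.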

The principles of operation of a differentiable neural computer can be read here.

The results of LSTM, NTM, and DNC on the bAbI tasks are presented in tables.

Disadvantages of DNC

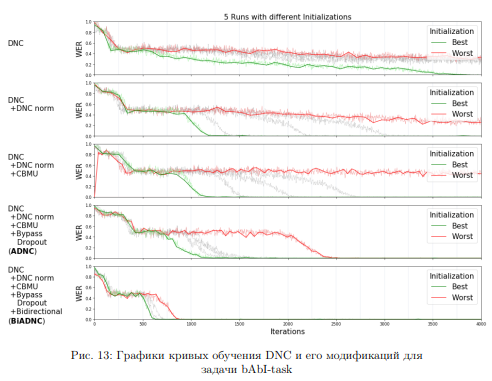

Here we analyze the DNC on QA tasks. In work [29], four main problems were identified:

high memory consumption makes it difficult to efficiently train large models;

large variation in training performance across different initializations;

slow and unstable convergence requires a long training time;

the unidirectional architecture makes it difficult to deal with different kinds of questions in QA tasks.