Applications and services in Docker

Docker owes its popularity to containerization and, above all, to the tooling it provides for running applications in containers. In this article, we will look at running applications and services in Docker containers, see how a service differs from an ordinary application, and learn how to work with both.

Containers and images

Newcomers to Docker are sometimes confused by the difference between an image and a container, so let's sort it out. An image is a read-only template that packages everything needed to run a program in a Docker container: the file system contents, the libraries, and the default run configuration. The image does not change while a container is running; any changes the container makes are written to its own writable layer.

A container, in turn, is a running instance of an image. A running container resembles a virtual machine, but it does not have its own operating system: essentially, a container is just another process on your machine, isolated from all other processes on the host and sharing the host's kernel. The software inside a container was installed when the image was built, following the instructions used to create that image. We can also control how strongly the container's network, storage, and other subsystems are isolated from other containers and from the host. You can run as many containers as the host's resources allow; they all share the host's infrastructure and operating system kernel. Every container needs an image, so the first time a container is started from a given image, Docker downloads that image. On subsequent launches no download is needed, because the image is already stored locally.

Working with Applications

So what happens when we start a container? As a classic example, consider running hello-world:

docker run hello-world

The docker run command tells Docker to run an application in a container. Docker first looks for an image named hello-world in its local image store. If the image is not there, the Docker daemon contacts Docker Hub and requests the image with that name from the public repository. Docker then downloads the image (with the latest tag, since no other tag was given) together with any layers it depends on, and, following the default settings stored in the image, starts the simple hello-world program in the container. As you can see, everything is quite simple here.

Ready-made images are convenient, but in practice we usually need something tailored to our own needs, and that means building our own image.

To create our own image based on an existing one, we prepare a special file, the Dockerfile, that contains all the instructions needed to build it. A Dockerfile is a text document containing all the commands a user could call on the command line to assemble an image.

Here is an example of such a file for hello-world:

FROM busybox:latest

CMD echo Hello World!

To build the image and run a container from it, run the following commands in the directory containing the Dockerfile:

docker image build -t hello-world .

docker run hello-world

The -t switch assigns a name (tag) to the image. When the container starts, it prints Hello World! to the console.

The first line of the Dockerfile takes the busybox image as the base, and the second line tells Docker to run the command echo Hello World! when the container starts. This shows that Docker can build images automatically by reading the instructions from a Dockerfile.

Each instruction is executed on top of the result of the previous one. Instructions that change the file system (RUN, COPY, and ADD) record their result in a new layer, which the subsequent steps of the Dockerfile then build on. Let's look at a few slightly more complex examples.
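Before moving on to those examples, the layering idea can be made concrete with a small shell model. It is a sketch, not Docker's implementation: it borrows the ChainID scheme from the OCI image specification, where the identifier of a stack of layers is the digest of the identifier of the stack below it plus the new layer's own digest (DiffID). As a simplifying assumption, the model hashes the instruction text instead of the real layer contents, and the function names digest and chain_ids are hypothetical. It relies on sha256sum from GNU coreutils.

```shell
#!/bin/sh
# Simplified model of OCI image ChainIDs (illustration only, not Docker's code).
# ASSUMPTION: the instruction text stands in for the real layer contents (DiffID).

# digest STRING: print "sha256:<hex>" for STRING
digest() {
  printf 'sha256:%s' "$(printf '%s' "$1" | sha256sum | cut -d' ' -f1)"
}

# chain_ids: read one layer-creating instruction per line from stdin and
# print the ChainID of the whole stack after each layer is added.
chain_ids() {
  chain=""
  while IFS= read -r instr; do
    [ -z "$instr" ] && continue           # skip blank lines
    diff_id=$(digest "$instr")
    if [ -z "$chain" ]; then
      chain=$diff_id                      # bottom layer: ChainID = DiffID
    else
      chain=$(digest "$chain $diff_id")   # every other layer depends on the stack below
    fi
    printf '%s\n' "$chain"
  done
}

# Two builds that differ only in their second instruction.
build_a=$(printf 'COPY . ./app\nRUN apk add bash\nRUN mkdir /a_directory\n' | chain_ids)
build_b=$(printf 'COPY . ./app\nRUN apk add curl\nRUN mkdir /a_directory\n' | chain_ids)

echo "$build_a"
echo "$build_b"
```

In the output, the first identifier is the same for both builds, while the third one differs even though its instruction is identical, because it sits on top of a different second layer. The same dependency is why changing an early instruction in a Dockerfile forces every later layer to be rebuilt.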

In the following example, we start from the busybox image, declare the environment variable FOO=/bar, set the working directory to the value of FOO, and then copy to /quux a file whose name is the literal string $FOO (the backslash escapes the dollar sign, so no substitution takes place).

FROM busybox

ENV FOO=/bar

WORKDIR ${FOO}

COPY \$FOO /quux

Here WORKDIR ${FOO} expands to WORKDIR /bar. As you can see from this example, Dockerfile instructions can use environment variables when working with directories.
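Dockerfile environment replacement deliberately follows shell syntax: $variable and ${variable} are equivalent, a backslash in front of the dollar sign suppresses substitution, and the modifiers ${variable:-word} and ${variable:+word} mean "the value, or word if unset" and "word if set, otherwise empty". Because of that, the substitutions can be previewed in an ordinary POSIX shell. The sketch below only illustrates those rules; FOO comes from the example, while UNSET_VAR and the words /default and /alt are made up.

```shell
#!/bin/sh
# Preview Dockerfile-style variable substitution in a POSIX shell (illustration only).
FOO=/bar
unset UNSET_VAR                        # hypothetical variable that is deliberately not set

workdir=${FOO}                         # like WORKDIR ${FOO}   -> /bar
copy_src=\$FOO                         # like COPY \$FOO /quux -> literal name $FOO
with_default=${UNSET_VAR:-/default}    # variable unset, so the default word is used
with_alt=${FOO:+/alt}                  # FOO is set, so the alternative word is used

printf 'WORKDIR %s\n' "$workdir"
printf 'COPY %s /quux\n' "$copy_src"
printf 'default: %s, alternative: %s\n' "$with_default" "$with_alt"
```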

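The next example goes beyond directory operations, although those are present too: it uses the RUN and CMD instructions to execute commands. RUN executes a command while the image is being built and saves the result in a new layer, whereas CMD and ENTRYPOINT determine which command runs when the container starts. The two are not equivalent: CMD sets a default that is easily overridden by arguments to docker run, while ENTRYPOINT fixes the executable, and if both are present, CMD supplies the default arguments for ENTRYPOINT.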

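FROM python:3.7.2-alpine3.8

RUN apk update && apk upgrade && apk add bash

WORKDIR /app

COPY . /app

ADD https://content_file /my_app_directory/

RUN ["mkdir", "/a_directory"]

CMD ["python", "./my_script.py"]

Here WORKDIR /app makes /app the current directory, so the relative path ./my_script.py in CMD resolves to the script copied there from the build context, and the trailing slash tells ADD to download the file into the /my_app_directory/ directory. RUN, CMD, and ENTRYPOINT each have two forms, exec and shell. In the example above, RUN uses both forms (the shell form for apk, the exec form for mkdir), while CMD uses only the exec form. For ENTRYPOINT and CMD, the two forms look like this: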

ENTRYPOINT ["executable", "param1", "param2"]

ENTRYPOINT command param1 param2

CMD ["executable","param1","param2"]

CMD command param1 param2
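The practical difference between the two forms is who interprets the command line. In shell form, Docker runs the command through /bin/sh -c, so variables are expanded; in exec form, the executable is started directly with the listed arguments and no shell processing happens. The sketch below imitates both cases in an ordinary shell. GREETING is a made-up variable, and env is used only to start echo directly, without a shell.

```shell
#!/bin/sh
# Imitate how Docker starts CMD in shell form vs exec form (illustration only).
GREETING='Hello World!'
export GREETING   # visible to child processes, like a variable set with ENV

# Shell form: CMD echo $GREETING
# Docker wraps it as /bin/sh -c 'echo $GREETING', and the shell expands the variable.
shell_form=$(/bin/sh -c 'echo $GREETING')

# Exec form: CMD ["echo", "$GREETING"]
# Docker starts echo directly with the literal argument "$GREETING"; nothing expands it.
exec_form=$(env echo '$GREETING')

printf 'shell form: %s\n' "$shell_form"
printf 'exec form:  %s\n' "$exec_form"
```

This is also one reason the exec form is usually preferred for CMD and ENTRYPOINT: the program becomes the container's main process and receives signals such as the one sent by docker stop, whereas in shell form it may run as a child of /bin/sh, which does not forward signals to it.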

One more example, useful in practice, builds a container with the Nginx web server. At build time, it copies content and configuration files from local directories into the image, and it declares a volume (the VOLUME instruction) so that the event logs can be stored on an external resource mounted into the container.

FROM nginx

COPY content /usr/share/nginx/html

COPY conf /etc/nginx

VOLUME /var/log/nginx/log

Once the content and conf directories are prepared, we can build the image and start the container with the commands we already know:

docker image build -t nginx .

docker run -p 8080:80 nginx

Here, when starting the container, we published the container's port 80 on port 8080 of the host, so the web server is reachable at http://localhost:8080.

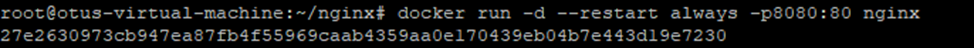
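The order in -p HOST:CONTAINER is easy to mix up. The small helper below is hypothetical and handles only the simple two-number form used in this article, not the variants with an IP address or a protocol suffix that docker run also accepts; it just splits the value to show which side is which.

```shell
#!/bin/sh
# Hypothetical helper: explain a simple "-p HOST_PORT:CONTAINER_PORT" value.
describe_publish() {
  host_port=${1%%:*}        # text before the first colon
  container_port=${1#*:}    # text after the first colon
  printf 'host port %s -> container port %s\n' "$host_port" "$container_port"
}

describe_publish 8080:80    # the value used with the nginx container
```

Requests sent to port 8080 on the host therefore reach nginx listening on port 80 inside the container.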

When You Need a Daemon

In the nginx example, we created and launched the container, but it runs in the foreground, attached to the console, which is not very convenient, especially in a production environment. It is much more convenient to run the container in the background as a service, like a daemon in Linux. This is what the -d (detached) switch of docker run is for.

docker run -d -p 8080:80 nginx

Now the container runs in daemon mode. In production, however, we also need to be sure that the container is restarted automatically after any failure.

Restart Policy

Docker's restart policy specifies whether a container should be restarted when it exits. Four values are possible:

no – do not restart the container automatically (default option)

on-failure – restart the container if it exits due to an error (a non-zero exit code)

always – always restart the container if it stops. If the container is manually stopped, it only restarts when the Docker daemon is restarted.

unless-stopped – similar to always, except that when the container is stopped manually, it is not restarted even after the Docker daemon restarts
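The rules in the list above can be condensed into a small decision function. This is only a model of the documented behavior, written for illustration: should_restart and its three kinds of events are hypothetical names, not part of Docker, and the optional retry limit of on-failure (on-failure:N) is ignored.

```shell
#!/bin/sh
# Model of Docker restart policies (illustration only, not Docker's code).
# should_restart POLICY EVENT [EXIT_CODE]
#   EVENT is one of:
#     exit   - the main process exited on its own with EXIT_CODE
#     stop   - the container was stopped manually (docker stop)
#     daemon - the Docker daemon restarted after a manual stop
# Returns 0 (true) if the container would be restarted.
should_restart() {
  case "$1:$2" in
    always:exit | unless-stopped:exit) return 0 ;;
    on-failure:exit) [ "${3:-0}" -ne 0 ] ;;   # only non-zero exit codes count
    always:daemon) return 0 ;;                # always revives even a manually stopped container
    *) return 1 ;;                            # no, any manual stop, and the remaining cases
  esac
}

# Print a small table of outcomes.
for policy in no on-failure always unless-stopped; do
  for event in "exit 0" "exit 1" stop daemon; do
    set -- $event                             # split "exit 1" into event name and exit code
    if should_restart "$policy" "$1" "${2:-0}"; then answer=yes; else answer=no; fi
    printf '%-15s %-7s %s\n' "$policy" "$event" "$answer"
  done
done
```

The table makes the difference between always and unless-stopped visible: both restart a container that exits on its own, but only always brings back a manually stopped container when the daemon restarts.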

We can choose whichever policy suits us best. For the nginx example, I suggest the always policy. The policy is set with the --restart switch when the container is started:

docker run -d --restart always -p 8080:80 nginx

Of course, Kubernetes offers much more powerful means of keeping containers alive, but this article is devoted exclusively to Docker.

Conclusion

In this article, we looked at how to create your own images and containers and how to make them more fault-tolerant. In the next article, we will talk about a rather exotic topic: Docker inside Docker.

Finally, I recommend the public lesson “Kubernetes: pods, replication controllers and services”, coming soon at OTUS. At this session you will:

– understand the basic entities in K8s;

– understand how the replication controller, pod and service are related;

– learn how to provide a single entry point to pods and keep the required number of them running for stable operation.