Inexpensive Windows 10 with a Tesla T4 on Azure, using Stable Diffusion and Automatic1111 as an example

Introduction

Since I like a high degree of control, the various Discord / Telegram bots didn't suit me, except perhaps for getting a first taste. And how do you manage without inpaint there…

The next step is Colab. Quite a lot can be generated within the free limits. With an Automatic1111 notebook (.ipynb), control is almost complete. There were only minor troubles: posex wouldn't open, so I installed openpose-editor instead, though I never got canvas-zoom working. And with a shared drive attached, so that one installation can be used from different accounts, it's quite nice overall. And yet I wanted to try something “completely my own.”

Since I don't need it 8 hours a day, and I don't have a desktop computer (there is nowhere to plug in a card) but do have a laptop with Thunderbolt 3, I began looking at external GPU enclosures like AORUS. However, the used ones mostly came with 10-11GB of video memory, which is enough for generation but not much for training. It may not even be enough for Vicuna 13B (12GB is recommended). Anything more capable is too expensive to sit idle most of the time (usually a simpler card is enough), and it still might not cover the rare serious needs.

While I was thinking it over, circumstances made it clear that it was definitely not worth messing with hardware.

So I started looking at how to run it in the cloud on a pay-as-you-go basis.

Options

One of the main requirements was using a spot instance to keep it cheap, so “ready-made” scripts were not really suitable. I wanted to launch the instance exactly the way I needed and set everything up inside it myself. Paying for a ready-made marketplace image didn't appeal to me either.

And preferably (though not necessarily) Windows [10], because there are fewer driver problems and there is an excellent portable build…

On AWS, the prices seem reasonable.

g4ad.xlarge (4 vCPU, 16GB RAM): about $0.15 per hour as a spot instance. However, it has an AMD Radeon Pro V520 GPU, so Stable Diffusion would require a lot of workarounds.

g4dn.xlarge (also 4/16) has an NVIDIA T4 GPU, which is a completely different matter. But it already costs $0.20 per hour (spot, of course).

However, Windows Server costs extra. And to run Windows 10 with your own license, you must first build a VHD locally and upload it to AWS. That is not trivial, although a description exists if anyone needs it.

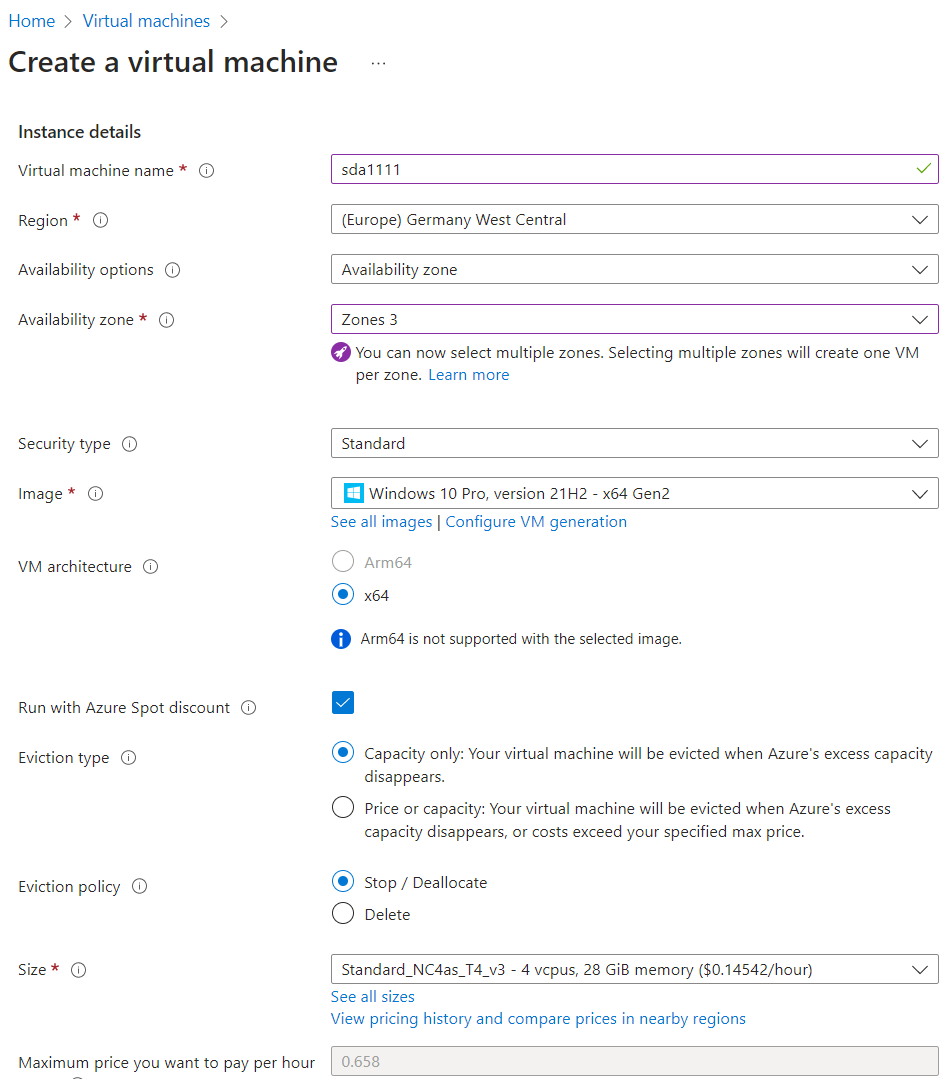

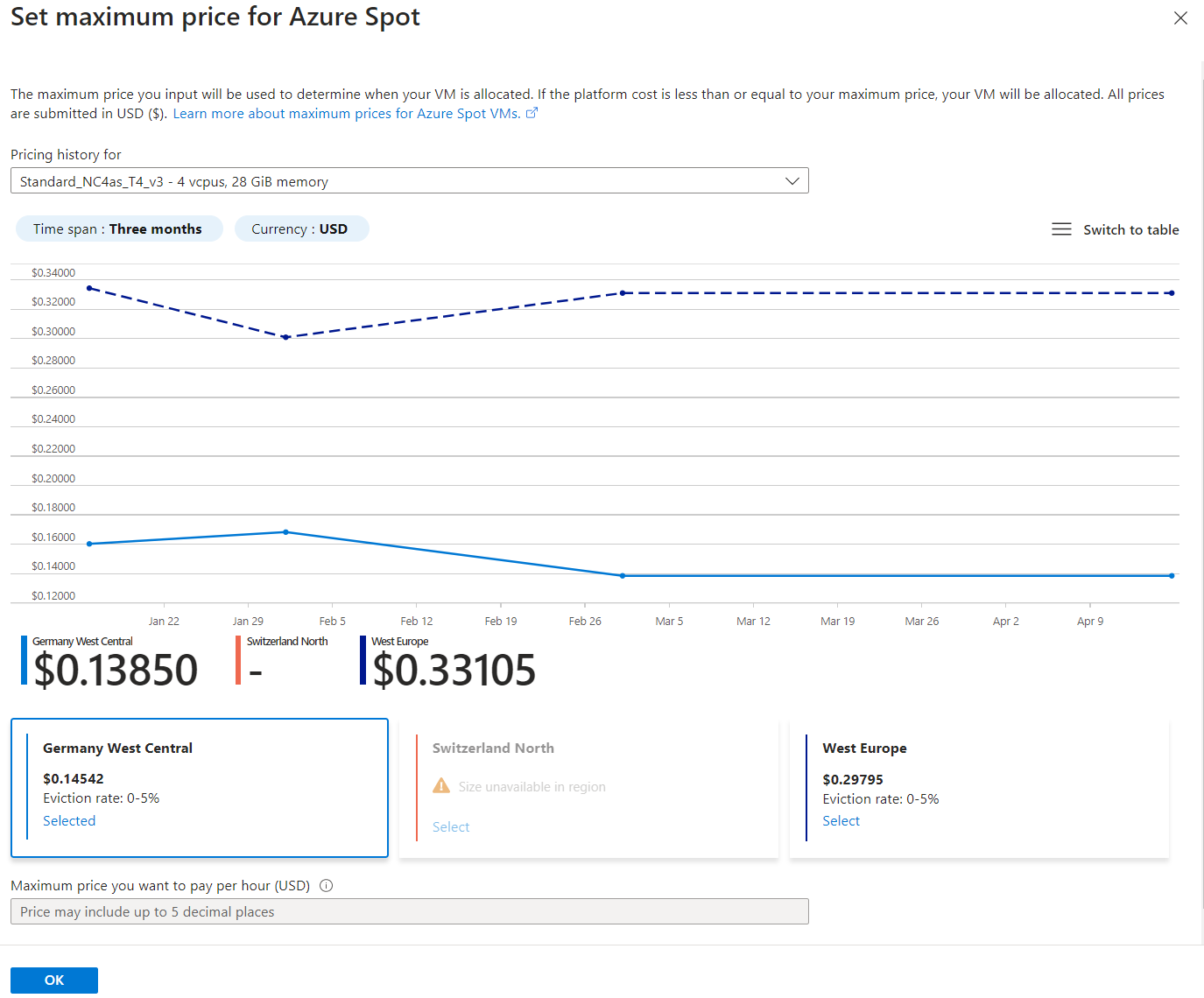

Then I looked at Azure. There, Windows 10 images are ready to launch, and you can confirm that you have your own license (I really do have one). So I settled on NC4as_T4_v3 (4 vCPU, 28GB RAM, also a Tesla T4) at $0.15/hour as a spot instance.

Installation

I won't describe everything in detail; I'll give screenshots for reference and point out the key points.

Type

I'll say right away that this is not the best option. With this (default) choice of Availability options, you can indeed find a zone (zone 3 in this case) with cheap instances. Moreover, View pricing history shows that in West Europe it is much more expensive:

However, in this case your public IP address will be a Standard SKU, static (reserved) one, and you will be charged for it even while the virtual machine is off (about $2.5 per month).

It's better to specify that no infrastructure redundancy is required:

Set a password for the administrator.

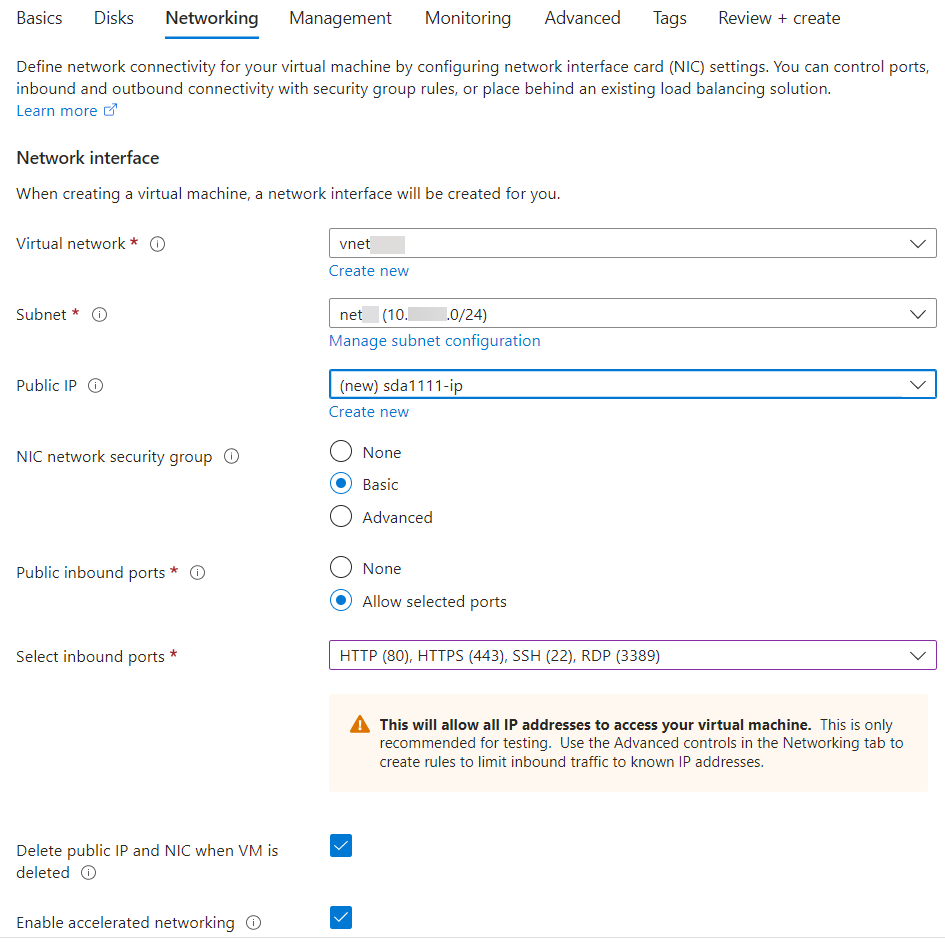

I opened ports 80 and 443 in case I publish the SD web interface for myself, possibly with Let's Encrypt. I also opened SSH; I'll explain the idea later.

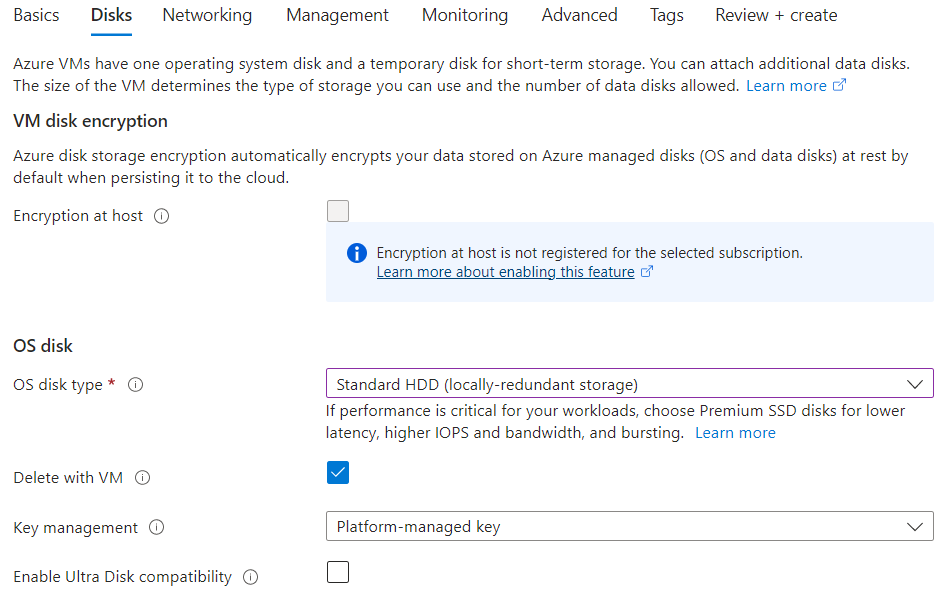

Disk

Even while the virtual machine is off, you pay for the disk. And this image comes with a sizable one, 128GB (you can't change it in the portal; apparently it can be reduced when deploying through Terraform). So I chose a Standard HDD. It costs about $6 per month, and I wouldn't say it slows things down much. Rebooting is also extremely fast; my laptop on NVMe takes longer to boot (although a lot of things are installed on it).

You can also choose an SSD; then it comes to about $10 a month.

Networking

It is better to explicitly create a public IP address (Create new) and choose the Basic SKU with Dynamic assignment.

Extensions

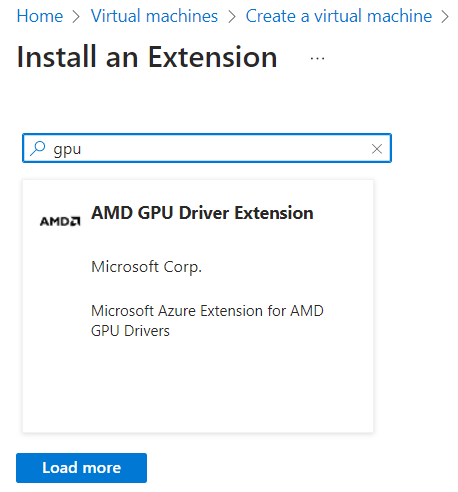

We need drivers for the Tesla T4. Let's add the extension right away (Select an extension to install):

And here is a surprise: for GPUs, only the AMD extension is listed.

You need to click Load more, and NVIDIA will appear.

Of course, you could type NVIDIA into the search right away, but the list would stay empty until you click Load more.

It might be worth adding SSH as well. Instead of publishing the web interface, I plan to set up an SSH tunnel with key-based authentication so that the browser works locally (as with Colab): the laptop connects to 127.0.0.1:7860, but the traffic actually travels through the SSH tunnel to the virtual machine. Of course, you can also just work over RDP without exposing the web interface at all.
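A minimal sketch of that tunnel, assuming the OpenSSH client on the laptop, an SSH server reachable on the VM, and the web UI on its default port 7860. The user name `azureuser` and the key path are hypothetical placeholders, not values from this setup.

```python
from __future__ import annotations

import os
import shlex


def ssh_tunnel_argv(host: str, user: str, key_path: str,
                    local_port: int = 7860, remote_port: int = 7860) -> list[str]:
    """Build the argv for an OpenSSH local port forward to the web UI.

    -N  forward ports only, run no remote command
    -i  private key used for key-based authentication
    -L  listen on 127.0.0.1:local_port on the laptop and forward each
        connection to 127.0.0.1:remote_port as seen from the VM
    """
    return [
        "ssh", "-N",
        "-i", os.path.expanduser(key_path),
        "-L", f"127.0.0.1:{local_port}:127.0.0.1:{remote_port}",
        f"{user}@{host}",
    ]


if __name__ == "__main__":
    # Hypothetical user name and key path; the host is the VM's DNS name.
    argv = ssh_tunnel_argv("sda1111.germanywestcentral.cloudapp.azure.com",
                           "azureuser", "~/.ssh/id_ed25519")
    print(shlex.join(argv))
    # To open the tunnel for real: subprocess.run(argv, check=True),
    # then browse to http://127.0.0.1:7860 on the laptop.
```

Because both ends bind to 127.0.0.1, the web UI never has to listen on a public interface in this arrangement.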

Then confirm that everything looks right.

And wait until everything is installed, including the drivers.

After creation

A few things need to be set up. Since we want to save on the IP address, we get a new one every time the VM starts, so it is more convenient to connect by DNS name. Click Not configured next to DNS name.

Enter the desired DNS name label (mine is sda1111), then click Save.

Now the DNS name sda1111.germanywestcentral.cloudapp.azure.com is shown. We can connect to it via RDP (that is, save it in the RDP client and connect with one click), even though the IP changes on every start.
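The DNS name is the label, then the region, then a fixed Azure suffix. The same label can also be set from the Azure CLI instead of the portal. This sketch only builds the command. The resource group name `sd-rg` and the public IP resource name `sda1111-ip` are hypothetical.

```python
from __future__ import annotations


def azure_fqdn(dns_label: str, region: str) -> str:
    """DNS name Azure gives a public IP: <label>.<region>.cloudapp.azure.com."""
    return f"{dns_label}.{region}.cloudapp.azure.com"


def set_dns_label_argv(resource_group: str, public_ip_name: str,
                       dns_label: str) -> list[str]:
    """Azure CLI equivalent of 'Not configured' -> enter a label -> Save."""
    return ["az", "network", "public-ip", "update",
            "--resource-group", resource_group,
            "--name", public_ip_name,
            "--dns-name", dns_label]


if __name__ == "__main__":
    print(azure_fqdn("sda1111", "germanywestcentral"))
    # Hypothetical resource names; execute with subprocess.run(argv, check=True).
    print(" ".join(set_dns_label_argv("sd-rg", "sda1111-ip", "sda1111")))
```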

In the screenshot above, IP address assignment offered only the Static option (the expensive one, because of the redundancy choice at the very beginning of the configuration).

If no redundancy was selected, Dynamic is available, and that is what we need.

The virtual machine must be stopped to change this, so it can also be done later (if you forgot at the creation stage).

If something doesn't work out, you can simply delete the public IP and create a new one. Just dissociate it first, then associate the newly created, correctly configured one.

Important point

Even after a shutdown from inside the virtual machine (Windows has stopped), the resources remain allocated, and Azure keeps charging as if the virtual machine were running. Don't forget to Stop (deallocate) it.

To be safe, I recommend configuring auto-shutdown.

To finish with the Azure interface, one more thing for the future: in case a single NVIDIA Tesla T4 is not enough for something really big, you can resize a stopped virtual machine to NC64as_T4_v3 with four Teslas.
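The same housekeeping can be scripted with the Azure CLI. This sketch only builds the commands; you would run each one with subprocess.run(argv, check=True). The resource group and VM names in the usage lines are hypothetical. The auto-shutdown time is given in UTC as HHMM. The power-state check relies on az vm show --show-details, which reports states such as “VM stopped” (shut down from inside Windows, still billed) and “VM deallocated”.

```python
from __future__ import annotations


def deallocate_argv(rg: str, vm: str) -> list[str]:
    # Stop (deallocate): releases the compute so the VM size is no longer billed
    return ["az", "vm", "deallocate", "--resource-group", rg, "--name", vm]


def auto_shutdown_argv(rg: str, vm: str, hhmm_utc: str) -> list[str]:
    # Daily auto-shutdown schedule; time is UTC in HHMM form, e.g. "2200"
    return ["az", "vm", "auto-shutdown", "--resource-group", rg,
            "--name", vm, "--time", hhmm_utc]


def resize_argv(rg: str, vm: str, size: str = "Standard_NC64as_T4_v3") -> list[str]:
    # Resize a stopped VM, e.g. to the four-T4 size
    return ["az", "vm", "resize", "--resource-group", rg, "--name", vm,
            "--size", size]


def power_state_argv(rg: str, vm: str) -> list[str]:
    # Prints a state such as "VM running", "VM stopped" or "VM deallocated"
    return ["az", "vm", "show", "--show-details", "--resource-group", rg,
            "--name", vm, "--query", "powerState", "--output", "tsv"]


def compute_may_be_billed(power_state: str) -> bool:
    # Conservative: anything other than fully deallocated is treated as billed,
    # including "VM stopped" after a shutdown from inside Windows
    return power_state.strip() != "VM deallocated"


if __name__ == "__main__":
    # Hypothetical resource names
    for argv in (deallocate_argv("sd-rg", "sda1111"),
                 auto_shutdown_argv("sd-rg", "sda1111", "2200"),
                 power_state_argv("sd-rg", "sda1111")):
        print(" ".join(argv))
```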

In Windows

Checking drivers

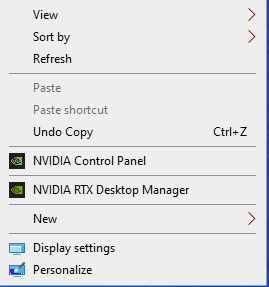

NVIDIA Control Panel is there. A good start.

Device Manager:

There is our Tesla T4. However, it is a separate adapter; we are currently working through the Microsoft Remote Display Adapter. Maybe that's why I don't see the GPU:

Perhaps something needs to be enabled for that, but I didn't look into it.

I need to generate pictures quickly, not play games on a virtual desktop.

Let's see what NVIDIA's own utility shows (drag and drop nvidia-smi into cmd):

Like in Colab 🙂 Tesla T4, 16GB VRAM.

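It reports CUDA 11.4, which can be upgraded to version 12 (nvidia-smi shows the highest CUDA version the installed driver supports, so this means a driver update).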
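The same check can be scripted instead of reading the nvidia-smi table by eye. This is a sketch using nvidia-smi's CSV query mode. name, memory.total and driver_version are standard --query-gpu fields, and with nounits the memory is reported in MiB. The parser is kept separate from the subprocess call so that it can be tried on a pasted line.

```python
from __future__ import annotations

import subprocess
from dataclasses import dataclass

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total,driver_version",
         "--format=csv,noheader,nounits"]


@dataclass
class GpuInfo:
    name: str
    memory_total_mib: int
    driver_version: str


def parse_gpu_csv(text: str) -> list[GpuInfo]:
    """Parse 'name, memory, driver' lines: one line per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        name, mem, driver = (field.strip() for field in line.split(","))
        gpus.append(GpuInfo(name, int(mem), driver))
    return gpus


def query_gpus() -> list[GpuInfo]:
    """Run nvidia-smi (must be on PATH) and parse its output."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)

# Usage on the VM:
#   for gpu in query_gpus():
#       print(gpu.name, gpu.memory_total_mib, "MiB, driver", gpu.driver_version)
```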

If anyone is interested, dxdiag:

Installing Stable Diffusion

I will use a convenient portable build: https://github.com/serpotapov/stable-diffusion-portable

There is a video about it here: https://www.youtube.com/watch?v=jepK6ufemMw. I highly recommend this channel and its author.

I download the single-file zip archive from the link in step 1.

I unpack the archive to the drive root and, for convenience, rename the folder to just sd.

I run webui-user-first-run. A rather lengthy process starts.

Meanwhile, I download the first model (the web UI won't start without one). Step 4 has a link to Deliberate, which is just what's needed.

As soon as models\Stable-diffusion appears in my sd folder, I put the model there. You can also download a VAE and so on while waiting, but all of that can be done later; a model is enough to start.

While everything is installing, I add --theme dark to the main launch file webui-user.bat (also optional).
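To script this edit, the sketch below appends a flag to the set COMMANDLINE_ARGS= line. It assumes the file contains such a line, as the stock Automatic1111 webui-user.bat does; I haven't checked the portable build's exact layout. The C:\sd path in the usage comment is also an assumption, based on unpacking to the drive root.

```python
from __future__ import annotations

from pathlib import Path

PREFIX = "set COMMANDLINE_ARGS="


def add_commandline_arg(bat_text: str, arg: str = "--theme dark") -> str:
    """Append `arg` to the 'set COMMANDLINE_ARGS=' line; other lines are untouched."""
    lines = bat_text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        if line.lower().startswith(PREFIX.lower()):
            body = line.rstrip("\r\n")
            ending = line[len(body):]          # keep the original line ending
            head = body[:len(PREFIX)]          # keep the original spelling/case
            current = body[len(PREFIX):].strip()
            if arg in current:
                return bat_text                # already present, nothing to do
            lines[i] = f"{head}{(current + ' ' + arg).strip()}{ending}"
            return "".join(lines)
    raise ValueError("no 'set COMMANDLINE_ARGS=' line found")

# Usage (hypothetical path):
#   bat = Path(r"C:\sd\webui-user.bat")
#   bat.write_text(add_commandline_arg(bat.read_text()))
```

Running it twice is harmless, because an argument that is already present is left alone.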

An error may appear after installation is complete.

Don't worry about it, as long as it isn't “no GPU found”.

Just close this window, start webui-user.bat as usual, and wait for the link to the web interface.

The browser may even open by itself.

You definitely shouldn't use share=True for external access. There are other options: for example, Khachatur describes them in the video linked above, and there are extensions, including ones for SSL. I'm planning to use an SSH tunnel.

For now, I just work from inside RDP.

The first generations may be slow. The console shows that the system is downloading something.

In the end, we get almost 7 iterations per second. In Colab I usually get 2.5-3.5 it/s.

I also ran a 512×512 generation: Euler a, 20 sampling steps, batch count 24. It completed in 90 seconds.

24 images × 20 steps / 90 s ≈ 5.3 it/s. It really feels faster than Colab.
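Here is the arithmetic above as a tiny helper, using the numbers from this run. Comparing the result with the ~7 it/s shown in the console gives a rough idea of how much of the 90 seconds went to work other than the sampling steps themselves.

```python
def effective_its(batch_count: int, steps: int, seconds: float) -> float:
    """Average sampling steps per second over the whole run.

    Uses wall-clock time, so everything between sampling steps
    (e.g. decoding and saving each image) is counted as well.
    """
    return batch_count * steps / seconds


if __name__ == "__main__":
    print(f"{effective_its(24, 20, 90):.2f} it/s")  # prints 5.33 it/s
    # At ~7 it/s, the 480 sampling steps alone would take about:
    print(f"{24 * 20 / 7:.1f} s")                   # prints 68.6 s
```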

What is the final cost?

Let's assume usage of “an hour a day”: skip a day or two, sit longer on weekends, roughly 30 hours a month. I'll probably spend even less time on SD; at first it may be about that much, but later it will clearly decrease.

30 hours per month × $0.15 = $4.50 for virtual machine run time.

The S10 128GB drive costs $5.89 per month.

Disk transactions: “We charge $0.0005 per 10,000 transactions for Standard HDDs”. I can't estimate this precisely, since I don't know how intensively SD uses the disk. But even the slow HDD I chose was only lightly loaded at peaks, and the delays I noticed didn't seem to come from the disk. So it's unlikely to cost much.

There is also another option: virtual machines of this type come with a temporary (“ephemeral”) disk that exists only for the duration of the current run. It might make sense to copy disk-intensive data to it first and read it from there, rather than only putting the swap file and temp directory there. Although with 28GB of RAM (significantly more than a comparable AWS instance), I think everything is cached quite well.

In other words, about $10-11 a month for “learning and having fun.” And since it literally takes an hour to set up, you could even spin it up only “for the duration of a vacation”.

For professional use, “8 hours a day alongside Photoshop” gets a bit expensive, although $5.89 + 22 days × 8 h × $0.15 ≈ $32 per month works out, over 3 years, to about the price of a video card. Given rapidly changing circumstances and mobility requirements, it can also be a way to try things out and decide how much you need your own card, with what performance and how much memory…
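The estimates above can be put in one small cost model. The defaults are the prices quoted in this article, and spot prices change over time. The dynamic public IP is not included. The last line shows why disk transactions hardly matter: even 10 million of them add only $0.50.

```python
def monthly_cost(hours: float, vm_per_hour: float = 0.15,
                 disk_per_month: float = 5.89, transactions: int = 0,
                 per_10k_transactions: float = 0.0005) -> float:
    """Rough monthly bill in USD: spot VM time + S10 HDD + disk transactions."""
    return (hours * vm_per_hour
            + disk_per_month
            + transactions / 10_000 * per_10k_transactions)


if __name__ == "__main__":
    hobby = monthly_cost(30)        # roughly an hour a day
    pro = monthly_cost(22 * 8)      # 22 working days x 8 hours
    print(f"hobby: ${hobby:.2f}/month")                                # $10.39
    print(f"pro:   ${pro:.2f}/month, ${pro * 36:.0f} over 3 years")  # $32.29
    extra = monthly_cost(0, transactions=10_000_000) - monthly_cost(0)
    print(f"10M HDD transactions add ${extra:.2f}")                    # $0.50
```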

In theory, a couple of people could work with one such virtual machine over the web at the same time (unless it's video, where many frames are generated in a row). At least I've run it from different browsers simultaneously and it was fine (there is just a wait while the other session's generation finishes). It would be great if each user could get their own output directory, for example by adding the IP address to its name. Or the Stable Diffusion part (models) could be shared, while each user has their own web UI with its own settings; one could even run Automatic1111 and the other ComfyUI.
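This is only a hypothetical sketch of the naming part of such per-user output directories. The helper and the outputs-<ip> scheme are my own assumptions, and wiring them into a web UI is not shown. One caveat: behind an SSH tunnel, every user reaches the web UI from 127.0.0.1 on the VM, so per-IP directories would only help for direct connections.

```python
from __future__ import annotations

import ipaddress
from pathlib import Path


def per_client_output_dir(base: Path, client_ip: str) -> Path:
    """Hypothetical helper: one output subdirectory per client IP.

    The address is validated (ValueError on malformed input) and IPv6 colons
    are replaced, because ':' is not allowed in Windows file names.
    """
    ip = ipaddress.ip_address(client_ip)
    return base / f"outputs-{str(ip).replace(':', '-')}"
```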

Questions

I'm very interested in how the video card is shared between applications. Say I have Stable Diffusion running, but nothing is being generated at the moment. Can I run something else, say LLaMA/Alpaca/Vicuna?

That is, while a picture is being generated, text queries wait; while text is being generated, pictures wait. Or does SD take the card exclusively and keep the model and everything else in video memory all the time?

I've seen something like “GKE’s GPU time-sharing feature allows you to partition a single NVIDIA A100 GPU into up to seven instances” and “You can configure time-sharing GPUs on any NVIDIA GPU on GKE including the A100”. The first option (“several small cards instead of one powerful one”) doesn't particularly interest me. With the second, it's unclear what happens to loaded textures, models and so on under frequent context switching, and whether the software must support it (it would have to notice that someone has evicted the textures it previously loaded into video memory).
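To see what actually holds video memory while SD sits idle, nvidia-smi can list the compute processes. The sketch below parses its CSV query output. Per-process memory may show up as [N/A] in some driver modes, and the parser maps that to None. Process names are split off carefully in case a Windows path contains a comma.

```python
from __future__ import annotations

import subprocess
from typing import Optional

QUERY = ["nvidia-smi", "--query-compute-apps=pid,process_name,used_memory",
         "--format=csv,noheader,nounits"]


def parse_compute_apps(text: str) -> list[tuple[int, str, Optional[int]]]:
    """Parse 'pid, process_name, used_memory' lines into (pid, name, MiB or None)."""
    apps = []
    for line in text.strip().splitlines():
        pid, rest = line.split(",", 1)     # pid is the first field
        name, mem = rest.rsplit(",", 1)    # memory is the last field
        mem = mem.strip()
        apps.append((int(pid), name.strip(), int(mem) if mem.isdigit() else None))
    return apps


def total_known_mib(apps: list[tuple[int, str, Optional[int]]]) -> int:
    """Sum the memory of processes that report a number."""
    return sum(used for _, _, used in apps if used is not None)


def query_compute_apps() -> list[tuple[int, str, Optional[int]]]:
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_compute_apps(out)

# Usage on the VM, with the web UI idle:
#   apps = query_compute_apps()
#   print(apps, total_known_mib(apps), "MiB in use")
```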

The Remoting API does not perform well (Performance ranges from 86% to as low as 12% of native performance in applications that issue a large number of drawing calls per frame).

In general, I will be glad to hear your thoughts.