Unifi prometheus exporter

Hello! The other day I wanted to build graphs for all of our access points, and we have a lot of them. Some are Mikrotik-based, and those are no problem: they are easily polled via SNMP, and a single poll returns statistics for all points at once. Unifi is harder: each access point has to be polled separately, and our set of points changes from time to time, so we needed a solution that tracks these changes automatically. We found nothing ready-made on the Internet, so we decided to reinvent the wheel ourselves, but I wanted to make it as generic as possible so that others could use it too.

Our approach

We decided to write an application (a daemon) that logs in to the Unifi controller and fetches the list of access points. Then, on each request to the /metrics endpoint, it polls all access points via SNMP and returns the result in the Prometheus format.

Implementation

I will not dwell on the implementation details: I published everything on GitHub and documented it in detail. There is also a Docker image.

I will describe some of the approaches that have been used:

development language: Go

urfave/cli/v2 is the framework used to process CLI arguments and environment variables

gorilla/mux is used as the HTTP request router

a semaphore synchronization primitive limits how many access points are polled simultaneously

a mutex synchronizes the list of access points between goroutines

to poll access points, the application calls a third-party tool, snmp-exporter
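To make the semaphore and mutex items more concrete, here is a minimal sketch of how such pieces could fit together. It is not the code from the repository: the names AccessPoint, Store, snmpURL and pollAll are hypothetical, a buffered channel stands in for the semaphore, and the poll step only builds the snmp-exporter URL instead of issuing a real HTTP request.

```go
package main

import (
	"fmt"
	"net/url"
	"sync"
)

// AccessPoint is a hypothetical record for one entry of the controller's list.
type AccessPoint struct {
	Name string
	IP   string
}

// Store guards the access point list with a mutex so that an updater
// goroutine and the /metrics handler can share it safely.
type Store struct {
	mu  sync.Mutex
	aps []AccessPoint
}

// Set replaces the stored list with a copy of aps.
func (s *Store) Set(aps []AccessPoint) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.aps = append([]AccessPoint(nil), aps...)
}

// Snapshot returns a copy, so callers can iterate without holding the lock.
func (s *Store) Snapshot() []AccessPoint {
	s.mu.Lock()
	defer s.mu.Unlock()
	return append([]AccessPoint(nil), s.aps...)
}

// snmpURL builds an snmp-exporter request URL for one target.
func snmpURL(base, target string) string {
	q := url.Values{}
	q.Set("target", target)
	return base + "/snmp?" + q.Encode()
}

// pollAll calls poll for every access point, running at most `parallel`
// calls at once. parallel must be at least 1.
func pollAll(aps []AccessPoint, parallel int, poll func(AccessPoint) string) []string {
	results := make([]string, len(aps))
	sem := make(chan struct{}, parallel) // buffered channel used as a semaphore
	var wg sync.WaitGroup
	for i, ap := range aps {
		wg.Add(1)
		sem <- struct{}{} // acquire a slot; blocks while `parallel` polls are running
		go func(i int, ap AccessPoint) {
			defer wg.Done()
			defer func() { <-sem }() // release the slot
			results[i] = poll(ap)
		}(i, ap)
	}
	wg.Wait()
	return results
}

func main() {
	store := &Store{}
	store.Set([]AccessPoint{
		{Name: "Room1", IP: "10.0.0.10"},
		{Name: "Room2", IP: "10.0.0.20"},
	})
	urls := pollAll(store.Snapshot(), 10, func(ap AccessPoint) string {
		// A real poll would send an HTTP GET here; the sketch only builds the URL.
		return snmpURL("http://snmp-exporter:9116", ap.IP)
	})
	for _, u := range urls {
		fmt.Println(u)
	}
}
```

Each goroutine writes only to its own slot of the results slice, so the results need no extra locking, and taking a snapshot keeps the mutex from being held for the whole duration of a slow poll.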

Environment setup

We need to set up two applications:

snmp-exporter – the daemon that we will access from our application

unifi-prometheus-exporter – our app

I’ll say right away that I honestly dug into the snmp-exporter code, hoping to import it and call its functions natively. Unfortunately, the code is not modular and cannot be imported, so we have to run two applications.

snmp-exporter

First, run snmp-exporter, which we will use to poll our access points. I will show how to run it with docker-compose and in Kubernetes. Installing docker-compose and Kubernetes is outside the scope of this post.

The exporter listens on port 9116. To poll a remote host via SNMP, send an HTTP request to the exporter's /snmp endpoint with the module (if_mib by default) and target (the host being polled) parameters, for example:

curl http://127.0.0.1:9116/snmp?target=10.0.0.1

docker-compose

docker-compose.yaml

version: "2"
services:
  nexus:
    image: prom/snmp-exporter
    ports:
      - "9116:9116"

kubernetes

snmp-exporter.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: snmp-exporter
  labels:
    app: snmp-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: snmp-exporter
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: snmp-exporter
    spec:
      containers:
        - image: prom/snmp-exporter
          imagePullPolicy: IfNotPresent
          name: exporter
          ports:
            - containerPort: 9116
---
apiVersion: v1
kind: Service
metadata:
  name: snmp-exporter
spec:
  ports:
    - port: 9116
      protocol: TCP
      targetPort: 9116
      name: snmp-exporter
  selector:
    app: snmp-exporter

unifi-prometheus-exporter

Now we launch our application. It has a number of CLI arguments, each duplicated by an environment variable:

NAME:
   exporter - exporter of SNMP metrics from Unifi access points

USAGE:
   exporter [global options] command [command options] [arguments...]

COMMANDS:
   help, h  Shows a list of commands or help for one command

GLOBAL OPTIONS:
   --controller-login value               login for the exporter-controller [$CONTROLLER_LOGIN]
   --controller-password value            password for the exporter-controller [$CONTROLLER_PASSWORD]
   --controller-address value             address of the exporter-controller (default: "https://127.0.0.1:8443") [$CONTROLLER_ADDRESS]
   --snmp-exporter-address value          address of the snmp-exporter (default: "http://snmp-exporter:9116") [$SNMP_EXPORTER_ADDRESS]
   --access-points-update-interval value  update interval for the access point list (default: 1h0m0s) [$ACCESS_POINTS_UPDATE_INTERVAL]
   --listen-port value                    listen port of the HTTP server (default: 8080) [$LISTEN_PORT]
   --parallel value                       number of threads for polling access points (default: 10) [$PARALLEL]
   --poll-timeout value                   timeout for polling access points (default: 15s) [$POLL_TIMEOUT]
   --help, -h                             show help (default: false)

In principle, I think everything here is clear. Let's run it with docker-compose and in Kubernetes.

docker-compose

version: "2"
services:
  nexus:
    image: maetx777/unifi-prometheus-exporter
    environment:
      - CONTROLLER_LOGIN=admin
      - CONTROLLER_PASSWORD=123456
    ports:
      # the application listens on 8080 by default (LISTEN_PORT)
      - "8080:8080"

kubernetes

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: unifi-snmp-exporter
  labels:
    app: unifi-snmp-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: unifi-snmp-exporter
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: unifi-snmp-exporter
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '8080'
        prometheus.io/path: '/metrics'
    spec:
      containers:
        - image: maetx777/unifi-prometheus-exporter
          name: exporter
          ports:
            - containerPort: 8080
          env:
            - name: CONTROLLER_LOGIN
              value: admin
            - name: CONTROLLER_PASSWORD
              # env values must be strings, so the number is quoted
              value: "123456"
      restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  name: unifi-prometheus-exporter
spec:
  ports:
    - port: 8080
      protocol: TCP
      targetPort: 8080
      name: unifi-prometheus-exporter
  selector:
    # must match the pod labels in the Deployment above
    app: unifi-snmp-exporter

A few nuances to pay attention to:

CONTROLLER_LOGIN – change if necessary

CONTROLLER_PASSWORD – change if necessary 🙂

CONTROLLER_ADDRESS – set to the address of the Unifi controller, including the protocol and port, for example https://1.2.3.4:8443

SNMP_EXPORTER_ADDRESS – set to the address of the snmp-exporter launched earlier; a DNS name works too

Passing secrets in Kubernetes the way shown above is bad practice. There is a dedicated resource for this, Secret, but it is not covered here; everyone can finish that part for themselves.

After launch

After launch, we will see something like this in the unifi-prometheus-exporter logs:

INFO[0000] Daemon start
INFO[0000] Start http server
INFO[0000] Start fatals catcher
INFO[0000] Start signals catcher
INFO[0000] Start access points updater
INFO[0001] Http client authorized
INFO[0001] Update access points list
INFO[0001] Access point name Room1, ip 10.0.0.10
INFO[0001] Access point name Room2, ip 10.0.0.20

Now we can query our application to get the metrics of the discovered points:

# curl -s http://127.0.0.1:8080/metrics|grep ifOutOctets
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="ath0",ifIndex="6",ifName="ath0"} 0
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="ath1",ifIndex="7",ifName="ath1"} 3.319545249e+09
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="br0",ifIndex="9",ifName="br0"} 2.7572029e+07
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="eth0",ifIndex="2",ifName="eth0"} 4.93001573e+08
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="eth1",ifIndex="3",ifName="eth1"} 0
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="lo",ifIndex="1",ifName="lo"} 3572
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="teql0",ifIndex="5",ifName="teql0"} 0
ifOutOctets{ap_name="Room1",ap_ip="10.0.0.10",ifAlias="",ifDescr="vwire2",ifIndex="8",ifName="vwire2"} 0
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="ath0",ifIndex="6",ifName="ath0"} 0
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="ath1",ifIndex="7",ifName="ath1"} 6.28150693e+08
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="br0",ifIndex="9",ifName="br0"} 2.7178302e+07
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="eth0",ifIndex="2",ifName="eth0"} 4.95262026e+08
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="eth1",ifIndex="3",ifName="eth1"} 0
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="lo",ifIndex="1",ifName="lo"} 8180
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="teql0",ifIndex="5",ifName="teql0"} 0
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="vwire2",ifIndex="8",ifName="vwire2"} 0
ifOutOctets{ap_name="Room2",ap_ip="10.0.0.20",ifAlias="",ifDescr="wifi0",ifIndex="4",ifName="wifi0"} 0

The request takes some time: the more points there are, the longer it runs. Polling is done concurrently, though, so it usually fits within an acceptable time (for us, 10 points are polled in 10 seconds).

The metrics are also “enriched” with labels carrying the name (ap_name) and IP address (ap_ip) of the polled point.
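As an illustration of what such enrichment can look like, here is a minimal sketch that injects the two labels into one line of Prometheus text output. The function addLabels is hypothetical and is not taken from the repository; it works on plain strings and assumes simple names, since Go's %q quoting matches the Prometheus escaping rules only for ordinary printable values.

```go
package main

import (
	"fmt"
	"strings"
)

// addLabels prepends ap_name and ap_ip labels to one sample line in the
// Prometheus text format. Comment lines (# HELP, # TYPE) and empty lines
// are returned unchanged.
func addLabels(line, name, ip string) string {
	if line == "" || strings.HasPrefix(line, "#") {
		return line
	}
	extra := fmt.Sprintf("ap_name=%q,ap_ip=%q", name, ip)
	sp := strings.IndexByte(line, ' ')
	br := strings.IndexByte(line, '{')
	switch {
	case br >= 0 && (sp < 0 || br < sp):
		// The sample already has a label set: insert right after "{".
		rest := line[br+1:]
		if strings.HasPrefix(rest, "}") {
			return line[:br+1] + extra + rest
		}
		return line[:br+1] + extra + "," + rest
	case sp >= 0:
		// No labels yet: add a label set between the name and the value.
		return line[:sp] + "{" + extra + "}" + line[sp:]
	}
	return line
}

func main() {
	fmt.Println(addLabels(`ifOutOctets{ifDescr="eth0",ifIndex="2"} 42`, "Room1", "10.0.0.10"))
	fmt.Println(addLabels(`sysUpTime 12345`, "Room1", "10.0.0.10"))
	fmt.Println(addLabels(`# TYPE ifOutOctets counter`, "Room1", "10.0.0.10"))
}
```

A more robust variant would parse the response with a Prometheus text-format parser instead of editing strings, at the cost of an extra dependency.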

Prometheus

I won’t describe how to install Prometheus here, as that is not the purpose of this article. For us it works on top of kubernetes_sd_config: the Kubernetes config shown above carries annotations that tell Prometheus which port and path to scrape.

Grafana

Finally, I’ll show a simple Grafana dashboard for viewing the graphs.

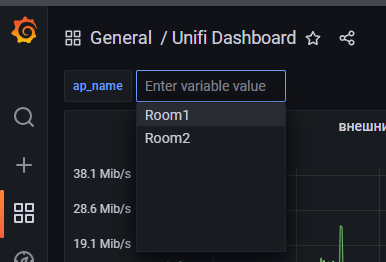

After creating the dashboard, go straight to Dashboard settings and create a variable:

Query: ifOutOctets{ap_name=~".+"}

Regex: /ap_name="([^"]+)"/

The point of this variable is to collect all unique values of ap_name so that one can then be picked from a list.

Now create a graph and enter these queries:

A: irate(ifOutOctets{ap_name=~"[[ap_name]]"}[5m])*8

A.Legend: {{ap_name}} {{ap_ip}} {{ifDescr}} out

B: irate(ifInOctets{ap_name=~"[[ap_name]]"}[5m])*-8

B.Legend: {{ap_name}} {{ap_ip}} {{ifDescr}} in

Multiplying by 8 is necessary because ifOutOctets counts bytes, and we want a graph in megabits per second: the multiplication gives bits per second, which Grafana can scale to Mbit/s with the appropriate unit setting.

We multiply by -8 so that incoming traffic is drawn below the axis. Yes, I know there are transformations for this, but they worked crookedly for us: the graph disappeared completely, and we never figured out what was wrong.
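The arithmetic behind the factor of 8 can be checked by hand. The sketch below takes two readings of an octet counter and converts the difference into bits per second and then megabits per second. The function name and the sample numbers are made up for illustration, and counter resets are ignored here (in Prometheus, irate handles them).

```go
package main

import "fmt"

// bitsPerSecond converts two readings of a byte (octet) counter, taken
// `seconds` apart, into a rate in bits per second.
// It assumes cur >= prev, i.e. no counter reset between the readings.
func bitsPerSecond(prev, cur uint64, seconds float64) float64 {
	return float64(cur-prev) * 8 / seconds
}

func main() {
	// 1,250,000 bytes transferred in 10 seconds.
	bps := bitsPerSecond(1_000_000, 2_250_000, 10)
	fmt.Printf("%.0f bit/s = %.1f Mbit/s\n", bps, bps/1e6)
	// prints "1000000 bit/s = 1.0 Mbit/s"
}
```

Multiplying by -8 instead of 8 changes only the sign, which is what pushes the incoming traffic series below the axis.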

As a result, we can view the chart for any of the access points: