The future after orchestrators

Orchestration is killing time.

How much time have you spent managing tasks in the orchestrator? Hundreds of hours? Thousands? Whatever the number, that time added little value, and you probably thought so too.

The main problem is that the orchestrator tries to control the operations on the data instead of letting the operations flow from the data.

Why do we have orchestrators?

Short answer: heritage.

As Louise put it, a data orchestrator is “a software solution or platform responsible for automating and managing the flow of data between different systems, applications, and storage locations.” I would add that, ultimately, the orchestrator is a stateful solution for managing script execution.

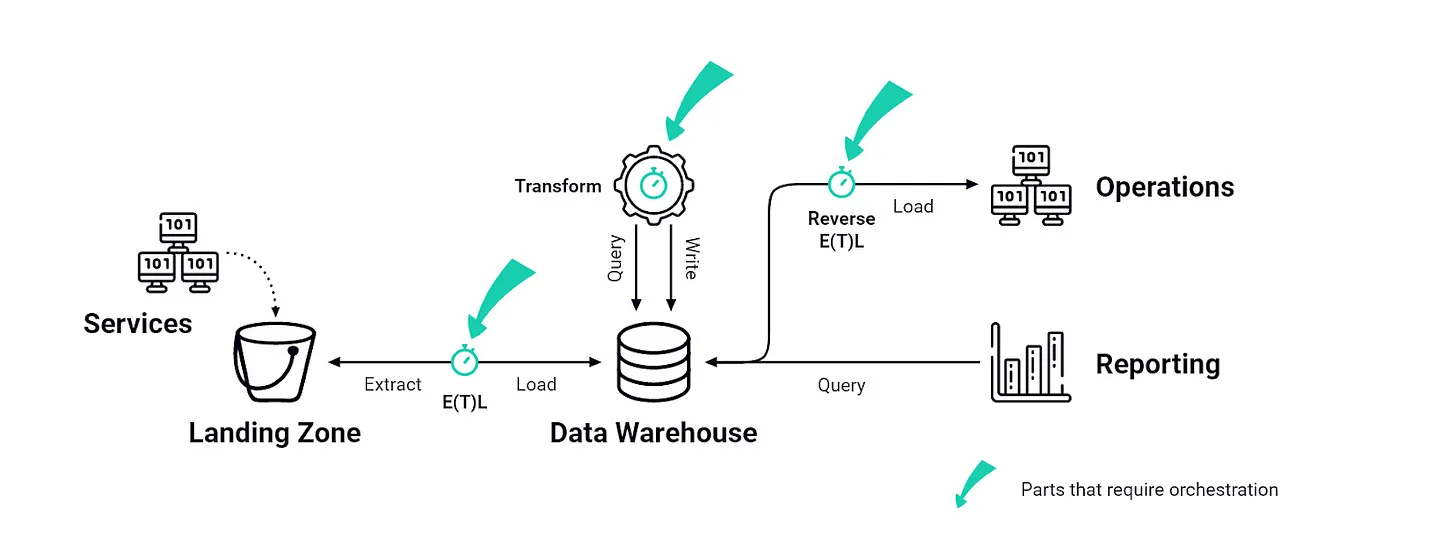

In hindsight, the need for data orchestration arose from increasingly complex triggering logic, at a time when simple script scheduling did not take dependencies into account. One script depended on another, so a central software component was needed that would not only start the entire process but also ensure that each stage starts only after all of its upstream dependencies have completed successfully.

As a single building block, the orchestrator serves a variety of purposes, the main ones being:

State management: keeping track of what has run and what hasn’t. This is arguably the orchestrator’s main function.

Triggering: the mechanism that actually executes the script, often with a set of parameters defined at the level of the task or of the job being run.

Dependency constraints (directed acyclic graphs, or DAGs): determining which scripts must run before which others, and making sure there are no cycles.

Log surfacing: providing a complete view of task statuses that serves as an entry point for troubleshooting.
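To make these four responsibilities concrete, here is a toy orchestrator. It is a minimal sketch, not how any particular product works: the function `run_dag` and the task names are hypothetical, and it assumes every dependency is also a key in `tasks`. It relies on the standard library’s `graphlib.TopologicalSorter` (Python 3.9+), whose `static_order()` raises `graphlib.CycleError` if the graph contains a loop.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Iterable


def run_dag(tasks: Dict[str, Callable[[], None]],
            deps: Dict[str, Iterable[str]]) -> Dict[str, str]:
    """Hypothetical toy orchestrator: run tasks in dependency order, record state."""
    sorter = TopologicalSorter()
    for name in tasks:
        # Dependency constraints: `name` may only run after everything in deps[name].
        sorter.add(name, *deps.get(name, ()))

    state: Dict[str, str] = {}  # State management: what ran, failed, or was skipped.
    # static_order() raises graphlib.CycleError if the graph has a cycle.
    for name in sorter.static_order():
        if any(state.get(up) != "success" for up in deps.get(name, ())):
            state[name] = "skipped"  # an upstream task failed or was skipped
        else:
            try:
                tasks[name]()  # Triggering: actually execute the script.
                state[name] = "success"
            except Exception as exc:
                state[name] = "failed"
                print(f"[{name}] error: {exc!r}")  # Log surfacing for troubleshooting.
        print(f"[{name}] -> {state[name]}")
    return state


def fail() -> None:
    raise RuntimeError("source unavailable")


# Hypothetical three-step pipeline in which the middle step fails.
result = run_dag(
    tasks={"extract": lambda: None, "transform": fail, "load": lambda: None},
    deps={"transform": {"extract"}, "load": {"transform"}},
)
```

Because `transform` fails, `load` is never triggered and is marked as skipped. Every one of these bookkeeping steps is work the orchestrator exists to do.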

What is the problem?

Hint: it’s about orchestration.

I could go on and on about the time it takes to maintain deep dependency graphs (long chains of sequential tasks), the delays they introduce by forcing workflows to wait for the slowest task, the cost they add to an already resource-intensive practice, their poor compatibility with modern tools (or outright incompatibility, as with stream processing), and the burden they place on data consumers, who depend on engineers for even the slightest update…
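The “slowest task” problem can be quantified. With unlimited parallelism, a DAG’s end-to-end latency is the length of its critical path, because every task waits for its slowest upstream dependency. The sketch below assumes that idealized model; the function name, task names, and durations (in minutes) are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, Iterable


def end_to_end_latency(durations: Dict[str, float],
                       deps: Dict[str, Iterable[str]]) -> float:
    """Finish time of the whole DAG if each task starts as soon as all of its
    upstream tasks finish (idealized: unlimited parallelism, no queuing)."""
    sorter = TopologicalSorter()
    for name in durations:
        sorter.add(name, *deps.get(name, ()))
    finish: Dict[str, float] = {}
    for name in sorter.static_order():
        # A task cannot start before its slowest upstream dependency finishes.
        start = max((finish[up] for up in deps.get(name, ())), default=0.0)
        finish[name] = start + durations[name]
    return max(finish.values(), default=0.0)


latency = end_to_end_latency(
    durations={"ingest_orders": 5, "ingest_customers": 40, "join": 10, "report": 2},
    deps={"join": {"ingest_orders", "ingest_customers"}, "report": {"join"}},
)  # 52.0
```

In this example the orders data is ready after 5 minutes but still sits idle until minute 40, waiting for the customers load before the join can start.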

But in the interest of brevity, the problem with orchestrators comes down to two things: they are yet another building block in the stack, and, more importantly, newer data technologies don’t need them.

What comes after orchestration?

Just-in-time data operations.

Replacing the orchestrator means eliminating synchronization overhead, and the main way to do that is to make execution timing matter much less. Today, this means adopting either asynchronous processing or high-frequency batching (micro-batches of data).

High frequency batches

Let’s look at high-frequency batching first, because I see it as an aberration.

High-frequency batching means running processing steps at a very high frequency by batch standards, for example every 5 minutes or so. At that frequency, almost every task can be treated as independent and asynchronous. This decouples individual tasks without the need for a stateful orchestrator, but it forces you down the darkest path of one of the hardest trade-offs: agility at the expense of cost.

Everyone who has tried to scale high-frequency batches has run into two problems. First, latency compounds quickly: you don’t need a deep dependency graph before high-frequency tasks stop lining up with freshly available data. Second, pricing is a sore subject. Most data solutions are not designed for this kind of usage, for a simple reason: running a LEFT JOIN every 5 minutes means re-scanning a ton of data.
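A back-of-the-envelope model of both problems, under simplifying assumptions: each stage in a chain runs on its own fixed schedule, may wait up to one full interval before it picks up its upstream output, and every run re-reads the whole input table. The function names and figures below are hypothetical illustrations, not measurements.

```python
MINUTES_PER_DAY = 24 * 60


def worst_case_staleness(depth: int, interval_min: float, runtime_min: float) -> float:
    """Upper bound (minutes) on the age of the final output for a chain of
    `depth` independently scheduled jobs that each run every `interval_min`."""
    # Each stage may wait up to one full interval for its next tick,
    # then spends `runtime_min` processing what it picked up.
    return depth * (interval_min + runtime_min)


def daily_scan_gb(table_gb: float, interval_min: float) -> float:
    """Data scanned per day if every run re-reads the whole table (e.g. a full LEFT JOIN)."""
    runs_per_day = MINUTES_PER_DAY / interval_min
    return runs_per_day * table_gb


staleness = worst_case_staleness(depth=4, interval_min=5, runtime_min=2)  # 28 minutes
hf_scan = daily_scan_gb(table_gb=200, interval_min=5)                     # 57600.0 GB
daily_scan = daily_scan_gb(table_gb=200, interval_min=MINUTES_PER_DAY)    # 200.0 GB
```

Under these assumptions, a 5-minute schedule scans the same 200 GB table 288 times a day, roughly 57.6 TB instead of 200 GB for a daily batch, and each extra stage in the chain adds up to another interval of staleness.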

Asynchronous processing

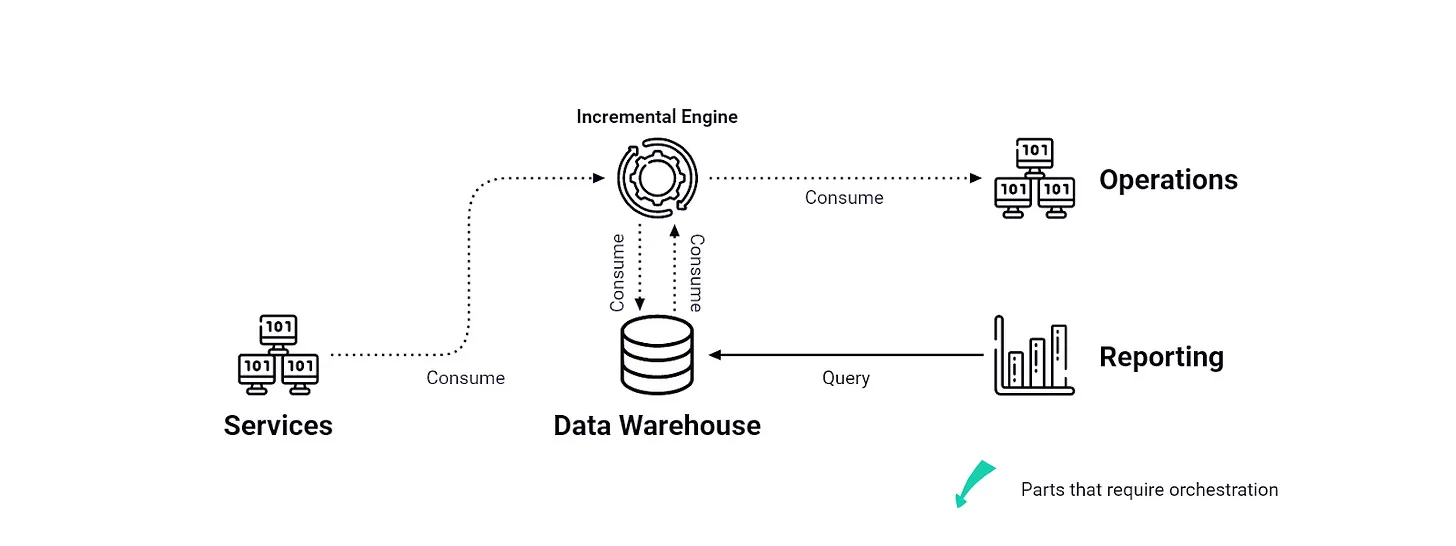

Asynchronous processing or continuous systems (think incremental engines such as stream processors, real-time databases, and, to some extent, HTAP (Hybrid Transactional/Analytical Processing) databases) do not require an orchestrator because they are continuous by nature and the data is self-updating: a change is reflected immediately in consumer systems and in downstream views and tables.

For those less familiar with streaming, this is the difference between pull and push data operations: pull requires you to repeatedly run the actions you want to perform (as in the ETL/ELT model, for example), while push passively and incrementally computes and distributes data points as they arrive. No orchestration! Each task is almost like a microservice, a kind of data service.
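A minimal sketch of the pull/push difference, using a per-customer running total as a stand-in for a downstream view. The record shape and the names `pull_totals` and `PushTotals` are hypothetical; real stream processors add durability, ordering, and fault tolerance that this toy omits.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Event = Tuple[str, float]  # hypothetical (customer_id, amount) record


def pull_totals(rows: List[Event]) -> Dict[str, float]:
    """Pull: every scheduled run rescans all rows and recomputes from scratch."""
    totals: Dict[str, float] = defaultdict(float)
    for customer, amount in rows:
        totals[customer] += amount
    return dict(totals)


class PushTotals:
    """Push: state is updated incrementally as each event arrives, and
    subscribers are notified of the change. No scheduler is involved."""

    def __init__(self) -> None:
        self.totals: Dict[str, float] = defaultdict(float)
        self.subscribers: List[Callable[[str, float], None]] = []

    def subscribe(self, callback: Callable[[str, float], None]) -> None:
        self.subscribers.append(callback)

    def on_event(self, event: Event) -> None:
        customer, amount = event
        self.totals[customer] += amount  # constant work per event, no rescan
        for notify in self.subscribers:
            notify(customer, self.totals[customer])


events = [("alice", 10.0), ("bob", 5.0), ("alice", 2.5)]
view = PushTotals()
updates: List[Tuple[str, float]] = []
view.subscribe(lambda customer, total: updates.append((customer, total)))
for e in events:
    view.on_event(e)  # in a real system, the source would call this
```

Both approaches end with the same totals, but the pull version has to be triggered and rescans everything each time, while the push version does a small amount of work per event and hands each change to its consumers.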

Unfortunately, Benoit already beat me to quoting the “Why not Airflow?” documentation, so I’ll spare you that. But, as mentioned earlier, one of the main problems with orchestrators is compatibility: you simply can’t orchestrate everything. Orchestration adds complexity and latency to your data operations and is, in general, a very efficient way to spend hours every week on activities that will eventually be unnecessary.

Imagine if everything you put into production had to be orchestrated: that would be the death of agile. So why is this still the case in the data world? (Rhetorical question; see the first paragraphs of this article.)

What is this new set of tools?

It’s already here, in a way.

If you’re spending your time fixing an Airflow DAG, pardon my bluntness, but it’s unlikely to be your biggest contribution to the company. Fortunately, alternatives already exist, so the choice is up to your organization’s technical and political leadership. When it comes to building data services, all of the asynchronous processing options mentioned earlier already exist and run at enterprise scale at a company near you.

What is really missing is some form of reusable control layer that covers the basic requirements: enforcing the directed and acyclic nature of the dependency rules, and bringing operational commands and statuses to one central place for convenience. It would be more of a general DataOps tool that inherits some of the orchestrator’s responsibilities, especially around metadata and command enforcement, without interfering with the task life cycle, and would therefore no longer be a mandatory part of the stack. This is where the “active metadata” trend could be an interesting move: it acts as a passive observer of what is happening without actively interfering with data operations.
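Here is what such a control layer might look like, as a hypothetical sketch: the `ControlPlane` class and its methods are illustrative, not an existing tool’s API. It rejects any dependency that would create a cycle and records statuses that services report, but it never starts, stops, or schedules anything.

```python
from typing import Dict, Optional, Set


class ControlPlane:
    """Hypothetical control layer: validates dependency rules and collects
    statuses, but never triggers, stops or schedules the services themselves."""

    def __init__(self) -> None:
        self.upstream: Dict[str, Set[str]] = {}  # service -> services it reads from
        self.statuses: Dict[str, str] = {}

    def _reaches(self, start: str, target: str) -> bool:
        """True if `target` is reachable from `start` by following upstream edges."""
        stack, seen = [start], set()
        while stack:
            node = stack.pop()
            if node == target:
                return True
            if node not in seen:
                seen.add(node)
                stack.extend(self.upstream.get(node, ()))
        return False

    def register(self, service: str, upstream: Set[str]) -> None:
        # Directed: edges always point from a service to its upstream sources.
        # Acyclic: reject the edge if `up` already depends (transitively) on `service`.
        for up in upstream:
            if self._reaches(up, service):
                raise ValueError(f"{service} <- {up} would create a cycle")
        self.upstream.setdefault(service, set()).update(upstream)
        for up in upstream:
            self.upstream.setdefault(up, set())

    def report(self, service: str, status: str) -> None:
        # Passive observer: services push their own status; nothing is triggered.
        self.statuses[service] = status


cp = ControlPlane()
cp.register("orders_clean", {"orders_raw"})
cp.register("revenue", {"orders_clean"})
cp.report("revenue", "running")

rejected: Optional[str] = None
try:
    cp.register("orders_raw", {"revenue"})  # would close the loop
except ValueError as err:
    rejected = str(err)
```

The design choice is that the graph and the statuses live in one place for convenience, while each service keeps running on its own; removing the control plane would not stop the data from flowing.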

At Popsink, we don’t have an extra orchestration brick, which saves us time and money. We ended up building our own control plane for convenience, partly because some of today’s open standards are incompatible with the orchestrator-free model (such as OpenLineage, which is built around jobs having a “start” and an “end”).

And it works great: jobs are now asynchronous constructs with passive consumers that no longer need to fetch data when predefined conditions are met. To use a metaphor I like, we now use pipes instead of buckets. Far less effort is required, so you can sit back and watch the data flow.

Final words

You did it!

In many organizations, data products are still the result of lengthy processing that requires heavy orchestration. But this is not necessary at all: solutions already exist, for example the flow-based workflow builders mentioned by Hubert, or what we are building at Popsink.

With the ongoing transition to continuous operations, it is worth exploring what the post-orchestration world looks like, if not for your peace of mind, then for your wallet. I also really like the term “data services” because it fits the idea of subscribing to data on demand, as opposed to off-the-shelf “data products”.

It will take a little more time for full feature parity and good reusability standards to emerge, especially as current active metadata and lineage systems are deeply rooted in orchestrators, but the potential is there.

It’s time to move on with life.
