How to speed up binary search

Hello Habr community. I decided to talk about how to speed up a regular binary search hundreds of times and search for data in a regular text file FASTER than using classic databases. Now I will try to solve the binary search problem without them, I will tell you about the main optimization methods, and at the end I will make a comparison. This is a very real task that I will face when developing my own project, and therefore I have something to tell you.

The binary search is very simple – you sort the data array alphabetically, and then look for the necessary information in it in the same way as in a paper dictionary – open the book in the middle and see which side the word you are looking for is on the right or on the left. Then open the desired part in the middle and again look where to move. And so on until you find the right word.

Thus, it is possible to find the necessary information in logarithmic time, but only in theory.

def binary_search(arr, x):

left, right = 0, len(arr) - 1

while left <= right:

mid = (left + right) // 2

if arr[mid] == x:

return mid

elif arr[mid] < x:

left = mid + 1

else:

right = mid - 1

return -1

arr = [1, 3, 5, 7, 9]

x = 5

print(binary_search(arr, x))

>>2In practice, we usually do not have access to the entire data array. Binary search is effectively used when there is a lot of data and it is impossible to fit them into RAM. And if it were possible, they would have to be read from the disk, which would take a lot of time. Fortunately, most programming languages have a wonderful seek() function that allows you to move to any location in the file being read. At the same time, the transition time does not depend on the distance to which you need to move (if the file is not defragmented). This means that we can implement binary search directly inside the file without reading it.

But you might have a question – for binary search you need to move to a line with a certain number. If the strings are the same length, the problem is purely mathematical. But what if not? Is it really necessary to pad them to the same length with spaces? Or store in a separate string length index? Of course no.

When you look up the right word in the dictionary, you don’t need to open it strictly on a specific page. Enough to know how to open it approximately in the middle. Indeed, if we read the file in the middle, it does not matter to us that there are 995 lines on the left and 1005 lines on the right. It is enough to know that we need to move to the left, and we will not miss the desired line.

You might also have another question – let’s say we moved to the center of the file. How can we count one line? We could get to its center and do not know where the beginning.

The simplest solution is to shift one character to the left until we reach a newline character. And then read the whole line through readline.

n = 10000000

with open('text.txt', encoding='utf-8') as file:

file.seek(n)

while True:

n = n-1

file.seek(n) # перейти на один символ назад

char = file.read(1)

if char == '\n' or file.tell() == 0: # если дошли до конца строки или начала файла

break

S = file.readline()

print(S)However, this solution has an unobvious problem – HDDs don’t really like to read backwards, and therefore it will work slower in the case of large strings. It’s much easier to do this:

n = 10000000

with open('text.txt', encoding='utf-8') as file:

file.seek(n)

file.readline()

print(file.readline())Yes, you can just skip the line we hit and work with the next one. In this case, however, binary search will not be able to find the very first line in the file, but we can put column headers there and say that this is not a bug, but a feature. Although one bug is still worth fixing:

UnicodeDecodeError: 'utf-8' codec can't decode byte 0x90 in position 0: invalid start byteThe fact is that we get into a random byte of the file. And some Unicode characters consist of several bytes, and getting “inside” such a character, we get an error. They occur quite rarely, since most characters are single-byte, and therefore such problems can simply be ignored. To do this, we can specify the errors=”ignore” parameter in the file open function. Or use the try – except link and some code. Approximately like this:

n = 10000000

with open('text.txt', encoding='utf-8') as file:

file.seek(n)

while True:

try:

file.readline()

break

except UnicodeDecodeError:

n=n+1

file.seek(n)

print(file.readline())How else can you speed up the search? Let’s pay attention to what kind of files we are reading. With each search, we read the line at the center of the file. Half the time we read lines at 25% and 75% of the file. And so on. The same positions are read multiple times. Is that bad. If we use an SSD, this approach can wear it out over time, and if we have an HDD, then the transition from one position to another takes a relatively long time, since the read head physically needs to move to the right place.

But why do we need to read the center of the file and other key points with each search? You can count them one single time and remember. This optimization method is called caching and can be implemented using the lru_cache decorator.

from functools import lru_cache

@lru_cache(maxsize=100000)

def get_data_in_position(file_name, point):

with open(file_name, encoding='utf-8') as file:

file.seek(point)

while True:

try:

file.readline()

break

except UnicodeDecodeError:

point=point+1

file.seek(point)

return(file.readline())In this case, the maxsize variable is responsible for the maximum number of lines stored in the cache. After all, we do not need it to fill all the RAM.

But caching doesn’t end there. After all, now we reopen the source file at each iteration of the search, which takes a lot of time. Why don’t we store the open file variable in the cache as well? Here, for variety, we will do without ready-made solutions to show that caching is not at all difficult.

open_file_list={} #кэш открытых файлов

def open_file_cashe(name_file):

global open_file_list

if open_file_list.get(name_file)!=None:

return(open_file_list.get(name_file))

else:

t=open(name_file,'r',encoding='utf-8',errors="ignore") #,'\n'.join(a[Min:])

if len(open_file_list)<10: #кэшируем до 10 открытых файлов

open_file_list[name_file]=t

else:

File = open_file_list.popitem()

File[1].close()

open_file_list[name_file]=t

return

# открываем соединение с базой данных

conn = sqlite3.connect('hashes.db')

cursor = conn.cursor()

# генерируем 10000 случайных чисел и ищем их хэши

total_time = 0

for i in range(100000):

# выбираем случайное число

num = random.randint(1, 1000000)

# получаем хэш числа

hash_value = hashlib.md5(str(num).encode()).hexdigest()

# засекаем время перед запросом

start_time = time.time()

# выполняем запрос по хэшу

cursor.execute('''SELECT id FROM hashes

WHERE hash_value = ?''', (hash_value,))

# получаем результаты и добавляем время запроса к общему времени

result = cursor.fetchone()

end_time = time.time()

total_time += end_time - start_time

# закрываем соединение

conn.close()

# выводим общее время запросов

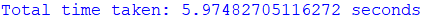

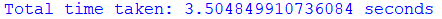

print(f'Total time taken: {total_time} seconds')

def find_hashes_csv():

# генерируем 10000 случайных чисел и ищем их хэши

total_time = 0

for i in range(100000):

# выбираем случайное число

num = random.randint(1, 1000000)

# получаем хэш числа

hash_value = hashlib.md5(str(num).encode()).hexdigest()

# засекаем время перед запросом

start_time = time.time()

find_in_file('hashes.csv',hash_value) #функция нашего поиска

end_time = time.time()

total_time += end_time - start_time

# выводим общее время запросов

print(f'Total time taken: {total_time} seconds')Results:

Thus, we put together a search engine on the knee, twice as fast as in real databases! Yes, and more economical in terms of memory!

But there is a simple explanation for this – databases are a multi-tool, a universal tool with dozens of possibilities, with various search options and with complex algorithms for adding and deleting data. During their development, they sacrificed the speed of work in favor of convenience and versatility. Therefore, a simpler algorithm may well be faster.

And so that you can make sure that it really works and I didn’t take the numbers out of my head, I will tell you about a project in which I actually applied everything that I talked about above. I created a telegram bot to search for data among various leaks. Such a bot is needed for osinth, breaking through scammers, checking your own data, etc. Initially, I developed it for companies – to check user accounts in bulk, identify those vulnerable to hacking through leaks and force them to change passwords, but now I have made it available to everyone.

When I implemented the search, the SQL database was not suitable for me. because I don’t like SQL because the size of all the files is 2.5 TB and, together with the indexes, they simply did not fit on my disk. Therefore, I studied everything that I wrote about above and, as a result, implemented the bot the way I originally wanted.

This bot can run 20 queries per second with a database size of 40 billion rows! And all this is exclusively on binary search. No SQL, plain text files and the maximum number of optimizations. Not only those that I have covered in this article, but also some others that I will write about in future articles.

And here is this bot, you can check how it works: DataLeakAlertBot

And I hope you enjoyed this article. Ready to answer any questions you might have.