Seastar as a 5G backbone platform and a brief comparison with Boost.Asio, userver and others

I have been researching open-source frameworks that are candidate platforms for a carrier-grade 5G backbone, and I want to share my findings. I'll compare Seastar, mTCP, Boost.Asio, userver, and ACE, explain why synchronization primitives are bad, and then take you deep into Seastar.

The main requirement for a 5G core network platform is the ability to handle high load. As a starting point, let's assume that the core network must provide 100 Gbps of user-plane throughput and serve 100,000 active users.

Even at these relatively modest loads, it is clear that an ordinary epoll reactor will not be enough. One could, of course, bolt on XDP and eventually squeeze out even more than 100 Gbps, but, as you will see below, a framework makes the target performance easier to reach and also provides many additional benefits.

Let's set out a few more requirements:

open source, for cost effectiveness: there is no need to budget for a proprietary solution at the very beginning of product development;

out-of-the-box scaling: since the number of cores in servers keeps growing, I would like to scale across them without additional effort;

efficient use of hardware, minimizing both monetary and time costs;

modern C++, a good choice when backbone efficiency matters; newer standards will only help development.

Open source frameworks

So, given the above, let's see what is available in open source:

| Framework | Advantages | Disadvantages | Synchronization primitives | Language |
|---|---|---|---|---|
| Seastar | share-nothing architecture; lock-free cross-CPU communication | “sharding” | No | C++ |
| mTCP | user-space TCP stack over DPDK; per-core architecture | no SCTP, no UDP; no IPv6 | Yes/No | C |
| Boost.Asio | rich functionality; big community | no SCTP | Yes | C++ |
| userver | even richer functionality; async DB drivers | stackful coroutines; no SCTP | Yes | C++ |
| ACE | complete networking functionality | huge and complex | Yes | C++ |

First comes Seastar with its killer features: share-nothing architecture, lock-free cross-CPU communication and no synchronization primitives. The framework is, of course, also asynchronous. We will talk more about this, and about sharding, a little later.

The next tool is mTCP. Strictly speaking, it is not a framework but rather a userspace TCP stack on top of DPDK. mTCP implements an architecture similar to Seastar's share-nothing design, which its authors call a per-core architecture. We will not go into the differences in detail, but note that although, according to the authors, mTCP does not use synchronization primitives, they could not be eliminated completely in the interaction between the application and the framework.

“…We completely eliminate locks by using lock-free data structures between the application and mTCP.” (Source)

For example, there are many mutexes inside the framework itself. Another important point is that a core network needs basic protocols such as TCP, SCTP and UDP, as well as IPv6 support. As the table shows, mTCP lacks almost all of them.

Next is the well-known Boost.Asio, the de facto standard for writing network applications in C++. It has rich functionality and a huge community. SCTP support never made it into Boost.Asio out of the box: because POSIX is hidden deep inside and the established API had to be preserved, adding it turned out to be a non-trivial task. Like many others, Boost.Asio uses synchronization primitives.

The next one is userver, a framework from Yandex. Learn more about its benefits here; in short, it is a really powerful product with features such as asynchronous database drivers, tracing, metrics, logging and much more right out of the box. Although userver has its own synchronization primitives, which we will discuss in more detail a little later, the framework still needs them. As with many other frameworks, userver does not ship with SCTP. It is also important to note that userver uses stackful coroutines. We will compare stackful and stackless coroutines later but, looking ahead, the stackless option is better suited to the needs of a core network.

Last but not least is ACE. You can read more about it here. Its key difference from the other frameworks is a complete set of functionality for writing network applications: a reactor, a proactor, many protocols and socket types, implementations of connectors and acceptors, and much more. The framework is perfectly suited for writing a core network based on an epoll reactor, or indeed any networked application. Like many others, ACE needs synchronization primitives. Unlike the other frameworks, it has no key gaps in protocol support, but in my subjective opinion its high entry threshold, due to a not very friendly API, counts as a drawback.

Although synchronization primitives have their own column in the table rather than being listed among the disadvantages, this factor turned out to be almost the decisive one when comparing the frameworks. The items in the disadvantages column, except for stackful coroutines, can be fixed: all these frameworks are open source, so you can add the missing pieces yourself. Getting rid of synchronization primitives, however, is not something you can patch in. As the table shows, Seastar is the only solution that does not use synchronization primitives at all. Let's use a mutex as an example to see why this matters so much.

Why synchronization primitives are bad

Below is a summary table taken from the article Anatomy of Asynchronous Frameworks in C++ and Other Languages. It presents measurements in which a mutex is locked and unlocked in an infinite loop from different numbers of threads, comparing std::mutex with the Mutex from the userver framework. Unlike the standard mutex, the userver Mutex does not block the std::thread, does not switch contexts and does not allocate dynamic memory. Yet even though the Yandex Mutex avoids the main problems inherent in mutexes, we still see an overhead of about 700 nanoseconds with just four threads, and the overhead can be expected to keep growing with the number of threads. Anton Polukhin, one of the key authors of userver, recommends using other synchronization primitives for four or more threads. But, as we will see later, synchronization primitives can be avoided altogether.

| Competing threads | std::mutex | userver Mutex |
|---|---|---|
| 1 | 22 ns | 19 ns |
| 2 | 205 ns | 154 ns |
| 4 | 403 ns | 669 ns |
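To get a feel for this kind of measurement on your own hardware, here is a rough sketch in standard C++. It is not the benchmark from the cited article: it locks and unlocks one shared std::mutex from several threads and reports the average wall-clock time per lock/unlock pair. The function name mutex_ns_per_op and the iteration counts are my own, and the results depend heavily on the CPU, so they will not match the table above exactly.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Rough contention micro-benchmark: every thread repeatedly locks and unlocks
// the same std::mutex. Returns the average wall-clock nanoseconds per
// lock/unlock pair across all threads (thread start-up is included).
double mutex_ns_per_op(unsigned threads, std::size_t iters_per_thread) {
    assert(threads > 0 && iters_per_thread > 0);
    std::mutex m;
    std::size_t counter = 0;  // shared data guarded by m
    std::vector<std::thread> pool;
    pool.reserve(threads);

    const auto start = std::chrono::steady_clock::now();
    for (unsigned t = 0; t < threads; ++t) {
        pool.emplace_back([&] {
            for (std::size_t i = 0; i < iters_per_thread; ++i) {
                std::lock_guard<std::mutex> lock(m);
                ++counter;
            }
        });
    }
    for (auto& th : pool) th.join();
    const auto elapsed = std::chrono::steady_clock::now() - start;

    assert(counter == threads * iters_per_thread);  // the mutex kept every update
    return std::chrono::duration<double, std::nano>(elapsed).count() /
           static_cast<double>(counter);
}
```

Since thread start-up is part of the measured time, use a large iteration count. On most multi-core machines, mutex_ns_per_op(4, 1'000'000) typically comes out noticeably higher than mutex_ns_per_op(1, 1'000'000).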

One of the key drawbacks of a standard mutex is context switching: a thread that tries to lock an already locked mutex may be put to sleep by the kernel.

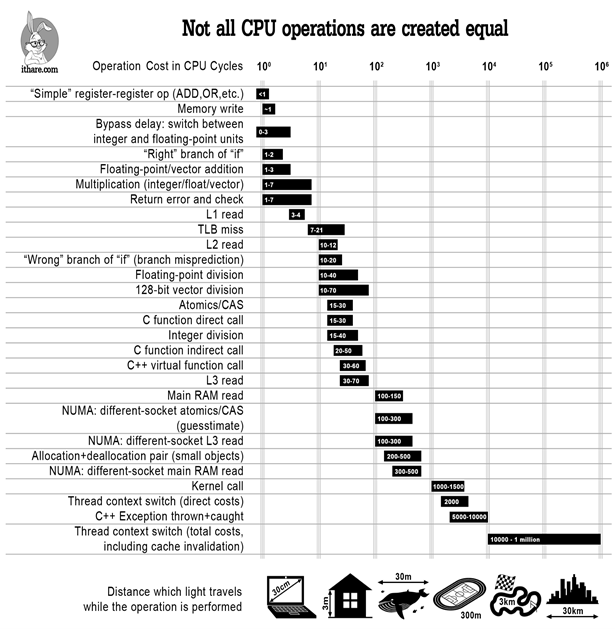

Why context switching is bad

The picture shows a fairly well-known table, slightly outdated in its measurements with respect to modern hardware. On a logarithmic scale, it shows the cost of various operations in CPU cycles: for example, adding two registers takes less than one cycle, an L1 cache read three to four cycles, and a RAM read 100-150 cycles. But the most interesting part is the last line: the cost of a context switch with cache invalidation is huge and can reach a million cycles, not to mention the cache misses that follow. Trying to lock an already locked mutex is exactly what triggers such a context switch, most likely with cache invalidation. From this data, we can assume that a framework that works without synchronization primitives and runs mostly in userspace could be an order of magnitude faster than its competitors.
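The cycle counts are easier to compare with the 100 Gbps target from the beginning of the article once they are converted into time. The sketch below does that arithmetic in C++; the 3 GHz clock rate and the 1500-byte packet size are assumptions chosen for illustration, and Ethernet framing overhead is ignored.

```cpp
// Convert a cost in CPU cycles into nanoseconds (a clock of N GHz runs N cycles per ns).
constexpr double cycles_to_ns(double cycles, double clock_ghz) {
    return cycles / clock_ghz;
}

// Time between packets at line rate. 1 Gbit/s is exactly 1 bit per ns,
// so bits divided by Gbit/s gives nanoseconds. Framing overhead is ignored.
constexpr double ns_per_packet(double packet_bytes, double link_gbps) {
    return packet_bytes * 8.0 / link_gbps;
}

// Assumed figures: a 3 GHz core, 1500-byte packets on a 100 Gbit/s link.
constexpr double kContextSwitchNs = cycles_to_ns(1'000'000.0, 3.0);  // about 333,333 ns
constexpr double kPacketGapNs = ns_per_packet(1500.0, 100.0);         // 120 ns
static_assert(kPacketGapNs == 120.0, "12000 bits at 100 bits per ns");
```

Under these assumptions, a single million-cycle context switch takes about 333 µs, which is the time in which roughly 2,800 such packets arrive on the link.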

If this is still not enough to convince you of the problems with synchronization primitives, and mutexes in particular, you can find a few more arguments here.

Seastar performance

Before we get to how Seastar managed to get rid of synchronization primitives completely, let's look at a few benchmarks to check that the assumed gain from avoiding them holds up in practice.

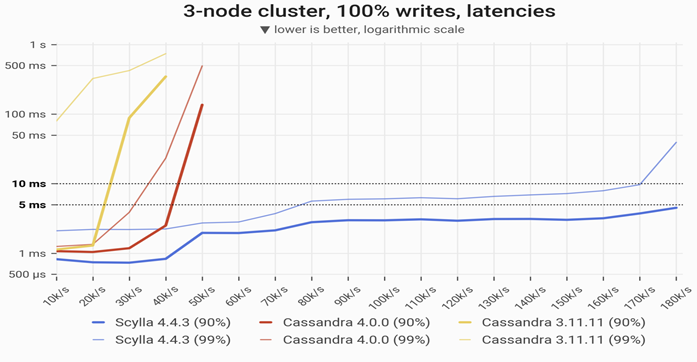

Here is the first benchmark. On a logarithmic scale, it compares write latency for the Scylla and Cassandra databases. Scylla is a Cassandra-compatible database built on Seastar; in fact, Seastar was originally developed by Avi Kivity (the originator of KVM, the Kernel-based Virtual Machine) and his team as the framework for the Scylla database. Comparing the 99th percentile, Cassandra 4.0.0 stays within 5-10 milliseconds at 30-35 thousand writes per second, while Scylla sustains 170 thousand writes per second in the same latency range, almost five times more than Cassandra. You can find many more Scylla vs Cassandra benchmarks, with detailed conclusions, here.

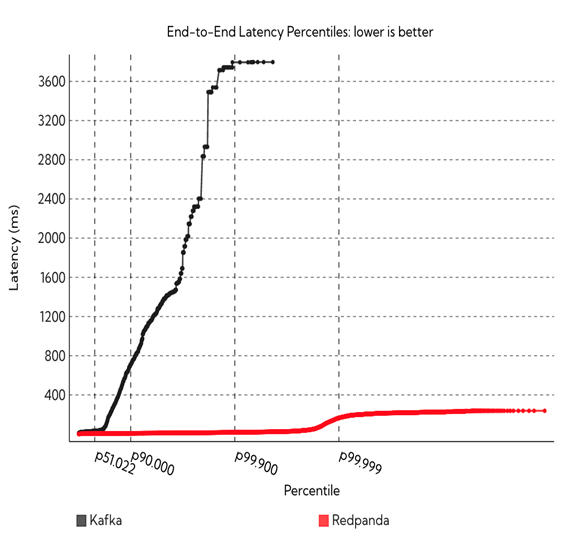

The second benchmark is Redpanda vs Kafka. Redpanda is a Kafka-compatible streaming platform, again built on Seastar. The story is the same as with the previous benchmark: at five nines and beyond, Redpanda consistently keeps latency below 400 milliseconds, while Kafka already reaches 3,600 milliseconds at three nines.
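Both benchmarks compare tail latency in "nines": p99 is two nines, p99.9 three, p99.999 five. As a reminder of what those numbers mean, here is a small nearest-rank percentile helper in C++. The function name and the choice of the nearest-rank definition are mine; the benchmark authors may compute percentiles differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Nearest-rank latency at "k nines": k = 2 is p99, k = 3 is p99.9, k = 5 is p99.999.
// Integer arithmetic avoids floating-point rounding when computing the rank.
std::uint64_t latency_at_nines(std::vector<std::uint64_t> samples, unsigned k) {
    assert(!samples.empty() && k >= 1 && k <= 9);
    std::uint64_t den = 1;
    for (unsigned j = 0; j < k; ++j) den *= 10;
    const std::uint64_t num = den - 1;  // the percentile is num / den
    const std::uint64_t n = samples.size();
    const std::uint64_t rank = (n * num + den - 1) / den;  // ceil(n * num / den), 1-based
    std::sort(samples.begin(), samples.end());  // samples is a copy, so sorting is safe
    return samples[rank - 1];
}
```

One consequence worth keeping in mind: with fewer than 10^k samples, the k-nines value is simply the maximum observed latency, so a five-nines figure only means something over at least 100,000 requests.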

Now that Seastar's performance is evident, it is time to answer the question of how it achieves it.

Share-nothing design

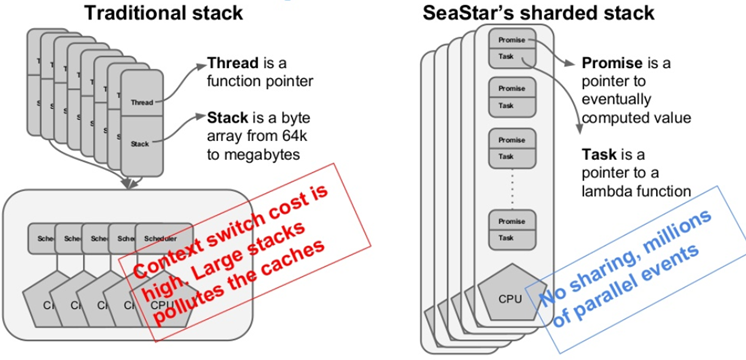

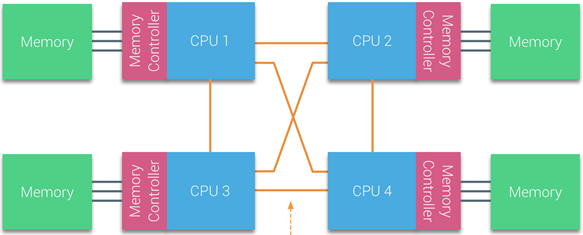

The key feature that lets Seastar achieve such results, and also lets it abandon synchronization primitives completely, is its share-nothing architecture. The idea is that each core (shard) works independently, and memory does not need to be shared between cores.
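To make the ownership idea concrete, here is a single-threaded C++ sketch of data partitioning: every key has exactly one owning shard, each shard keeps its own map, and global questions are answered by map-reduce over the shards. The routing rule (hash of the key modulo the shard count), the Shard struct and map_reduce are hypothetical illustrations, not Seastar's API. In Seastar, each shard runs on its own core, and the corresponding building blocks are seastar::sharded<> and seastar::smp::submit_to().

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_map>
#include <vector>

// One shard's private state. Only the owning shard ever reads or writes it.
struct Shard {
    std::unordered_map<std::string, long> sessions_per_key;
};

// Hypothetical routing rule: each key is owned by exactly one shard.
std::size_t owner_of(const std::string& key, std::size_t shard_count) {
    assert(shard_count > 0);
    return std::hash<std::string>{}(key) % shard_count;
}

// Map-reduce over shards: compute a local result per shard, then combine.
template <typename Map, typename R, typename Reduce>
R map_reduce(const std::vector<Shard>& shards, Map map, R init, Reduce reduce) {
    for (const Shard& s : shards) init = reduce(init, map(s));
    return init;
}
```

Because a key always routes to the same shard, no two shards ever update the same map entry, which is what makes locks unnecessary.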

But what if the current core does not own the memory needed for an operation? For such cases, Seastar has highly optimized lock-free cross-CPU communication: a convenient API lets you redirect execution of the current operation to one or more other cores, and there is also map-reduce. First and foremost, the share-nothing architecture gives you locality: a core only ever accesses its own memory. This, in turn, benefits memory allocation and caching, and lets you take advantage of the NUMA architecture. And finally, what we have talked about so much: synchronization primitives are not needed, because no core ever reaches into another core's memory on its own.
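Lock-free cross-core messaging is commonly built from single-producer/single-consumer (SPSC) queues, one per ordered pair of cores, so that no variable is ever written by two threads. As far as I understand, Seastar's cross-core message queues follow this pattern; the ring buffer below is my own minimal C++17 illustration of the technique, not Seastar's code. Only the producer writes head_ and only the consumer writes tail_, and acquire/release ordering makes each element visible before its index is published.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <optional>
#include <thread>

// Bounded single-producer/single-consumer ring buffer. Exactly one thread may
// call try_push and exactly one other thread may call try_pop.
template <typename T, std::size_t N>
class SpscQueue {
    static_assert(N > 0 && (N & (N - 1)) == 0, "capacity must be a power of two");

public:
    bool try_push(const T& v) {
        const std::size_t head = head_.load(std::memory_order_relaxed);  // only we write head_
        const std::size_t tail = tail_.load(std::memory_order_acquire);  // consumer's progress
        if (head - tail == N) return false;                               // full
        buf_[head & (N - 1)] = v;
        head_.store(head + 1, std::memory_order_release);                 // publish the element
        return true;
    }

    std::optional<T> try_pop() {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);  // only we write tail_
        const std::size_t head = head_.load(std::memory_order_acquire);  // published elements
        if (head == tail) return std::nullopt;                            // empty
        T v = buf_[tail & (N - 1)];
        tail_.store(tail + 1, std::memory_order_release);                 // free the slot
        return v;
    }

private:
    std::array<T, N> buf_{};
    alignas(64) std::atomic<std::size_t> head_{0};  // separate cache lines avoid
    alignas(64) std::atomic<std::size_t> tail_{0};  // producer/consumer false sharing
};

// Demo: one producer thread sends 1..count to one consumer thread; returns the sum received.
long long transfer_sum(int count) {
    assert(count >= 0);
    SpscQueue<int, 1024> q;
    long long sum = 0;
    std::thread consumer([&] {
        for (int received = 0; received < count;) {
            if (auto v = q.try_pop()) { sum += *v; ++received; }
            else std::this_thread::yield();
        }
    });
    for (int i = 1; i <= count; ++i)
        while (!q.try_push(i)) std::this_thread::yield();
    consumer.join();
    return sum;
}
```

For example, transfer_sum(10000) returns 50005000, the sum of 1..10000, with no mutex involved.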

Such an architecture requires load balancing between cores, because if one core accepts all incoming connections, share-nothing makes no sense. Fortunately, Seastar provides three balancing mechanisms out of the box:

connection distribution: connections are distributed evenly across all cores, and each new connection is sent to the shard with the fewest connections. This is the default;

port: new connections are distributed based on the peer's (source) port, using the formula destination_shard = source_port modulo number_of_shards. This method gives the client an interesting option: if it knows the number of server shards, it can choose its source port so that its connections always land on a particular shard;

fixed: all new connections are assigned to a single fixed shard.
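The three policies boil down to very little logic. The sketch below re-implements them as plain C++ functions to show the arithmetic, including the client-side trick of picking a source port for a given shard. The function names are hypothetical and this is not how Seastar structures its code; breaking ties in least_connections towards the lowest-numbered shard is my own choice.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// connection distribution: pick the shard with the fewest open connections
// (ties go to the lowest-numbered shard in this sketch).
unsigned least_connections(const std::vector<unsigned>& open_per_shard) {
    assert(!open_per_shard.empty());
    const auto it = std::min_element(open_per_shard.begin(), open_per_shard.end());
    return static_cast<unsigned>(it - open_per_shard.begin());
}

// port: destination_shard = source_port modulo number_of_shards.
unsigned by_source_port(std::uint16_t source_port, unsigned shard_count) {
    assert(shard_count > 0);
    return source_port % shard_count;
}

// fixed: every new connection goes to one configured shard.
unsigned fixed_shard(unsigned configured_shard) {
    return configured_shard;
}

// Client-side trick for the port policy: the smallest source port >= first_port
// that the server will map to target_shard.
std::uint16_t port_for_shard(unsigned target_shard, unsigned shard_count, std::uint16_t first_port) {
    assert(target_shard < shard_count);
    unsigned port = first_port;
    while (port % shard_count != target_shard) ++port;  // at most shard_count - 1 steps
    assert(port <= 0xFFFF);
    return static_cast<std::uint16_t>(port);
}
```

Note that the client has to bind the chosen port explicitly (bind() before connect()); otherwise the operating system picks an ephemeral port and the trick does not apply.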

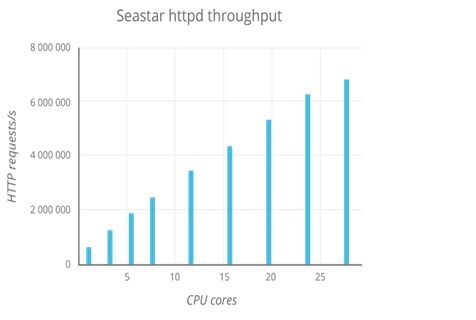

What else share-nothing gives you

At the very beginning of the article, among the other requirements, we listed out-of-the-box scaling across cores. The graph shows how, thanks to share-nothing, the number of HTTP requests per second grows linearly with the number of cores.

Networking

It's time to look in more detail at what Seastar offers for network programming. After all, we are considering it as a platform for a 5G backbone network.

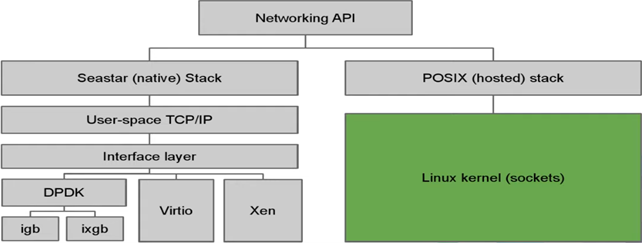

Seastar provides two stacks. The first is POSIX, implemented on three backends: linux-aio, epoll and io_uring. A nice feature of the implementation is the shared API, which allows seamless switching between backends, and you can switch directly from the command line. Say you wrote an application for epoll and then decided to move to io_uring: it is enough to pass --reactor-backend=io_uring on the command line when starting the application, and it will run on io_uring without any additional effort. The default is linux-aio, if available.

The second stack, the so-called native stack, is implemented on top of DPDK, virtio or Xen. We will not go into its details; we will only note that it does not use the upstream version of DPDK, although, as far as we know, the changes are not dramatic. Also, when DPDK is used with a Poll Mode Driver (PMD), Seastar will keep the CPU at 100% utilization, because the cores continuously poll the NIC.

Let's list a few more Seastar goodies that benefit network programming and more:

userspace TCP/IP stack;

each connection is local to the core, so there are no locks;

the absence of cache-line ping-pongs, which means cache lines do not “jump” between cores;

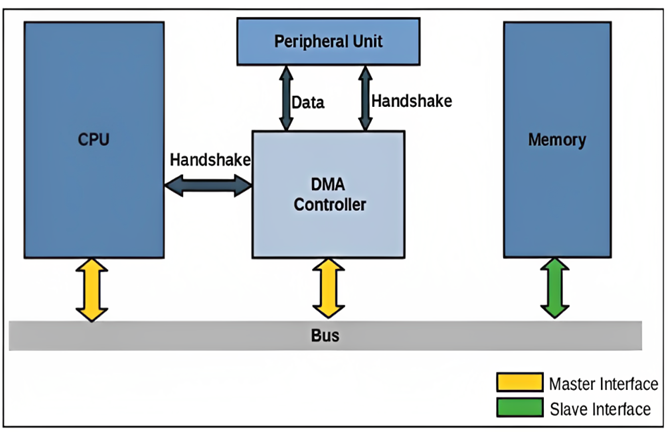

the NIC uses DMA to deliver each packet to the core responsible for it;

the zero-copy send/receive API allows direct access to the TCP stack;

the zero-copy storage API makes it possible to write data to and read data from storage devices via DMA.

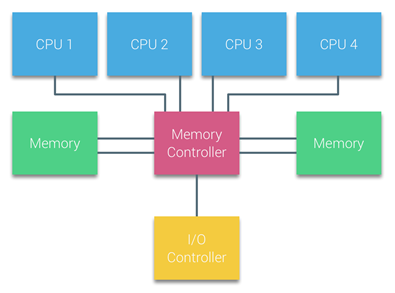

CPU and memory

Now let's take a closer look at how Seastar uses the CPU and memory. By default, a Seastar application takes all available cores (configurable, down to running on a single core), with one thread per core, and all available RAM (also configurable), distributing it evenly across the cores. The memory is not distributed arbitrarily but with NUMA in mind, so each core gets the memory closest to it. Seastar achieves this by overriding the memory allocation and deallocation functions; in other words, Seastar has its own heap memory management.

This is a good place to mention a drawback of Seastar: to work efficiently, it needs whole cores dedicated to it. Without them it still works, but not as fast as we would like, and in a cloud environment you may simply not have that level of control over the CPU. More generally, not every application can make good use of a share-nothing design.

Now back to the good parts. All of Seastar's primitives are preemptible: tasks periodically give control back to the scheduler instead of getting stuck, which lets you make full use of the framework's asynchrony. But what about your own code that may need a lot of CPU time, such as a loop with many iterations, hash computation, compression and the like? For this there is maybe_yield: insert it into a potentially heavy piece of code, and Seastar will, if necessary, suspend that resource-intensive task so that others can run.
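Here is a toy illustration of cooperative preemption in standard C++, not Seastar's API: a scheduler runs tasks in slices, and a long loop checks a budget and hands control back once it is spent, which is the role maybe_yield plays. The names Task, run_all and make_sum_task are hypothetical, and the budget is an iteration count for simplicity; as far as I know, Seastar decides based on elapsed time (its task quota) instead.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// A task runs for a bounded slice and returns true when it has finished,
// or false when it yielded and wants to be scheduled again.
using Task = std::function<bool()>;

// Minimal single-threaded round-robin run queue (a stand-in for a reactor).
void run_all(std::deque<Task> ready) {
    while (!ready.empty()) {
        Task t = std::move(ready.front());
        ready.pop_front();
        if (!t()) ready.push_back(std::move(t));  // yielded: back of the queue
    }
}

// A long CPU-bound loop (summing 1..n) that checks its budget on every
// iteration and gives the CPU back once `budget` iterations have run.
// `trace` records which task ran each slice.
Task make_sum_task(std::uint64_t n, std::uint64_t budget, std::uint64_t& result,
                   std::vector<char>& trace, char name) {
    assert(budget > 0);
    return [n, budget, name, i = std::uint64_t{0}, &result, &trace]() mutable {
        for (std::uint64_t done = 0; i < n; ++done) {
            if (done == budget) {  // budget spent: the "maybe_yield" point
                trace.push_back(name);
                return false;
            }
            result += ++i;
        }
        trace.push_back(name);
        return true;
    };
}
```

With two such tasks summing 1..6 in slices of two iterations, the slices interleave as A, B, A, B, A, B instead of A monopolizing the CPU until it finishes.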

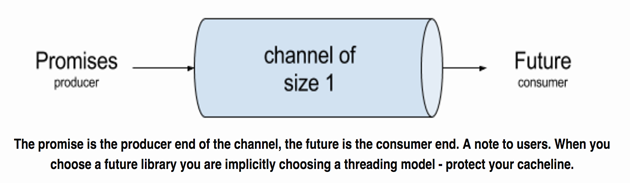

Asynchrony

All asynchrony is built on the future/promise concept. But it wouldn't be Seastar if it simply used std::future and std::promise, since waiting on a std::future blocks the thread. With its share-nothing architecture and no synchronization primitives, Seastar could not allow that, so its authors implemented their own future/promise pair that never blocks.
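To show what "a future that does not block" means, here is a minimal single-threaded sketch in standard C++. Instead of waiting for a value, you attach a continuation with then(), and it runs when the promise is fulfilled. Promise, Future and SharedState are my own hypothetical names; the sketch has no exception handling, and its set_value runs the continuation inline, whereas, as far as I know, Seastar queues it as a task on the reactor.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <optional>
#include <type_traits>
#include <utility>

template <typename T> class Promise;

// State shared by one Promise and one Future: either a value that has
// already arrived or a continuation waiting for it.
template <typename T>
struct SharedState {
    std::optional<T> value;
    std::function<void(T)> continuation;
};

template <typename T>
class Future {
public:
    explicit Future(std::shared_ptr<SharedState<T>> s) : state_(std::move(s)) {}

    // Attach a continuation instead of blocking; returns a future for its result.
    template <typename F>
    Future<std::invoke_result_t<F, T>> then(F f) {
        using R = std::invoke_result_t<F, T>;
        static_assert(!std::is_void_v<R>, "sketch supports value-returning continuations only");
        Promise<R> next;
        Future<R> result = next.get_future();
        auto run = [f = std::move(f), next](T v) mutable { next.set_value(f(std::move(v))); };
        if (state_->value) {
            run(std::move(*state_->value));         // value already there: run now
        } else {
            state_->continuation = std::move(run);  // otherwise park it in the state
        }
        return result;
    }

private:
    std::shared_ptr<SharedState<T>> state_;
};

template <typename T>
class Promise {
public:
    Promise() : state_(std::make_shared<SharedState<T>>()) {}
    Future<T> get_future() const { return Future<T>(state_); }

    // Deliver the value: run the waiting continuation, or store the value.
    void set_value(T v) {
        assert(!state_->value && "set_value called twice");
        if (state_->continuation) {
            auto cont = std::move(state_->continuation);
            cont(std::move(v));
        } else {
            state_->value = std::move(v);
        }
    }

private:
    std::shared_ptr<SharedState<T>> state_;
};
```

Nothing in this sketch ever waits: building a chain like p.get_future().then(...).then(...) only records what to do, and the work happens when set_value is called. Because everything stays on one thread (one shard), the shared state needs no mutex.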

The Seastar developers are also adherents of modern C++, just as our requirements at the beginning of the article called for. As soon as coroutines arrived in C++20, the authors of the framework adopted them, again with share-nothing and the absence of synchronization primitives in mind.

Continuation vs coroutine

Let's step away from Seastar for a moment and recall why so many people love coroutines. The two code snippets below show the simplest possible example: an asynchronous connect function.

Connect using continuations:

```cpp
// g_lg is assumed to be an application-wide seastar::logger defined elsewhere
seastar::future<> connect(std::string_view name_sv)
{
    // do_with keeps `name` alive until the whole continuation chain completes
    return seastar::do_with(std::string{name_sv}, [](std::string& name) {
        return seastar::net::dns::resolve_name(name).then([&name](seastar::socket_address addr) {
            return seastar::connect(addr, {}, {}).then([&name, addr](seastar::connected_socket fd) {
                g_lg.info("connected from {} to {}/{}", fd.local_address(), name, addr);
                return seastar::make_ready_future();
            });
        });
    });
}
```

Connect using coroutines:

```cpp
seastar::future<> connect(std::string_view name_sv)
{
    // Copy the name before the first co_await: the caller's string_view
    // may no longer be valid once the coroutine suspends
    std::string name{name_sv};
    auto const addr = co_await seastar::net::dns::resolve_name(name);
    auto const fd = co_await seastar::connect(addr, {}, {});
    g_lg.info("connected from {} to {}/{}", fd.local_address(), name, addr);
}
```

The connect function takes a name, resolves it to an address via DNS, connects to that address and logs the result. It is hard to think of a simpler example, yet even here continuations bloat the code and make it hard to read: out of nowhere, three levels of nested lambdas appear, and lambdas require explicit variable captures, which often leads to errors such as use-after-move. The coroutine implementation, by contrast, is linear code, just as many of us are used to writing in ordinary synchronous programs; the price is that you have to use the co_await, co_yield and co_return operators. The Seastar developers also claim that code written with coroutines is 10-15% faster thanks to fewer dynamic allocations. So having coroutines in the framework is certainly welcome.

From the depths of Seastar

It's time to look deeper into Seastar and discover some interesting things there. First of all, Seastar's SCTP is stream-based, that is, it uses the SOCK_STREAM socket type. You can live with this just as with TCP: write framers that consume incoming data until they have assembled one complete message, pass it to the client, then start consuming again, and so on. In other words, a framer is a mechanism that determines the beginning and/or end of a message in a byte stream. With TCP there is no choice; we work as always. SCTP is a different story, because it provides a built-in mechanism for marking the end of a message (even on stream sockets): the MSG_EOR flag. How the SCTP stack reports this flag depends on the socket API used to send and receive messages. However, by default Seastar provides no option to enable it. Besides this flag, I also want to receive MSG_NOTIFICATION in order to track the state of the SCTP association, as well as the Stream ID, Stream Sequence Number and Payload Protocol ID. To get control over all of this, two calls from the SCTP sockets API, sctp_sendv and sctp_recvv, were added to Seastar, as well as several socket options: SCTP_RECVRCVINFO, SCTP_INITMSG and SCTP_EVENT. The values of the last two are user-configurable; for example, we want to control the sinit_num_ostreams and sinit_max_instreams fields of sctp_initmsg. Voila! We now have finer control over SCTP, and no framers are needed.
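For comparison, this is the kind of framer you need over a plain byte stream (TCP, or SCTP without MSG_EOR handling). The sketch assumes a hypothetical wire format with a 4-byte big-endian length prefix; real 5G protocols define their own headers, so treat frame() and LengthPrefixedFramer as illustrations only. With MSG_EOR, the SCTP stack marks message boundaries itself and this whole class becomes unnecessary.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical wire format: a 4-byte big-endian length followed by the payload.
std::string frame(const std::string& payload) {
    assert(payload.size() <= 0xFFFFFFFFu);
    const auto len = static_cast<std::uint32_t>(payload.size());
    std::string out;
    for (int shift = 24; shift >= 0; shift -= 8)
        out.push_back(static_cast<char>((len >> shift) & 0xFF));
    return out + payload;
}

// Accumulates bytes from a stream socket and cuts out complete messages,
// however the bytes were split across reads.
class LengthPrefixedFramer {
public:
    std::vector<std::string> feed(const char* data, std::size_t n) {
        buf_.append(data, n);
        std::vector<std::string> messages;
        std::size_t pos = 0;
        while (buf_.size() - pos >= 4) {
            const auto* p = reinterpret_cast<const unsigned char*>(buf_.data() + pos);
            const std::uint32_t len = (std::uint32_t{p[0]} << 24) | (std::uint32_t{p[1]} << 16) |
                                      (std::uint32_t{p[2]} << 8) | std::uint32_t{p[3]};
            if (buf_.size() - pos - 4 < len) break;  // payload not complete yet
            messages.emplace_back(buf_, pos + 4, len);
            pos += 4 + static_cast<std::size_t>(len);
        }
        buf_.erase(0, pos);  // keep only the unfinished tail
        return messages;
    }

private:
    std::string buf_;
};
```

Feeding the stream in arbitrary chunks (say, three bytes at a time) yields the same messages as feeding it all at once, which is exactly the property a framer has to guarantee.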

The next point of interest: Seastar has a server_socket entity, which is essentially a wrapper around the listening socket. It has an abort_accept method which, as the name suggests, stops accepting new connections. The method is implemented identically for TCP and SCTP: internally it calls POSIX shutdown with the SHUT_RD flag. Here is the funny part: with TCP everything works as expected, but with SCTP the stack returns ENOTSUPP (Operation not supported), which Seastar happily wraps in an exception and throws at you. Perhaps Seastar should not be blamed, since it simply hands control over to Linux, come what may. Still, the fact that the default Seastar API shoots you in the foot out of the blue is pretty funny. A workaround is to destroy the server_socket object (letting its destructor run) instead of calling abort_accept. It seems to work.

And finally, three bugs in the WebSocket classes. However, they live in the experimental namespace, so we will not be too critical of Seastar. The first bug concerns the order in which the destructors of the server and its connections are called: the connections must, of course, be released first, and only then the server. The other two concern how the header size and length value are formed when sending data. According to RFC 6455, the payload length is encoded differently depending on the size of the buffer. Seastar knows about this, but there was an off-by-one error of sorts: instead of length = 4 it used 3, and instead of the value 0x7f it used 0x7e.
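For reference, here is how the RFC 6455 (section 5.2) length encoding works, written as a standalone C++ sketch rather than Seastar's code. Payloads of up to 125 bytes fit in the 7-bit length field (2-byte header), lengths up to 65,535 use the marker 126 (0x7e) plus a 16-bit length (4-byte header), and anything larger uses 127 (0x7f) plus a 64-bit length (10-byte header). The boundaries at 125/126 and 65,535/65,536 are the natural place for off-by-one mistakes. The function name ws_header is mine; the frame is unmasked, as server-to-client frames must be.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Header of an unmasked (server-to-client) WebSocket frame per RFC 6455, section 5.2.
std::vector<std::uint8_t> ws_header(std::uint8_t opcode, std::uint64_t payload_len, bool fin = true) {
    assert(opcode <= 0x0F);
    assert((payload_len >> 63) == 0);  // the RFC requires the top bit to be zero
    std::vector<std::uint8_t> h;
    h.push_back(static_cast<std::uint8_t>((fin ? 0x80 : 0x00) | opcode));
    if (payload_len <= 125) {
        h.push_back(static_cast<std::uint8_t>(payload_len));      // 2-byte header
    } else if (payload_len <= 0xFFFF) {
        h.push_back(0x7E);                                        // 126: 16-bit length follows
        h.push_back(static_cast<std::uint8_t>(payload_len >> 8));
        h.push_back(static_cast<std::uint8_t>(payload_len));      // 4-byte header
    } else {
        h.push_back(0x7F);                                        // 127: 64-bit length follows
        for (int shift = 56; shift >= 0; shift -= 8)
            h.push_back(static_cast<std::uint8_t>(payload_len >> shift));  // 10-byte header
    }
    return h;
}
```

Testing exactly at the boundary lengths (125, 126, 65,535 and 65,536) is a cheap way to catch this class of bug.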

Stackful vs stackless

And finally, a little holy war, so that there is something to argue about in the comments. I mentioned above that userver uses stackful coroutines. Let me say right away that this is neither good nor bad; it's just that stackless coroutines are better suited to the needs of a core network, and here's why:

| Type | Advantages | Flaws |
|---|---|---|
| stackful | no code changes; stack-based memory | fixed-size stack; context switch |
| stackless | “inf” number of coroutines; low overhead per coroutine; no context switch; less memory per frame | dynamic memory allocation; co_* operators; futurize the whole path |

The table shows the advantages and disadvantages of both options. Stackful coroutines have two big advantages. The first is that you don't need to change your code: no co_* operators, and no need to propagate futures all the way along the call path. The second is stack-based memory: the memory for the coroutine frame is not dynamically allocated. However, that second advantage is also the main limitation of stackful coroutines. The stack has a fixed size, around 2 MB on average, so the number of stackful coroutines you can spawn is limited by how many such stacks fit in memory. And you cannot size the stack precisely, because it is not known in advance how much memory a coroutine will need. On top of that, switching between stackful coroutines involves a context switch, and that is bad.

Now on to stackless coroutines. Unlike stackful ones, they can be spawned in huge numbers, since their frames are allocated on the heap. Stackless coroutines also have less overhead, partly because the memory allocated for each coroutine frame is tailored to that coroutine rather than being a fixed chunk, as with stackful ones. Plus, there is no context switching. The obvious drawback is the dynamic allocations themselves. However, compilers, knowing they are dealing with coroutines, can apply various optimizations (in some cases eliding the allocation altogether) and use allocators tuned for coroutine frames. The other drawbacks are the need to use the co_await, co_yield and co_return operators and to propagate futures along the entire call path.
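To see why stackless frames are so cheap, here is roughly what a compiler turns a stackless coroutine into, written by hand in C++17: the locals that must survive a suspension, plus an index recording where to resume, packed into one small heap object. SumFrame and run_to_completion are hypothetical illustrations of the transformation, not actual compiler output.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <vector>

// Hand-written equivalent of a stackless coroutine such as
//   for (i = 0; i < n; ++i) { sum += i; co_await something; }
// Everything that must survive a suspension lives in this frame.
struct SumFrame {
    std::uint32_t state = 0;  // resume point: 0 = start, 1 = after a suspension, 2 = done
    std::uint32_t i = 0;      // loop counter that survives suspensions
    std::uint32_t n = 0;
    std::uint64_t sum = 0;

    // Runs until the next suspension point. Returns false once finished.
    bool resume() {
        switch (state) {
        case 0: i = 0; break;   // first entry: start the loop
        case 1: ++i; break;     // resumed: continue with the next iteration
        default: assert(state == 2); return false;  // already finished
        }
        if (i >= n) {
            state = 2;
            return false;
        }
        sum += i;
        state = 1;
        return true;            // the co_await: suspend and return to the caller
    }
};

// Drive one frame to completion and report how many times it suspended.
std::uint32_t run_to_completion(SumFrame& f) {
    std::uint32_t suspensions = 0;
    while (f.resume()) ++suspensions;
    return suspensions;
}
```

For scale: on a typical 64-bit ABI this frame is 24 bytes, so 100,000 of them take about 2.4 MB plus allocator overhead, whereas 100,000 stackful coroutines with 2 MB stacks would reserve around 200 GB (in practice much of each stack is usually reserved rather than committed, so that is an upper bound).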

This concludes my dive into Seastar. I invite you to discuss in the comments whether this framework is the most appropriate choice for implementing a carrier-grade 5G backbone network.