Remember how infrastructure was managed 20 years ago

“Why not remember how it all began?” we thought, and gathered a council of elders: those who “were there 3000 years ago” and saw a time when there was no sign of DevOps in the world, automation was done with custom scripts, and developers sometimes experimented directly in production.

We deliberately moved away from the “company anniversary biography” format. First, it's boring, both for us and for you. Second, it is much more interesting to look at the context of the era as a whole: to see what has changed, understand the history of our own products, and look carefully into the future.

Below the cut you will find our warm, nostalgic memories of the times when the trees were tall and the server rooms were small and stuffy.

Infrastructure management: from shell to Kubernetes

At the beginning of the 2000s, the word “server” meant something physical, real, brutal: a piece of hardware. Such machines were controlled via a terminal or a COM (serial) port, or at best through a KVM (keyboard, video, mouse) switch. By the way, COM ports are still used to work with hardware that has no display of its own, such as routers.

If a company had one or two servers, they could be managed by hand. But what if there are 5, 10 or more machines? Without automation it is impossible: there are too many routine operations.

Administrators began writing their own scripts, as a rule in plain shell with no frills. But at some point these scripts inevitably grew past 1000 lines of code. That is inconvenient too: if the script hits an error at the very beginning, all the “automation” goes down the drain, and once again you have to dive into the depths of the code and look for what went wrong.

Closer to 2010, specialized automation tools such as Puppet, Chef and CFEngine came into wide use. Unlike the imperative approach typical of scripts (come on, computer, perform these actions in sequence), they take a declarative approach (reach the desired state described in a file), which is much better suited to automating processes.

Roughly speaking, if in a script you had to write something like “add the line foo to the file bar,” then tools like Puppet let you formulate the task as “the file bar should contain the line foo.” Accordingly, the system did not blindly execute the instruction, but first checked whether the required line was already in the file. If it was, the step was skipped. If not, the line was added. And if the program could not perform some step, all the errors were visible.
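The difference is easy to see in a few lines of Python. This is only a minimal sketch of the “check first, then change” idea, not how Puppet or any other tool is actually implemented; the function name `ensure_line` is made up for the illustration.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file at `path`.

    Returns True if the file had to be changed and False if it was
    already in the desired state (hypothetical helper, for illustration).
    """
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # desired state already reached, skip the step
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        bar = Path(tmp) / "bar"
        print(ensure_line(bar, "foo"))  # first run has to add the line
        print(ensure_line(bar, "foo"))  # second run finds it and changes nothing
```

An imperative “append foo to bar” would add a duplicate on every run; the declarative version can be run any number of times and the file ends up the same.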

Instead of a thousand-line script, it was enough to write a declarative file (in JSON, INI or another format, depending on the system) of roughly 50 lines, which is much easier to maintain.

As promised, we are sharing our memories of IT in the past:

Once upon a time I wrote a program that was supposed to send packets over the network, and it didn't work. You launch it and nothing happens. You would think: just take it and debug it. But the level there is very low: assembler, interrupts, no error logs. For example, I executed the code in an interrupt, and the value 10 ended up in the AX register. What is it, where did it come from? Make of it what you will. I spent quite a lot of time before I found a typo in the book from which I had copied the code fragment. And there was absolutely nowhere to look things up; you just had to dig in and work it out yourself. It was like that everywhere: do you need information? Look for the people who have it. There was no other way.

But it is one thing to automate routine, and quite another to manage a hardware zoo. Each company had its own stack of infrastructure management tools. For each narrow task you had to find an individual solution, semi-automatic or even manual. Roughly speaking, you could take a job as a system administrator with several years of experience behind you and spend six months just learning new tools, simply because your previous company used a radically different set.

For example, here is a non-trivial task: a cable comes out of switch A and runs off somewhere toward the ceiling. Where does it go? What is it for? A mystery. You are lucky if a disciplined colleague documented everything in some text file during installation, or even drew it in Visio. But finding that file, finding the right place in it and deciphering it takes a huge amount of time.

Or what if management decides to switch to servers from another vendor? The old scripts no longer fit, so you have to figure out the new hardware and write new ones.

As for administration, in those years many people kept records of racks, IP addresses and much more (for example, which rack units were occupied or free) in ordinary Excel files. It was all terribly inconvenient: a bunch of scattered files in a bunch of versions, which had to be emailed back and forth and which everyone had to remember to update…

And the accounting department could show up asking where the server bought three years ago is located. But no one remembers; even the staff may have changed since then. So the inventory and the running around begin. An irritated admin makes tea and hides in the smoking room to put off the search for the serial number of the server, which is 100% blocked in the rack by other units. You can’t read it until you take apart half the rack…

Actually, our DCImanager grew out of a whole set of scripts and other tools that we used within the company to solve similar problems.

Today the racks can be visualized and data center maps added. This matters because once a company has more than 1000 servers, it becomes hard to keep track of which machine is in which part of the room. In addition, DCImanager receives all data directly from the equipment, and the IPAM module automatically assigns IP addresses to servers.
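To show what automatic address assignment means in practice, here is a toy sketch built on Python's standard `ipaddress` module. It is an illustration of the general idea only and says nothing about how the IPAM module in DCImanager works internally; the class `IpPool`, the subnet and the server names are all invented for the example.

```python
import ipaddress


class IpPool:
    """A toy IPAM pool that hands out free host addresses from one subnet."""

    def __init__(self, cidr: str) -> None:
        self.network = ipaddress.ip_network(cidr)
        self.assigned = {}  # server name -> address object

    def allocate(self, server: str):
        """Return the server's address, assigning the first free one if needed."""
        if server in self.assigned:
            return self.assigned[server]
        used = set(self.assigned.values())
        for host in self.network.hosts():
            if host not in used:
                self.assigned[server] = host
                return host
        raise RuntimeError(f"no free addresses left in {self.network}")

    def release(self, server: str) -> None:
        """Return the server's address to the pool, if it had one."""
        self.assigned.pop(server, None)


if __name__ == "__main__":
    pool = IpPool("10.0.0.0/29")  # hypothetical subnet
    for name in ("srv-1", "srv-2", "srv-3"):
        print(name, pool.allocate(name))
```

The point is that nobody has to open a spreadsheet to find a free address: the pool itself knows what is taken, and asking twice for the same server returns the same address.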

The service has an accounting module that stores the current location of each server, the invoice it was purchased under, its delivery date, and all its additional peripherals. You don’t have to take anything apart or search for anything; it is enough to show a little responsibility when filling in the data. In the future, we want to add a cable journal to keep track of cable connections between racks.
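As a rough picture of what such a record might hold, here is a small Python sketch. The field names, the `Inventory` class and the sample values are assumptions made up for the illustration; they are not the actual data model of DCImanager.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ServerRecord:
    """One inventory entry (hypothetical fields, for illustration only)."""
    serial: str
    rack: str
    unit: int
    invoice: str
    delivered: date
    peripherals: list = field(default_factory=list)


class Inventory:
    """Keeps records indexed by serial number, so nobody has to open the rack."""

    def __init__(self) -> None:
        self._by_serial = {}

    def add(self, record: ServerRecord) -> None:
        self._by_serial[record.serial] = record

    def locate(self, serial: str) -> str:
        """Answer the accounting department's question: where is this server?"""
        record = self._by_serial.get(serial)
        if record is None:
            return f"{serial}: not in inventory"
        return f"{serial}: rack {record.rack}, unit {record.unit}, invoice {record.invoice}"


if __name__ == "__main__":
    inv = Inventory()
    inv.add(ServerRecord("SN-0001", "R12", 7, "INV-42", date(2021, 3, 15), ["RAID card"]))
    print(inv.locate("SN-0001"))
    print(inv.locate("SN-9999"))
```

With the serial number as the key, the question from accounting becomes a lookup instead of an expedition.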

In fact, this service is built on a foundation from our personal experience, which we then reworked and made available to all users.

Virtualization

Imagine a company has one physical server. It needs to support accounting, mail, a website, various internal services, and so on. Having a separate piece of hardware for each task is wasteful. Keeping everything within one OS is inconvenient and unsafe. That is why virtualization, where several operating systems isolated from each other coexist on one machine, is an indispensable practice.

Previously, physical machines were “carved up” into VMs purely by hand. You had to click through the same fields an incredible number of times. Nowadays the process takes seconds, but back then each VM had to be configured separately. The chance of a trivial human error was high: to create 10 identical VMs, you had to repeat the same set of operations 10 times. And if the machines were slightly different, it was easy to click the wrong thing somewhere. Made a mistake? Redo it. Nowadays, a virtual machine can be described in code and deployed from a template. Convenient? Undoubtedly.
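Here is a minimal Python sketch of what “described in code and deployed from a template” can look like. It is not the VMmanager or Terraform format; `VmSpec`, `from_template` and the image name are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VmSpec:
    """A VM described as data (hypothetical fields, for illustration only)."""
    name: str
    cpus: int
    ram_mb: int
    disk_gb: int
    image: str


def from_template(template: VmSpec, count: int, prefix: str) -> list:
    """Produce `count` specs that differ from the template only by name."""
    return [replace(template, name=f"{prefix}-{i:02d}") for i in range(1, count + 1)]


if __name__ == "__main__":
    # The image name below is made up for the example.
    template = VmSpec(name="template", cpus=2, ram_mb=4096, disk_gb=40, image="debian-12.iso")
    vms = from_template(template, 10, "web")
    # A "slightly different" machine is an explicit, visible override.
    vms[2] = replace(vms[2], ram_mb=8192)
    for vm in vms:
        print(vm)
```

Ten identical machines come from one definition instead of ten rounds of clicking, and every machine is built from the same image by construction.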

Another important thing is a shared set of ISO images. If several people in a company worked with virtual machines, even systems with the same technical specifications could end up deployed from slightly different images, which created problems.

VMmanager is responsible for virtualization. Like DCImanager, it actually grew out of internal automation tools.

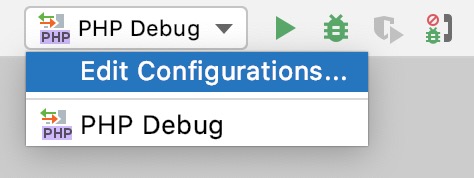

Over the years a lot has changed in it: the interface, the integrations with third-party tools and monitoring tools. Under the hood, everything was also constantly improved and modernized. We moved from FreeBSD jails to Xen, and later introduced KVM. We also added Terraform support so that infrastructure can be stored as code.

Previously, monitoring and the various integrations had to be configured separately. Notifications are a separate story: now you don’t even need to check your email, because all alerts come to Telegram.

Back then, everything was different:

One day the electricity was cut off at our institute, where we were responsible for the infrastructure. In the middle of the night, when no one expected it. I remember that my colleagues and I were just having a civilized celebration of some professional holiday. A couple of hours later the power came back, and a reasonable thought crept into our heads: it would be nice to turn on the server and check that everything is working. So, it is late at night. Determined to fix everything, I get to the institute and try to go to the server room. The security guard, guided by the classic “not allowed” and “not in this state,” sends me on my way. What can you do? I obey. But the very next day, having finally turned on the ill-fated server, I write a memo saying that, due to the excessive zeal of the security service, I was unable to carry out the necessary technical work, and therefore all the rector’s mail for those 24 hours was irretrievably lost. As a result, I was officially given 24/7 access 🙂

Billing

In 2003, if you wanted to provide hosting services, you had to write a billing system from scratch, and only for internal use.

The client submitted a request to connect the service. The manager or admin processed this request (manually set up everything that the client needed), then informed the accounting department so that they would issue an invoice to the client through 1C.

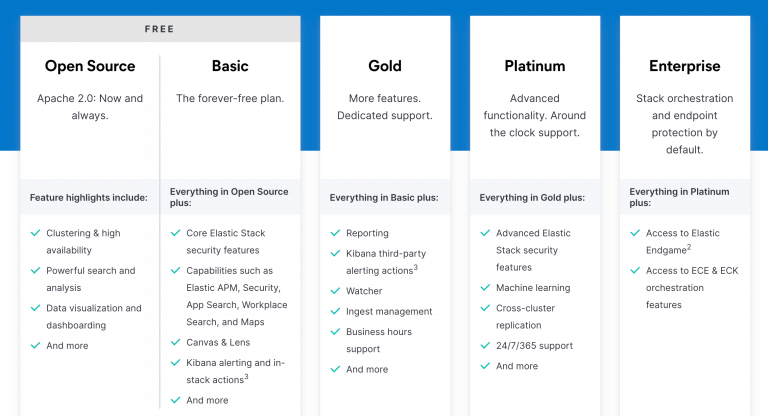

The alternative was to contact a large integrator, who would implement billing in the company within a few months. There were very few boxed products on the market. Now our BILLmanager supports many payment options, including cards, and back then there was not even such a system.

Here's another story from our colleague from one of his previous jobs:

We had a funny story with one foreign client. French, I think, but I can’t vouch for it: it was a long time ago. At our provider, a password was generated automatically when a login was created. One day, technical support picks up the phone and hears a complaint from this client: why did you give me a French swear word as a password?! We explained the situation and offered to change it to something more decent. The client’s representative immediately recovered and laughed: no, no, he said, leave it as it is. Indecent, but easy to remember.

In those years, billing was purely a tool for mutual settlements. Modern products (including ours) automate the whole process, from the client submitting a request to actually connecting the required service, for example deploying a VM. No manual intervention is needed.

On the other hand, general automation has given rise to the phenomenon of “I won’t do this, I wasn’t hired for this” in the new generation of programmers. It's hard to say whether this is good or bad.

Everything was a little different before. All the specialists in the city knew each other. The IT specialists were almost personally acquainted with the clients and their technical departments. Get-togethers and general meetings took place regularly. And it was unthinkable to hear a phrase like “it’s none of my business” from anyone. Whatever the problem, whether running a wire, setting up software or installing hardware, people willingly agreed to do the work. Partly out of interest. Partly out of professional pride. Partly because there was no one else to solve the problem. They were fired up by new ideas and trends and moved forward fearlessly. In modern IT, becoming a pioneer is perhaps no longer so easy, but the fire of curiosity and the passion for knowledge still burn in today’s IT specialists.

Want to get a little nostalgic? Share your stories in the comments!