Myths about Unreal Engine 5 – Nanite

Good day,

The success of the new version of Unreal Engine has not gone unnoticed by professionals of all stripes. Attempts to find a rational explanation for the ongoing revolution have clouded the minds of even the most stubborn business people. Journalists compare it with everything that comes their way, trying to drag the technology from the world of ideas into any available space. Rumor has it that a couple of coders were admitted to a psychiatric ward, able only to stammer about mesh shaders. And those who have seen the source code speak of its unusual color, clearly not of this world; perhaps the theme was even a light one.

All this madness gave rise to myths and entire cults. Ray tracer turned against ray tracer, the ray caster was smeared between frames, the ZBrush artist, laughing wildly, copy-pasted two brush strokes from a photo scan of obscene models straight into the game, and even ChatGPT called the demonstration a deepfake and the news a plant. Kroll ate a dragon, a dog and a cat… but wait, what was I talking about…

So, I can’t tell you what Nanite actually is, but I can tell you what it definitely isn’t. So...

Nanite is a virtual, or virtualized, geometry technology that came to us with the alpha version of Unreal Engine 5; version 5.3 is currently available. Its main advantage is the simply colossal amount of geometry it can display, so far beyond the ordinary that a reasonable question arises: “How is this possible?”

Regular games can display up to several hundred thousand polygons, and the budget per model reaches several tens of thousands for the most detailed models.

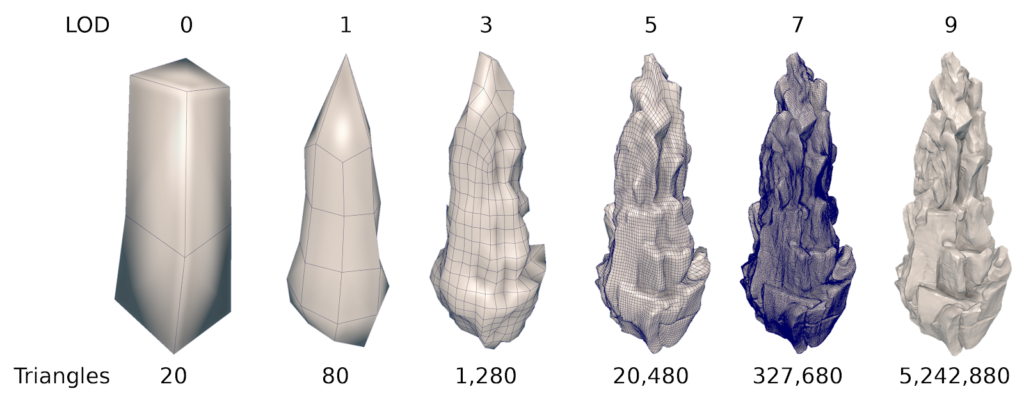

Games on Unreal Engine 5, meanwhile, can display billions of polygons per model, and top-end video cards can display a trillion polygons at 60 hertz in debug mode.

And to everyone’s surprise, Nanite did not require a nuclear reactor to run. Moreover, polished versions of the demos ran on the latest consoles. So did unpolished ones, but that came later.

Consoles are the most important point, because throughout history consoles have been the limiting factor in game development. You could own the most advanced hardware, with a video card alone 3-5 times more powerful than an entire console, and all you could do with that power was raise the resolution or the frame rate, because the maximum level of graphics was capped by what the core game engine, written for consoles, supported.

The word Nanite itself means a nanorobot, popular in science fiction and culture of the early 2000s and currently being developed in laboratories. Nanites work together as a swarm, and in large numbers they can build huge shapes of any kind with nanoscale precision. That is the analogy with how the technology itself works.

We’re done with the preludes; on to the myths.

Myth one: Nanite is custom mesh shading

This is perhaps the most widespread myth, and it is the first thing that people posing as experts on everything try to pass Nanite off as. The myth was created by a team of dissenting Unity devotees and a sect of witnesses of the next-generation race.

It all comes down to the Unreal Engine 5 presentation. It was so devastating and astonishing that all the competitors and their servants found themselves losing a race they didn’t even know they were in. The journalists knew about it, though; or rather, they invented it, but in such a way that it seemed real and they were merely its witnesses. One way or another, journalists began pestering everyone with the question “What will the others show?” And then a new myth appeared, a myth within a myth, a nesting doll of a hoax. The new myth told of some kind of response, mainly from Unity and from anyone else they could find. That myth is the race itself.

It’s not that new versions of Unity didn’t come out after May 2020; they did. But as history has shown, no one showed up for the race. So this myth does not even need to be destroyed.

However, amid the stream of these questions, journalists produced a series of answers of their own. The answer about the technology went as follows: supposedly, Nanite is the next iteration of conventional technologies, and competitors are about to get exactly the same thing. Unity, too, will come up with such an answer... But which conventional technologies? The ones Nvidia showed at its presentation in 2018. After all, LoDs and geometry culling were shown there. Almost like in UE5, or not?

GDC 2018 / Nvidia / Asteroids

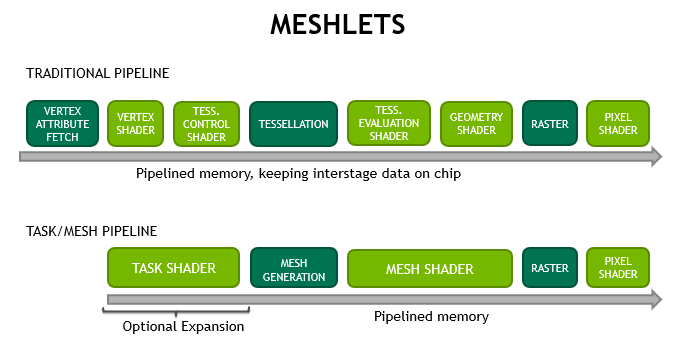

In 2018, Nvidia introduced a new technology implemented at the hardware level, specifically in Turing chips and nowhere else. It was called mesh shading, and it offered mesh shaders in place of the standard pipeline: everything that deals with vertices, right up to the rasterizer, was replaced.

And that is the whole technology. Really, everything. Literally. No nuances. Absolutely everything. If this makes it clearer: humanity, Huang included, knows nothing more about mesh shaders within the visible universe. The rest of the demonstration is arbitrary code running on top of the mesh shaders themselves.

Mesh shaders solve a specific problem known since Direct3D 9, that is, for a very long time. As for the myth, it can already be refuted at this stage in several ways:

The Asteroids demo can be downloaded from the Nvidia website, along with the parts of the source code responsible for auto-LoDing and geometry culling, with detailed explanations and a whole article about how it all works. Since 2018.

Mesh shaders are available to anyone with a video card that supports them in hardware: through Nvidia’s own API extensions since 2018, and later as part of DirectX 12 Ultimate.

As you know, no one else has shown anything like Nanite. Since 2018. The myth is destroyed.

For those who are really interested in how exactly mesh shaders work, and why Nanite is definitely not them, read on.

Mesh shaders and geometry

From the very beginning of the graphics pipeline, everything was simple: there are vertices and there are pixels; we load vertices into the video card and get pixels. We can do whatever we want with the vertices and the pixels. Everything went well until a logical question arose: what if we want to change the number of vertices themselves, at least somehow? The number of pixels can be changed through the vertices, but the vertices themselves are read from video memory. Half of all the technologies introduced since then in DirectX 10 and 11, half of everything that distinguishes the generations of graphics APIs, is a solution to the problem of dynamically changing vertices.

The first solution is to read vertices directly from the CPU’s RAM. This is the most expensive method: it requires synchronization between the GPU and the CPU and runs into the limits of bus bandwidth and system memory. Applying it to models en masse is a road to slide shows.

Of course, DirectX 10 offered another straightforward solution: the geometry shader. A geometry shader is a program that runs on the video card after the vertex shader. It can do additional work and add vertices. With DirectX 10, the problem was almost solved.

However, at the moment, according to the documentation, anyone who uses geometry shaders commits a crime against honor, conscience, people and God, and is also a hereditary pervert.

It is worth delving into the operation of the video card to understand the problem.

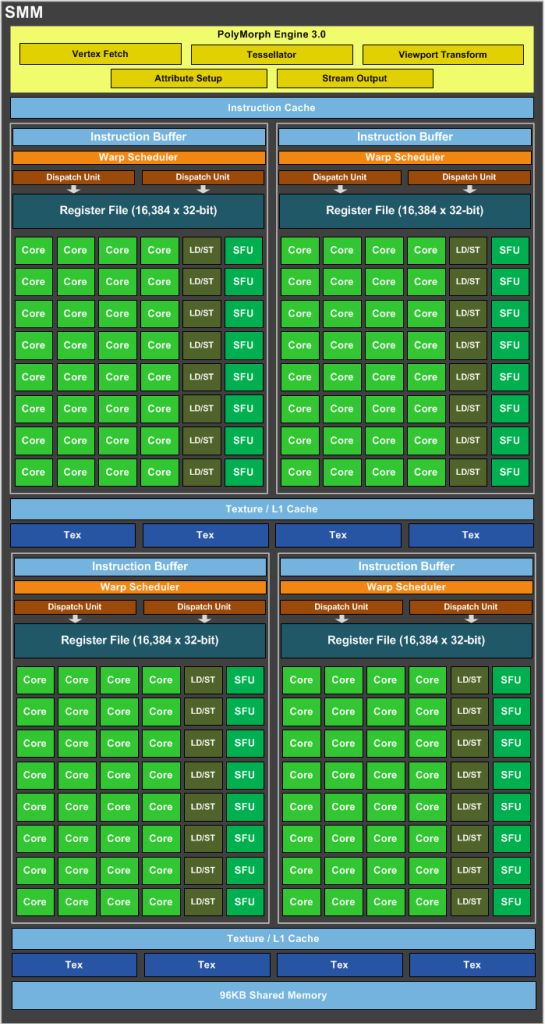

Oh, not that:

So, before us is one of the large blocks (SMM) of an Nvidia Maxwell-generation chip from the 2010s. Each SMM contains four smaller processing blocks, and these are what interest us. The Warp Scheduler, which issues threads for execution, is marked in orange, and the green Core blocks are the very CUDA cores that perform the calculations.

Vertex and pixel shaders run on the green cores, so one large block can process 128 vertices or pixels at once. But only a Warp Scheduler can add a thread for execution; there are only four of them per large block, and each does this one thread at a time. This is where the bottleneck appears, because the geometry shader instructions that add vertices run on the Warp Scheduler. Once vertices have been added, further calculations can be launched on them, and these may not fit into the size of one small block, 32 threads. As a result, a geometry shader is a game of “the floor is lava”: the programmer has to account for the quirks of particular hardware, and any other hardware may produce uncontrollable lags.
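To put numbers on that, here is a small Python sketch of the arithmetic. It is only an illustration: the constants are the Maxwell figures quoted above, and the helper names are made up for this example rather than taken from any real API.

```python
WARP_SIZE = 32           # threads in one small block, as quoted above
SCHEDULERS_PER_SMM = 4   # warp schedulers in one large block (SMM)


def threads_per_smm():
    """Threads one large block can run at once."""
    return WARP_SIZE * SCHEDULERS_PER_SMM


def warps_needed(thread_count):
    """Number of 32-thread groups needed to cover thread_count threads."""
    return -(-thread_count // WARP_SIZE)  # ceiling division


def idle_lanes(thread_count):
    """Lanes that sit idle because the work does not fill whole groups."""
    return warps_needed(thread_count) * WARP_SIZE - thread_count
```

Under these assumptions, work that grows from 32 to 33 threads after vertices are added no longer fits one small block: it occupies two groups and leaves 31 lanes idle.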

To solve the problems of the geometry shader, which was already in serious doubt, the compute shader appeared with DirectX 11. A compute shader works even more simply: you write a program that executes however it likes, as long as its output is an array of vertices, which are then passed to the regular pipeline.

Of course, if everything were that simple, we would have stopped there. Compute shaders have a major problem of their own: they are unified. They run on the general-purpose compute cores and bypass all the specialized blocks of the graphics card (marked in yellow), which are much cheaper in transistor budget, power consumption and performance cost. Moreover, sometimes the behavior of the specialized blocks has to be reproduced in software, which becomes something of a performance Ouroboros, because the result must then be sent back into the standard pipeline, where it passes through the specialized stages once more. On early video cards, not all blocks were available to compute shaders, but these are nuances that were fixed over time.

Tessellation is the hero of the day who could have saved everyone, yet turned out to be of no use to anyone. Also known as the tessellation shader, it handled dynamic geometry generation in specialized blocks, while the choice of the level of detail and the resulting transformations were programmable stages that fit the video card’s cores perfectly. Architecturally, it was such a good solution that it is hard to come up with a better one. In practice, it turned out to come with an equally large set of shortcomings.

In modern graphics, all the geometry of a level may be submitted for rendering several times per frame, and even so, vertex processing may take 10% of the total work, with the remaining 90% going to pixel shaders. Tessellation turned the tide and managed to make vertex processing as slow as pixel processing.

It is simply incredible, but tessellation splits each polygon (triangle) into a preset number of parts, from 1 to 32. To be clear: up to 32 polygons are created for each polygon, every one of them goes through full processing, and they are discarded only at the rasterization stage. The stage becomes 32 times heavier than normal, and half of the result is not even visible. On top of that, the fixed-function part turned out to work on patches, and a patch is applied according to a template fixed for the model.

The developers tried. They honestly tried to make 3D textures and patches for the models in order to improve the graphics; they honestly tried to change the very structure of scene processing in order to make some use of tessellation. As history has shown, not all models lend themselves to templates, but the biggest blow, one that not a single video card could withstand, was the blow to performance. That was tessellation as we knew it, and it is no longer with us.

And so, in 2018, the winner and hero that once overthrew the very first principle of the industry, the geometry shader, the one that was supposed to be everyone’s flawless savior, itself ended up with the status of “outdated interface” / “not recommended for use”.

Meet mesh shading, finally! A shader that solved every problem radically and simply. It completely removes all the vertex-processing stages of the classic pipeline, right up to rasterization. An array of polygons is supplied as input, and the same mesh shader is launched for each polygon. From the mesh shader you can launch sub-threads and process the polygons further as you please. The output values are passed directly to the rasterizer.

You understood correctly: that loudly advertised mesh shader is in fact the good old compute shader, for polygons. Something like this:

int Main(Triangle Args)

Saying that anything at all runs on mesh shaders is the same as saying “it runs on a compute shader” / “it runs on a video card” / “it runs on a computer”. This explains absolutely nothing.

With compute shaders, you can create your own custom culling based on your own algorithms, as was done in the Nvidia presentation, where the culling source code is available to everyone.
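To show how ordinary such custom culling is, here is a minimal Python sketch of one common variant, frustum culling by bounding spheres. It is not the code from the Nvidia demo: the function names and the plane format `(nx, ny, nz, d)` are assumptions for this illustration, and on a real GPU each object would be tested by its own thread.

```python
def sphere_visible(center, radius, planes):
    """True if the bounding sphere is at least partly inside every plane.

    Each plane is (nx, ny, nz, d) with a normal that points into the
    visible volume, so points inside satisfy n . p + d >= 0.
    """
    for nx, ny, nz, d in planes:
        distance = nx * center[0] + ny * center[1] + nz * center[2] + d
        if distance < -radius:  # the whole sphere is behind this plane
            return False
    return True


def cull(objects, planes):
    """Return the indices of objects whose bounding spheres survive."""
    return [
        index
        for index, (center, radius) in enumerate(objects)
        if sphere_visible(center, radius, planes)
    ]
```

The design point is that nothing here depends on mesh shaders as such; the same test can run in a compute shader, a mesh shader, or on the CPU.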

All connections between Nanite technology and Mesh shading are a hoax. Nanite is the technology, the algorithm itself. Mesh shader and compute shader are the platform on which the algorithms run. One cannot be the other.

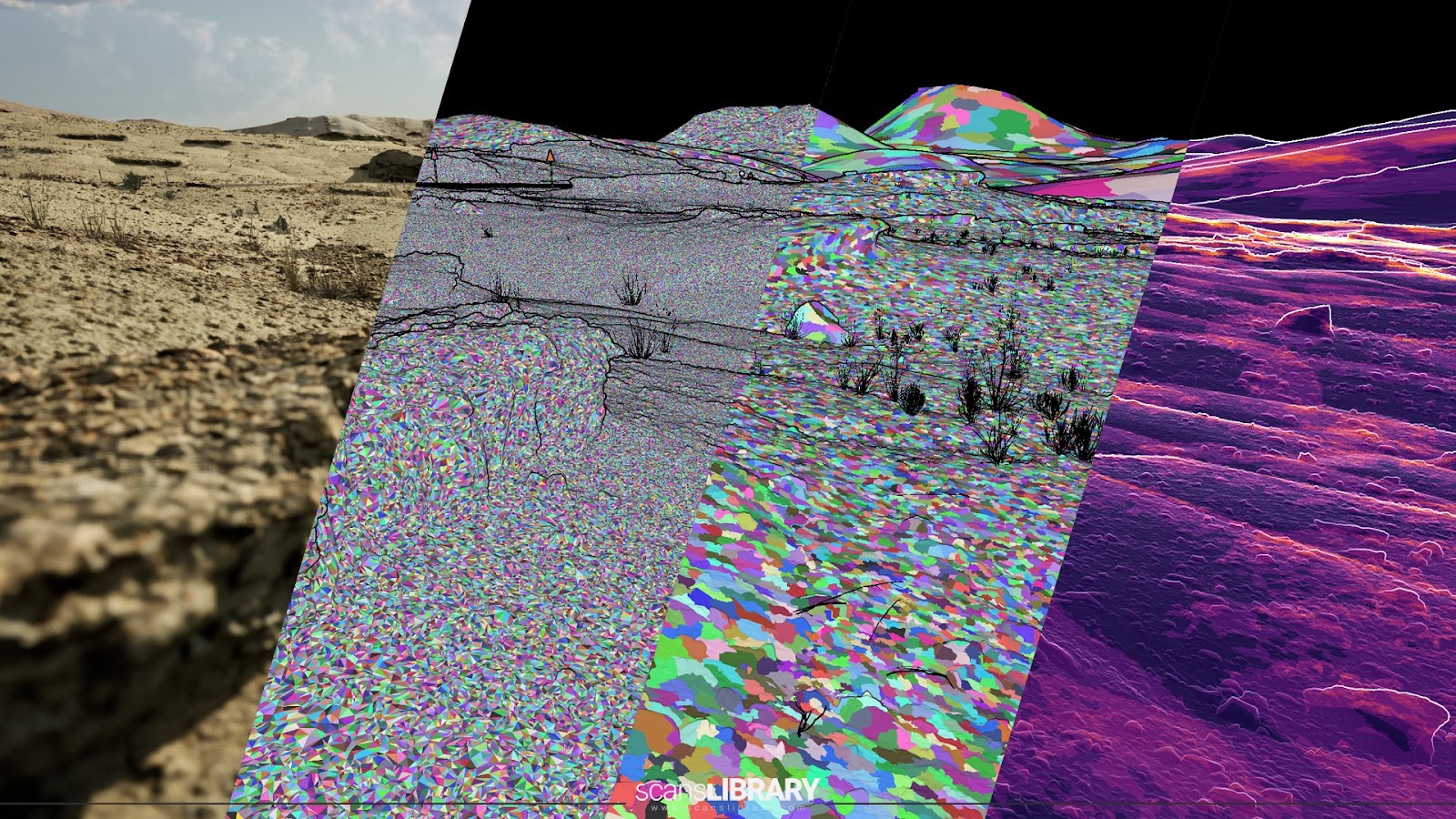

Do you notice the similarity?

Now do you understand those brilliant analysts who came up with all this? They’re colorful! So Nanite is mesh shaders!

The final nail in the coffin of this comparison is meshlets. In mesh shaders, a meshlet is any group of vertices processed in parallel; it is the WorkGroupID. Clusters in Nanite are part of the model’s visibility hierarchy; they are a structural part of the model itself. All that connects the two is that both are shown in different colors, purely as markup; in the real program, neither has any color at all.
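To make the “any group of vertices” point concrete, here is a rough Python sketch that greedily cuts an index buffer into meshlets. It is a simplified illustration, not Nvidia’s or anyone else’s actual builder; the function name is hypothetical, and the default limits of 64 vertices and 126 triangles are an assumption based on commonly quoted recommendations.

```python
def build_meshlets(indices, max_vertices=64, max_triangles=126):
    """Greedily split a triangle list into meshlets.

    Returns a list of (vertex_ids, local_triangles) pairs, where the
    triangles index into the meshlet's own vertex list.
    """
    meshlets = []
    local = {}       # global vertex id -> local index in current meshlet
    triangles = []   # triangles of the current meshlet, in local indices

    for i in range(0, len(indices), 3):
        tri = indices[i:i + 3]
        new = [v for v in dict.fromkeys(tri) if v not in local]
        full = (len(local) + len(new) > max_vertices
                or len(triangles) + 1 > max_triangles)
        if full:
            # Close the current meshlet and start a fresh one.
            meshlets.append((list(local), triangles))
            local, triangles = {}, []
            new = list(dict.fromkeys(tri))
        for v in new:
            local[v] = len(local)
        triangles.append(tuple(local[v] for v in tri))

    if triangles:
        meshlets.append((list(local), triangles))
    return meshlets
```

Notice that the grouping depends only on the order of the index buffer and on two size limits. There is no hierarchy and no notion of visibility in it, which is exactly the difference from Nanite clusters described above.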

Myth two: Nanite is auto-LoD

That was not the last nail; I deceived you. The second explanation for the amazing quality of Nanite models is automatic level of detail. A distinctive feature of Nanite is the absence of LoDs.

Accordingly, the myth goes: Nanite is supposedly some kind of hidden LoD system, where all the work with LoDs is hidden from the developer and automated by the engine. That is, somewhere inside, Unreal Engine itself creates less detailed versions of the models and picks the distances for them, and it displays a more detailed version than necessary, which is why the switch between them is not visible.

Guess where this assumption came from? Yes – GDC 2018 / Nvidia / Asteroids.

The demo shows LoDing, regular LoDing: not something similar to it, but exactly that. Simple software LoD selection that runs on the video card rather than on the processor.

The most ordinary LoDing, not even auto-LoDing; it couldn’t be simpler.
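For reference, this is roughly all that ordinary LoDing amounts to. The Python sketch below is a generic illustration and not the demo’s source code; the function name and the threshold values in the usage note are hypothetical.

```python
def select_lod(distance, thresholds):
    """Pick a LoD index for a whole model from its distance to the camera.

    thresholds is an ascending list of distances; level 0 is the most
    detailed version, and the last level is used beyond every threshold.
    """
    for level, limit in enumerate(thresholds):
        if distance < limit:
            return level
    return len(thresholds)
```

With thresholds of `[10, 50, 200]`, a model 5 units away gets level 0 and a model 1000 units away gets level 3. The entire model switches at once, which is the key property to keep in mind for the comparison with Nanite.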

In this case, the myth was boosted most of all by Unity devotees. In Unity you can buy auto-LoDing as a separate plugin, which means Unity can do this too, which means that the phrase “there are no LoDs in Unreal Engine” is just an advertising gimmick. The devotees went even further in their fantasies and declared that auto-LoD is not popular in Unity itself, so it must have a whole bunch of disadvantages, so in Unreal Engine everyone will turn it off as well, and in general it eats performance, so it is really a minus of Unreal Engine, not a plus. All of this was thrown in on the principle of “I fantasize whatever I want.”

Once the engine was released to the public, it immediately became clear, of course, that there really are no LoDs in any form, and all this Unity-user noise disappeared as quickly as it had appeared. Still, there are Unity-philes who for some reason keep talking about auto-LoDs and “Unity can do that too, it just doesn’t want to.”

Nanite actually has nothing to do with LoDs. Nanite breaks the model into clusters of points, which in turn are used for culling. If we count that as LoDs, then we can just as well count any culling algorithm as LoDing. Tessellation also becomes LoDing, since it has levels of detail too. You can do that, but then it loses all meaning: the term LoD no longer denotes anything specific and turns into an abstraction that means anything, so applying it is pointless. By the same logic one could apply the term anisotropic filtering, which would have even more in common with Nanite, since clusters of different quality are combined to form the final mesh, which is not the case with LoDs.
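The difference is easier to see in code. The Python sketch below is a toy model of per-cluster selection and should not be read as Epic’s actual algorithm: the `Cluster` fields, the error metric, the one-pixel threshold and the demo tree are all assumptions made up for this illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Cluster:
    name: str
    center: tuple
    error: float                      # hypothetical geometric error, world units
    children: list = field(default_factory=list)


def projected_error(cluster, camera, pixels_per_unit):
    """Approximate on-screen size of the cluster's error, in pixels."""
    distance = max(math.dist(cluster.center, camera), 1e-6)
    return cluster.error * pixels_per_unit / distance


def select_clusters(root, camera, pixels_per_unit, max_error_px=1.0):
    """Walk the hierarchy and keep the coarsest clusters that look exact."""
    selected, stack = [], [root]
    while stack:
        cluster = stack.pop()
        good_enough = projected_error(cluster, camera, pixels_per_unit) <= max_error_px
        if good_enough or not cluster.children:
            selected.append(cluster.name)
        else:
            stack.extend(cluster.children)
    return selected


def demo_tree():
    """A tiny two-branch model used only for the example."""
    a = Cluster("A", (-5, 0, 0), 0.01,
                [Cluster("A1", (-5, 0, 0), 0.001), Cluster("A2", (-5, 0, 0), 0.001)])
    b = Cluster("B", (5, 0, 0), 0.01,
                [Cluster("B1", (5, 0, 0), 0.001), Cluster("B2", (5, 0, 0), 0.001)])
    return Cluster("root", (0, 0, 0), 0.1, [a, b])
```

With the camera next to branch B, this toy selection keeps the coarse cluster A for the far side and the fine clusters B1 and B2 for the near side, so one model is drawn from pieces of different quality at the same time. A classic LoD switch cannot do that, because it replaces the whole model.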

Myth three: Nanite is a quality trick

This is the most plausible myth, and it is hard to criticize unless you know for sure. It clings to the fact that Nanite is a culling algorithm, and to claims from the presentations such as “Nanite converts a billion polygons to 5-6 million displayed per frame” and “Nanite can display as many polygons as the eye can see.” Unity fans, together with journalists, immediately dubbed Nanite a loss of quality, almost an analogue of frame reconstruction and upscaling, which is supposedly where the performance comes from. So Nanite is bad and will be turned off for the sake of quality.

Of course, this is not true either. Nanite has a quality setting, and at 100% quality Nanite displays geometry at reference quality. That is, the image built by Nanite is pixel-for-pixel identical to the image drawn by the classic pipeline.

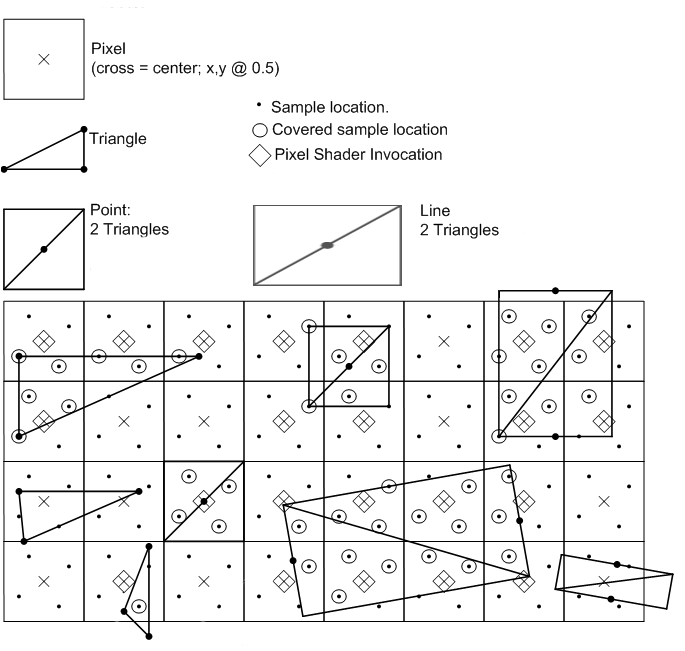

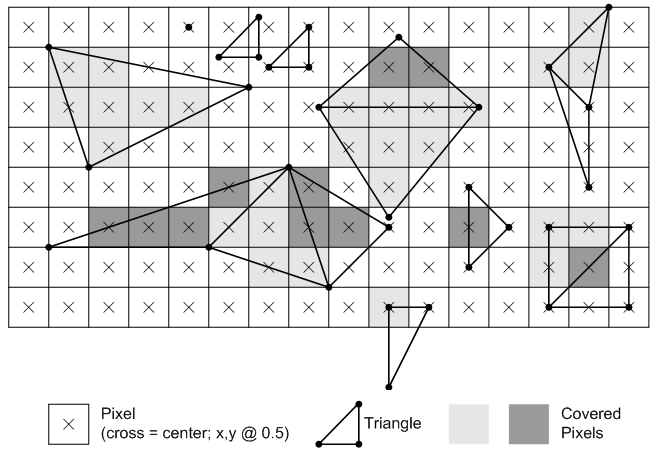

There is a trick here, but the trick belongs to the classic APIs, which already discard polygons during sampling. The classic pipeline has a sample point for each pixel, usually the center of the pixel, which a polygon must cover. If the polygon covers it, the rasterizer produces a pixel for it; if not, the polygon is simply discarded for that pixel.

That is, if you imagine that many polygons of a model fit into one pixel, then of all of them only one will be displayed: the one that covers the center, no matter how many fall into the pixel initially, a million or a billion. Exactly one. Even when the sample point lies exactly on an edge shared by several polygons, there is a separate tie-breaking rule, usually the top-left rule, that determines which single polygon will be drawn.
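The sample-point test itself fits in a few lines. The Python sketch below is a simplified illustration that assumes the sample sits at the pixel center and uses the usual edge-function test; the function names are made up for this example, and the tie-breaking rule for samples lying exactly on an edge is deliberately left out.

```python
def edge(a, b, p):
    """Signed area term: positive if p lies to the left of the edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covers_sample(triangle, pixel_x, pixel_y):
    """True if the triangle strictly covers the center of the given pixel."""
    p = (pixel_x + 0.5, pixel_y + 0.5)
    a, b, c = triangle
    w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
    all_positive = w0 > 0 and w1 > 0 and w2 > 0
    all_negative = w0 < 0 and w1 < 0 and w2 < 0
    return all_positive or all_negative  # accept either winding order


def surviving_triangles(triangles, pixel_x, pixel_y):
    """Triangles that produce a fragment for this pixel at all."""
    return [t for t in triangles if covers_sample(t, pixel_x, pixel_y)]
```

A tiny triangle tucked into a corner of the pixel fails the test and contributes nothing, however detailed the source model is. Among the triangles that do pass, the depth test then leaves a single visible one.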

Myth four: Nanite is the next iteration of technology, and soon everyone will have it

I already touched on this in the first myth, and it runs through the entire article. As I noted, no one showed up for the race. Yet journalists keep prophesying, hopefully, that a new version of some engine, usually a very specific one, is about to be released that will be no worse, or even better, than this Unreal Engine of yours. Everything is thrown in: attempts to pass off photogrammetry and 3D scans as “the same as in Unreal”, attempts to say that the others also show good and beautiful graphics. It is hard to disagree with them; however, Unreal’s lead is simply colossal, and there is a gulf between good graphics and amazing graphics.

As I already noted, all the technologies touted as the future existed long before Nanite came out. Unity is a very advanced game engine, and its latest versions allow almost completely custom rendering. Anyone can plug mesh shaders, or any other technology, into Unity.

In all this time, no one has made anything like Nanite. Nanite is a completely new, separate technology created from the ground up and developed by entire R&D departments. No one has even announced plans to implement anything remotely revolutionary, let alone a competitor to Nanite. Everyone else is making the next iteration; Unreal Engine 5 has launched a revolution.

Small myths

Further “criticism” of Unreal exploits its limitations, usually expressed as “well, Unreal is not a silver bullet.” However, it is easy to see that silver bullets do not exist at all. Moreover, versatility in a technology usually comes at the expense of performance. The choice, then, is yours alone.

Nanite does impose many limitations, but Unreal Engine 5 is under active development and constantly evolving. For example, until recently it was impossible to apply custom attributes to Nanite meshes, which was a huge restriction; then, with version 5.2, they were added in full, and now Nanite can be applied to vegetation.

On a related note, journalists have cultivated a very persistent myth about how resource-hungry Unreal Engine 5 is. This is not true at all. The new technologies are optional. On bare Unreal 5, a game ported from Unreal 4 will run faster. Nanite, too, is a technology that reduces resource consumption: it allows not only high-quality models but also a huge number of models, so enabling Nanite in an Unreal 5 project will increase performance. The only truly hungry technologies are the global illumination ones: ray tracing and its faster analogue, Lumen.

Images of the future

A little about what awaits us. First, Nanite is not yet available on mobile devices. Nanite requires support for virtual resources, also known as tiled resources, which have existed since DirectX 11.2 and are supported by video cards starting from the Nvidia Maxwell 2 generation (900 series). The latest mobile and VR devices support them, so Nanite’s arrival on mobile devices is just a matter of time.

Unreal Engine is developing at a wild pace, crushing the market beneath it. At the moment, almost all news and events indicate that Unreal Engine’s dominance is only growing. Even large studios are now switching from their in-house engines to Unreal Engine; moreover, Unreal Engine has always been the engine most similar to the in-house engines of large companies. So if you are suddenly faced with choosing technologies for the future, whether you are studying or picking an engine for yourself, you should definitely choose Unreal Engine, at least for test projects, so as not to find yourself in limbo when one engine or another suddenly sinks.

Competitors will never release analogues. The most important of them, Unity, has always occupied a completely different niche. All its oddities stem from the fact that Unity is a company living on credit; even in its most popular years it was unprofitable. That is absolutely normal practice, but Unity has never been about graphics technology. Moreover, trying to enter this market would be a plain step off a cliff. They are only just about to start making money in their niche, and they cannot afford huge R&D departments for expansion, even if they really wanted to. Unity still has a strong business model; they buy advertising companies, not technology companies. To compete with Unreal, they would have to stop being Unity altogether. For now, for the next 1-1.5 years, Unity will stay in its niche. But the development of Unreal and the very likely arrival of its latest technologies on mobile phones is the first warning bell of how it all may end, as the company’s own moves attest.
