Leap through time or How to upgrade from Asterisk 11 to 18

At Intersvyaz, we decided to upgrade Asterisk from version 11 to version 18. The story turned out to be interesting and instructive. My name is Gleb, and I am an engineer in the telephony management group of a telecom operator. Here is our experience.

We know from colleagues at other companies that many are in a similar situation: the Asterisk version in use is several years behind the current one. Some are even running version 1.4 or 1.8. Why don't they upgrade? Everyone has their own reasons. In our case, our predecessors did not dare to take this step because of the risk of disrupting a huge infrastructure. We decided to do it.

Why did we decide to update

The telephony infrastructure at Intersvyaz has been built up over many years. From our predecessors we inherited a cluster running Asterisk 11 and numerous FastAGI scripts written in Perl. What could be worse? Only having too many of them.

A real diagram would show about 50 such AGI circles. Even so, we worked successfully this way until 2022, developing and maintaining the scripts and FastAGI services that implement all of our varied business logic.

By 2022, ten years had passed since the release of Asterisk 11. Later versions brought new features and fixed the problems of older releases, and with that our reasons and goals for the upgrade took shape.

Goal #1: get rid of the outdated Asterisk 11 built from source and install the current version from packages, which gives us current features and makes future updates easier.

Goal #2: optimize our infrastructure. This was not part of the original plan; it took shape during the upgrade, as we worked through the difficulties that came up.

Goal #3: adopt ARI to modernize our VoIP development and use new features and modern applications.

I will tell you about all the goals in more detail, because on the way to each of them there were difficulties and nuances.

How we updated Asterisk

We did not want to reduce everything to a routine update. We wanted a tool that could configure a server with Asterisk 18 for our tasks with a single command.

We use a cluster of four Asterisk servers, one of which is on standby. Calls are balanced between them by Kamailio, which made automating the process all the more relevant for us. Updating the servers manually could have taken two or three days for each of the four, and it would also have increased the risk of forgetting something and ending up with servers that are not configured identically.

We chose Ansible as the tool. We refactored the project, removed unused infrastructure elements, and improved what was working poorly. The result is an Ansible role that installs a fresh Asterisk and configures the server in about 20 minutes. In the future, this role can perform any other manipulation on the server: configuring iptables and systemd, updating software, and so on.

The problems we encountered:

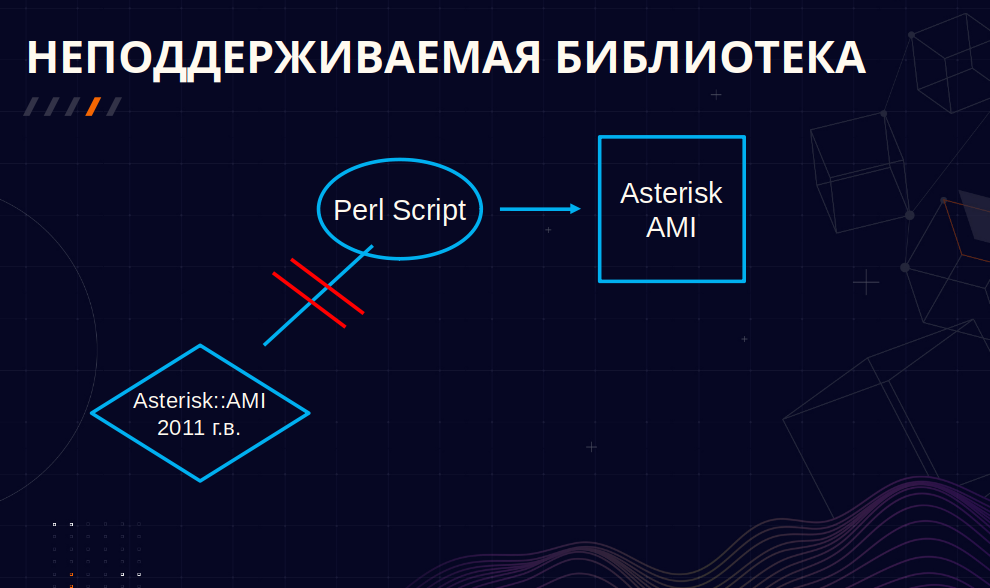

1. Unsupported library for working with AMI.

AMI works like this: whenever something happens in Asterisk, whether the start or end of a call, the setting of a variable, or anything else, it triggers an AMI event, a message that carries the information and parameters needed to process it.

The core of our telephony was written in Perl. We used the Asterisk::AMI library from MetaCPAN, which was released back in 2011. However, with the release of Asterisk 13 the format of AMI messages changed, and this library is no longer maintained.

One option we considered was to implement our own API on the Asterisk servers, for example in Python using Panoramisk. Our scripts would call this API, and it would work with AMI locally.

Another option was to rewrite all scripts against the Asterisk REST Interface (ARI) and work with Asterisk directly. Given the number of scripts to rewrite, this would have slowed the upgrade down considerably, to put it mildly.

In the end, the solution was found with some luck. After a quick search we came across a ready-made solution on GitHub by Zhenya Gostkov, who added handling of the changed messages and "taught" Perl to work with Asterisk 16+. As practice showed, it works with version 18 as well. Problem solved.

2. Some events changed, and as a result our scripts did not accept the new events and did not know how to work with them.

Not only the names have changed, but also the content of the events. Take a look at the most obvious example.

This is the Bridge event, version 11 of Asterisk:

Event: Bridge

Bridgestate: <value>

Bridgetype: <value>

Channel1: <value>

Channel2: <value>

Uniqueid1: <value>

Uniqueid2: <value>

CallerID1: <value>

CallerID2: <value>

And here is what it looks like in version 18:

Event: BridgeEnter

BridgeUniqueid: <value>

BridgeType: <value>

BridgeTechnology: <value>

BridgeCreator: <value>

BridgeName: <value>

BridgeNumChannels: <value>

Channel: <value>

ChannelState: <value>

ChannelStateDesc: <value>

CallerIDNum: <value>

CallerIDName: <value>

ConnectedLineNum: <value>

ConnectedLineName: <value>

Language: <value>

AccountCode: <value>

Context: <value>

Exten: <value>

Priority: <value>

Uniqueid: <value>

Linkedid: <value>

SwapUniqueid: <value>

In addition, the behavior of some events also changed. Previously, the Bridge event fired once for both channels at the same time. Now the BridgeEnter event that replaced it fires twice per call, once for each channel. We had to study all such changed events and rewrite their handling.
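One way to handle the per-channel behavior is to group BridgeEnter events by their BridgeUniqueid until both channels have arrived. The sketch below is only an illustration in Python, not our production Perl code. It assumes the events are already parsed into dictionaries, and the function name and sample channel names are made up for the example.

```python
from collections import defaultdict


def pair_bridge_enters(events):
    """Yield (bridge_id, channel_a, channel_b) once two channels share a bridge."""
    members = defaultdict(list)  # BridgeUniqueid -> channels seen so far
    for event in events:
        if event.get("Event") != "BridgeEnter":
            continue  # only BridgeEnter carries the information we pair on
        bridge_id = event["BridgeUniqueid"]
        members[bridge_id].append(event["Channel"])
        if len(members[bridge_id]) == 2:
            first, second = members[bridge_id]
            yield bridge_id, first, second


# Hypothetical sample: two BridgeEnter events for one call, plus unrelated noise.
events = [
    {"Event": "BridgeEnter", "BridgeUniqueid": "b-1", "Channel": "SIP/100-0001"},
    {"Event": "Hangup", "Channel": "SIP/300-0003"},
    {"Event": "BridgeEnter", "BridgeUniqueid": "b-1", "Channel": "SIP/200-0002"},
]
pairs = list(pair_bridge_enters(events))
```

Here `pairs` holds a single tuple that plays the role of the old two-channel Bridge event. A real handler would also need to clean up the state when channels leave the bridge, which this sketch omits.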

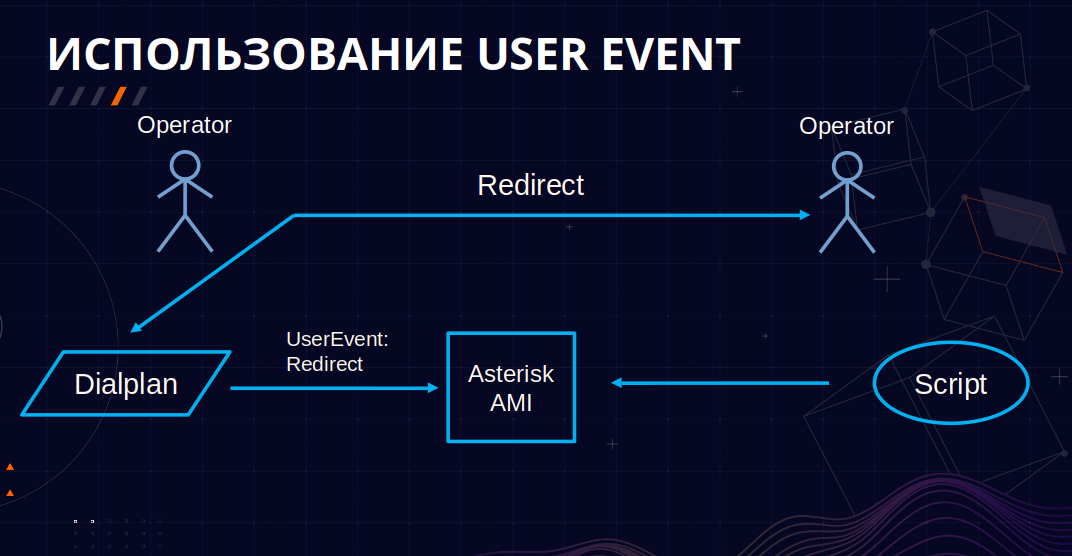

3. The Queue application.

The third problem was that, deep under the hood, we rely on the Queue dialplan application, which also changed considerably between versions 11 and 18. Our logic for transferring a call between call center queues broke: our Asterisk servers no longer understood that the call had left the queue. Apparently, the application used to have a bug that we relied on as a signal that the call was leaving the queue. The bug was probably fixed in a later version, and we lost that signal.

We looked at the application's source code and decided not to modify it. Instead, we implemented the queue-to-queue transfer logic on top of UserEvent, whose event names do not depend on the Asterisk version.

UserEvent is a dialplan application that creates a custom AMI event with the parameters we need, for example the values of current channel variables.

Dialplan example:

[redirect]

exten => 1,1,Verbose(Call transfer)

. . .

. . .

same => n,UserEvent(Redirect,MemberFrom:\ ${MEMBER_FROM}, MemberTo:\ ${MEMBER_TO})

. . .

. . .

How did we use it? Besides the Queue application, our call center is built from dialplan and various scripts, which lets us easily customize any stage of a call. Take a call transfer as an example: when an operator transfers a call, the dialplan raises the corresponding UserEvent, our AMI message listener catches it, and the corresponding script processes it. That script records the statistics of the conversation with the current operator and stops the recording of that conversation.
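The listener side of this flow can be sketched roughly as follows. This is a simplified Python illustration, not our actual script. It assumes the AMI message has already been parsed into a dictionary in which the UserEvent header carries the event name and MemberFrom and MemberTo carry the values set in the dialplan. The handler and its stats list are hypothetical stand-ins for the real statistics and recording logic.

```python
def handle_redirect(event, stats):
    """Hypothetical handler: note which operator handed the call to whom."""
    stats.append((event["MemberFrom"], event["MemberTo"]))


# Map of UserEvent names to handlers; only "Redirect" comes from the dialplan above.
HANDLERS = {"Redirect": handle_redirect}


def dispatch(event, stats):
    """Route a parsed AMI event to its UserEvent handler; return True if handled."""
    if event.get("Event") != "UserEvent":
        return False
    handler = HANDLERS.get(event.get("UserEvent"))
    if handler is None:
        return False  # a UserEvent we do not know about
    handler(event, stats)
    return True


stats = []
handled = dispatch(
    {"Event": "UserEvent", "UserEvent": "Redirect",
     "MemberFrom": "SIP/101", "MemberTo": "SIP/102"},
    stats,
)
ignored = dispatch({"Event": "Hangup", "Channel": "SIP/101-0001"}, stats)
```

Because the event name is chosen in the dialplan, a table like `HANDLERS` stays the same no matter which Asterisk version emits the event.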

How We Optimized Infrastructure

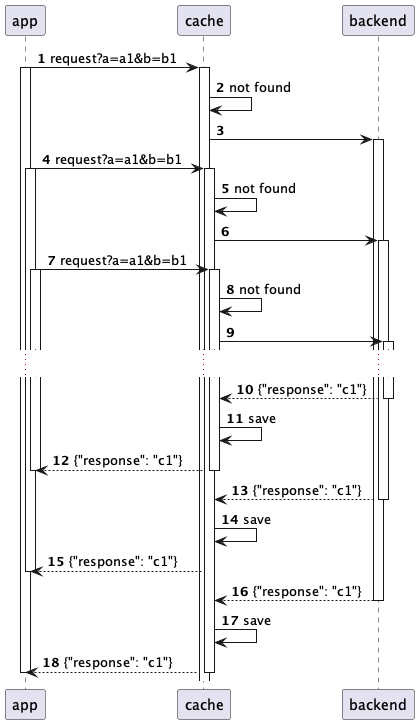

During the upgrade, we discovered that our infrastructure needed optimization and some rework. In the original implementation, the scripts connected to AMI directly, which, as practice showed, is unstable under high load. With a large number of calls, or because of network problems, the AMI connection breaks and all call data is lost. We lose statistics, call processing is disrupted, and one operator ends up with double or triple calls.

To solve this problem, we started writing the events from the Asterisk servers into a cache so that they are not lost, and the scripts in turn read the events from this cache for further processing.

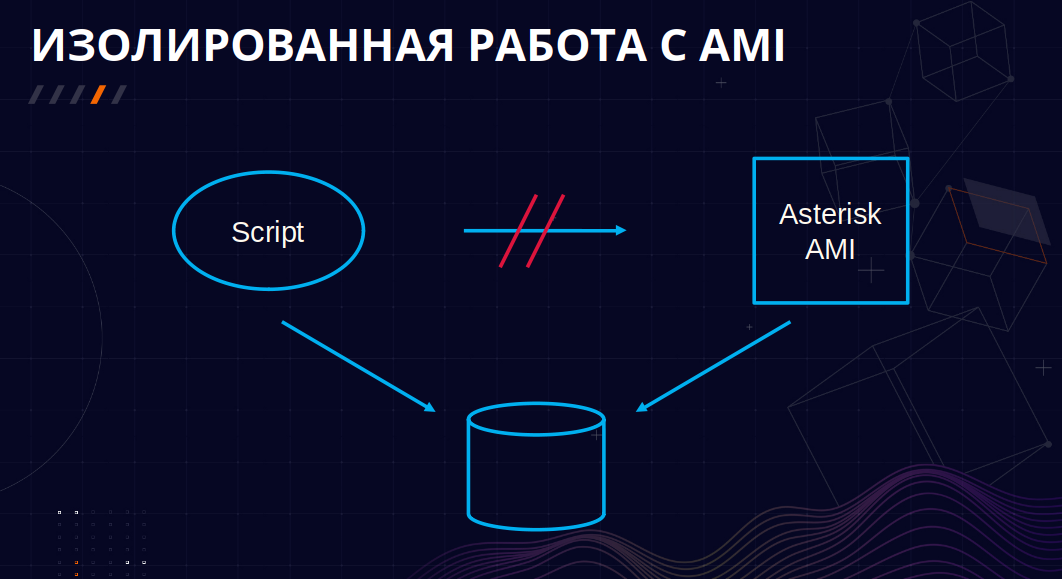

We moved the work of listening to AMI events into a separate script that reads AMI messages and caches them in Redis. The scripts that process these events simply take them from the cache and work with them as regular JSON objects.
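The conversion step of such a listener can be sketched as follows. This is a minimal Python illustration under our own assumptions, not the real script: it treats one AMI message as a block of "Key: Value" lines and passes the resulting JSON string to a `push` callable. A plain list stands in for Redis so that the example runs on its own.

```python
import json


def parse_ami_message(raw):
    """Turn one raw AMI message ("Key: Value" lines) into a dict."""
    event = {}
    for line in raw.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # skip blank or malformed lines
            event[key.strip()] = value.strip()
    return event


def cache_event(raw, push):
    """Serialize a parsed event to JSON and hand it to `push`."""
    payload = json.dumps(parse_ami_message(raw), sort_keys=True)
    push(payload)
    return payload


queue = []  # stand-in for a Redis list
raw = "Event: Hangup\r\nChannel: SIP/100-0001\r\nCause: 16\r\n\r\n"
cache_event(raw, queue.append)
event = json.loads(queue[0])
```

With the redis-py client, `push` could be something like `lambda p: client.rpush("ami_events", p)`, where the key name is made up for this example. Note that this simple parser keeps only the last value when a header repeats within one message.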

What other benefits have we gained from this scheme?

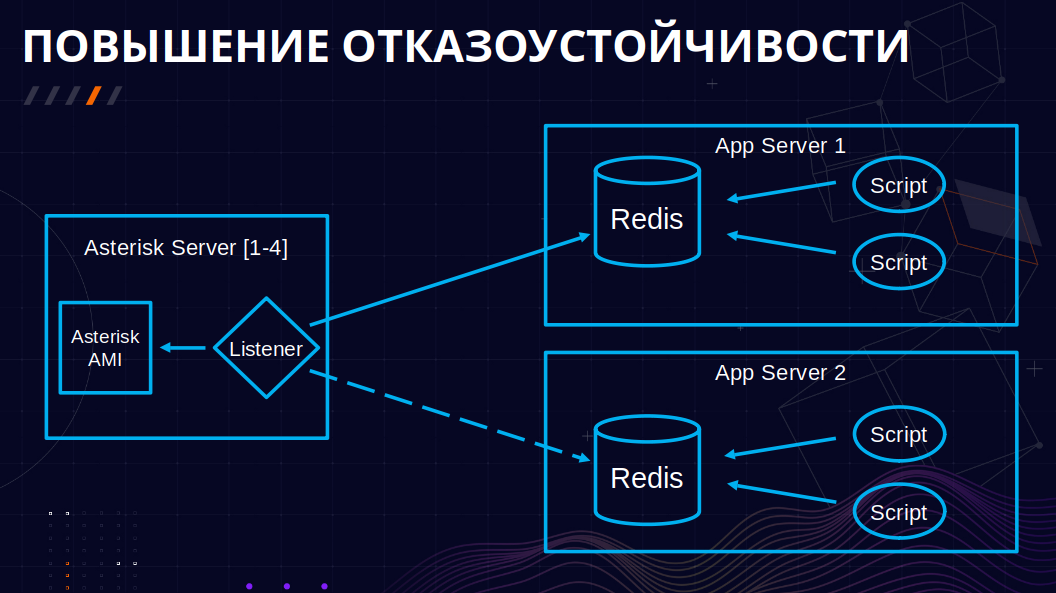

1. We have achieved an increase in fault tolerance.

We have a cluster of four Asterisk servers, plus the servers that run our scripts. An event listener script runs locally on each Asterisk server, reads the messages, and tries to put them into Redis, which runs on the application server. The applications listen to this Redis queue of events and process them. So what happens if Redis is unavailable for some reason, such as a network problem? The listener detects this and starts sending messages to another, identical server that hosts the same applications.

Thus, the call data will not be lost even if one of the servers fails.
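The failover idea can be sketched like this. It is a hedged Python illustration, not our listener: the backends are plain callables, the function names are hypothetical, and the built-in ConnectionError stands in for whatever exception a real Redis client raises when a server is unreachable.

```python
def push_with_failover(payload, backends):
    """Try each backend in order; return the index of the one that accepted."""
    last_error = None
    for index, push in enumerate(backends):
        try:
            push(payload)
            return index
        except ConnectionError as error:  # backend unreachable, try the next one
            last_error = error
    raise ConnectionError("no backend accepted the event") from last_error


def unavailable(payload):
    """Simulates a Redis instance that cannot be reached."""
    raise ConnectionError("primary is down")


standby = []  # stand-in for Redis on the second application server
used = push_with_failover('{"Event": "Hangup"}', [unavailable, standby.append])
```

In this run the first backend fails, so the event lands in `standby` and `used` is the index of the second backend. A real listener would catch the client library's own connection exception, and would probably also decide when to switch back to the primary server.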

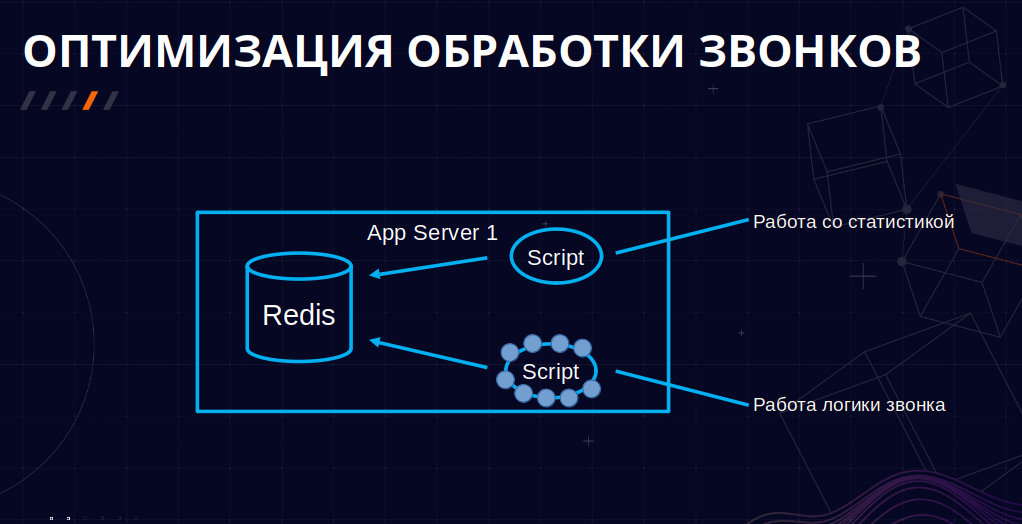

2. We have optimized the processing of our calls.

Let's look at one part of this scheme separately:

These two scripts run in parallel: one handles the call itself, the other handles statistics. We found that they work with the same AMI events, so we decided to retire the old call-flow script and move its logic into the newer statistics script. The old script spawned a huge number of forks and had become hard to follow. As a result, we ended up with this much more concise scheme.

3. We organized event filtering.

In the course of further optimization, we found that we do not need all the events Asterisk generates, only a small part of them: about 2.5%.

For example, there is the NewExten event, which fires when a call moves from one extension to another. These events made up almost half of the entire event flow, yet we did not use them.

Another example is VarSet events, which fire whenever a variable is set on a call. Our scripts only need the VarSet events for 10-15 variables.

How can this be optimized? Not everyone knows it, but Asterisk can filter AMI messages through the manager.conf configuration file, where we can list only the messages we need. That is what we did.

For example, this is how we listed only the events we need, leaving out NewExten and others:

eventfilter=Event: AgentConnect

eventfilter=Event: AgentComplete

eventfilter=Event: AgentRingNoAnswer

eventfilter=Event: Bridge

eventfilter=Event: BridgeEnter

eventfilter=Event: DialBegin

eventfilter=Event: Hangup

eventfilter=Event: Hold

eventfilter=Event: MusicOnHoldStart

eventfilter=Event: MusicOnHoldStop

eventfilter=Event: NewCallerid

eventfilter=Event: Newchannel

eventfilter=Event: Unhold

eventfilter=Event: UserEvent

eventfilter=Event: QueueCallerAbandon

Following the same pattern, we can list only the VarSet variables we need:

eventfilter=Variable: QUEUE_NAME

eventfilter=Variable: BRIDGEPEER

eventfilter=Variable: ALARM_QUEUE_ID

eventfilter=Variable: LOCAL_CALL_TO

eventfilter=Variable: AUTODIAL_NUM

eventfilter=Variable: SUBSCRIBER_DIAL

eventfilter=Variable: SUBSCRIBER_CALLERID

eventfilter=Variable: TICKET

eventfilter=Variable: DIALSTATUS

eventfilter=Variable: AUTO_MONITOR

eventfilter=Variable: REVERSE_DIAL

eventfilter=Variable: REALNUMBER

eventfilter=Variable: DAMAGE_EXISTS

eventfilter=Variable: PLANNED_KTV

eventfilter=Variable: DEBT_EXISTS

If some event names change in the future or new ones are added, we will simply adjust the filtering and implement their processing. The results of this optimization are shown below. The data comes from our monitoring of the event queue in Redis.

Previously we had 20-50 events in the queue on average, with peaks of up to 200 events. Now the queue holds 1-2 events on average and peaks at 4.
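To reason about which events survive such a whitelist, the filtering can be modeled in a few lines of Python. This is only a model of the behavior as we understand it, not Asterisk code. It assumes that each eventfilter line is a regular expression searched anywhere in the event text, and that an event is kept if at least one whitelist pattern matches. The sample events are made up.

```python
import re

# A few of the whitelist patterns from the configuration above.
FILTERS = [re.compile(pattern) for pattern in (
    "Event: Hangup",
    "Event: UserEvent",
    "Variable: QUEUE_NAME",
)]


def passes_whitelist(raw_event):
    """Keep an event if any whitelist pattern matches somewhere in its text."""
    return any(f.search(raw_event) for f in FILTERS)


hangup = "Event: Hangup\r\nChannel: SIP/100-0001\r\n"
newexten = "Event: Newexten\r\nChannel: SIP/100-0001\r\nExtension: 1\r\n"
varset_kept = "Event: VarSet\r\nVariable: QUEUE_NAME\r\nValue: support\r\n"
varset_dropped = "Event: VarSet\r\nVariable: TMP\r\nValue: 1\r\n"
kept = [passes_whitelist(e) for e in (hangup, newexten, varset_kept, varset_dropped)]
```

Under these assumptions, the Hangup event and the VarSet for QUEUE_NAME pass, while Newexten and the other VarSet are dropped. One consequence of unanchored matching worth checking on a real server: a pattern such as "Event: Bridge" would also match other events whose names start with "Bridge".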

How we implemented ARI

We have started using ARI in our infrastructure. One example is ARI Proxy, a Go module that lets you scale ARI applications horizontally and makes it easier to work with external services.

Previously, we called external APIs directly from the dialplan through the CURL function. Because of this, we had to parse the API response differently depending on the request and spend dialplan lines on validation and processing. It was inconvenient.

Now we use an application built on ARI Proxy, which we enter with a single dialplan line through Stasis, and we can process requests and responses concisely and uniformly.

At the same time, we gained the ability to send different types of requests, with different headers and a custom body.

The second example: we started collecting jitter data for channels so that we can spot possible voice problems and act on them quickly. For this we use a Go application built on CyCoreSystems/ARI.

We are also gradually moving away from AMI. We write all new applications on ARI, and we are working through the old ones and rewriting them.

Conclusions

Summing up, the upgrade brought us the following results:

We developed a tool for setting up servers with the current version of Asterisk from packages: our Ansible role.

We created a fault-tolerant system for unified and extensible call processing by optimizing and isolating work with AMI.

We started implementing ARI, which, in addition to the convenience in our work, allows us to lower the entry threshold for new developers who will interact with Asterisk using the REST interface they are used to.

Things to watch out for when upgrading your Asterisk version:

compatibility of the libraries you use in applications;

nuances when working with AMI, for example, changing event names.

Happy updates! Hope our experience helps you.

Thank you for your attention!
