Is it possible to teach a chatbot to always tell the truth? Part 2

As users, we want to simply load all the documents we need into RAG and use them without additional configuration. Most traditional RAG approaches also use the retrieved documents “as is”, without checking whether they are relevant. Moreover, current methods mostly treat complete documents as reference knowledge, both during retrieval and during use. But much of the text in these retrieved documents is not needed for generation and only makes it harder to find the relevant information. And if the retriever returns poor-quality results, there is a high probability of a hallucination in the answer.

To solve the problem, you can move in several directions.

1. Let the model itself decide whether to use the RAG results

This approach is the basis of the FLARE (Forward-Looking Active REtrieval augmented generation) methodology. It combines retrieval of relevant information from external data sources with generative models and seeks to mitigate hallucinations by integrating externally verified information into the generation process.

FLARE's task is to answer two questions:

When to retrieve? According to the authors [1], an external database should only be accessed when the LLM lacks the necessary knowledge, which shows up as the LLM generating low-probability tokens.

What to retrieve? FLARE does not just retrieve content for the text generated so far; it also predicts the upcoming sentence and retrieves data for it in advance.

There are two types of FLARE: Instruct and Direct.

FLARE Instruct. This mode prompts the model to generate specific search queries while it writes. The model pauses generation, retrieves the necessary data, and then resumes, integrating the new information.

FLARE Direct. When the model encounters low-confidence tokens, it uses the generated content itself as the retrieval query.
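The FLARE Direct trigger can be illustrated with a minimal sketch. It assumes we already have each token of a tentative sentence paired with its probability; the function name `low_confidence_query`, the threshold of 0.4, and the sample probabilities are made up for illustration. Dropping the low-probability tokens from the query is one possible way to form it, not necessarily what any particular library does.

```python
from typing import List, Optional, Tuple


def low_confidence_query(
    tokens: List[Tuple[str, float]],
    threshold: float = 0.4,  # hypothetical confidence threshold
) -> Optional[str]:
    """Return a retrieval query if any token is low-confidence, else None.

    `tokens` pairs each generated token with its probability. The query is
    the tentative sentence with the low-probability tokens dropped.
    """
    if all(prob >= threshold for _, prob in tokens):
        return None  # the model is confident: keep the sentence, skip retrieval
    confident = [tok for tok, prob in tokens if prob >= threshold]
    return " ".join(confident)


# Illustrative (made-up) token probabilities for one tentative sentence.
sentence = [("The", 0.95), ("author", 0.9), ("founded", 0.85),
            ("Viaweb", 0.2), ("in", 0.9), ("1995", 0.15)]
print(low_confidence_query(sentence))  # The author founded in
```

When the function returns `None`, the sentence is accepted as is; otherwise the returned string is sent to the retriever and the sentence is regenerated with the retrieved context.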

An example of working with FLARE can be found in the LlamaIndex documentation.

“Can you tell me about the author’s journey in the startup world?” – asks the author of the example, using the biography of Paul Graham as a dataset.

And this is the result the method produces:

Current response: Lookahead response: The author began their journey in the startup world by [Search(What did the author do in the startup world?)]

Here we see that the method returns the beginning of the phrase and then immediately asks itself a new search question: what did the author do in the startup world?

At the next step, the answer to this question is found in the document:

Updated lookahead response: The author began their journey in the startup world by founding Y Combinator (YC), a startup accelerator that provided funding and support to startups in batches. They aimed to fix problems in the venture capital industry by making a larger number of smaller investments, funding younger and more technical founders, and letting founders remain CEOs. The author also wrote Hacker News, a news aggregator originally intended for startup founders, in a new version of the Arc programming language.

Next, the model takes the second step, starting from the answer just built and asking a new question: what famous startups has the author worked with?

Current response: The author began their journey in the startup world by co-founding Y Combinator (YC), a startup accelerator that provided funding and support to startups in batches. They aimed to fix problems in the venture capital industry by making a larger number of smaller investments, funding younger and more technical founders, and letting founders remain CEOs. The author also wrote Hacker News, a news aggregator originally intended for startup founders, in a new version of the Arc programming language.

Lookahead response: Since then, the author has been mentoring and advising numerous startups, helping them grow and succeed in their industries. [Search(What well-known startups has the author worked with?)]

Updated lookahead response: Since then, the author has been mentoring and advising numerous startups, helping them grow and succeed in their respective industries. Well-known startups the author has worked with include Reddit, Justin Kan and Emmett Shear (who went on to found Twitch), Aaron Swartz (who helped write the RSS specification), and Sam Altman (who later became the second president of YC).

Current response: The author began their journey in the startup world by co-founding Y Combinator (YC), a startup accelerator that provided funding and support to startups in batches. They aimed to fix problems in the venture capital industry by making a larger number of smaller investments, funding younger and more technical founders, and letting founders remain CEOs. The author also wrote Hacker News, a news aggregator originally intended for startup founders, in a new version of the Arc programming language. Since then, the author has been mentoring and advising numerous startups, helping them grow and succeed in their industries. Well-known startups the author has worked with include Reddit, Justin Kan and Emmett Shear (who went on to found Twitch), Aaron Swartz (who helped write the RSS specification), and Sam Altman (who later became the second president of YC).

Lookahead response: done

And finally, the response returned to the user:

The author began their journey in the startup world by co-founding Y Combinator (YC), a startup accelerator that provided funding and support to startups in batches. They aimed to fix problems in the venture capital industry by making a larger number of smaller investments, funding younger and more technical founders, and letting founders remain CEOs. The author also wrote Hacker News, a news aggregator originally intended for startup founders, in a new version of the Arc programming language. Since then, the author has been mentoring and advising numerous startups, helping them grow and succeed in their industries. Well-known startups the author has worked with include Reddit, Justin Kan and Emmett Shear (who went on to found Twitch), Aaron Swartz (who helped write the RSS specification), and Sam Altman (who later became the second president of YC).

The clear benefit of the FLARE method is its ability to collect material from several unrelated parts of a document, none of which would individually score highly enough to be retrieved and shown to the user. The bottleneck of the method remains the lack of user control over the reasoning process.

2. Find a way to distinguish correct answers from incorrect ones

Corrective RAG (CRAG)

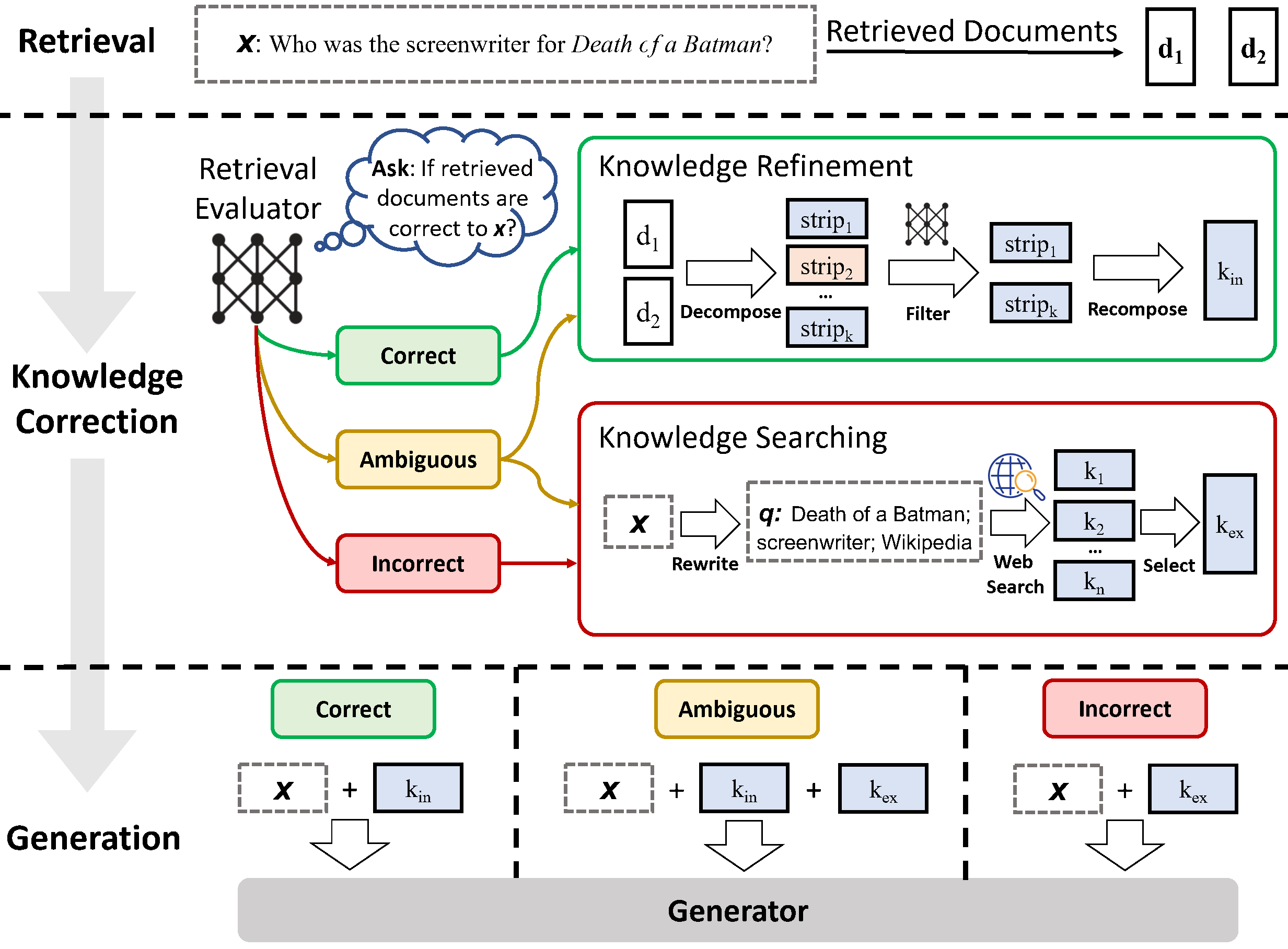

CRAG consists of three main components:

Generative model: responsible for creating a preliminary generation sequence in an autoregressive manner.

Retrieval model: a retriever that selects relevant passages from a knowledge source based on the preliminary generation and the context.

Orchestrator: controls the iteration between the generator and the retriever, ranks generation candidates, and determines the final output sequence. The orchestrator is the glue that binds the retriever and generator together in CRAG. It maintains the state of the incomplete text generated so far, requests candidates from the generator to extend that text, retrieves knowledge using the updated context, evaluates the candidates in terms of both textual probability and factual alignment, and finally selects the top candidate to add to the output at each generation step. [2]

But the most important component of CRAG is the retrieval evaluator. It quantifies a degree of confidence for each retrieved document, and based on it one of three knowledge retrieval actions (Correct, Incorrect, Ambiguous) is triggered.

When the search is successful

The search is considered successful if the confidence score of at least one retrieved document exceeds the upper threshold. This means that the answer is present in the results, but it is inevitably noisy with unnecessary context, so a knowledge refinement step is then applied to extract the most important pieces of knowledge from the document. Each retrieved document is segmented using heuristic rules, then the retrieval evaluator calculates a relevance score for each segment, and based on these scores irrelevant segments are filtered out and the relevant ones are sorted.
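The refinement step described above can be sketched roughly as follows. This is a toy illustration, not the authors' implementation: sentence splitting stands in for the heuristic segmentation, and the hypothetical `word_overlap` function stands in for the relevance scorer, which in a real system would be a trained model. The `min_score` cutoff of 0.3 is also an assumption.

```python
import re
from typing import Callable, List


def refine(document: str, query: str,
           score: Callable[[str, str], float],
           min_score: float = 0.3) -> List[str]:
    """Split a document into strips, drop irrelevant ones, sort the rest."""
    # Heuristic segmentation: one strip per sentence.
    strips = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    scored = [(score(query, s), s) for s in strips]
    kept = [(sc, s) for sc, s in scored if sc >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)  # most relevant first
    return [s for _, s in kept]


def word_overlap(query: str, strip: str) -> float:
    """Toy relevance score: share of query words that occur in the strip."""
    q = set(re.findall(r"\w+", query.lower()))
    s = set(re.findall(r"\w+", strip.lower()))
    return len(q & s) / len(q) if q else 0.0


doc = ("Y Combinator is a startup accelerator. "
       "The weather was nice that year. "
       "Hacker News is a news aggregator for startup founders.")
refined = refine(doc, "startup accelerator", word_overlap)
print(refined)
```

In this example the sentence about the weather is filtered out, and the two remaining strips are ordered by how many query words they share.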

When the search is unsuccessful

The search is considered unsuccessful if the confidence scores of all retrieved documents are below the lower threshold. This means that there are no relevant documents in the knowledge base, so we need to look for new sources of knowledge, such as the Internet. This corrective action helps overcome the vexing problem of having no reliable knowledge to draw on.

Uncertainty

If the retrieval evaluator is unsure of its judgment, the methods for processing successful and unsuccessful searches are combined to complement each other. Such a moderate, soft strategy makes the system more reliable and resilient and gives it a more adaptable structure.
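The three cases above come down to comparing document confidence scores with two thresholds. Below is a minimal sketch of that decision, labeling the cases as correct, incorrect, and ambiguous; the threshold values, the function names, and the source labels are illustrative assumptions rather than values from the paper.

```python
from typing import List


def choose_action(scores: List[float],
                  upper: float = 0.7,   # hypothetical upper threshold
                  lower: float = 0.3) -> str:  # hypothetical lower threshold
    """Map per-document confidence scores to one of three actions."""
    if not scores:
        return "incorrect"  # nothing was retrieved at all
    if max(scores) > upper:
        return "correct"    # at least one document is above the upper threshold
    if max(scores) < lower:
        return "incorrect"  # every document is below the lower threshold
    return "ambiguous"      # the evaluator is unsure


def knowledge_sources(action: str) -> List[str]:
    """Which knowledge each action feeds to the generator."""
    return {
        "correct": ["refined_documents"],
        "incorrect": ["web_search"],
        "ambiguous": ["refined_documents", "web_search"],
    }[action]


print(choose_action([0.9, 0.1]))   # correct
print(choose_action([0.1, 0.2]))   # incorrect
print(choose_action([0.5, 0.1]))   # ambiguous
```

The ambiguous branch simply combines both sources, which is what makes the strategy "soft": a borderline score never forces an all-or-nothing choice.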

CRAG has clear advantages:

It improves the consistency of facts.

It significantly reduces the number of hallucinations: as the researchers describe in the article, in 84% of cases the results contained reliable facts.

The modularity of the method lets you flexibly plug in any retriever, generator, and other component models.

There are two bottlenecks:

The multi-step strategy and the need to look for information in external resources make it significantly slower than a simple RAG.

Coverage of knowledge sources is critical to search quality.

An example implementation of the method from the authors can be found here

An implementation of the method in LlamaIndex is here

3. Eliminate unnecessary information by choosing the size of the embedded chunks

This approach requires a middle ground between cutting out unnecessary information and losing valuable content. There is no one-size-fits-all solution. What works for one knowledge base may not work for another. Therefore, there is a whole family of approaches to how to choose the right context length. An alternative is the RAPTOR tree structure.

The developers of the Pinecone vector database have offered their strategies for choosing the length of the embedded chunks [3]. I'll give a few of their considerations here:

A shorter query, such as a single sentence or phrase, will focus on specific details and may be better suited to matching sentence-level embeddings. A longer query that spans more than one sentence or paragraph may be more suitable for embedding at the paragraph or document level since it is likely to look for broader context or topics.

The index can also be heterogeneous and contain embeddings for chunks of varying sizes. This can create problems in terms of the relevance of query results, but can also have some positive consequences. On the one hand, the relevance of a query result may fluctuate due to inconsistencies between the semantic representations of long and short content. On the other hand, a heterogeneous index can potentially capture a wider range of context and information because different chunk sizes represent different levels of detail in the text. This will allow you to process different types of requests more flexibly.

To select the correct sizes, it is recommended to first answer the following questions:

What is the nature of the content being indexed?

Do you work with long documents, such as articles or books, or shorter content, such as tweets or instant messages?

What embedding model do you use and what fragment sizes does it work optimally for?

What are your expectations regarding the length and complexity of user queries?

Will they be short and to the point or long and complex? This may also influence how you decide to chunk your content to ensure a closer correlation between the embedded query and the embedded chunks.

How will the results be used in your specific application?

For example, will they be used for semantic search, question answering, summarization, or other purposes? If your results need to be passed to another LLM with a token limit, you will have to take this into account and limit the size of the chunks depending on the number of chunks you want to fit into the request to the LLM.
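The token-limit consideration from the last question is simple arithmetic, sketched below. All numbers and function names are illustrative assumptions, and whitespace-separated words are used as a crude stand-in for real tokens; a real system would count tokens with the tokenizer of its model.

```python
from typing import List


def max_chunk_tokens(context_limit: int, prompt_tokens: int,
                     answer_tokens: int, num_chunks: int) -> int:
    """Largest chunk size that lets `num_chunks` chunks fit into one request."""
    available = context_limit - prompt_tokens - answer_tokens
    if available <= 0 or num_chunks <= 0:
        raise ValueError("no room left for retrieved chunks")
    return available // num_chunks


def chunk_by_tokens(text: str, chunk_tokens: int) -> List[str]:
    """Split text into consecutive chunks of at most `chunk_tokens` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_tokens])
            for i in range(0, len(words), chunk_tokens)]


# A 4096-token context, 300 tokens of instructions, 500 reserved for the
# answer, and 5 retrieved chunks per request.
print(max_chunk_tokens(4096, 300, 500, 5))  # 659
print(chunk_by_tokens("a b c d e", 2))      # ['a b', 'c d', 'e']
```

The resulting number is only an upper bound; the considerations above about query length and content type may still argue for much smaller chunks.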

For independent experiments, I recommend a demo compiled from arXiv documents, which shows how search results change depending on the chosen chunk length.

RAPTOR

The RAPTOR architecture consists of three main components: the tree building process, search methods, and a query-response system.

The process of building a RAPTOR tree begins by segmenting the search corpus into short, contiguous texts of approximately 100 tokens each. These pieces of text are then embedded using SBERT, forming the leaf nodes of the tree structure [4].

To group similar text fragments together, RAPTOR uses a clustering algorithm based on Gaussian mixture models (GMM). GMMs assume that data points are generated from a mixture of multiple Gaussian distributions, allowing for soft clustering in which nodes can belong to multiple clusters without requiring a fixed number of clusters. To mitigate the problems associated with the high dimensionality of vector embeddings, RAPTOR uses uniform manifold approximation and projection (UMAP) to reduce dimensionality. Each cluster is then summarized by a language model, and the summaries are embedded and clustered again, so the tree grows upward level by level.

To search for information, standard tree algorithms are used, such as depth-first and breadth-first traversal.
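A level-by-level search over such a tree might look like the sketch below. It is a simplified illustration rather than the authors' code: the `Node` class, the two-dimensional embeddings, and the node texts are all made up, and a real tree would hold SBERT vectors for the leaves and for the higher-level nodes.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    text: str
    embedding: List[float]
    children: List["Node"] = field(default_factory=list)


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def traverse(roots: List[Node], query: List[float], top_k: int = 1) -> List[str]:
    """At each level keep the top_k nodes closest to the query,
    then continue the search among their children."""
    selected: List[Node] = []
    level = roots
    while level:
        best = sorted(level, key=lambda n: cosine(n.embedding, query),
                      reverse=True)[:top_k]
        selected.extend(best)
        level = [child for node in best for child in node.children]
    return [n.text for n in selected]


# Toy two-level tree with hand-made 2-D embeddings.
funding = Node("Upper level: startup funding", [0.9, 0.1], [
    Node("YC funds startups in batches", [1.0, 0.0]),
    Node("YC lets founders remain CEOs", [0.6, 0.8]),
])
coding = Node("Upper level: programming", [0.1, 0.9], [
    Node("Hacker News is written in Arc", [0.0, 1.0]),
])
result = traverse([funding, coding], query=[1.0, 0.2])
print(result)
```

The returned list contains one node per level, from the most abstract to the most specific, so the generator receives both the broad context and the exact leaf.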

RAPTOR has several strengths:

Hierarchical structure allowing for different levels of abstraction

Scalability

Interpretability: the ability to establish very precisely where in the source the context was extracted from, which can be of great importance, for example, in legal documents.

The bottleneck of all methods with multiple levels of vectors is the need to build several times more vectors than in a regular RAG.

An example implementation of the method from the authors is here

An implementation in LlamaIndex is here

4. Search not among the answers, but among the questions

Ask an LLM to generate a question for each piece of text and build embeddings on these questions, so that the search runs not among the answers but among the questions; then follow the found question back to the original piece of text. This approach improves search quality because the semantic similarity between the query and a hypothetical question is higher than between the query and the actual snippet. There is also an approach with the reverse logic, called HyDE [5]: we ask an LLM to generate a hypothetical answer to the query and then use its embedding along with the query embedding to improve search quality.

This method is convenient in cases such as responding to user requests, because the wording of the answers does not always contain verbatim what the user asked.

An implementation in LlamaIndex is here
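The idea of searching among questions can be shown with a toy sketch. The questions below are hard-coded stand-ins for what an LLM would generate for each chunk, and the bag-of-words `embed` function is a deliberately crude substitute for a real embedding model; both are assumptions made for illustration only.

```python
import math
import re
from collections import Counter
from typing import Dict


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Hypothetical questions (standing in for LLM output) mapped to source chunks.
question_to_chunk: Dict[str, str] = {
    "How do I reset my password?":
        "Credentials can be restored via the account recovery form.",
    "What are the delivery times?":
        "Orders ship within three business days.",
}


def search(query: str) -> str:
    """Find the closest stored question and return the chunk behind it."""
    q = embed(query)
    best = max(question_to_chunk,
               key=lambda question: cosine(q, embed(question)))
    return question_to_chunk[best]


print(search("how can I reset the password"))
```

Note that the returned chunk shares no words with the user's query; the match succeeds only because the comparison is made against the question, which is exactly the situation the method targets.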

Conclusion

RAG is actively developing and improving. In March of this year alone, more than a dozen publications appeared on arXiv with new approaches to increasing accuracy. Graph knowledge bases, which are no less promising, remain outside the scope of this article and will definitely become one of the next topics. But we can already say with confidence that, although there is no single universal solution for getting rid of hallucinations, the abundance of available methods makes it possible to find the right one for most everyday tasks, so that the benefits of communicating with a chatbot significantly outweigh the remaining hallucinations.

Sources:

[1] – Active Retrieval Augmented Generation

[2] – Corrective Retrieval Augmented Generation

[3] – Chunking Strategies for LLM Applications

[4] – RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval

[5] – Precise Zero-Shot Dense Retrieval without Relevance Labels