In search of the most powerful video card! Testing A100 and A6000 Ada on a large language model

Large Language Models (LLMs) have revolutionized the world of ML, and more and more companies are looking to benefit from them in one way or another. For example, at Selectel we are evaluating whether it makes sense to deploy a private LLM to help support staff answer customer questions. We decided to combine this task with testing new hardware: Ada video cards with 48 GB of memory. The A100 with 40 GB was chosen as its opponent.

Let’s say right away that it is almost impossible to properly train an LLM on a single GPU, but the task works well as a performance test. Below we describe how we test drove the two GPUs and what conclusions we reached.

Use navigation if you don't want to read the entire text:

→ Why can't you just use the most powerful GPUs?

→ Why we love A100 and A6000 Ada

→ Test – two GPUs solve the same problems under the same conditions

→ Test results

→ Conclusion

Why can't you just use the most powerful GPUs?

Based only on the characteristics of video cards declared by the manufacturer, it is easy to fall into the trap of thinking that the more memory and CUDA cores a card has, as well as the wider the bus, the better. On the one hand, it is obvious that more complex tasks require more memory. In addition to GPU RAM, cores also affect the speed and complexity of calculations. On the other hand, buying or renting a card with top specifications for all occasions is not the best idea. And that's why…

It is expensive

To train large models and work with massive datasets, you need really expensive video cards. The price of the chosen configuration often makes you wonder: a little less memory and cores will slow things down, but will this slowdown be so critical that you need to pay millions more every month? Besides, a few extra gigabytes may never be useful.

Your resources will be idle

You purchased one or more video cards or rented a ready-made server with a GPU. Of course, you immediately loaded it to 100% with ML model training. But the GPU is unlikely to work 24/7/365, even if complex tasks arrive in a steady stream.

Most likely, the picture will be like this. The data scientist took the entire resource for himself, but used the GPU, say, four hours a day. The rest of the time he trained the model on the CPU, drank coffee or solved other problems. To ensure that paid resources do not disappear during this time, they should be given to other specialists. And here we gradually approach the next point.

We'll have to take into account the nuances of GPU sharing

GPU sharing is a great move when you need to solve several problems at the same time, none of which requires all available resources. We take the card, divide it into several isolated pieces, each with its own memory, cores, cache, and so on, and give one to each data scientist for their task. But, of course, not everything is so simple.

Firstly, each sharing method has its pros and cons. Secondly, the same technology, for example MIG, can divide the A100 into a maximum of seven logical blocks, and the A30 into a maximum of four.

To summarize: if you are not ready for radical measures, for example, operating several dozen A100 or H100 connected to the motherboard via NVSwitch, the issue of choosing hardware for ML tasks will become very difficult. At the very least, the technical characteristics of the hardware alone are not enough to make a decision, since it is important to pay attention to the additional capabilities of the GPU in terms of sharing and monitoring operation. But going the empirical route, going through all possible options, is long and expensive.

Why we love A100 and A6000 Ada

Until recently, we would have chosen the already proven A100 without hesitation. This card has a GPU with 6,912 CUDA cores, 40 GB of video memory, support for Tensor Cores and virtualization technologies. An excellent solution for cloud computing, rendering, neural network training and simulation. All you have to do is follow the prompts in PyTorch, focus on the free amount of memory and enjoy. In addition, two A100s can be combined via NVLink to create a real “server monster”.

However, we recently got access to a new video card, the A6000 Ada. A quick glance at its characteristics suggests an A100 on steroids: 10,752 CUDA cores and 48 GB of video memory packed into a compact card. At the same time, the heat dissipation of the A6000 Ada is 300 W, while that of the A100 is 400 W. One of the key differences between the cards is that the A6000 Ada supports neither connection via NVLink nor sharing via MIG, so these are devices of different levels of functionality.

We see that the characteristics of the A6000 Ada look more promising than those of the A100. But it is more expensive, so a logical question arises: is it worth overpaying? To find the answer, we took an LLM in three sizes and gave our video cards a test drive.

Test – two GPUs solve the same problems under the same conditions

We test the video cards on fine-tuning a large language model in three sizes: meta-llama/Llama-2-7b-chat-hf, meta-llama/Llama-2-13b-chat-hf, and meta-llama/Llama-2-70b-chat-hf.

Ideally, training an LLM requires about 24 GB of video memory for every billion parameters, because the memory holds not only the model but also other components: gradients, optimizer states, temporary buffers, and so on. So with only one video card, as in our experiment, we cannot fully train most LLMs. There are several ways out: combine two cards via NVLink (or more cards via NVSwitch), apply quantization, or reduce the size of the model in some other way. Since we are interested in a fair test of each card, we will not combine cards to increase computing resources but will take the quantization route (and we will still run out of memory, but more on that later).
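To make the estimate above concrete, here is a rough sketch that applies the figure of about 24 GB per billion parameters to the three model sizes from the test. The function names and the constant are illustrative assumptions; real memory use depends on precision, optimizer, and sequence length.

```python
# Rule of thumb from the text: ~24 GB of GPU memory per billion parameters
# for full training (weights, gradients, optimizer states, buffers).
GB_PER_BILLION_PARAMS = 24  # assumption taken from the estimate in the text

CARDS_GB = {"A100": 40, "A6000 Ada": 48}


def full_training_memory_gb(params_billions: float) -> float:
    """Rough GPU memory needed for full (non-quantized) training, in GB."""
    return params_billions * GB_PER_BILLION_PARAMS


def fits_on_card(params_billions: float, card_memory_gb: float) -> bool:
    """True if the rough estimate fits into the card's memory."""
    return full_training_memory_gb(params_billions) <= card_memory_gb


for size in (7, 13, 70):
    need = full_training_memory_gb(size)
    verdict = ", ".join(
        f"{name}: {'fits' if fits_on_card(size, mem) else 'does not fit'}"
        for name, mem in CARDS_GB.items()
    )
    print(f"{size}B parameters: ~{need:.0f} GB needed ({verdict})")
```

Even the smallest model is far beyond a single 40 or 48 GB card by this estimate, which is why the test relies on quantization and LoRA.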

So, the models are loaded in quantized form using the bitsandbytes library. To make training possible at all, we use the LoRA approach as implemented in the peft library. The LoRA configuration stays the same (rank = 16) in all runs except for training the largest model on the A100: there we lowered the rank of the decomposed matrices to save memory so that the model and a batch could at least be loaded into GPU memory.
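To see why lowering the LoRA rank saves memory, the sketch below counts the trainable parameters LoRA adds to a single weight matrix. The 4096 x 4096 matrix size is an illustrative assumption, not a value taken from our runs, and the helper name is hypothetical.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two low-rank factors LoRA adds to one weight matrix."""
    # The original d_out x d_in matrix stays frozen; LoRA trains
    # B (d_out x rank) and A (rank x d_in) instead.
    return rank * (d_in + d_out)


HIDDEN = 4096  # illustrative assumption: one square projection matrix

frozen = HIDDEN * HIDDEN
for rank in (16, 8, 2):
    trainable = lora_trainable_params(HIDDEN, HIDDEN, rank)
    share = trainable / frozen
    print(f"rank={rank:>2}: {trainable:,} trainable vs {frozen:,} frozen ({share:.2%})")
```

The number of trainable parameters, and with it the gradients and optimizer states kept in memory, scales linearly with the rank.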

Preparing the working environment

Let's prepare a working environment for testing. We need to install quite a few packages, but this is done with literally two scripts.

First we run the script apt.sh. With its help we:

- disable automatic updates of the Nvidia and Linux kernel packages;

- update the list of packages;

- install new packages (for using repositories over HTTPS, checking the validity of SSL connections, transferring data to and from the server over various protocols, working with repositories, and generating passwords).

```bash
#!/bin/bash
set -e
set -o xtrace

# Disable automatic kernel updates
cat <<EOF > /etc/apt/apt.conf.d/51unattended-upgrades
Unattended-Upgrade::Package-Blacklist {
    "nvidia-";
    "linux-";
};
EOF

apt update

apt install -y \
    apt-transport-https \
    ca-certificates \
    curl \
    software-properties-common \
    pwgen
```

Script apt.sh.

Next we run the script nvidia-drivers-install.sh. With its help we:

- install the linux-headers packages (they are needed to build the DKMS module in which the out-of-tree driver is supplied; this driver, in turn, is needed for the Nvidia GPU to work);

- install Nvidia drivers;

- install nvitop, jupyter and other tools.

```bash
#!/bin/bash
set -e
set -o xtrace

# Optional: you may need to remove an outdated kernel package first
# linux-version list
# uname -r
# dpkg --list | grep -E -i --color 'linux-image|linux-headers'
# apt-get --purge autoremove
# apt --purge autoremove
# uname -a
# apt purge linux-image-5.4.0-166-generic
# dpkg --list | grep -E -i --color 'linux-image|linux-headers'

# Install Nvidia drivers for all kernels
for kernel in $(linux-version list); do
    apt install -y "linux-headers-${kernel}"
done

apt install -y nvidia-driver-510 htop python3-pip git

pip install nvitop==1.3.1 jupyter==1.0.0 accelerate==0.21.0 peft==0.4.0 bitsandbytes==0.40.2 transformers==4.31.0 trl==0.4.7 scipy==1.11.3 tensorboard==2.15.1 evaluate==0.4.1 scikit-learn==1.3.2
```

Script nvidia-drivers-install.sh.

Versions of the Python packages in the resulting environment:

absl-py==2.0.0

accelerate==0.21.0

aiohttp==3.8.6

aiosignal==1.3.1

anyio==4.0.0

argon2-cffi==23.1.0

argon2-cffi-bindings==21.2.0

arrow==1.3.0

asttokens==2.4.1

async-lru==2.0.4

async-timeout==4.0.3

attrs==23.1.0

Babel==2.13.1

beautifulsoup4==4.12.2

bitsandbytes==0.40.2

bleach==6.1.0

blinker==1.4

cachetools==5.3.2

certifi==2023.7.22

cffi==1.16.0

charset-normalizer==3.3.2

comm==0.2.0

command-not-found==0.3

contourpy==1.2.0

cryptography==3.4.8

cycler==0.12.1

datasets==2.14.7

dbus-python==1.2.18

debugpy==1.8.0

decorator==5.1.1

defusedxml==0.7.1

dill==0.3.7

distro==1.7.0

distro-info==1.1+ubuntu0.1

evaluate==0.4.1

exceptiongroup==1.1.3

executing==2.0.1

fastjsonschema==2.19.0

filelock==3.13.1

fonttools==4.45.0

fqdn==1.5.1

frozenlist==1.4.0

fsspec==2023.10.0

google-auth==2.23.4

google-auth-oauthlib==1.1.0

grpcio==1.59.2

httplib2==0.20.2

huggingface-hub==0.19.2

idna==3.4

importlib-metadata==4.6.4

ipykernel==6.26.0

ipython==8.17.2

ipywidgets==8.1.1

isoduration==20.11.0

jedi==0.19.1

jeepney==0.7.1

Jinja2==3.1.2

joblib==1.3.2

json5==0.9.14

jsonpointer==2.4

jsonschema==4.19.2

jsonschema-specifications==2023.11.1

jupyter==1.0.0

jupyter-console==6.6.3

jupyter-events==0.9.0

jupyter-lsp==2.2.0

jupyter_client==8.6.0

jupyter_core==5.5.0

jupyter_server==2.10.0

jupyter_server_terminals==0.4.4

jupyterlab==4.0.8

jupyterlab-pygments==0.2.2

jupyterlab-widgets==3.0.9

jupyterlab_server==2.25.1

keyring==23.5.0

kiwisolver==1.4.5

language-selector==0.1

launchpadlib==1.10.16

lazr.restfulclient==0.14.4

lazr.uri==1.0.6

Markdown==3.5.1

MarkupSafe==2.1.3

matplotlib==3.8.2

matplotlib-inline==0.1.6

mistune==3.0.2

more-itertools==8.10.0

mpmath==1.3.0

multidict==6.0.4

multiprocess==0.70.15

nbclient==0.9.0

nbconvert==7.11.0

nbformat==5.9.2

nest-asyncio==1.5.8

netifaces==0.11.0

networkx==3.2.1

notebook==7.0.6

notebook_shim==0.2.3

numpy==1.26.2

nvidia-cublas-cu12==12.1.3.1

nvidia-cuda-cupti-cu12==12.1.105

nvidia-cuda-nvrtc-cu12==12.1.105

nvidia-cuda-runtime-cu12==12.1.105

nvidia-cudnn-cu12==8.9.2.26

nvidia-cufft-cu12==11.0.2.54

nvidia-curand-cu12==10.3.2.106

nvidia-cusolver-cu12==11.4.5.107

nvidia-cusparse-cu12==12.1.0.106

nvidia-ml-py==12.535.133

nvidia-nccl-cu12==2.18.1

nvidia-nvjitlink-cu12==12.3.52

nvidia-nvtx-cu12==12.1.105

nvitop==1.3.1

oauthlib==3.2.0

overrides==7.4.0

packaging==23.2

pandas==2.1.3

pandocfilters==1.5.0

parso==0.8.3

peft==0.4.0

pexpect==4.8.0

Pillow==10.1.0

platformdirs==4.0.0

prometheus-client==0.18.0

prompt-toolkit==3.0.41

protobuf==4.23.4

psutil==5.9.6

ptyprocess==0.7.0

pure-eval==0.2.2

pyarrow==14.0.1

pyarrow-hotfix==0.5

pyasn1==0.5.0

pyasn1-modules==0.3.0

pycparser==2.21

Pygments==2.16.1

PyGObject==3.42.1

PyJWT==2.3.0

pymacaroons==0.13.0

PyNaCl==1.5.0

pyparsing==2.4.7

python-apt==2.4.0+ubuntu2

python-dateutil==2.8.2

python-json-logger==2.0.7

pytz==2023.3.post1

PyYAML==5.4.1

pyzmq==25.1.1

qtconsole==5.5.0

QtPy==2.4.1

referencing==0.31.0

regex==2023.10.3

requests==2.31.0

requests-oauthlib==1.3.1

responses==0.18.0

rfc3339-validator==0.1.4

rfc3986-validator==0.1.1

rpds-py==0.12.0

rsa==4.9

safetensors==0.4.0

scikit-learn==1.3.2

scipy==1.11.3

screen-resolution-extra==0.0.0

SecretStorage==3.3.1

Send2Trash==1.8.2

six==1.16.0

sklearn==0.0.post11

sniffio==1.3.0

soupsieve==2.5

ssh-import-id==5.11

stack-data==0.6.3

sympy==1.12

tensorboard==2.15.1

tensorboard-data-server==0.7.2

termcolor==2.3.0

terminado==0.18.0

threadpoolctl==3.2.0

tinycss2==1.2.1

tokenizers==0.13.3

tomli==2.0.1

torch==2.1.0

tornado==6.3.3

tqdm==4.66.1

traitlets==5.13.0

transformers==4.31.0

triton==2.1.0

trl==0.4.7

types-python-dateutil==2.8.19.14

typing_extensions==4.8.0

tzdata==2023.3

ubuntu-advantage-tools==8001

ufw==0.36.1

unattended-upgrades==0.1

uri-template==1.3.0

urllib3==2.1.0

wadllib==1.3.6

wcwidth==0.2.10

webcolors==1.13

webencodings==0.5.1

websocket-client==1.6.4

Werkzeug==3.0.1

widgetsnbextension==4.0.9

xkit==0.0.0

xxhash==3.4.1

yarl==1.9.2

zipp==1.0.0

Now we have a virtual server with Nvidia drivers, a set of tools, and a Python environment with the libraries needed to run an LLM.
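Before starting the tests, it can be useful to confirm that the environment really matches the pinned versions. The following is a minimal sketch using only the standard library; the `find_mismatches` helper is a hypothetical name, and the pins are copied from the pip command in the install script.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins copied from the pip install line of nvidia-drivers-install.sh
PINS = {
    "accelerate": "0.21.0",
    "peft": "0.4.0",
    "bitsandbytes": "0.40.2",
    "transformers": "4.31.0",
    "trl": "0.4.7",
}


def find_mismatches(pins, get_version=version):
    """Return {package: (expected, installed)} for every pin that is not met.

    `installed` is None when the package is missing. `get_version` is
    injectable so the check can be exercised without the real packages.
    """
    mismatches = {}
    for name, expected in pins.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            installed = None
        if installed != expected:
            mismatches[name] = (expected, installed)
    return mismatches


for name, (expected, installed) in find_mismatches(PINS).items():
    print(f"{name}: expected {expected}, found {installed}")
```

An empty output means that every pinned package is installed with the expected version.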

Running jupyter-notebook

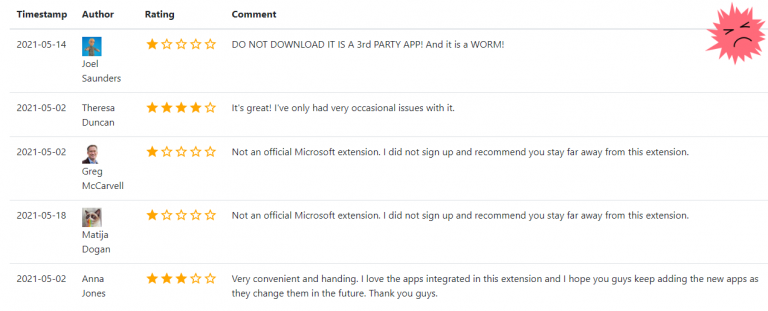

The training part of the data set was used for testing, with 1,000 texts ranging from 58 to 11,400 characters.

The distribution of text sizes is shown in the graph:
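A distribution like this can be computed with a few lines of standard-library Python. This is only a sketch: the helper names and the bin width are assumptions, and the sample texts below are placeholders rather than the data set used in the test.

```python
from collections import Counter
from statistics import median


def length_summary(texts):
    """Basic statistics of text lengths, in characters."""
    lengths = [len(t) for t in texts]
    return {
        "count": len(lengths),
        "min": min(lengths),
        "max": max(lengths),
        "median": median(lengths),
    }


def length_histogram(texts, bin_width=1000):
    """Count texts per length bin; keys are the lower bound of each bin."""
    bins = Counter((len(t) // bin_width) * bin_width for t in texts)
    return dict(sorted(bins.items()))


# Placeholder texts; in practice this would be the training split.
sample = ["a" * 58, "b" * 950, "c" * 3200, "d" * 11400]
print(length_summary(sample))
for lower, count in length_histogram(sample).items():
    print(f"{lower:>6}-{lower + 999:<6} {'#' * count}")
```

Text length matters here because longer texts produce longer token sequences, which directly increases memory use per batch.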

Test results

Of course, we tested both video cards using the same script. We summarized the training results of each model and the results of text generation in tables.

The Out of Memory (CUDA OOM) error indicates that the video card did not have enough memory to complete the task. Here we will not go into detail about RAM loading, since the purpose of the test is to compare the capabilities of video cards, and not to find the optimal way to train LLM. If you're interested in learning more about how GPU memory is consumed when training large language models, we recommend this article.

When reading all the tables below, it is worth considering that memory percentages and GPU core usage are taken from the nvitop utility.
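We read these values from the nvitop interface. If you want to log similar numbers from a script, one possible alternative (not what we used in the test) is to query `nvidia-smi` in CSV mode and parse the output. The helper names below are hypothetical.

```python
import subprocess

# nvidia-smi query that prints one CSV line per GPU, without header or units
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total,utilization.gpu",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text):
    """Turn lines like '20480, 40960, 98' into percentages per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        used, total, util = (float(value) for value in line.split(","))
        stats.append({
            "memory_pct": round(100 * used / total, 1),
            "util_pct": util,
        })
    return stats


def query_gpu_stats():
    """Run nvidia-smi and parse its output (requires an Nvidia driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

Calling `query_gpu_stats()` in a loop during training would give a rough time series of memory and core usage for each card.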

Training meta-llama/Llama-2-7b-chat-hf

As we can see, both video cards cope with the load when working with small batches. Incidentally, the comparison clearly shows why you cannot rely on technical characteristics alone when choosing a video card: the A100, which has less memory and fewer cores, handled one of the tests much better than the more powerful A6000 Ada, although it used 97.7% of its computing resources.

However, when we fed batches of 25 and 27 texts, the A100 ran into CUDA OOM, while the A6000 Ada kept working and completed the training. So no unambiguous conclusion can be drawn. If the card will be dedicated entirely to one task and the batches will contain no more than 20 texts, the A100 deserves a closer look. In favor of the A6000 Ada, its larger memory allows testing more batch size options, which can affect the convergence of training.
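A batch size sweep of this kind can be sketched as a loop that records either the elapsed time or an out-of-memory failure for each size. This is a simplified illustration, not our test script: `run_step` is a hypothetical callable that trains on one batch, and with PyTorch you would pass `torch.cuda.OutOfMemoryError` as `oom_error`.

```python
import time


def sweep_batch_sizes(batch_sizes, run_step, oom_error=MemoryError):
    """Try each batch size; map it to elapsed seconds, or None on OOM."""
    results = {}
    for batch_size in batch_sizes:
        start = time.perf_counter()
        try:
            run_step(batch_size)
        except oom_error:
            # The card ran out of memory for this batch size.
            results[batch_size] = None
            continue
        results[batch_size] = time.perf_counter() - start
    return results


def simulated_step(batch_size):
    """Stand-in for a real training step: fails above 20 texts per batch."""
    if batch_size > 20:
        raise MemoryError("simulated CUDA OOM")


for size, elapsed in sweep_batch_sizes([10, 20, 25, 27], simulated_step).items():
    print(size, "OOM" if elapsed is None else f"{elapsed:.4f} s")
```

Catching the error per batch size lets a single run map out where each card hits its memory limit instead of stopping at the first failure.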

Training meta-llama/Llama-2-13b-chat-hf

As with the previous model, until the available memory is exhausted, the A100 shows very good results and outpaces the A6000 Ada in training speed. We approached the memory limit on the A100 (96.3% of available memory) only when feeding batches of 15 texts.

An attempt to feed batches of 25 or more texts sent both cards into CUDA OOM. Clearly, with only one video card, be it the A100 or the A6000 Ada, there is not enough memory. The reason is the same as before: batches that are too heavy, together with a large model and the components needed for training, fit into neither 40 nor 48 GB.

Training meta-llama/Llama-2-70b-chat-hf

We conducted this test more out of curiosity, to find out whether the A6000 Ada alone can handle training a model with 70 billion parameters. Conclusion: it can, but only if we give it one text at a time. An attempt to send two texts in a batch exhausts the memory.

We didn't expect much from the A100. Here, quantization alone is not enough: to save some memory and simply load the model, we had to lower the rank of the decomposed matrices to two and eight (the same change to the LoRA configuration that was mentioned at the beginning) and also feed one text at a time. From a practical point of view, however, this makes no sense: it is more advisable to take either one A6000 Ada or two A100s connected via NVLink.

Text generation

In terms of text generation, the A6000 Ada was the leader in every test.

As expected, the difference in output speed becomes more noticeable as the number of tokens grows. For clarity, we have converted the table values into graphs. Regardless of the model size and the number of tokens, the A6000 Ada is predictably faster.
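Generation speed can be compared as tokens per second. Below is a minimal timing sketch; `generate` is a hypothetical callable that produces up to `max_new_tokens` tokens and returns how many it actually produced, and the clock is injectable so the helper can be checked without a GPU.

```python
import time


def measure_throughput(generate, max_new_tokens, clock=time.perf_counter):
    """Return generated tokens per second for a single generation call."""
    start = clock()
    produced = generate(max_new_tokens)
    elapsed = clock() - start
    if elapsed <= 0:
        return float("inf")
    return produced / elapsed


def dummy_generate(max_new_tokens):
    """Stand-in for a real model call; sleeps briefly instead of generating."""
    time.sleep(0.01)
    return max_new_tokens


for n_tokens in (50, 100, 200):
    print(n_tokens, f"{measure_throughput(dummy_generate, n_tokens):.0f} tokens/s")
```

Measuring the same prompt and token count on both cards keeps the comparison fair; several runs per setting help smooth out warm-up effects.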

As we have already noted, training the largest model, meta-llama/Llama-2-70b-chat-hf, on the A100 was possible only with a changed LoRA configuration, and even then it was not completed. However, this in no way means that the A100 is unsuitable for generating text with large language models. During testing, we did not consider all options. For example, we did not combine two A100s via NVLink.

Conclusion

The obvious conclusion is that the A6000 Ada performs better than the A100, so use it for resource-intensive tasks. But a test was hardly needed to reach that conclusion. The details are more interesting:

- The A100 is justified for training “light” and “medium” LLMs with small batches: this video card copes with the tasks in the same time as the A6000 Ada, or even faster.

- The A6000 Ada, thanks to its larger memory capacity and number of cores, leaves room for experimentation with batch sizes.

- The A6000 Ada copes faster with generative tasks (which is what all of this was for), so this card looks interesting for working with already trained LLMs.

- The lack of support for MIG and NVLink limits how the A6000 Ada can be used: it is excellent hardware for tasks to which it is dedicated entirely and which it solves alone. If sharing resources or combining cards to increase computing power is relevant, the A100 is worth considering.

As you can see, we do not have a clear conclusion about which card is better for working with LLM. We conducted testing to see the real capabilities of the A100 in comparison with the A6000 Ada under the same conditions. The choice of a particular GPU model should be made based on the number and complexity of real tasks, resource sharing capabilities and, of course, available finance.

However, we plan to launch several more tests of various GPU configurations to solve ML problems. In the comments, suggest candidates for testing.
