How assessing the relevance of a resume helped employers find candidates more effectively

Hello! My name is Vladislav Urikh, and I am a product analyst at Avito Rabota. My team and I work on improving the experience of employers who look for employees on Avito, as well as the quality of resumes. In this article, I'll explain how we came up with a new approach to assessing the relevance of resumes, that is, whether the applicant is still actually looking for work.

We saw a need to change the mechanics of working with resumes

On Avito Rabota you can publish your resume and search for employees. When companies or individuals are looking for workers, we offer them profiles of suitable people from the database. But in order to see the phone number, or to be able to write to the applicant, employers need to pay for a package of contacts.

We regularly study feedback from job seekers and employers, and as a result we have identified several important growth points:

Difficulties on the employers' side:

~71% of employers purchased a contact with a job seeker but did not receive a response. This could happen for various reasons, for example, if the person had uninstalled our app and did not see the notifications.

~40% of employers ran into outdated resumes. This can happen, for example, when applicants find a job but do not delete their profile. We assumed these applicants were still looking and kept showing their resumes to employers. An employer would buy a contact and reach out to the person, only to find that the user had simply forgotten to delete the resume.

Difficulties on the applicants' side:

Besides employers being dissatisfied with the quality of resumes, we noticed that contacts were distributed unevenly among applicants: 62% received none at all, while 7% received 5 or more.

To solve these problems, we decided to archive all outdated resumes and keep in the database only the resumes of people who are actually looking for work.

We decided to archive outdated resumes, but ran into problems

We sent push notifications and called users to check whether they were still looking for work. If there was no response, the resume was archived. This removed some of the outdated resumes, but it led to new problems:

The number of contacts per resume grew by 30%. At the same time, the share of resumes that received at least one contact did not increase. In other words, we began to offer the same resumes more often.

There was a shortage of resumes: the supply from applicants no longer covered the demand from employers.

We analyzed the results and realized that we needed to disable archiving and take a different approach.

So that Avito could help job seekers find jobs and employers find employees, we decided to develop and implement a model for assessing the relevance of resumes. Employers would then see more resumes of people who are actually looking for work, and applicants would receive more targeted contacts. But before developing the model, we had to check whether the relevance of resumes affects business metrics.

We began to develop an ML model to determine the relevance of resumes

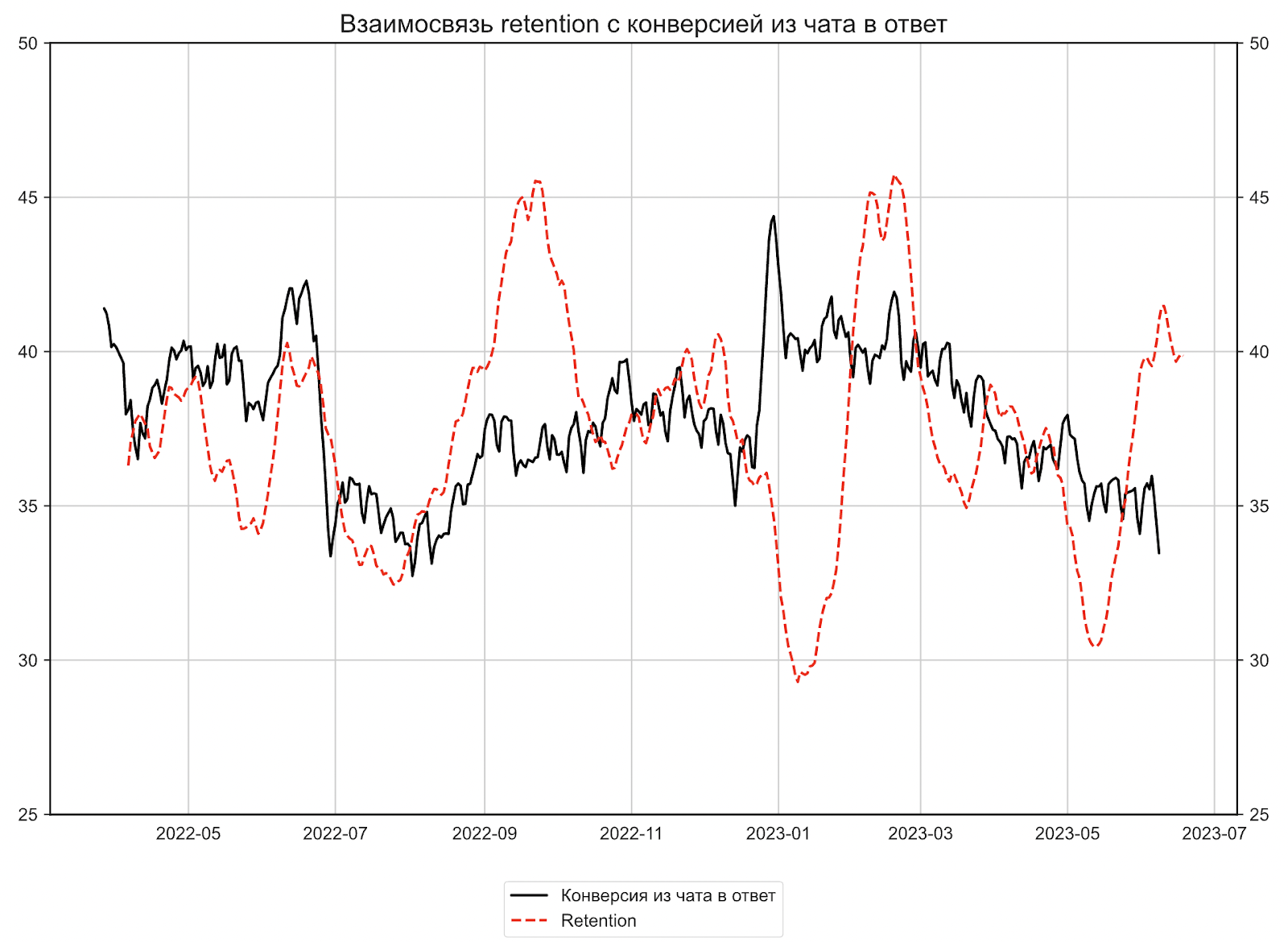

We determined the target metric. We ran a Granger causality test and saw that resume connectivity, that is, whether applicants answer when employers contact them, affects employer retention. If employers write to and call applicants and get responses, they are more likely to fill their vacancy and then come back to Avito to look for employees again.

We also found that if we show current resumes higher in search, applicants become more likely to receive a suitable offer, and the number of hires grows as well.

We chose the share of answered messages as the main metric, since its growth improves employer retention, measured by repeat purchases.

We selected features that indicate that a user is looking for a job. We decided to train the ML model on data from job seekers' chats with potential employers. We framed this as binary classification: 1 means the applicant responded to the employer within three days, 0 means they did not respond.
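The labeling rule above can be sketched as a small function. This is only an illustration: the function name, the timestamps, and the choice to treat a missing reply as 0 are assumptions, not details from our pipeline.

```python
from datetime import datetime, timedelta
from typing import Optional

# "Within three days" comes from the text; the constant name is hypothetical.
RESPONSE_WINDOW = timedelta(days=3)


def response_label(contacted_at: datetime, replied_at: Optional[datetime]) -> int:
    """Return 1 if the applicant replied within the window, otherwise 0."""
    if replied_at is None:
        return 0  # no reply at all
    delay = replied_at - contacted_at
    return int(timedelta(0) <= delay <= RESPONSE_WINDOW)


# Illustrative timestamps only.
first_message = datetime(2023, 9, 1, 10, 0)
print(response_label(first_message, datetime(2023, 9, 2, 18, 30)))  # replied the next day
print(response_label(first_message, datetime(2023, 9, 6, 9, 0)))    # replied after the window
print(response_label(first_message, None))                          # never replied
```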

In this way, we wanted to train the model to estimate the probability that an applicant's resume is still relevant. The estimate was based on many features of the resumes themselves and of applicants' behavior on the site:

applicant activity factors: how actively a person responds to vacancies and how they reply to employers who write to them;

resume content factors: how much information about the applicant the resume contains, whether a suitable type of employment is indicated, how actively the person is looking for work, and whether they are ready to start tomorrow;

resume interaction factors: how often the applicant updates the information in the resume.

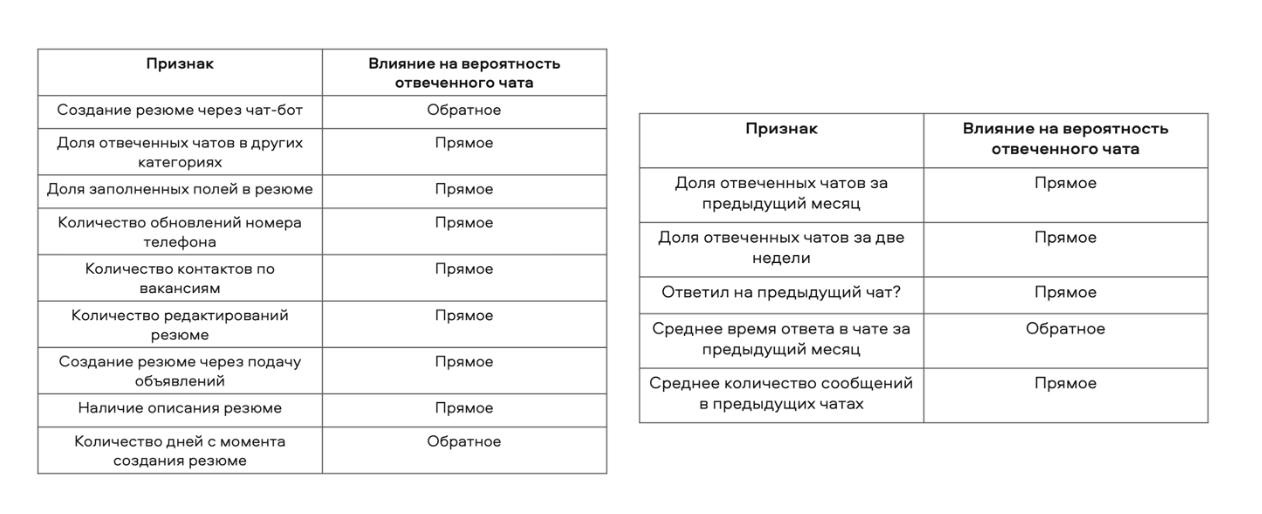

We determined the significance of the features for the first contact and for subsequent ones. After describing the relevance indicators, we began to check how different behavioral indicators affect conversion to an answered chat.

If the influence is positive, the user's behavior indicates that the resume is relevant. A negative influence means that the action increases the likelihood that the resume is outdated and the user will not respond.

We divided the features into two tables: significance for the first contact and for subsequent ones. This lets us assess equally well both new resumes that employers have not yet contacted and resumes whose owners have already been written to or called.

We chose a model and checked how it compares with reality. We tried several classification algorithms and settled on Random Forest Classifier. This algorithm showed the best result in terms of the ROC-AUC metric – 0.751.
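As a reminder of what the ROC-AUC figure means, here is a minimal pure-Python sketch of the metric: the share of (responded, did not respond) pairs in which the model scores the responder higher. The labels and scores below are made up for illustration and are not our data.

```python
def roc_auc(labels, scores):
    """Share of (positive, negative) pairs ranked correctly; ties count as half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one example of each class")
    correct = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(positives) * len(negatives))


# Hypothetical labels (1 = responded within three days) and model scores.
labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.35, 0.8, 0.2, 0.7]
print(round(roc_auc(labels, scores), 3))
```

A value of 0.5 corresponds to random ordering and 1.0 to a perfect one, so 0.751 means the model orders a responder above a non-responder in roughly three pairs out of four.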

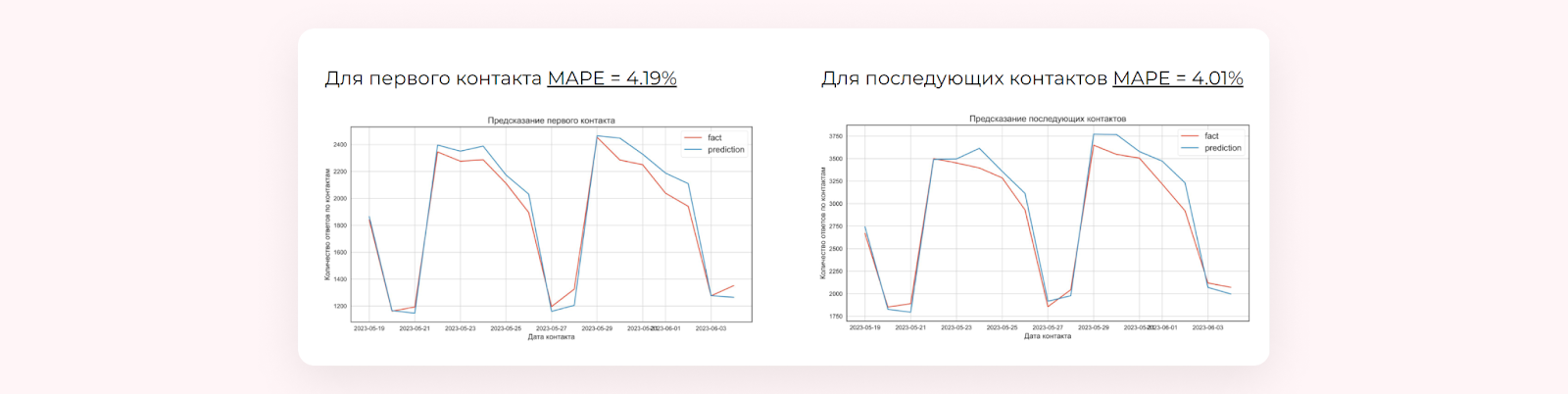

It was also important to us that the model's scores correlated well with the actual probability of a response. To check this, we took the scores of the resumes that were contacted on a certain day and compared them with the actual number of answered contacts.

For example, on September 1, a thousand users responded to messages from employers. We took all the resumes that were contacted that day and added up their scores. We then compared this sum of the model's estimates with the actual number of chat responses.

The probability of response was assessed separately for the first contact and for subsequent ones.

We conducted tests and saw that the average difference between the predicted and actual values was 4.19% for the first contact, and 4.01% for subsequent ones.

In other words, across the whole population we can estimate the total number of responses quite accurately by weighting resume contacts by the model's scores.
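The check described above can be sketched as follows. It assumes the difference is measured relative to the actual number of responses; the function name and the sample numbers are hypothetical.

```python
def calibration_gap(scores, responded):
    """Relative difference between the expected and actual number of responses."""
    expected = sum(scores)      # sum of predicted response probabilities
    actual = sum(responded)     # number of contacts that were actually answered
    if actual == 0:
        raise ValueError("no responses in this sample")
    return abs(expected - actual) / actual


# Hypothetical scores for resumes contacted on one day, and whether each contact was answered.
day_scores = [0.8, 0.6, 0.3, 0.7, 0.2]
day_responded = [1, 1, 0, 1, 0]
print(f"{calibration_gap(day_scores, day_responded):.1%}")
```

If the model is well calibrated, the sum of scores stays close to the real count of responses, and this gap stays small from day to day.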

We started implementing the model in search

We determined which stage of search ranking to integrate into. On Avito, ads are ranked in two stages, called L1 and L2.

For example, a person searches for a designer. At stage L1, we select the top 300 most suitable ads from the entire pool. At stage L2, we sort them again so that the most relevant ads are at the top.
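The two stages can be sketched like this. Only the two-stage structure and the limit of 300 come from the text; the scoring functions and the `match` and `quality` fields are hypothetical stand-ins.

```python
L1_LIMIT = 300  # size of the candidate set mentioned in the text


def two_stage_rank(ads, l1_score, l2_score, limit=L1_LIMIT):
    """L1: keep the top `limit` ads by a cheap score. L2: re-sort the survivors."""
    candidates = sorted(ads, key=l1_score, reverse=True)[:limit]
    return sorted(candidates, key=l2_score, reverse=True)


# Toy data with a tiny limit so that the cutoff is visible.
ads = [
    {"id": 1, "match": 0.9, "quality": 0.2},
    {"id": 2, "match": 0.8, "quality": 0.9},
    {"id": 3, "match": 0.1, "quality": 1.0},
    {"id": 4, "match": 0.7, "quality": 0.5},
]
ranked = two_stage_rank(
    ads,
    l1_score=lambda ad: ad["match"],
    l2_score=lambda ad: ad["quality"],
    limit=3,
)
print([ad["id"] for ad in ranked])
```

Ad 3 has the best L2 score but never reaches L2 because it is cut at L1. When the pool is smaller than the limit, nothing is cut and only the L2 order matters.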

To understand at which ranking stage to integrate our model, we began to simulate new search results. We re-ranked the ads using the model and checked whether the relevance of the results increased. Here's what we found:

In search results with fewer than 300 ads (48% of all results), L1 ranking has no effect on the apparent relevance of the results, because every ad passes the top-300 cutoff anyway. But if we integrate the model into L2 ranking, we can get a 12–14% increase in answered chats.

In search results with more than 300 ads (52% share), we cannot analytically evaluate the implementation of the model at the L1 stage. But on L2 we will also get a 12–14% increase in answered chats.

We assessed how actuality should influence search results. We cannot rank resumes only by how current they are; their relevance to the query must also be taken into account. Otherwise, we would show employers resumes that are current but do not match the request. For example, someone looking for a courier would see an up-to-date profile of an accountant first in the search.

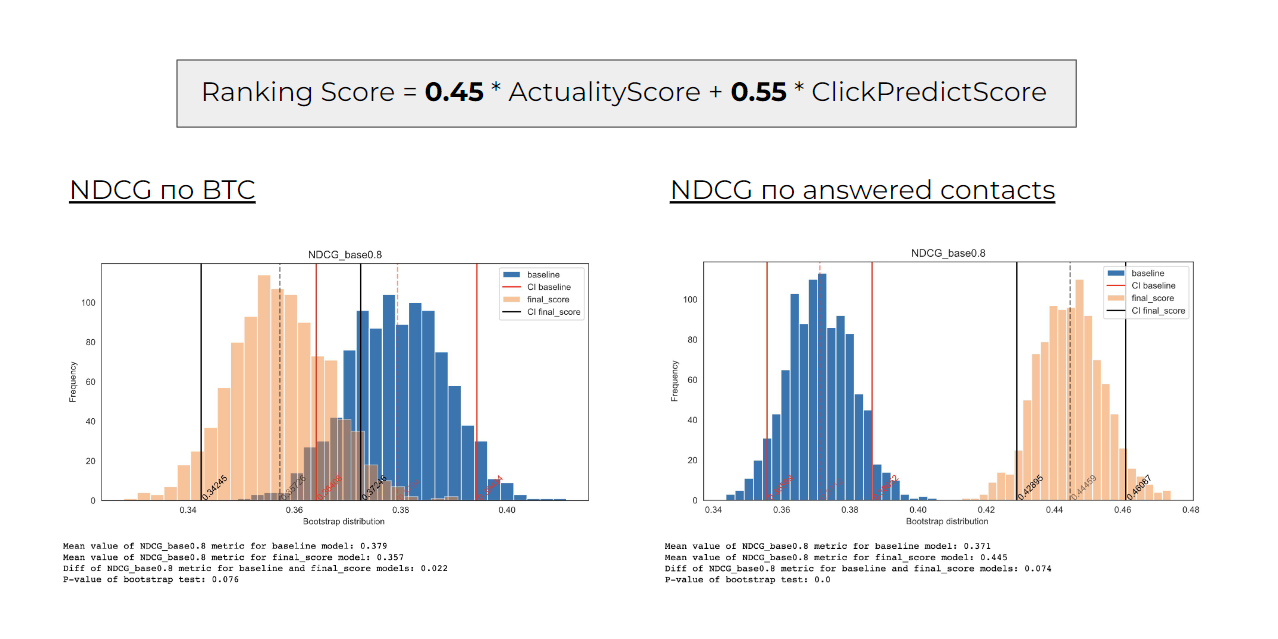

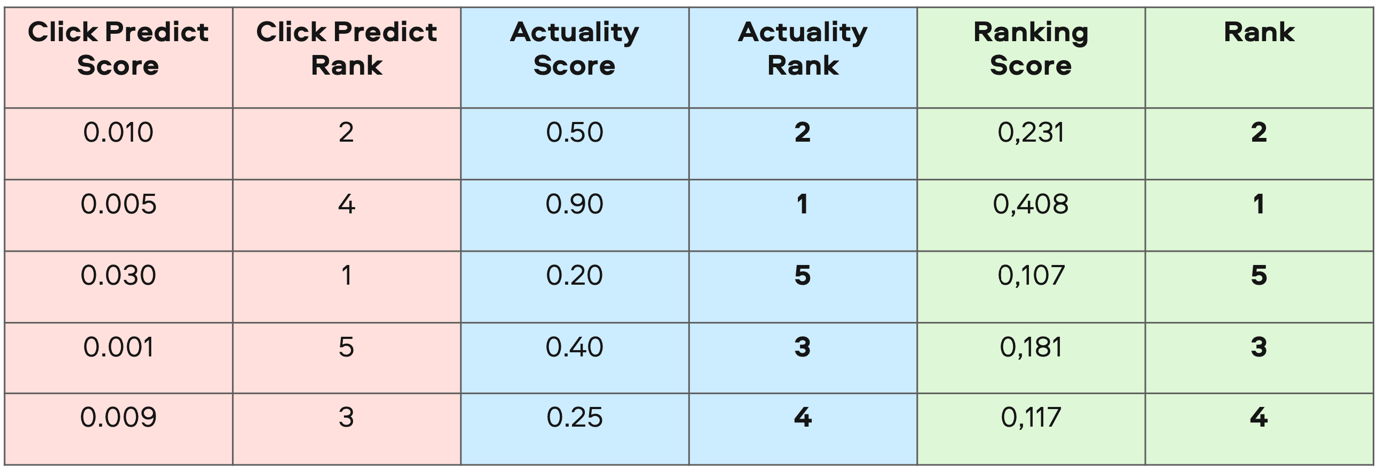

To determine the position of a resume in search results (RankingScore), in addition to the actuality score (ActualityScore, how likely the resume is to still be current) we introduced the Click Predict Score metric, which reflects the clickability of the resume, that is, its relevance to the query. Then, based on historical data, we determined the proportions in which to mix the two scores.

These weights let us increase the share of answered contacts by raising more current resumes in search results, without losing revenue because of profiles that poorly match the query.
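A weighted mix of the two scores can be sketched as below. This is not our production formula: the linear form, the function name, and the sample values are assumptions, and the 0.45 default weight is borrowed from the 45% to 55% split mentioned later in the article, where which share belongs to which score is itself an assumption.

```python
def ranking_score(actuality_score, click_predict_score, actuality_weight=0.45):
    """Linear blend of the two scores; the default weight is illustrative only."""
    if not 0.0 <= actuality_weight <= 1.0:
        raise ValueError("actuality_weight must be between 0 and 1")
    return (actuality_weight * actuality_score
            + (1.0 - actuality_weight) * click_predict_score)


# Hypothetical resumes for the query "courier": (ActualityScore, Click Predict Score).
resumes = {
    "accountant, very active": (0.95, 0.05),
    "courier, stale resume": (0.20, 0.80),
    "courier, active": (0.70, 0.75),
}
by_blend = sorted(resumes, key=lambda name: ranking_score(*resumes[name]), reverse=True)
by_actuality = sorted(resumes, key=lambda name: resumes[name][0], reverse=True)
print(by_blend)      # the blend keeps couriers on top
print(by_actuality)  # actuality alone would put the accountant first
```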

We assessed how our current search results differ from the ideal. We consider ideal a set of results in which the employer writes to just one applicant, and that applicant eventually becomes an employee.

The normalized discounted cumulative gain (NDCG) metric measures how close the actual results are to this ideal. It was important for us that NDCG for employers' target actions did not decline, and that the number of answered chats increased. That is exactly what happened.
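For reference, NDCG in its standard form can be computed as in the sketch below. Using a gain of 1 for a resume that received a target action and 0 otherwise is an assumption made for this example.

```python
import math


def dcg(gains):
    """Discounted cumulative gain: each gain is divided by log2(position + 1)."""
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains))


def ndcg(gains):
    """DCG of the shown order divided by the DCG of the ideal (sorted) order."""
    ideal = dcg(sorted(gains, reverse=True))
    return dcg(gains) / ideal if ideal > 0 else 0.0


# Gains listed in the order resumes were shown; 1 = employer made a target action.
print(ndcg([1, 1, 0, 0]))            # useful resumes already on top
print(round(ndcg([0, 1, 0, 1]), 3))  # useful resumes pushed down the list
```

The closer the value is to 1, the closer the shown order is to the ideal one, in which every resume that earns a target action sits above every resume that does not.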

We conducted A/B tests in search and saw that the number of answered chats increased

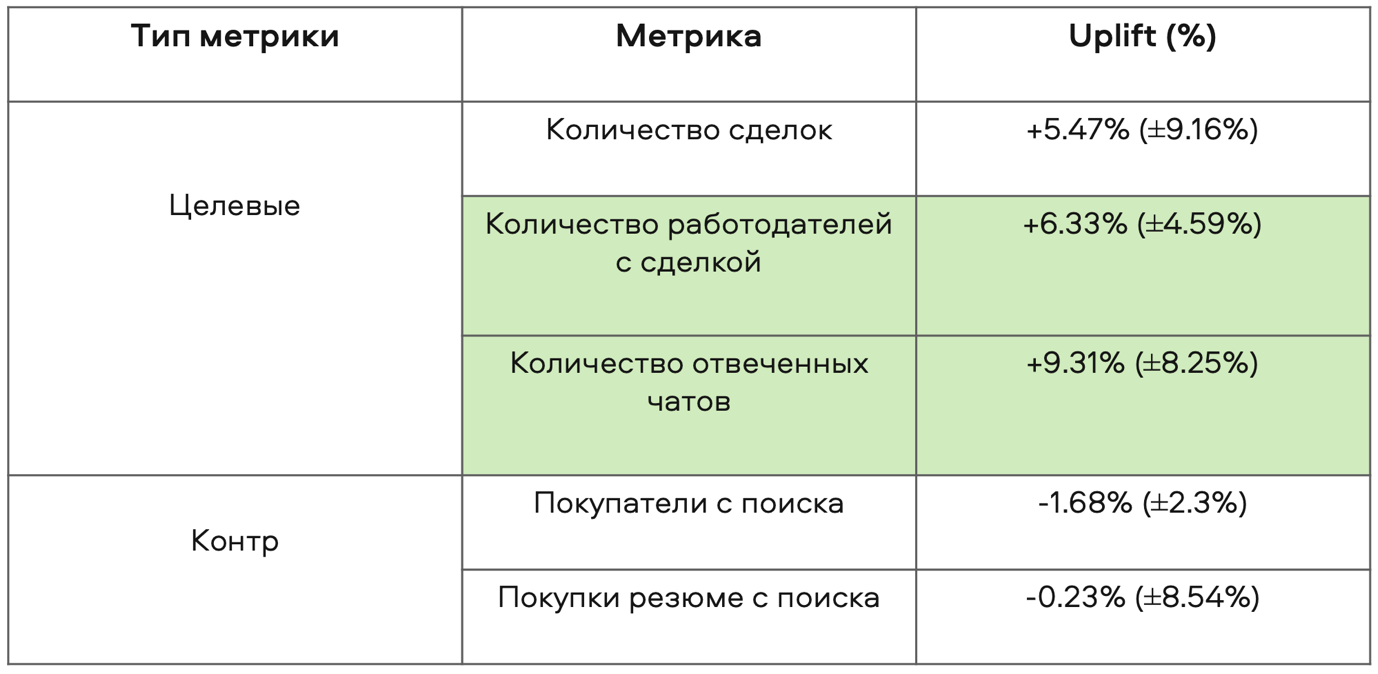

First A/B. Already in the first test, we saw growth in the key metrics. With the new search results produced by our model, the number of answered chats increased: applicants began to respond to employers more often because we displayed current resumes higher.

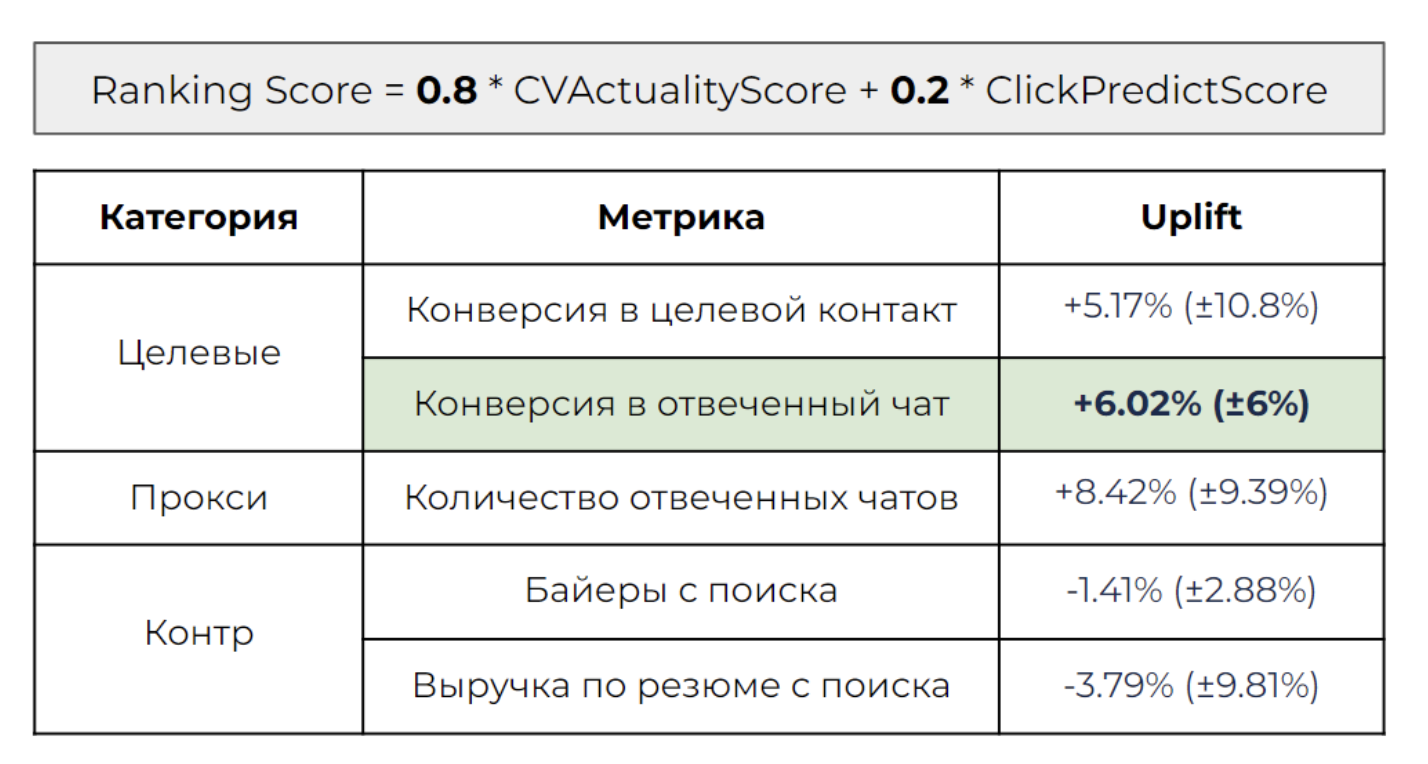

Second A/B. The second time, we decided to increase the share of actuality in the final score and used weights of 80% to 20%.

We saw that the conversion to an answered chat increased, while the number of answered chats and the conversion to a target contact did not fall.

We began to implement the model in the recommendations block

As I wrote above, we implemented the model at the search stage so that it would rank resumes, showing the most current ones at the top and moving outdated ones down the search results. Since the two previous A/B tests showed good results, we decided not to stop there and began to implement the model in the recommendations block.

As before, ahead of implementing the model in recommendations, we compared the results produced with our model against the ideal results. We had a test group and a control group, and the check convinced us that the results of the test group were significantly higher than those of the control group.

We conducted A/B tests for recommendations

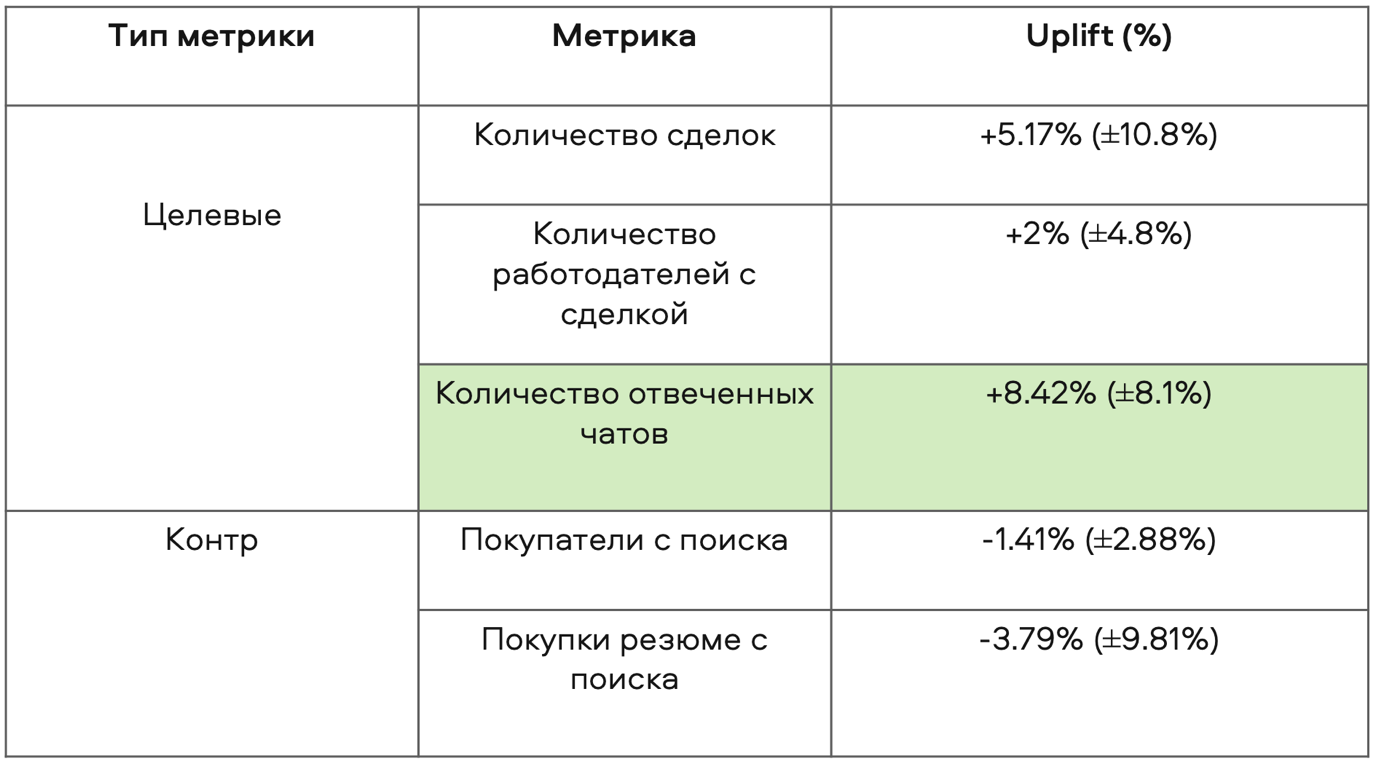

We decided to take the same approach as in search and rank ads using a formula with the same values:

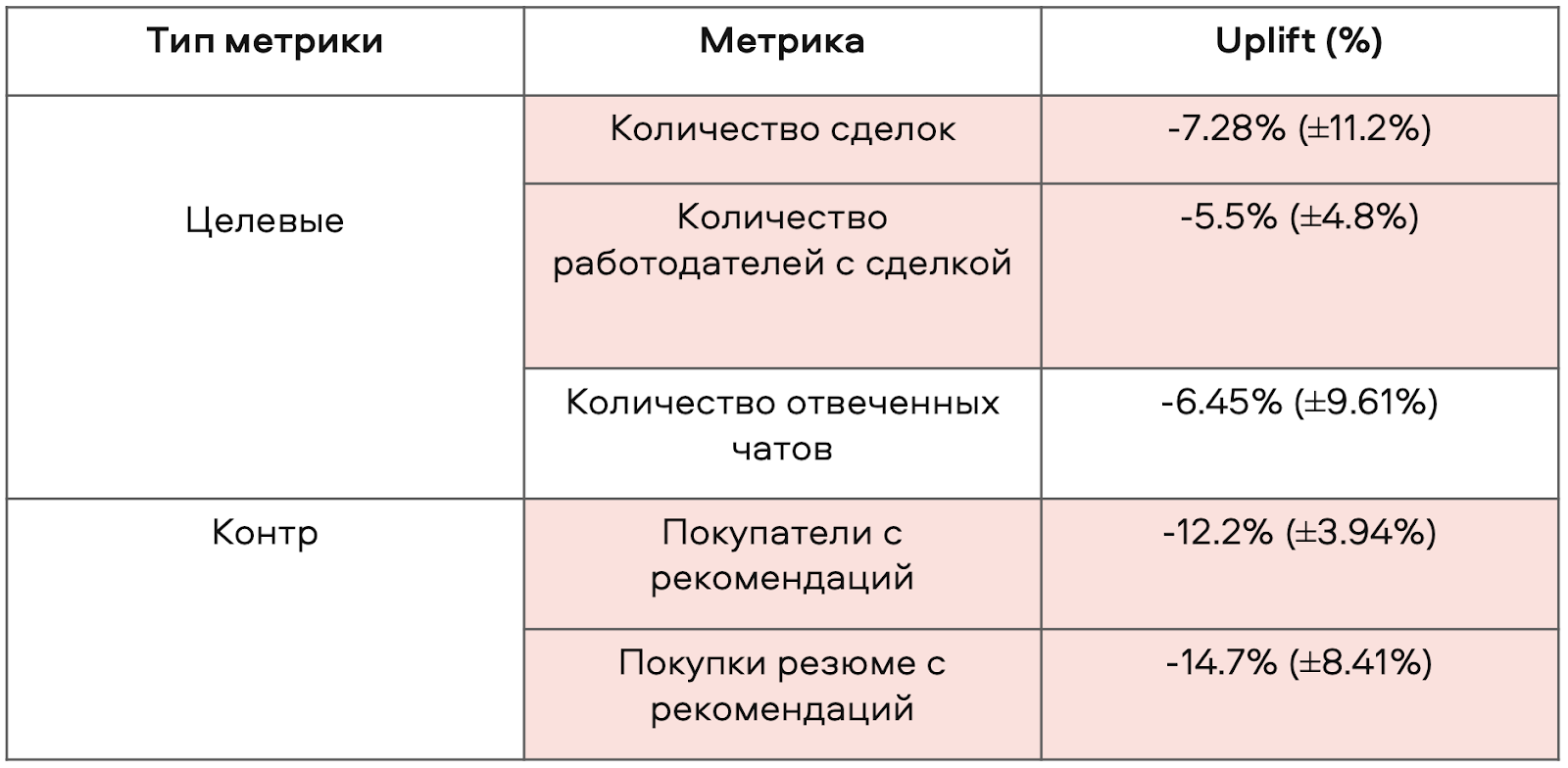

We conducted the first A/B test and got the following results:

The test was unsuccessful: all key metrics fell. We found that in recommendations, unlike in search, the relevance and actuality scores have different expected values and variances, so a weighted sum lets one of them dominate.

The picture below shows that the final ranking was identical to the ranking by actuality. This means that we lost the relevance of recommendations: for example, we began to recommend resumes of accountants or SMM managers to employers who had previously been looking for drivers.

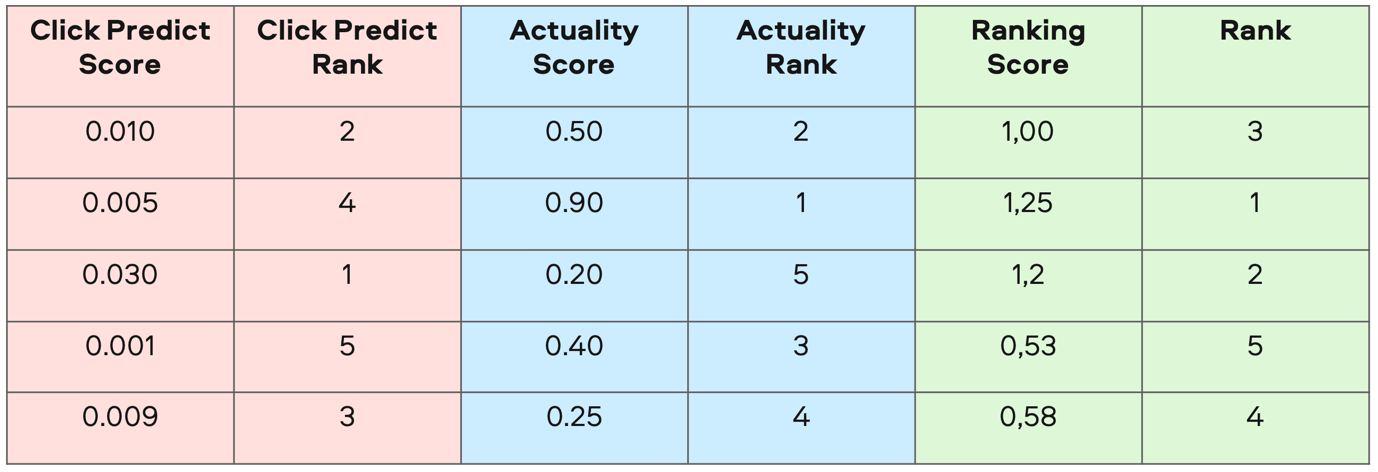

We realized that if we kept the old weights for actuality and relevance, 45% to 55%, the relevance scores would not contribute to the ranking. Therefore, as a simple heuristic, we switched from scores to ranks and began to rank resumes by a combination of their ranks.

Now the final ranking of recommendations no longer coincided with the ranking by actuality. The new ranking took into account both the click-through rate, that is, relevance, and the likelihood that the applicant would respond to the employer.
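The effect can be reproduced on toy numbers. In the sketch below the relevance scores vary much less than the actuality scores, so a weighted sum of raw scores simply repeats the actuality order, while a weighted sum of ranks lets both signals contribute. The data, the function names, and the way ranks are combined are assumptions for illustration, not our exact heuristic.

```python
def to_ranks(values):
    """Convert scores to ranks, where rank 1 is the highest score."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0] * len(values)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def order_by_score_blend(actuality, relevance, w=0.45):
    """Item indices sorted by a weighted sum of raw scores (higher is better)."""
    blended = [w * a + (1 - w) * r for a, r in zip(actuality, relevance)]
    return sorted(range(len(blended)), key=lambda i: blended[i], reverse=True)


def order_by_rank_blend(actuality, relevance, w=0.45):
    """Item indices sorted by a weighted sum of ranks (lower is better)."""
    blended = [w * a + (1 - w) * r
               for a, r in zip(to_ranks(actuality), to_ranks(relevance))]
    return sorted(range(len(blended)), key=lambda i: blended[i])


# Hypothetical scores: actuality is spread widely, relevance is squeezed into a narrow band.
actuality = [0.9, 0.5, 0.1, 0.7]
relevance = [0.010, 0.012, 0.011, 0.014]

print(order_by_score_blend(actuality, relevance))  # same order as actuality alone
print(order_by_rank_blend(actuality, relevance))   # relevance now changes the order
```

Ranks put both signals on the same scale regardless of their means and variances, which is why the weights start to matter again.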

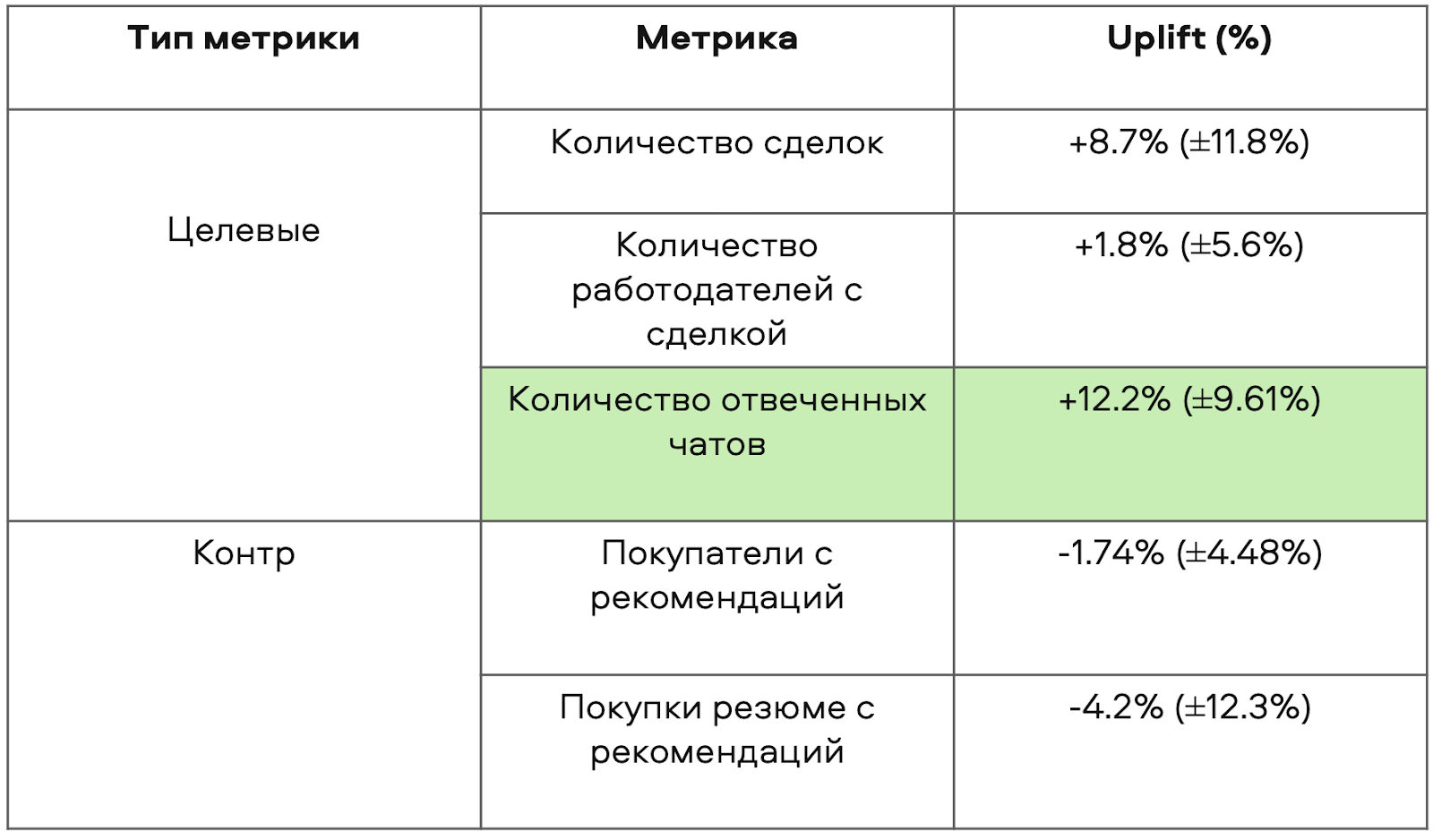

The second test was successful. We increased the conversion to an answered chat, while the counter-metrics fell only slightly, which we considered a good result.

In reality, this means that we began to show more relevant and up-to-date resumes of applicants.

Final results: how we influenced the relevance of resume search results

The number of transactions in the “Resume” category increased by 12–15%.

Employer retention for repeat purchases increased by 12–13%.

Revenue in the “Resume” category increased by 5–6%.