Horror stories about artificial intelligence as science fiction

Judge the opponents of horror stories by the totality of their arguments, not by the average quality of each one

From an intellectual point of view, I have always found the arguments behind AI horror stories quite compelling. Instinctively, however, I have always believed they were wrong. That could be motivated reasoning, because the thought of having to stop talking about what interests me and focus on this narrow technical issue is extremely distasteful to me. But for many years I have had a nagging suspicion that, objectively, the doomsayers are wrong in their predictions about the future.

After much thought, I think I finally understand why I don't believe those who claim that AI could kill us all because its utility function will not align with human values. My reasoning is simple, but I have never seen anyone put it quite this way. For the doomsayers to be wrong, skeptics don't have to be right in all of their arguments. It is enough for them to be right about one, and then the whole structure collapses.

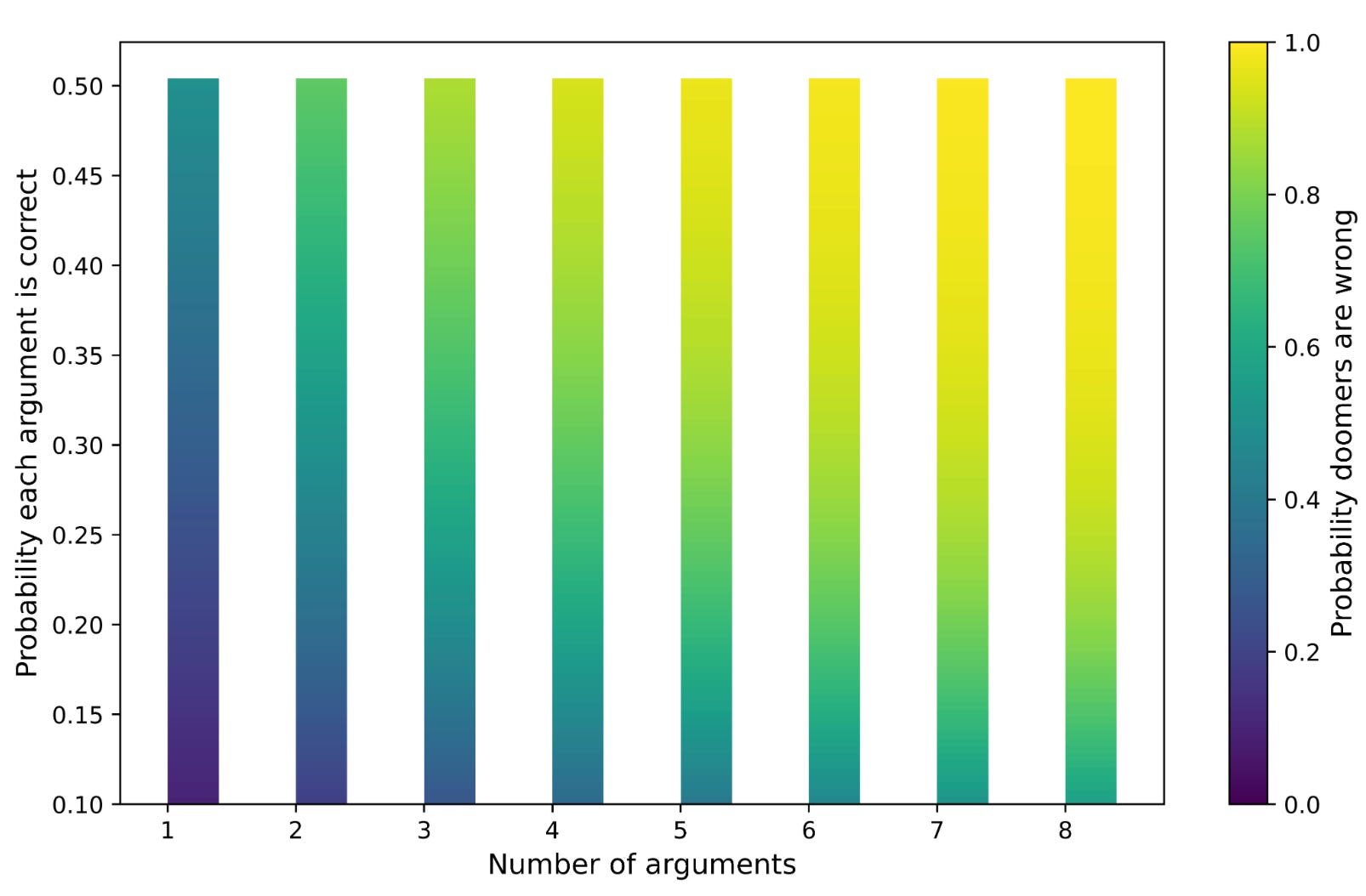

Let's say there are 10 arguments against the horror stories, and each has only a 20% chance of being true. Treating the arguments as independent, the probability that the doomsayers are wrong is 1 - (1 - 0.2)^10 ≈ 0.89. Each individual argument against the horror stories may be weak, but the overall case against them is strong. If you play with the percentages and the number of arguments, the probability that the doomsayers are right changes, as the graph shows.
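The arithmetic here can be checked with a minimal sketch. It assumes the arguments are statistically independent, which is a simplification, and the function name `prob_at_least_one_true` is made up for illustration.

```python
def prob_at_least_one_true(p: float, n: int) -> float:
    """Chance that at least one of n arguments holds.

    Assumes each argument is true with the same probability p and that
    the arguments are independent of one another (a simplification).
    """
    # All n arguments fail with probability (1 - p) ** n; take the complement.
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # Vary the number of arguments and the per-argument probability.
    for p in (0.1, 0.2, 0.3):
        for n in (5, 10, 20):
            print(f"p={p:.1f}, n={n:2d}: {prob_at_least_one_true(p, n):.2f}")
```

With `p=0.2` and `n=10` this reproduces the roughly 0.89 figure from the text. If the arguments overlap or depend on each other, the true number would be lower.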

I do not think we can reliably evaluate the plausibility of the individual arguments about AI control, since smart people disagree sharply on almost all of them. Here are eight views that don't seem crazy to me, each with a probability of being correct that I just pulled out of my head:

1. Intelligence has huge diminishing returns, so a super AI that is smarter than us won't have that much of an advantage. (25%)

2. Controlling AI will prove to be a relatively trivial problem. For example, just ask the LLM to use common sense and it won't turn us into a ham sandwich when we ask it to “make me a ham sandwich.” (30%)

3. For AI to seek power, it will need to be programmed accordingly. Pinker: “There is no law of complex systems that states that intelligent agents are obliged to turn into ruthless conquistadors. Indeed, we know of representatives of one highly developed form of intelligence that evolved without this defect. They are called women.” (25%)

4. The AI will simply be friendly. Perhaps there is some law that closely links reason and morality. This would explain why humans are more moral than other animals, and why the smartest people and societies are more ethical than the dumbest. (10%)

5. The super-AI will find it useful to negotiate with us and keep us close, just as humans might form mutually beneficial relationships with ants if they could overcome the communication barrier. This argument was made by Katja Grace. (10%)

6. Super AIs will be able to control and balance each other, which could create dangers for humanity, but also opportunities for its prosperity. (10%)

7. Artificial intelligence research will stall indefinitely, perhaps because it runs out of training data or for some other reason. (35%)

8. We are fundamentally mistaken about the nature of such a thing as “intelligence.” There are specific abilities, and we have been misled by metaphors based on the psychometric concept of a general intelligence factor. See also Hanson on “betterness”. (35%)

One problem is that you can split the arguments however you like. For example, the point that “control is a relatively trivial problem” can be divided into the different ways we might solve the control problem. Moreover, isn't No. 3 a subset of No. 2? And No. 1 and No. 8 are conceptually quite close. But I think there are more ways to split arguments and multiply them than there are ways to combine them. If I actually spelled out all the ways in which the alignment problem could be solved and attached a probability to each one, the case for the horror stories would look worse.

There is no ideal way to conduct such an analysis. But based on the arbitrary probabilities I gave for the eight arguments above, there is an 88% chance that the doomsayers are wrong. A 12% chance of the end of humanity still seems pretty high, but I'm sure there are many other arguments I can't remember right now that would lower that number.
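The 88% figure can be reproduced with a short sketch. It takes the eight probabilities from the list above as given and, as an assumption that the text itself admits is shaky, treats the arguments as independent. The names `argument_probs` and `prob_any_true` are illustrative.

```python
import math

# The author's rough guesses for the eight arguments, in list order.
argument_probs = [0.25, 0.30, 0.25, 0.10, 0.10, 0.10, 0.35, 0.35]


def prob_any_true(probs):
    """Chance that at least one argument holds, assuming independence."""
    # Probability that every argument fails, then take the complement.
    all_fail = math.prod(1.0 - p for p in probs)
    return 1.0 - all_fail


if __name__ == "__main__":
    p_wrong = prob_any_true(argument_probs)
    print(f"Doomsayers wrong: {p_wrong:.0%}")
    print(f"Doomsayers right: {1.0 - p_wrong:.0%}")
```

Because arguments such as No. 1 and No. 8 overlap, independence probably overstates the combined probability. The sketch only checks the multiplication, not the inputs.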

There is also the possibility that AI will end humanity and there is nothing we can do about it. I would put the probability of this at 40%. Moreover, it can be argued that even if a theoretical solution exists, our politics will not allow us to reach it. Again, let's say there is a 40% chance of this. That leaves us with a 12% chance that AI is an existential risk, and a 0.12 × 0.6 × 0.6 ≈ 4% chance that AI is an existential risk and we can do something about it.
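As a quick check of this last step, here is a minimal sketch that chains the three numbers from the paragraph. The function and parameter names are hypothetical, and multiplying the terms assumes the three events are independent.

```python
def actionable_risk(p_risk: float, p_unsolvable: float, p_politics_block: float) -> float:
    """Chance that AI is an existential risk AND we can do something about it.

    Assumes the three events are independent, so their probabilities multiply.
    """
    p_solvable = 1.0 - p_unsolvable          # a solution exists in principle
    p_politics_ok = 1.0 - p_politics_block   # politics lets us reach it
    return p_risk * p_solvable * p_politics_ok


if __name__ == "__main__":
    # 12% risk, 40% "nothing can be done", 40% "politics gets in the way".
    print(f"{actionable_risk(0.12, 0.40, 0.40):.1%}")
```

The result is 0.0432, which the text rounds to 4%.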

I've always had a similar problem with the Fermi Paradox, and also with its supposed solution, according to which UFOs have actually visited Earth. To believe the latter, that we have seen or made contact with aliens, we must believe at least the following:

1. Superintelligent aliens exist.

2. They would like to visit us.

3. Their visit to us from beyond the observable Universe would be in accordance with the laws of physics.

4. It would be cost effective for them to visit us based on their priorities and economic constraints.

5. Even though they are willing and able to get to us, they are so incompetent that they constantly get caught, but not in a way that is too obvious.

Number 5 is the one that has always struck me as the funniest. I can imagine aliens that can travel between stars, but not ones that can do so and yet leave behind evidence only in the form of things like grainy photographs, and nothing more direct that does not rely on first-hand accounts. By an amazing coincidence, our first contacts with alien life provide a level of evidence that might make it into the National Enquirer but falls short of the standards of real journalism. A few years ago the media was gripped by UFO mania, but there has been much less talk about similar reports recently being debunked by the Pentagon.

Yes, I know that the Fermi Paradox does not predict that any particular civilization will make itself known, only that, given the vastness of the Universe, at least one should be willing and able to do so. However, assuming an infinite number of civilizations does not, in my opinion, solve the problem, which is that any aliens that exist must be very far away from us. There are also endless things that could go wrong and prevent them or their messages from reaching us.

I think the 4% chance that AI will be able to destroy us and that we can do something about it is high enough for people to work on the problem, and I'm glad some are doing so. But I disagree with those who believe that existential risk is more likely than not, or even certain, on the strength of a few maxims like “intelligence helps achieve goals” and “intelligent beings strive to control resources.”

We also have to consider that there is a potential price to pay for making people scared. I believe that if AI doesn't destroy us, it will be a huge boon to humanity, because it's hard to think of many technologies that people have willingly used that haven't made the world a better place in the long run. So AI horror stories are not something we can indulge in without worrying about the other side of the coin. If the mob frightens enough powerful politicians and business leaders, chances are they will delay the arrival of a better future indefinitely. Nuclear energy is an important precedent for this. Failing to maximize the capabilities of intelligence may itself pose an existential risk to humanity, since we will simply continue to be stupid until civilization is destroyed by some super-disease or nuclear war. As long as those who fear AI take jobs as programmers and seek narrow technical solutions rather than lobby for government control of the industry, I applaud their efforts.

It is a mistake to judge the case against AI horror stories by the quality of the average argument. The party telling the elaborate story bears the burden of proving the plausibility of its imagined scenario. We can dismiss most science fiction as unable to make good predictions about the future, even if we cannot say exactly how each work gets it wrong or what path future political and technological developments will take. Such skepticism is justified for all stories involving creatures with intelligence different from ours, be they machines or life forms from other planets.