Graph Neural Networks (GNNs) in Self-Learning Artificial Intelligence

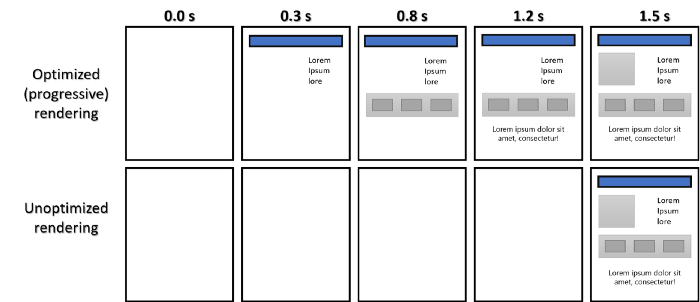

On May 30, 2023, the article “Predicting protein stability changes under multiple amino acid substitutions using equivariant graph neural networks” was published at https://arxiv.org/abs/2305.19801. But I’m interested in GNNs for a different reason. In 2016, the idea of creating a self-learning artificial intelligence came to my mind. The first drafts describing its architecture began to appear in 2018. At that time I bet on GANs and genetic algorithms. The architecture of the “brain” unit then looked like this:

In fact, the cluster is the most basic building block in the hierarchy of programs responsible for perceiving the environment and analyzing what is happening. Clusters are combined into groups. Each group is responsible for a certain function, like neurons in the human brain. Each group of clusters belongs to a section of the “brain” that specializes in solving problems in a particular area. The cluster itself is quite versatile, and copies of it can be used to solve various problems.

However, the main problem here is that, in order to generate knowledge, one would have to use a genetic algorithm and filter out unnecessary or incorrect generated variants, a kind of “ideas”, at every stage. Generation with the help of a permutator is not even worth discussing, since it creates a huge amount of unnecessary data, of which less than one percent is needed to solve the problem.
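To give a sense of the scale involved, here is a rough count. It assumes the permutator simply enumerates every ordering of n building blocks without repetition, which is an illustrative reading and not a description of the original design; the name `count_sequences` is hypothetical.

```python
from math import perm  # available in Python 3.8+


def count_sequences(n):
    """Count ordered, repetition-free sequences of length 1..n drawn from n blocks."""
    return sum(perm(n, k) for k in range(1, n + 1))


for n in (3, 5, 10):
    print(n, count_sequences(n))
# → 3 15
# → 5 325
# → 10 9864100
```

Even ten building blocks already give close to ten million candidate sequences under this assumption, and each of them would have to be generated and checked.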

A way out appeared in 2021, after lectures and accompanying materials were published on the Stanford YouTube channel:

https://www.youtube.com/playlist?list=PLoROMvodv4rPLKxIpqhjhPgdQy7imNkDn

In addition to the lectures, the university’s course page also included links to scientific papers and Python source code. Thus, it became possible to replace the cumbersome cluster with a knowledge graph. At the same time, a GNN can be used as a constantly learning model. Using a simple example graph that contains several references to Python functions, the scisoftdev development team and I obtained only the working versions of function sequences. We created a knowledge graph that consists of function references, then went through all possible paths in this graph, keeping only the paths that contain a working sequence, that is, a program consisting of a specific sequence of functions that runs completely without errors when launched.

Here is the resulting knowledge graph. And here is the sequence of functions – the path that runs without errors:

{0: <function array_to_string at 0x000001C2A9277310>, 1: <function compute_average at 0x000001C2A9277550>, 2: <function string_to_array at 0x000001C2A92774C0>}

Working version of the code: [<function string_to_array at 0x000001C2A92774C0>, <function compute_average at 0x000001C2A9277550>]

result: 5.5

We feed the array as a string as input:

input_array = "[1, 2, 3, 4, 5, 6, 7, 8, 9, 10]"

The task is to get the average value of the array using only three available functions: converting a string to an array, converting an array to a string, and calculating the average of an array.

As you can see from the output of the program, the resulting path consists of only two function references: the conversion of the string into an array and the calculation of the average value. Naturally, there were other graphlets (graphlets are small connected non-isomorphic induced subgraphs of a large network) that included all three function references, but by the conditions of the task the path must be the shortest one that still solves the problem.
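The search described above can be sketched roughly as follows. This is a minimal illustration, not the original scisoftdev code: the bodies of the three functions, the fully connected graph, the helper names (`simple_paths`, `run_path`, `find_solutions`) and the rule that a path "solves the task" when it runs without errors and returns a number are all assumptions made for the example.

```python
import ast


def string_to_array(text):
    """Parse a string such as "[1, 2, 3]" into a list (hypothetical body)."""
    if not isinstance(text, str):
        raise TypeError("string_to_array expects a string")
    value = ast.literal_eval(text)
    if not isinstance(value, list):
        raise ValueError("the string does not describe a list")
    return value


def array_to_string(array):
    """Return the string form of the value (hypothetical, permissive body)."""
    return str(array)


def compute_average(array):
    """Arithmetic mean; raises TypeError when given a string or a single number."""
    return sum(array) / len(array)


FUNCTIONS = [array_to_string, compute_average, string_to_array]

# Assumed knowledge graph: every function node is linked to every other node.
GRAPH = {f: [g for g in FUNCTIONS if g is not f] for f in FUNCTIONS}


def simple_paths(graph):
    """Yield every path that visits no node twice, using depth-first search."""
    def extend(path):
        yield path
        for nxt in graph[path[-1]]:
            if nxt not in path:
                yield from extend(path + [nxt])

    for start in graph:
        yield from extend([start])


def run_path(path, value):
    """Feed the value through the functions in order; return (ok, result)."""
    try:
        for func in path:
            value = func(value)
    except Exception:
        return False, None
    return True, value


def find_solutions(graph, value):
    """Keep the paths that run without errors and end with a number."""
    solutions = []
    for path in simple_paths(graph):
        ok, result = run_path(path, value)
        if ok and isinstance(result, (int, float)) and not isinstance(result, bool):
            solutions.append((path, result))
    return solutions


input_array = "[1, 2, 3, 4, 5, 6, 7, 8, 9, 10]"
solutions = find_solutions(GRAPH, input_array)
best_path, best_result = min(solutions, key=lambda item: len(item[0]))
print([f.__name__ for f in best_path], best_result)
# → ['string_to_array', 'compute_average'] 5.5
```

With these assumed function bodies, two of the fifteen possible paths solve the task: the two-function path shown above and a three-function path that first passes the input through `array_to_string`. Taking the minimum by length selects the shorter one.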

Thus, this small experiment showed that it is possible not only to build a knowledge graph, but also to supplement it with new knowledge. Only two tools are needed for this: graph machine learning (GML) and graph neural networks (GNNs).