Creating a server for online MMO games in PHP, part 14: network card and frame latency according to RFC 2544 (RFC 1242)

In this part of my series about developing a server for real-time games, I will talk about a network device metric that significantly affects the number of requests your game server can handle.

I will also explain how developers are misled: they are told what to consider first when developing servers (and not only for games), while the real bottlenecks go unmentioned.

A video version will be attached at the end of the article.

Bandwidth

When it comes to network cards, routers and other devices that receive and transmit packets, their headline specification is throughput, which on home devices is often 100 megabits per second.

Many people mistakenly believe that, knowing this parameter together with their Internet speed (also expressed in megabits per second, Mbps) and the size of the packets sent to their server, they can calculate how many player commands (packets) per second the server can handle.
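The naive estimate described above fits in a few lines. Below is a minimal Go sketch (Go rather than PHP so it runs standalone; the function names are hypothetical): it divides the link speed in bits per second by 8 and then by the packet size, and a second helper also counts the 20 bytes of Ethernet preamble and inter-frame gap that every frame occupies on the wire. It treats a 64-byte packet as a minimum-size Ethernet frame; a real 64-byte UDP or TCP payload would be larger on the wire because of the IP, transport and Ethernet headers.

```go
package main

import "fmt"

// naivePacketsPerSecond is the back-of-the-envelope estimate:
// bits per second / 8 = bytes per second, then divided by the packet size.
func naivePacketsPerSecond(bitsPerSecond, packetBytes int) int {
	return bitsPerSecond / 8 / packetBytes
}

// ethernetOverheadBytes is the 8-byte preamble/start-of-frame delimiter plus
// the 12-byte inter-frame gap that each Ethernet frame also occupies on the wire.
const ethernetOverheadBytes = 20

// wirePacketsPerSecond repeats the estimate, including that per-frame overhead.
func wirePacketsPerSecond(bitsPerSecond, frameBytes int) int {
	return bitsPerSecond / (8 * (frameBytes + ethernetOverheadBytes))
}

func main() {
	for _, mbps := range []int{10, 100, 1000} {
		bps := mbps * 1_000_000
		fmt.Printf("%5d Mbps: %9d bytes/s, naive %8d pkt/s, on the wire %8d pkt/s\n",
			mbps, bps/8, naivePacketsPerSecond(bps, 64), wirePacketsPerSecond(bps, 64))
	}
}
```

Either way, this only describes what the link can carry; it says nothing about how fast the server can actually read those packets.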

Reduce packet size first?!

For example, many believe that with an actual Internet speed of 10 Mbps and 64-byte packets from clients, they can process about 19,500 such packets per second (1 Mbps = 125,000 bytes per second, so 10 Mbps = 1,250,000 bytes per second, and 1,250,000 / 64 ≈ 19,531), and so they start by reducing the packet size, as follows:

They choose UDP, which guarantees neither delivery nor ordering, because of its smaller header (without considering that games have mandatory pauses between commands, during which a lost packet could be resent thousands of times, and then struggling with numbering and reordering out-of-order incoming packets themselves).

Already at the design stage, they encode commands as bytes and bit flags that nobody except the author can read (instead of human-readable text commands, for example in JSON).

They use compression (which takes time before each packet is sent and slows the whole process down).
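To make the readability trade-off concrete, here is a small Go sketch that encodes the same command once as JSON and once as a packed binary record. The `MoveCommand` type, its fields and the opcode value are hypothetical, chosen only for illustration. The JSON form is 28 bytes and the binary form 5 bytes; the binary one is smaller, but only someone who knows the layout can tell what `01 0a 00 14 00` means.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"encoding/json"
	"fmt"
)

// MoveCommand is a hypothetical player command used only for illustration.
type MoveCommand struct {
	Cmd string `json:"cmd"`
	X   int    `json:"x"`
	Y   int    `json:"y"`
}

// packedMove is the same command squeezed into a fixed binary layout:
// one opcode byte plus two 16-bit coordinates, 5 bytes in total.
type packedMove struct {
	Op   uint8
	X, Y int16
}

// opMove is a hypothetical opcode; nothing in the bytes says it means "move".
const opMove uint8 = 1

// encodeJSON produces a human-readable text command.
func encodeJSON(c MoveCommand) ([]byte, error) {
	return json.Marshal(c)
}

// encodeBinary packs the command into the fixed 5-byte layout (little-endian).
func encodeBinary(c MoveCommand) ([]byte, error) {
	var buf bytes.Buffer
	p := packedMove{Op: opMove, X: int16(c.X), Y: int16(c.Y)}
	if err := binary.Write(&buf, binary.LittleEndian, p); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

func main() {
	cmd := MoveCommand{Cmd: "move", X: 10, Y: 20}
	text, err := encodeJSON(cmd)
	if err != nil {
		panic(err)
	}
	packed, err := encodeBinary(cmd)
	if err != nil {
		panic(err)
	}
	fmt.Printf("json   (%d bytes): %s\n", len(text), text)
	fmt.Printf("binary (%d bytes): % x\n", len(packed), packed)
}
```

Saving 23 bytes per command matters little if, as argued below, the real limit is how many packets per second a single thread can read.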

Why is the CPU important when networking?

Reducing packet size is a necessary part of development, but not at the design stage. Yet a server developer very often starts with it, and every extra step takes time, complicates the project, increases the chance of errors and brings burnout closer by delaying the moment you can show your project to other people (if the brain receives no positive feedback for a long time, it starts to believe it is doing something pointless).

The calculation above of the number of processed packets is wrong: a single process (thread) cannot read packets from the network stack as fast as the network device receives them.

This is the most basic thing a developer should plan for: the ability of the server to run as several parallel processes (threads). As I said in another article, how many of them can truly run at once depends on the number of CPU cores and their hardware threads.

If a server running in a single thread cannot read even 10% of the received packets (sometimes not even 1%), then shrinking the packets (which are usually small in games anyway) will at best bring a gain of the same small order. That does not look like a priority; it is rather something to tackle last, as an optimization that reduces the number of CPU cores needed.

Network Device Latency

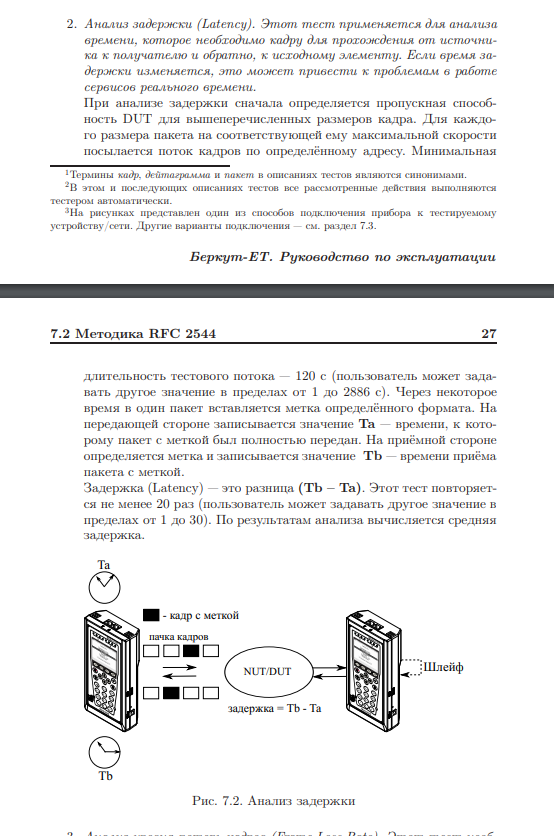

To show how fast a network device can forward a packet, manufacturers publish the results of tests performed according to RFC 2544, in the Latency (frame latency) section. You may also see references to RFC 1242, which defines the benchmarking terminology, including latency, that the RFC 2544 measurement methods rely on.

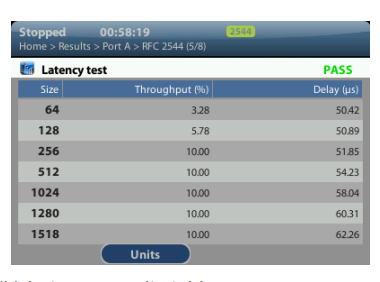

The following is an excerpt from the instruction of the network equipment test analyzer, which means the Latency indicator (delay)

However, a network device can be accessed from several threads in parallel, and since under the hood it also works in parallel (on its own built-in cores and threads) and, I believe, asynchronously, this rate may drop as the load on it increases.

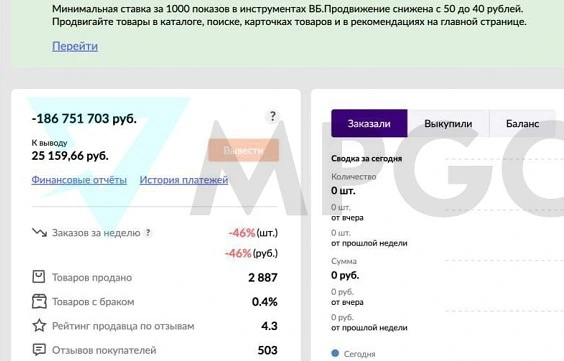

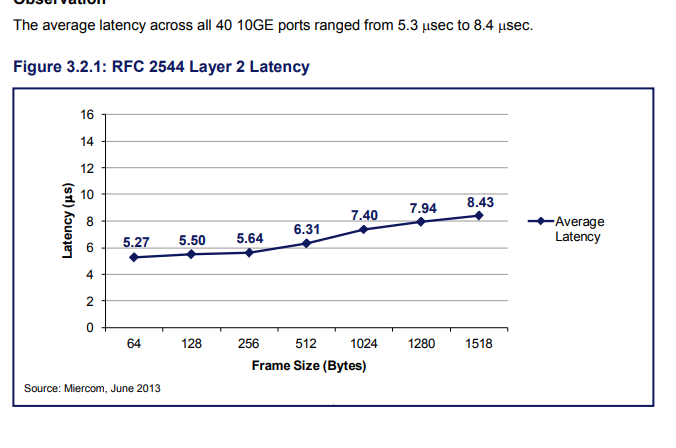

I will give examples of such tests, where us are microseconds:

And here is an example of a test where the number of frames (Frames) per second has already been counted:

According to public test results, the latency for 64-byte packets is on average 7 to 50 microseconds. If packets are read one after another, a single thread can therefore read from about 143,000 (1 s / 7 µs) down to 20,000 (1 s / 50 µs) of the packets the device has already received per second.

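This allows us to conclude that the delay is quite serious: a 100 Mbps link can deliver up to about 148,800 minimum-size (64-byte) Ethernet frames per second, and a 1 Gbps link up to about 1,488,000, which is more than a single thread can read even at the best latency above.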

Conclusions

One of the very first and most important decisions when developing a server is to make it work in multi-threaded mode, i.e. so that your service consists of several threads (they can share memory with each other as in C#, use channels as I do in Go or PHP, or even be separate processes that exchange data, for example, over a TCP connection between them or through a socket file, etc.).

The same approach can be seen in web servers (Apache, nginx) and in the PHP process manager FPM, where you have to specify the number of these parallel threads (processes) manually; there they are called workers. Keep in mind that, besides the packet processing done by your server, some resources must be left free for the OS itself (and for whatever else runs on the machine, such as a database).

Besides the server running in multi-threaded mode, the physical machine (hardware) must have enough CPU hardware threads so that your threads (processes) land on different ones and really run in parallel (the OS has a built-in scheduler that, I believe, can be relied upon at least in the early stages of development).
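In Go, a simple way to size the worker pool along these lines might look like the sketch below. The `workerCount` helper and the reserve of 2 logical CPUs are illustrative assumptions, not a recommendation; `runtime.NumCPU` reports the logical CPUs usable by the process, and `runtime.GOMAXPROCS(0)` only queries how many goroutines Go will run simultaneously.

```go
package main

import (
	"fmt"
	"runtime"
)

// workerCount leaves `reserved` logical CPUs for the OS and other services
// (for example, a database on the same machine) and never returns less than 1.
func workerCount(logicalCPUs, reserved int) int {
	n := logicalCPUs - reserved
	if n < 1 {
		return 1
	}
	return n
}

func main() {
	cpus := runtime.NumCPU()        // logical CPUs (hardware threads) usable by this process
	procs := runtime.GOMAXPROCS(0)  // 0 only queries the current value without changing it
	workers := workerCount(cpus, 2) // the reserve of 2 is an arbitrary example value
	fmt.Printf("logical CPUs: %d, GOMAXPROCS: %d, workers: %d\n", cpus, procs, workers)
	if workers > procs {
		fmt.Println("warning: more workers than Go runs in parallel; some will take turns")
	}
}
```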

Finally

I hope the article was useful to you. I did not cover every aspect of working with network equipment and network accelerators, but this information will help people who decide to create their own online game with a large number of players online and need their own server (including an authoritative one that calculates game mechanics and physics itself).

Subscribe to my profile if you are interested in this topic: I am developing my own authoritative server and demo online games. All articles are written by me personally, based on my own experience and research. I also run a video blog on YouTube.

In the next article, I will show how to make your TCP server twice as fast by disabling just one default setting.

Video version of this article: