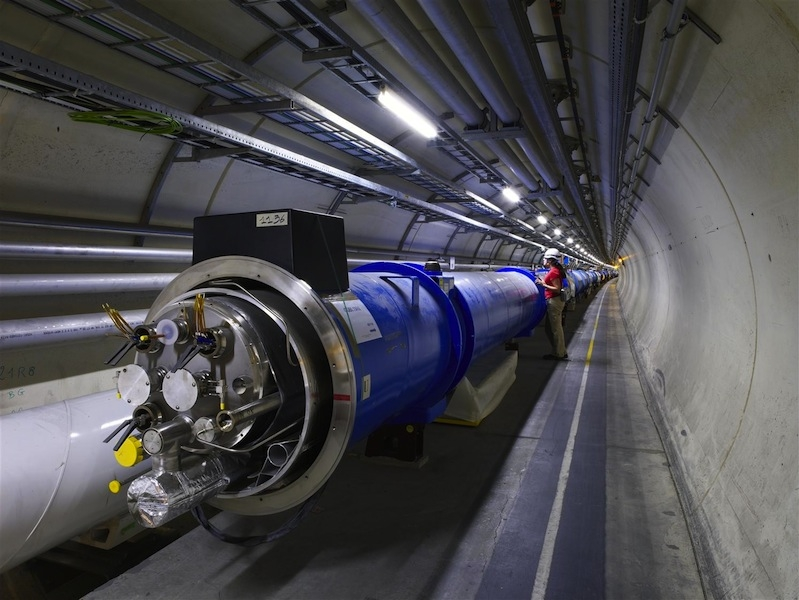

CERN has increased its storage capacity to its first ever exabyte. How Large Hadron Collider data is stored

When the Large Hadron Collider is running, as it was during its second run from early 2015 to 2018, it processes events at 40 MHz, that is, 40 million events per second. This rate is necessary because bunches of particles cross, and can collide, every 25 nanoseconds.

Each event contains approximately 1 megabyte of data. This means that, while the collider is running, approximately 40 terabytes of data enter the system. Per second! That is a staggering amount of information: a petabyte can be collected in about half a minute, and about 72,000 average hard drives would fill up every hour.
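The arithmetic behind these figures can be checked with a short sketch. The 2 TB drive size is an assumption inferred from the "72,000 drives per hour" figure, not a number stated in the text, and decimal units (1 TB = 10^12 bytes) are assumed throughout.

```python
# Back-of-the-envelope check of the raw LHC data rate (decimal units).
EVENT_RATE_HZ = 40_000_000       # 40 MHz, from the text
EVENT_SIZE_BYTES = 1_000_000     # ~1 MB per event, from the text
DRIVE_SIZE_BYTES = 2 * 10**12    # assumption: an "average" drive holds 2 TB

TB = 10**12
PB = 10**15


def raw_rate_bytes_per_s(event_rate_hz=EVENT_RATE_HZ, event_size=EVENT_SIZE_BYTES):
    """Bytes entering the system each second before any filtering."""
    return event_rate_hz * event_size


def seconds_to_collect(volume_bytes, rate_bytes_per_s):
    """Seconds needed to accumulate a given volume at a constant rate."""
    return volume_bytes / rate_bytes_per_s


def drives_filled_per_hour(rate_bytes_per_s, drive_size=DRIVE_SIZE_BYTES):
    """How many drives of the assumed size fill up in one hour."""
    return rate_bytes_per_s * 3600 / drive_size


rate = raw_rate_bytes_per_s()
print(rate / TB)                     # 40.0 (terabytes per second)
print(seconds_to_collect(PB, rate))  # 25.0 (seconds per petabyte)
print(drives_filled_per_hour(rate))  # 72000.0
```

With these inputs a petabyte takes 25 seconds, which matches the "about half a minute" estimate.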

It is not possible to process all this at the same speed; it takes years to analyze such volumes of data after the collider has stopped running. A significant part is filtered out at the collection stage, which itself consumes huge computing resources. Still, the rest of the information needs to be stored somewhere. To do this, the European Organization for Nuclear Research (CERN) maintains one of the largest scientific data centers in the world.

How CERN data is stored

Even CERN cannot record all of this information. Therefore, events are filtered at the same speed at which they arrive: the system must cut 40 million events per second down to roughly 1,000 events per second, which can then be recorded on media. This filtering, and the processing that follows, is organized in levels.
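To get a feel for how aggressive this filtering is, here is a rough sketch of the reduction. It assumes that recorded events keep the same ~1 MB size quoted earlier and that the collider runs for a full 24 hours; both are simplifications for illustration.

```python
# Rough estimate of how much the event filter throws away.
INPUT_RATE_HZ = 40_000_000     # events per second entering the filter (from the text)
RECORDED_RATE_HZ = 1_000       # events per second written to media (from the text)
EVENT_SIZE_BYTES = 1_000_000   # assumption: recorded events are still ~1 MB each
SECONDS_PER_DAY = 86_400


def rejection_factor(input_hz, recorded_hz):
    """How many incoming events there are for each recorded one."""
    return input_hz / recorded_hz


def kept_percent(input_hz, recorded_hz):
    """Share of events that survive filtering, in percent."""
    return recorded_hz / input_hz * 100


def recorded_bytes_per_day(recorded_hz, event_size):
    """Volume written per day if the collider ran around the clock."""
    return recorded_hz * event_size * SECONDS_PER_DAY


print(rejection_factor(INPUT_RATE_HZ, RECORDED_RATE_HZ))       # 40000.0
print(f"{kept_percent(INPUT_RATE_HZ, RECORDED_RATE_HZ):.4f}")  # 0.0025
print(recorded_bytes_per_day(RECORDED_RATE_HZ, EVENT_SIZE_BYTES) / 10**12)  # 86.4 (TB)
```

Under these assumptions only one event in 40,000 is kept, and the recorded stream is about 1 GB per second.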

The LHC computing network is organized into levels (tiers): 0, 1, 2 and 3. Level 0 processes primary information and is located at CERN itself. It consists of thousands of commercially purchased servers, both older PC-style boxes and blade systems that look like black pizza boxes, stacked in rows on shelves. New servers are constantly being purchased and added. Data transmitted to level 0 by the LHC data acquisition systems is archived on magnetic tape.

Level 0 is the CERN data center. All LHC data flows through this central node, but overall it provides less than 20% of the total computing power. CERN is responsible for securely storing the raw data (trillions of digital readings from all detectors) and performing the first step of converting this data into meaningful information. Useless data is discarded and not recorded anywhere. The remaining raw and reconstructed data goes to Level 1, which is the main level and the most active one whenever the LHC is not running.

No people are involved at this stage yet; the distribution of information is automated. Level 1 consists of 14 data centers in different countries, each with an area of 3,000 to 5,000 square meters and a power draw of about 3 MW. These data centers provide 24/7 network support and are responsible for storing their portion of raw and reconstructed data, as well as performing large-scale processing and categorization of information for subsequent storage.

Tier 1 data centers are responsible for distributing data to Tiers 2 and 3. They also store a portion of the simulated data that Tier 2 produces. Fiber optic links operating at a minimum of 60 gigabits per second connect CERN with each of the 14 largest Tier 1 centers around the world. Some of them are located in Hungary, others in Switzerland, France, the Netherlands, and Britain. This dedicated high-bandwidth network is called the LHC Optical Private Network (LHCOPN). Every few years, a new data center is connected to the network: in 2014 there were 12, and in 2018 there were 13.

The raw data from the experiments exists in two copies: one at CERN at Level 0 and the other at major Level 1 centers around the world.

Second-level centers, small computing stations at universities and research institutes, are also connected to the LHC computing network. There is not always room there for large data centers, but they too have high-speed links so that they can quickly receive the data they need, already structured at level 1. Even so, at speeds of 10-60 Gbit/s, downloading the data needed for an analysis sometimes takes several weeks.
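A quick sketch shows why transfers can take so long even on fast links. The 2 PB dataset size and the `efficiency` parameter are hypothetical values chosen for illustration; the text does not say how large a typical analysis dataset is.

```python
# Estimate how long a bulk transfer takes over a dedicated link.
SECONDS_PER_DAY = 86_400


def transfer_days(volume_bytes, link_gbit_per_s, efficiency=1.0):
    """Days needed to move volume_bytes over a link of the given speed.

    efficiency is the fraction of nominal bandwidth actually achieved
    (1.0 means the link is fully and exclusively used).
    """
    bytes_per_s = link_gbit_per_s * 1e9 / 8 * efficiency
    return volume_bytes / bytes_per_s / SECONDS_PER_DAY


DATASET_BYTES = 2 * 10**15  # hypothetical 2 PB analysis dataset

for speed in (10, 60):  # link speeds quoted in the text, in Gbit/s
    print(f"{speed} Gbit/s: {transfer_days(DATASET_BYTES, speed):.1f} days")
```

For this hypothetical dataset the transfer takes roughly 18.5 days at 10 Gbit/s and about 3 days at 60 Gbit/s, and a shared or partially used link would stretch that further.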

There is also level 3: the individual computers of professors, their students and, more generally, volunteers around the world. They also often contribute processing power and can store specific segments of data to take load off the network. All of this is processed data that came from level 1, so even if some of it is lost, it’s not a big deal. Level 0 inside CERN stores all the data that passed the filters while the collider was operating.

First ever exabyte

Therefore, CERN needs a gigantic repository capable of containing all the experimental results accumulated over several years. Even after a sharp reduction in the volume of new data during the current lull, the data center inside CERN processes an average of one petabyte (one million gigabytes) of data per day. This is analyzed data that is gradually accumulated.

To cope with the load, the institute is constantly adding new storage. At the end of September, the organization announced that its total capacity had exceeded one exabyte, that is, 1,024 petabytes (roughly 1 million terabytes). This is a 39% increase over the same period last year: in 2022, CERN’s storage capacity was 735 petabytes.
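The year-on-year figure depends on which definition of an exabyte is used. The sketch below compares the binary reading (1,024 PB) with the decimal one (1,000 PB); which of the two the announcement intended is an assumption here.

```python
# Year-on-year growth of storage capacity under two definitions of "exabyte".
CAPACITY_2022_PB = 735  # from the text


def percent_increase(old, new):
    """Relative growth from old to new, in percent."""
    return (new - old) / old * 100


print(round(percent_increase(CAPACITY_2022_PB, 1024), 1))  # 39.3 (binary: 1024 PB)
print(round(percent_increase(CAPACITY_2022_PB, 1000), 1))  # 36.1 (decimal: 1000 PB)
```

The quoted 39% matches the 1,024 PB reading; with a decimal exabyte the increase would be closer to 36%.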

In the official statement, the research organization noted that one million terabytes of disk space is needed not only to record LHC physics data. It also hosts other CERN data, including secondary experiments, data from other laboratories, and various collaborative online services used by scientists.

The 1 exabyte capacity is provided by 111,000 devices, predominantly hard drives, with a growing share of flash drives. About 60% of CERN’s data storage is HDD, and only 15% of the disks (about 17,000 devices) are SSD (but they represent much less than 15% of the total system capacity).
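The device counts can be sanity-checked with a few lines. This sketch assumes a decimal exabyte (1,000,000 TB) and treats the capacity as spread evenly across devices, which is a simplification since SSDs are said to hold much less than their share.

```python
# Sanity check of the device figures quoted above.
TOTAL_CAPACITY_TB = 1_000_000   # assumption: 1 EB counted as 10^6 TB
TOTAL_DEVICES = 111_000         # from the text
SSD_SHARE = 0.15                # from the text

ssd_count = round(TOTAL_DEVICES * SSD_SHARE)
avg_capacity_tb = TOTAL_CAPACITY_TB / TOTAL_DEVICES

print(ssd_count)                  # 16650, i.e. "about 17,000"
print(f"{avg_capacity_tb:.1f}")   # 9.0 (TB per device on average)
```

An average of about 9 TB per device is consistent with a fleet made up mostly of large hard drives.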

The disks are managed by EOS, CERN’s open source storage software. It was created by CERN staff in 2010 specifically to meet the extreme computing needs of the LHC. Moreover, this software is available to anyone: it can be installed for free from the laboratory’s website. In 2021, it had about 30 thousand clients and stored about 8 billion files. Now, in 2023, these numbers have probably doubled.

For long-term data storage, CERN mainly uses magnetic tapes, and these are regularly refreshed: data from the old archive is continually migrated to new tapes with more advanced technology and higher recording density.

With more than a hundred thousand devices, breakdowns are inevitable, so data is constantly replicated. CERN says that “component failures are common, so the repository is built to prevent critical losses.” No one wants to rerun an experiment that costs about $1 billion a year.

Andreas Peters, project manager for EOS, CERN’s disk-based data storage system, said the huge increase in storage capacity is in preparation for the upcoming heavy ion experiment at the Large Hadron Collider:

“We achieved this new record for storage infrastructure after expanding capacity to be able to collect all heavy ion data. What is important here is not only increasing data capacity, but also increasing performance. For the first time, the read speed of our combined data storage exceeded the threshold of one terabyte per second (1 TB/s).”

Thanks to this, in theory, the laboratory will now be able to process and store not one but three petabytes of data per day of collider operation. This means that three times more information about the experiments will be collected, which should allow higher measurement accuracy by reducing the amount of data discarded during filtering.

Joachim Mnich, CERN’s Director of Research and Computing, said in a statement announcing the new storage capacity milestone: “With this, we once again draw the world’s attention to how important it is to continue to expand data processing and storage capabilities. We are setting new standards for high-performance storage systems, including for companies and other laboratories. And, of course, this is a very important milestone for future LHC launches.”

Today’s storage capacity represents a whopping 3,100% increase over what CERN had about a decade ago, in 2012. Back then, the LHC’s data storage could hold only a modest 32 petabytes.
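The long-term growth can be expressed as an average annual rate. The sketch below assumes the current capacity is 1,024 PB and that "today" is 2023, as stated elsewhere in the article; the compound-growth model is an illustration, not a claim about how CERN actually planned its purchases.

```python
# Long-term growth of CERN storage, 2012 to 2023.
OLD_PB = 32      # capacity in 2012, from the text
NEW_PB = 1024    # assumption: "one exabyte" counted as 1024 PB
YEARS = 2023 - 2012


def growth_multiple(old, new):
    """How many times larger the new value is than the old one."""
    return new / old


def compound_annual_growth(old, new, years):
    """Constant yearly growth rate that turns old into new over the period."""
    return (new / old) ** (1 / years) - 1


multiple = growth_multiple(OLD_PB, NEW_PB)
print(multiple)                # 32.0
print((multiple - 1) * 100)    # 3100.0 (percent increase)
print(f"{compound_annual_growth(OLD_PB, NEW_PB, YEARS):.1%}")  # 37.0%
```

A 32-fold increase over eleven years works out to roughly 37% per year on average, in the same range as the 39% jump reported for the last year.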

What’s interesting is that so far the capacity of Tier 0 storage has been growing strictly in line with earlier forecasts. Vladimir Bagil, German Sanchio and other CERN scientists predicted back in 2015 the amount of storage needed to continue the project. If their work is to be believed, then by 2029 the storage will have to grow another 4 (!) times, to 4 exabytes of data. This is approximately 0.003% of the data on the entire modern Internet (~120 zettabytes).
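The comparison with the size of the Internet is easy to verify. The sketch uses decimal units and takes the ~120 zettabyte estimate from the text at face value.

```python
# What share of the Internet's data would 4 exabytes represent?
EB = 10**18
ZB = 10**21

PROJECTED_STORAGE = 4 * EB   # forecast for 2029, from the text
INTERNET_DATA = 120 * ZB     # rough estimate quoted in the text


def share_percent(part, whole):
    """Size of part relative to whole, in percent."""
    return part / whole * 100


print(f"{share_percent(PROJECTED_STORAGE, INTERNET_DATA):.4f}%")  # 0.0033%
```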
