Boson – DBMS Development from Scratch (Part II)

1. Introduction

In the first part of the article, we discussed the development of the lowest layer of the Boson DBMS – CachedFileIO. As mentioned, the statistics of such a phenomenon as Locality of Reference suggests that in real applications ~ 95% of data requests are localized in 10-15% of the database. The average read/write ratio is 70%/30%. This makes it efficient to use a cache based on the Least Recently Used (LRU) algorithm. Having implemented it, we got a 260% -600% increase in read speed with 87% -97% cache hits.

If we recall the simplified Boson architecture, then it is divided into four main software layers, each of which provides another level of abstraction.

2. Functional requirements

The next layer of the Boson DBMS after the cache is the RecordFileIO record store. This is the first prototype of the database, which is beginning to bring practical benefits. Let’s formulate the top-level requirements specification:

Storage of records of arbitrary* length (create, read, modify, delete). Arbitrary length will be understood as binary records up to 4Gb in size.

Record navigation as a doubly-linked list (first, last, next, previous), as well as the service ability to directly indicate the position in the file.

Reusing the space of deleted records.

Checking the integrity of data and headers based on checksums.

3. Data structures

To implement the above requirements, we need to organize the following data structure in the cached file, which, along with the storage header, will contain two doubly linked lists: data records and deleted records for reuse when creating new ones.

It took much longer to draw the diagram than it did to describe the storage title and post title in code. They will have the following simple structure:

//----------------------------------------------------------------------------

// Boson storage header signature and version

//----------------------------------------------------------------------------

constexpr uint32_t BOSONDB_SIGNATURE = 0x42445342;

constexpr uint32_t BOSONDB_VERSION = 0x00000001;

//----------------------------------------------------------------------------

// Boson storage header structure (64 bytes)

//----------------------------------------------------------------------------

typedef struct {

uint32_t signature; // BOSONDB signature

uint32_t version; // Format version

uint64_t endOfFile; // Size of file

uint64_t totalRecords; // Total number of records

uint64_t firstRecord; // First record offset

uint64_t lastRecord; // Last record offset

uint64_t totalFreeRecords; // Total number of free records

uint64_t firstFreeRecord; // First free record offset

uint64_t lastFreeRecord; // Last free record offset

} StorageHeader;

//----------------------------------------------------------------------------

// Record header structure (32 bytes)

//----------------------------------------------------------------------------

typedef struct {

uint64_t next; // Next record position in data file

uint64_t previous; // Previous record position in data file

uint32_t recordCapacity; // Record capacity in bytes

uint32_t dataLength; // Data length in bytes

uint32_t dataChecksum; // Checksum for data consistency check

uint32_t headChecksum; // Checksum for header consistency check

} RecordHeader;At first glance, it may seem that some of the fields in the header are redundant. This is true. But it is dictated by the requirements for the possibility of changing records, reusing the place of deleted records, checking the integrity of the checksums and the ability to navigate both in forward and reverse order. Ultimately, we will be storing JSON documents, so it is expected that the size of most entries will be in the range of 512-2048 bytes and a header size of 32 bytes will be acceptable.

4. Implementation of RecordFileIO

The implementation itself will have the following simple logic:

The records file is opened based on the CachedFile object. If the file could not be opened, we throw an exception. If the file turned out to be empty, but writable, then we create storage headers so that we can work further. If the file is not empty or the header is not correct or broken (the checksum did not match), then we throw an exception.

Create an entry. If the list of deleted records is empty, or there is no required capacity among the deleted records, then we add a new record to the end of the file (according to the data from the header). Associate the last record with the new one. Update the storage header with the new end-of-file and the new last record. We write the data. We save the checksum of the data in the header. Save the header checksum at the end of the header. If the list of deleted records has the required capacity (we run through the list), then we associate it with the last record, write the data and update its header with checksums.

Record update. If the length of the data to be updated is less than or equal to the capacity of the record, simply update the data. If the new data is larger than the record capacity, then delete the current record. We create a new record, then we associate the newly created one with the previous and next record of the one that was deleted. We save the checksum of the data in the header of the record. Save the header checksum at the end of the header.

Deleting an entry. We link the previous and next record directly. And the remote record is added to the end of the list of deleted records.

Post navigation. The position of the first and last record is taken from the storage header. Moving forward and backward through the records is based on the information about the previous and next record in the title of the current record. Jumping to an absolute position in the file checks the correctness of the record header checksum.

To a first approximation, the RecordFileIO class definition looks like this:

class RecordFileIO {

public:

RecordFileIO(CachedFileIO& cachedFile, size_t freeDepth = NOT_FOUND);

~RecordFileIO();

uint64_t getTotalRecords();

uint64_t getTotalFreeRecords();

void setFreeRecordLookupDepth(uint64_t maxDepth) { freeLookupDepth = maxDepth; }

// records navigation

bool setPosition(uint64_t offset);

uint64_t getPosition();

bool first();

bool last();

bool next();

bool previous();

// create, read, update, delete (CRUD)

uint64_t createRecord(const void* data, uint32_t length);

uint64_t removeRecord();

uint32_t getDataLength();

uint32_t getRecordCapacity();

uint64_t getNextPosition();

uint64_t getPrevPosition();

uint64_t getRecordData(void* data, uint32_t length);

uint64_t setRecordData(const void* data, uint32_t length);

private:

CachedFileIO& cachedFile;

StorageHeader storageHeader;

RecordHeader recordHeader;

size_t currentPosition;

size_t freeLookupDepth;

void initStorageHeader();

bool saveStorageHeader();

bool loadStorageHeader();

uint64_t getRecordHeader(uint64_t offset, RecordHeader& result);

uint64_t putRecordHeader(uint64_t offset, RecordHeader& header);

uint64_t allocateRecord(uint32_t capacity, RecordHeader& result);

uint64_t createFirstRecord(uint32_t capacity, RecordHeader& result);

uint64_t appendNewRecord(uint32_t capacity, RecordHeader& result);

uint64_t getFromFreeList(uint32_t capacity, RecordHeader& result);

bool putToFreeList(uint64_t offset);

void removeFromFreeList(RecordHeader& freeRecord);

uint32_t checksum(const uint8_t* data, uint64_t length);

};Here you can see the implementation of the class itself RecordFileIO.cpp.

6. Functional testing

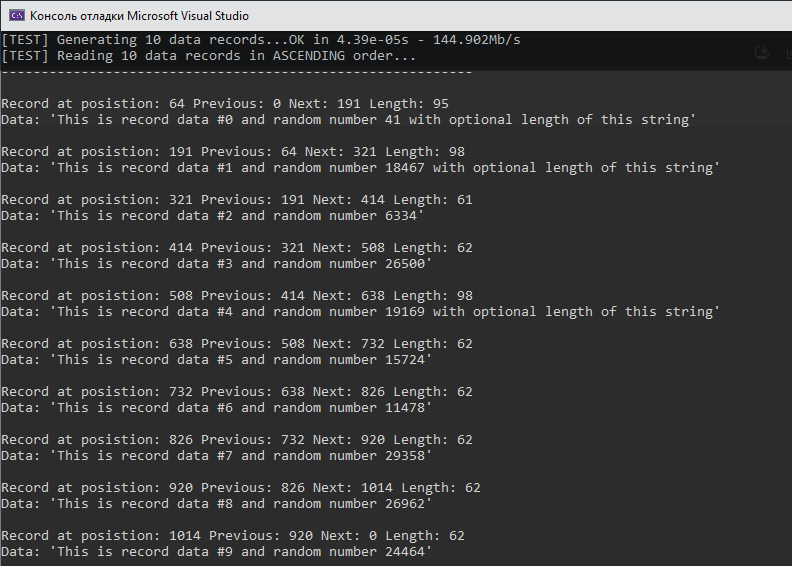

First, let’s check the basic functionality that records are being added. The links are correct and the data is complete. For these purposes, we will write 10 records (in this case, lines of text with variable length) into the database and close it. Let’s open it again, read it with service information and output it to the console.

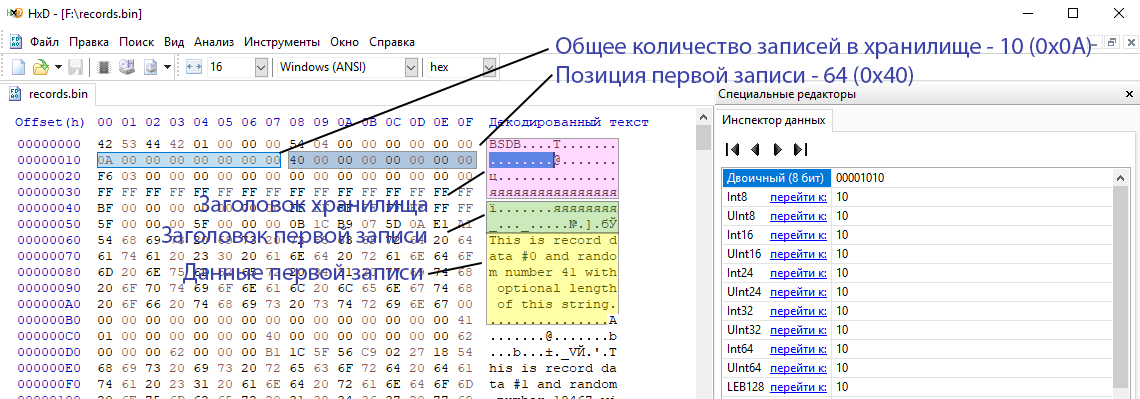

It seems that the records are written and read smoothly, the links are correct and the lengths of the records are correct. Let’s take a look through the HEX editor by checking the storage header and first entry fields. By default, we write numbers in the Big-Endian file format, with low bytes at the beginning, high bytes at the end. It may be different on other systems, but for now we will test under Win x64. I checked the titles of the posts, everything is correct.

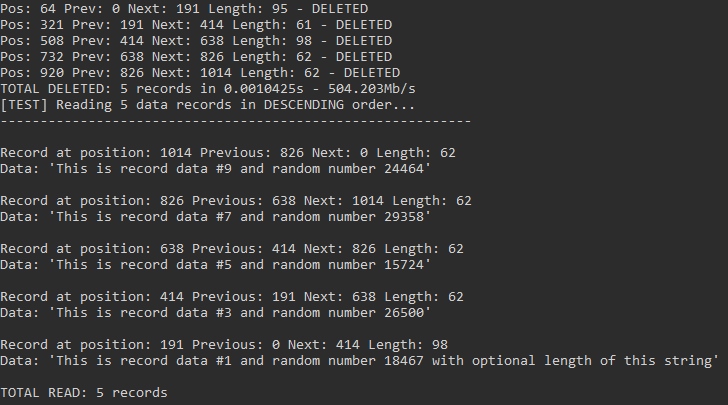

Now let’s check the mechanism for deleting records and check if all links are correct. Let’s remove the even-numbered records and at the same time read them in reverse order from the end of the storage. And let’s see what happens (5 entries should remain, the links are correct, in reverse order):

Deletion works correctly. And now let’s insert 3 records that are obviously less than the length so that the place of the previously deleted records is used. Let’s output the entries to the console in direct order. Let’s see what happened (the order is correct, new records filled the place of deleted records in positions 64, 321, 508):

It worked. Now let’s look at the overall load test for writing and reading a million records. For now, let’s check it out.

In general, not bad, considering that in our test the reading is not sequential and is counted with each write, and when reading, the checksum of the header and the data itself is checked. Of course, there is a lot of room in the code to clean up and optimize performance. But I’ll do it later. Here here is the source code for the knee test.

Thus, we have implemented the second architectural layer of the Boson DBMS – Record File IO (record storage), which solves the following tasks: the ability to create, delete, change records, reuse the place of deleted records, check the integrity of the checksums and the ability to navigate both in direct, and vice versa. The developed class solves the problem – and that’s great!

See you!