Side-channel attack on ChatGPT

Researchers from Ben-Gurion University published

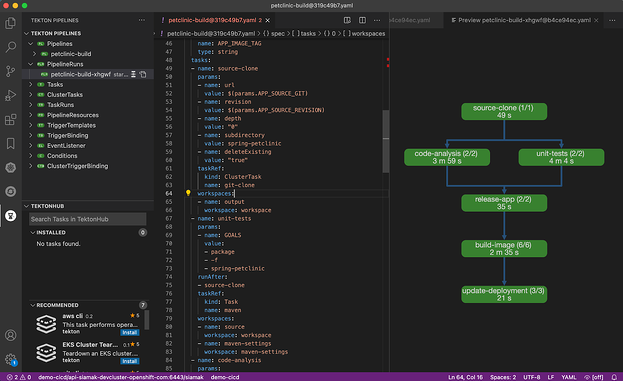

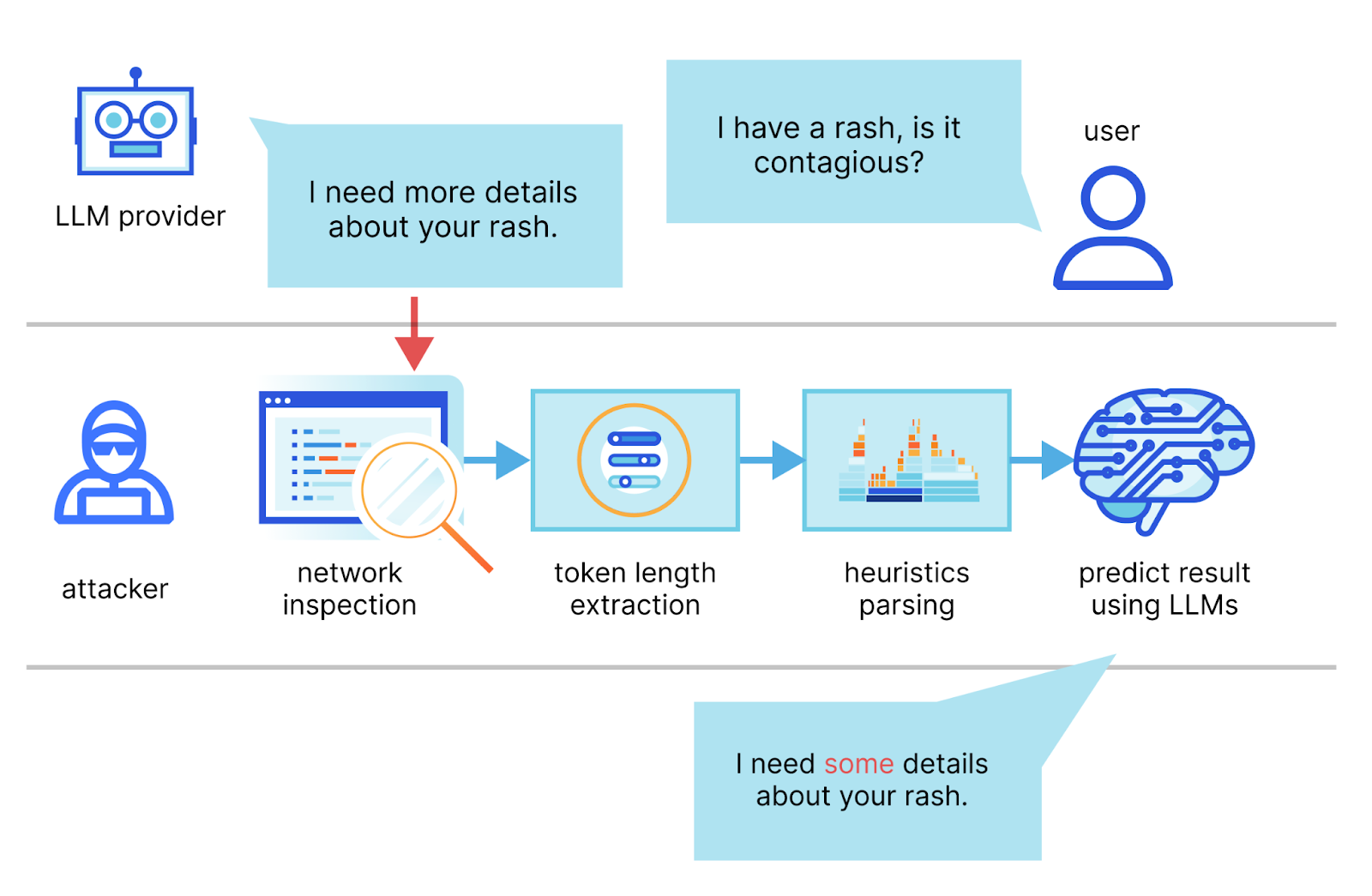

, which showed a new attack method that leads to partial disclosure of data exchange between the user and the AI chatbot. Analysis of encrypted traffic from the ChatGPT-4 and Microsoft Copilot services in some cases allows you to determine the topic of the conversation, and this is not an attack on the encryption algorithm itself. Instead, a side-channel attack is used: analyzing the encrypted packets allows the length of each “message” to be determined. It is noteworthy that another specially prepared LLM is used to decipher the traffic between the large language model (LLM) and the user.

The attack is possible only in streaming mode, when the response from the AI chatbot is transmitted as a series of encrypted tokens, each of which contains one or more words. This method of transmission mirrors the way the language model itself works: each sentence is broken down into individual words or even fragments of words for subsequent analysis. The authors of the work consider most modern AI chatbots vulnerable, with the exception of Google Bard and GitHub Copilot (which is accessed differently from Microsoft Copilot, despite using the same GPT-4 model). In most cases, the request from the user is sent to the server in one piece, but the response is a series of tokens transmitted sequentially and in real time. The data is not altered in any way before encryption, so the length of each encrypted fragment can be calculated, and from those lengths an attacker can get an idea of the topic of the conversation.
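The length leak can be illustrated with a minimal sketch. It assumes one encrypted record per token and a cipher that adds a fixed number of bytes to each record; the `ASSUMED_OVERHEAD` value and both function names are hypothetical and chosen only for illustration, and the record sizes are simulated rather than captured from a real service.

```python
# Hypothetical constant: bytes of framing and authentication overhead per record.
# The real value depends on the service and cipher suite; 29 is only a placeholder.
ASSUMED_OVERHEAD = 29


def simulate_record_sizes(tokens, overhead=ASSUMED_OVERHEAD):
    """Stand-in for a packet capture: one record per token, length-preserving cipher."""
    return [len(tok.encode("utf-8")) + overhead for tok in tokens]


def token_lengths(record_sizes, overhead=ASSUMED_OVERHEAD):
    """Estimate the plaintext length of each token from its encrypted record size."""
    return [size - overhead for size in record_sizes]


# The eavesdropper never sees these strings, only the sizes derived from them.
tokens = ["Some", " symptoms", " of", " flu", " include", " fever"]
observed = simulate_record_sizes(tokens)
leaked = token_lengths(observed)
print(leaked)  # [4, 9, 3, 4, 8, 6]
```

The content stays encrypted throughout; the only thing recovered is the sequence of lengths, which is exactly the input the attack works from.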

The attack is briefly shown in this video from the authors of the study:

The attack assumes that the attacker can intercept encrypted communication between the user and the AI chatbot. The researchers studied in some detail the decrypted data packets from two similar services, which look something like this:

This allowed them to find a way to calculate the size of the encrypted word (or syllable) in each token. And this is the only information that was available to the researchers. When the messages are plain text and the tokens are transmitted sequentially, that is not so little. To simplify the task, the authors of the work limited themselves to analyzing dialogues in English only.

The researchers developed a special language model that makes assumptions about the context of a conversation based on this data. It can also predict how a conversation will evolve based on the data it has already extracted, which makes it easier to decipher (or, to be precise, guess) subsequent tokens. The model was trained on a large volume of decrypted data, where the original text could be compared with the encrypted tokens. Accordingly, the specialized LLM has to be trained on interactions with the specific service that will later be attacked.

Each AI chatbot behaves differently, and so do the ways it transmits traffic. For example, OpenAI ChatGPT-4 quite often combines multiple words into one large token, while Microsoft Copilot does this very rarely. Such merging makes analysis harder, but it does not always occur.
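To see why a sequence of lengths is informative and yet not sufficient on its own, here is a toy sketch. It is not the researchers' method: a plain lookup against a tiny hand-written candidate list stands in for their trained LLM, and the tokenization (words with a leading space) and all names are assumptions made for illustration.

```python
def length_signature(tokens):
    """Return the sequence of token lengths, the only thing an eavesdropper sees."""
    return tuple(len(tok) for tok in tokens)


def matching_candidates(observed_lengths, candidates):
    """Keep only the candidate responses whose token lengths match the observation."""
    target = tuple(observed_lengths)
    return [cand for cand in candidates if length_signature(cand) == target]


# Hypothetical candidate responses, already split into tokens.
candidates = [
    ["I", " have", " a", " headache"],
    ["I", " need", " a", " vacation"],
    ["You", " should", " see", " a", " doctor"],
    ["I", " want", " a", " refund"],
]

observed = [1, 5, 2, 9]  # lengths leaked by the encrypted stream
matches = matching_candidates(observed, candidates)
for cand in matches:
    print("".join(cand))
```

Two of the four candidates survive the filter ("I have a headache" and "I need a vacation"), which shows both sides of the problem: lengths rule out most text, but distinct sentences can share a signature. Resolving that ambiguity is where a model that takes conversational context into account becomes useful.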

As a result, in the best-case scenario, the researchers were able to correctly reconstruct 29% of the AI chatbot's responses and determine the topic of conversation in 55% of the dialogues. This is an impressive result considering how little data is available for analysis. Cloudflare, which quickly closed this problem in its own AI services, provides a little more detail about the vulnerability. It notes that the attack is good but unreliable: in one case an entire sentence can be reconstructed, in another only the prepositions are “correctly found”.

Cloudflare has implemented one of the recommendations of the Israeli researchers: a random-sized block of garbage data is now added to each token. This approach inevitably increases the amount of traffic between the chatbot and users, but it prevents an observer from determining the exact length of each token. The researchers also propose other ways to combat the problem: tokens can be grouped and sent in “large chunks”, or even accumulated on the server side so that the user receives a complete phrase.
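Both mitigations can be sketched in a few lines. This is a simplified illustration and not Cloudflare's actual implementation: the one-byte trailer that records the padding length, the `max_pad` and `batch_size` parameters, and the function names are all assumptions.

```python
import secrets


def pad_token(token: str, max_pad: int = 32) -> bytes:
    """Append a random amount of filler plus a one-byte trailer storing its length.

    max_pad must not exceed 255 because the length is stored in a single byte.
    """
    data = token.encode("utf-8")
    pad_len = secrets.randbelow(max_pad + 1)  # uniform in 0..max_pad
    return data + bytes(pad_len) + bytes([pad_len])


def unpad_token(payload: bytes) -> str:
    """Strip the trailer and filler on the receiving side."""
    pad_len = payload[-1]
    return payload[: len(payload) - 1 - pad_len].decode("utf-8")


def batch_tokens(tokens, batch_size=4):
    """Alternative mitigation: send several tokens per message instead of one."""
    return ["".join(tokens[i:i + batch_size]) for i in range(0, len(tokens), batch_size)]


tokens = ["Some", " symptoms", " of", " flu", " include", " fever"]
padded = [pad_token(tok) for tok in tokens]
print([len(p) for p in padded])       # varies from run to run
print(batch_tokens(tokens, batch_size=3))
```

Padding is applied before encryption, so the sizes an observer sees no longer map one-to-one to token lengths. Batching hides per-token boundaries at the cost of a less fluid, less "live" response.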

Despite the “innovativeness” of the topic, the problem discovered by the Israeli researchers is not novel. Rather, it is a typical case of a suboptimal implementation of data encryption. The work demonstrates how a new technology can suffer from problems that were studied and solved elsewhere long ago.

What else happened:

Kaspersky Lab researchers present their own analysis of the most common vulnerabilities in web applications, complementing the well-known OWASP Top Ten ranking. The Kaspersky Lab experts prioritize the list of vulnerabilities differently. For example, server-side request forgery (SSRF) vulnerabilities are in 10th place in the OWASP ranking but in third place in this publication. In both cases, the most typical web application vulnerability turned out to be problems with access control.

On March 12, another set of patches for Microsoft products was released. Among the closed vulnerabilities are two serious ones in the Hyper-V hypervisor, exploitation of which can lead to the execution of arbitrary code on the host.

A study by American researchers proposes a new approach to reconstructing text from the sounds of keystrokes. The attack has fairly low efficiency (43% of characters recognized correctly), but what sets it apart from previous work is its realism. Unlike earlier studies, this one did not analyze reference recordings of typing made under ideal conditions. Instead, the work used recordings with a lot of extraneous noise, made with a low-quality microphone. Typing was done on arbitrary keyboards, and subjects were not required to follow any typing style or maintain a certain speed.

Akamai researchers describe how they managed to achieve arbitrary code execution using two recent vulnerabilities in Microsoft Outlook.

A critical vulnerability was discovered in two WordPress plugins designed to protect websites from hacking. The problem in the Malware Scanner and Web Application Firewall plugins will not be fixed: development of both extensions was stopped immediately after the vulnerability was discovered.

A technical description has been published of a serious vulnerability in the Mali GPU driver used on a number of Android devices, including the Google Pixel 7 and 8 Pro.

An interesting publication discusses in detail the process of analyzing radio signals from a car key fob.

New research shows how attackers spread malware by sending out fake emails that ask the recipient to sign a document in the DocuSign system.

In 2023, Google paid more than $10 million under the bug bounty program. 632 researchers from 68 countries received payments for information about discovered vulnerabilities.