Apache NiFi. Quick access to logs

Developers of data processing flows in Apache NiFi know that all processor events of interest are shown in the interface, in the “Bulletin Board” section. However, if you are not watching the interface at that moment, you will miss the message.

By default, Apache NiFi stores its logs in the “./logs” folder, and processors write their events to the “nifi-app.log” file. If you have access to the log directory, there is no difficulty: open the file, read it, fix the flow. But what if you don’t have access? Or what if you want to get information about an event quickly, to understand the context in which it occurred? Situations differ. Let’s consider a simple way to get information out of the log.

Other options for obtaining data from logs

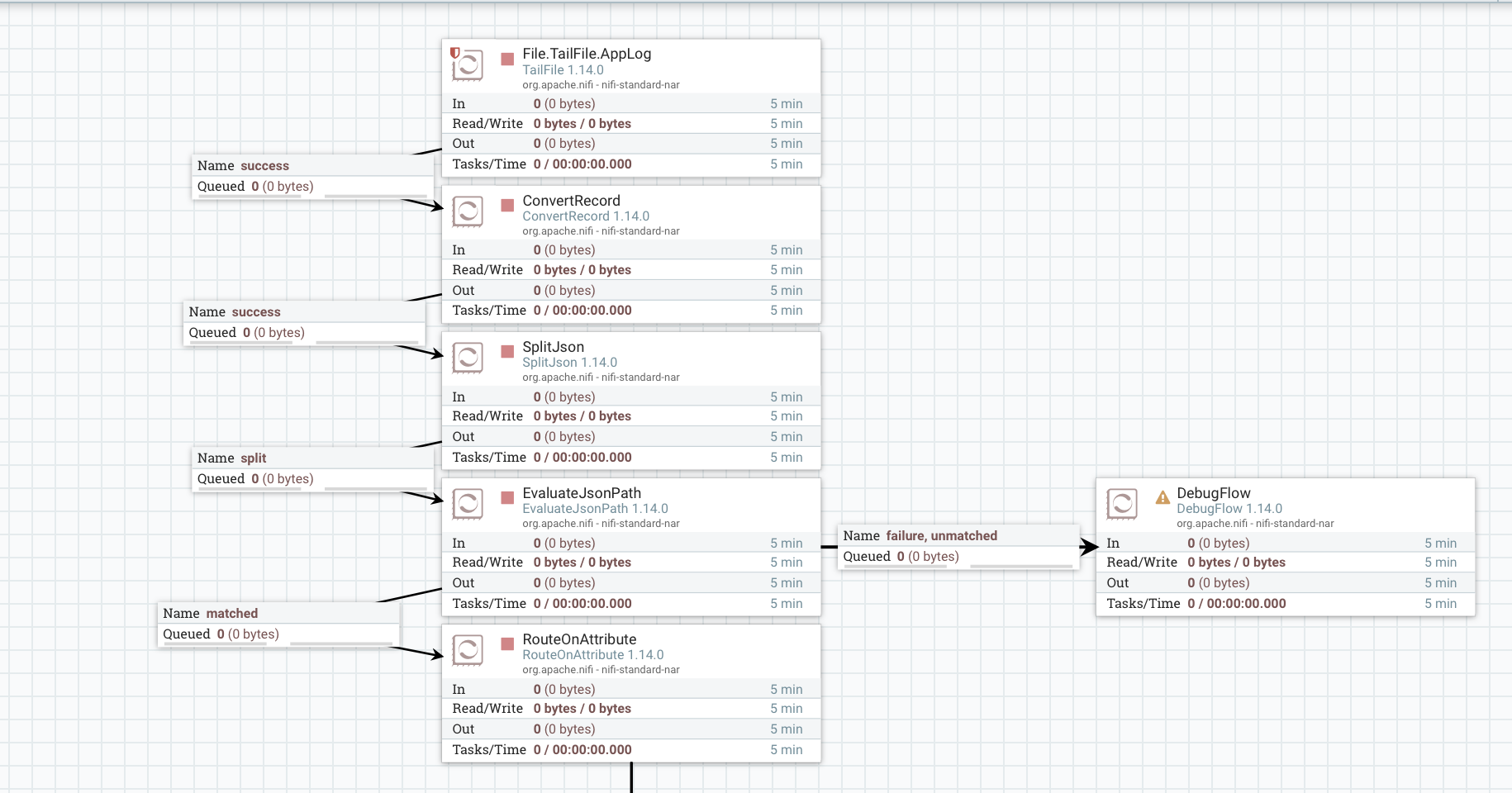

To read the log file, we will use the TailFile processor, then we will convert the received data, split it into separate records, extract the data into attributes and send it to our Slack channel:

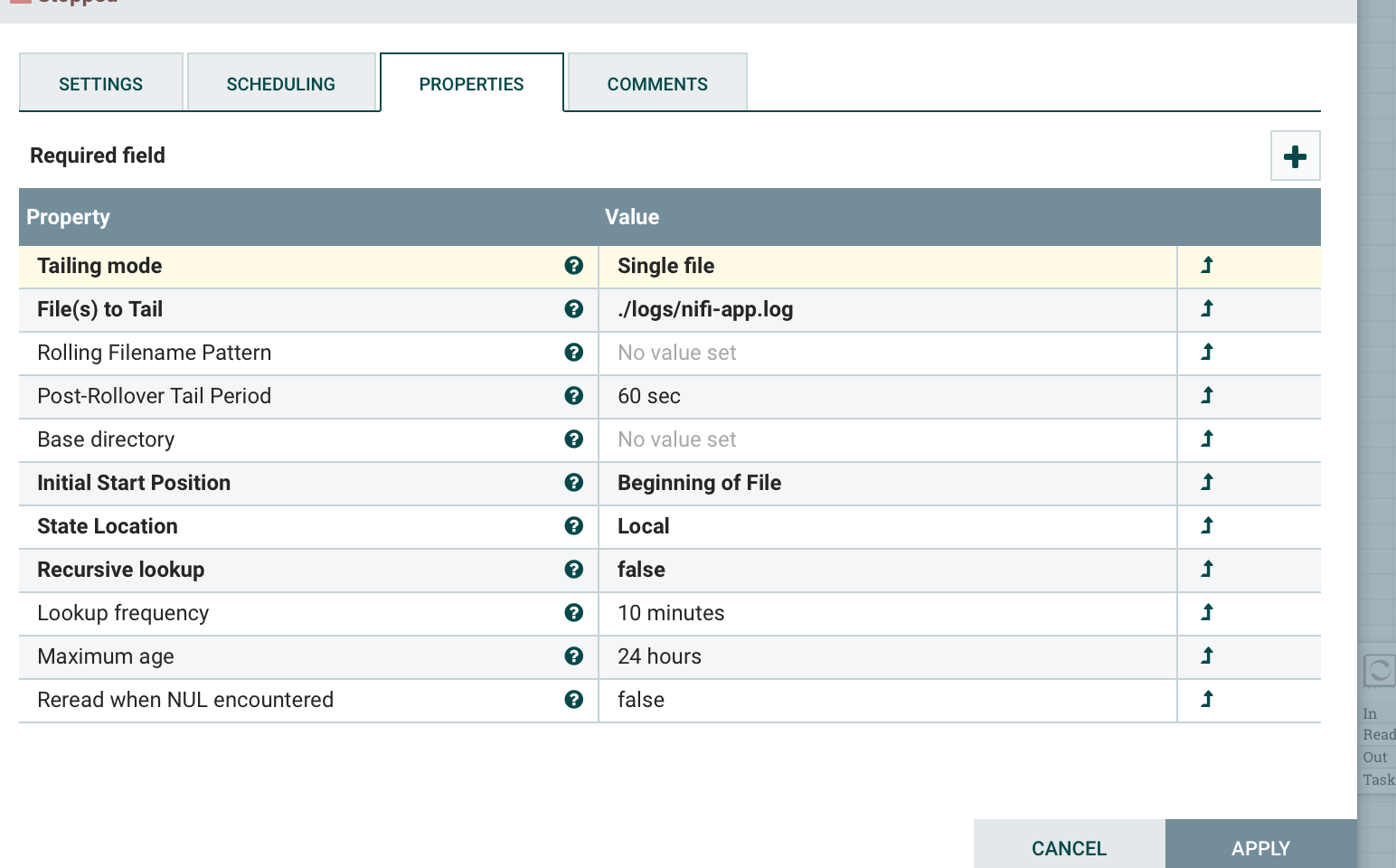

TailFile settings – read one file, specify its location, starting position and frequency:

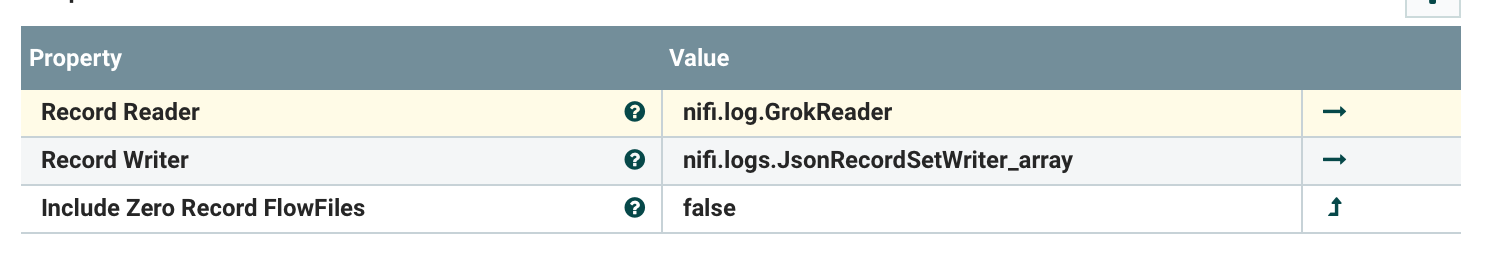
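To make the idea of a starting position and a polling frequency concrete, here is a minimal Python sketch of tailing a file. It is not how TailFile is implemented; the function name `read_new_lines` and the simple "file shrank, so start over" rotation check are assumptions made for illustration.

```python
import os


def read_new_lines(path, offset=0):
    """Return (lines, new_offset) for data appended to `path` since `offset`."""
    size = os.path.getsize(path)
    if size < offset:
        # The file is shorter than our stored position, which usually means
        # it was rotated or truncated: start again from the beginning.
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        chunk = f.read()
    # Consume complete lines only; a partial last line waits for the next poll.
    end = chunk.rfind(b"\n") + 1
    lines = chunk[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```

Calling this function in a loop with a `time.sleep(...)` between calls, and passing the returned offset back in, gives the "read from where we stopped, every N seconds" behaviour that the settings describe.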

Next, we convert the received data using ConvertRecord:

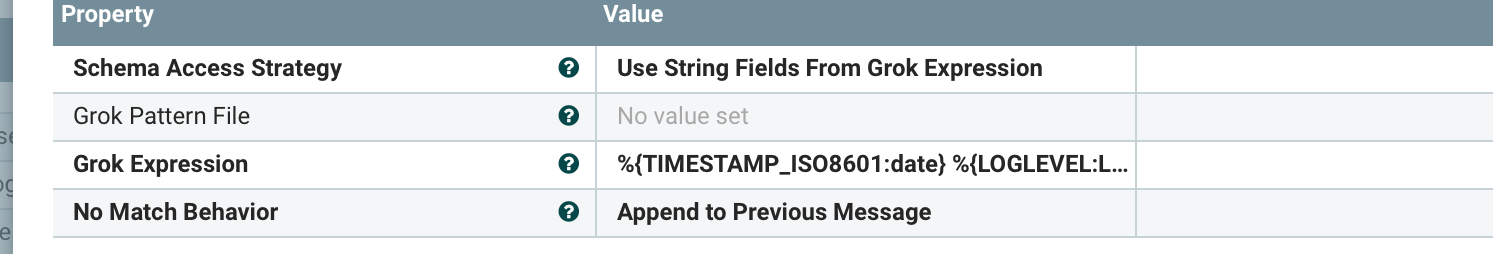

The data is read with GrokReader, which turns text into structured records. There are many posts about Grok, for example the one by @chemtech.

In the reader settings, specify the Grok expression and set the option that appends a line to the previous message when it does not match the expression.
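What these two settings do can be approximated in plain Python. The sketch below is an assumption-laden illustration, not GrokReader's actual logic: the regular expression is a hand-written stand-in for a Grok expression over lines of the form "timestamp level [thread] logger message", and non-matching lines are simply glued onto the `message` of the previous record.

```python
import re

# Hand-written approximation of the nifi-app.log line layout:
# timestamp, level, [thread], logger, message.
LINE_RE = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<Level>[A-Z]+) "
    r"\[(?P<thread>.*?)\] "
    r"(?P<logger>\S+) "
    r"(?P<message>.*)$"
)


def parse_log(lines):
    """Turn raw log lines into a list of dict records."""
    records = []
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            records.append(match.groupdict())
        elif records:
            # Continuation line (for example part of a stack trace):
            # append it to the previous record instead of dropping it.
            records[-1]["message"] += "\n" + line
        # Non-matching lines before the first record are ignored.
    return records
```

Without the "append to previous" branch, a multi-line stack trace would either be lost or end up as a series of meaningless separate records.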

The log structure corresponds to the expression:

%{TIMESTAMP_ISO8601:date} %{LOGLEVEL:Level} \[%{DATA:thread}] %{DATA:logger} %{GREEDYDATA:message}As a result, we have a Json of the form:

{

"date" : "2022-06-01 23:34:02,905",

"Level" : "INFO",

"thread" : "pool-10-thread-1",

"logger" : "o.a.n.c.r.WriteAheadFlowFileRepository",

"message" : "Initiating checkpoint of FlowFile Repository",

"stackTrace" : null,

"_raw" : "ds (Stop-the-world time = 2 milliseconds, ..."

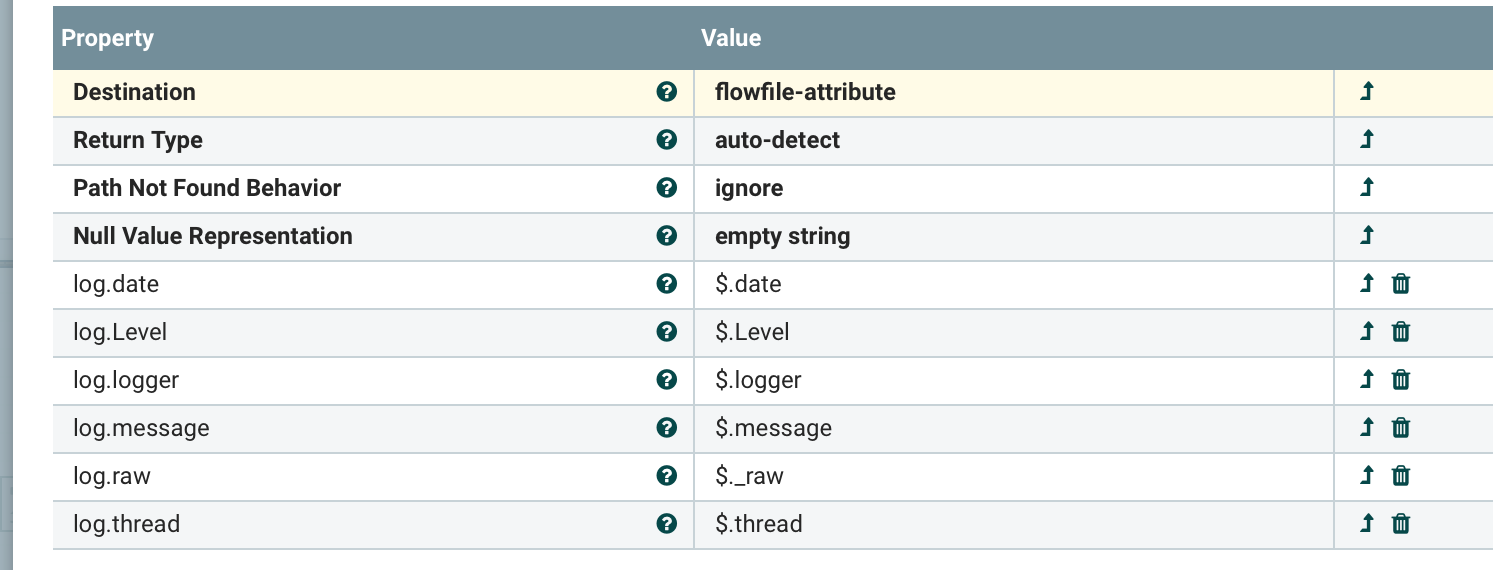

}We extract data from the content. to attributes using EvaluateJsonPath:

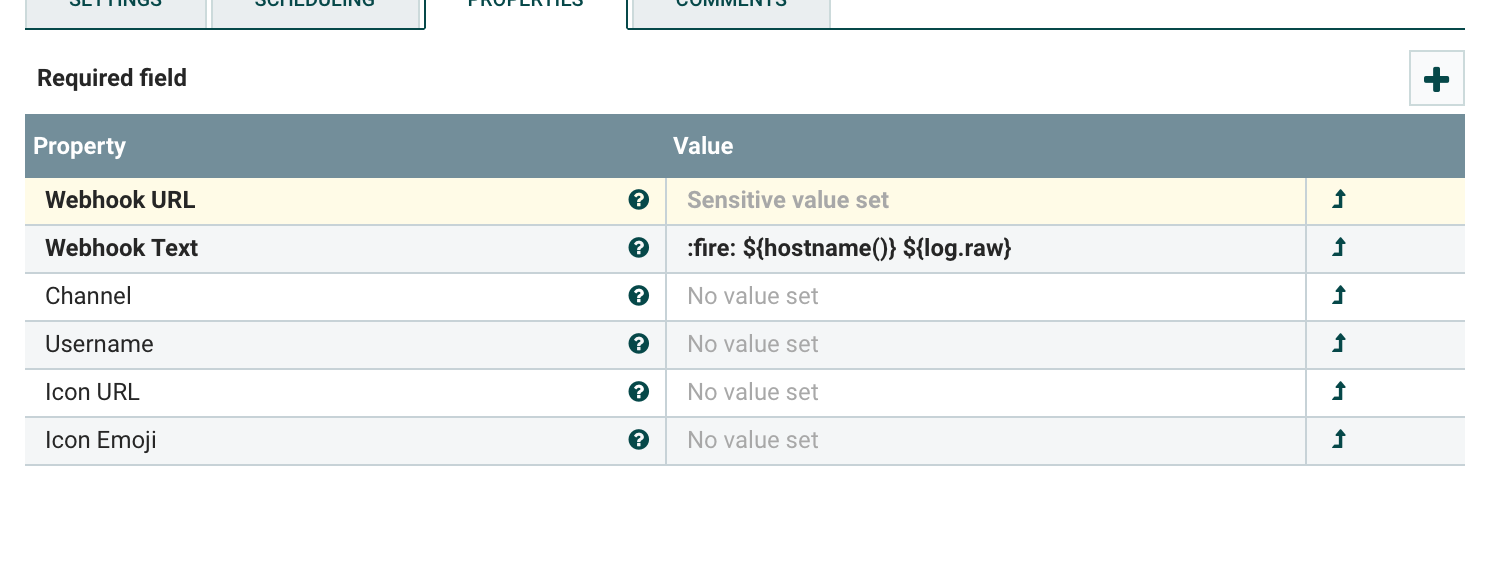
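The effect of this step can be sketched in Python as follows. This is only an illustration under assumptions: the attribute names (`log.date`, `log.level` and so on) are hypothetical, only simple `$.field` paths are handled rather than full JSONPath, and a missing field becomes an empty string, which may differ from how the processor is configured.

```python
import json

# Hypothetical mapping of attribute names to JSON paths.
ATTRIBUTE_PATHS = {
    "log.date": "$.date",
    "log.level": "$.Level",
    "log.logger": "$.logger",
    "log.message": "$.message",
}


def extract_attributes(content, paths=ATTRIBUTE_PATHS):
    """Copy selected JSON fields of one record into string attributes."""
    record = json.loads(content)
    attributes = {}
    for name, path in paths.items():
        if not path.startswith("$."):
            raise ValueError(f"unsupported path: {path}")
        value = record
        # Walk the dotted path one key at a time.
        for key in path[2:].split("."):
            value = value.get(key) if isinstance(value, dict) else None
        # Attributes are plain strings; missing values become "".
        attributes[name] = "" if value is None else str(value)
    return attributes
```

After this step the record's fields travel with the flow file as attributes, so later steps can use them without parsing the content again.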

Next, we send messages to the Slack channel:

Example of sending to Slack:
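For readers who want to see what such a notification amounts to outside NiFi, here is a minimal Python sketch. It assumes a Slack incoming webhook, which accepts a JSON body with a `text` field; the webhook URL is something you would supply yourself, and the attribute names and message layout are hypothetical, not taken from the flow above.

```python
import json
import urllib.request


def build_slack_payload(attributes):
    """Turn log attributes into a Slack message body."""
    text = "*{level}* {date}\n`{logger}`\n{message}".format(
        level=attributes.get("log.level", "UNKNOWN"),
        date=attributes.get("log.date", ""),
        logger=attributes.get("log.logger", ""),
        message=attributes.get("log.message", ""),
    )
    return {"text": text}


def send_to_slack(webhook_url, payload, timeout=10):
    """POST the payload to an incoming webhook and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Keeping the payload construction separate from the HTTP call makes it easy to check the message text without actually posting to a channel.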

In conclusion, this approach is not my own invention: it has been known for a long time, and you can find various examples of NiFi flows that read logs. Instead of Slack, you can also send the logs to Telegram or Elastic.

This setup is not a panacea and does not claim to be production-grade. Ideally, all logs should be processed by infrastructure designed for the purpose: ELK, Zabbix, etc.

How I use this:

- When developing a flow, I create my own logging level and catch certain events through LogEvent / LogAttribute.
- When flows are tested over a long period (test / stage), I catch errors.
- I try to catch load errors so that I have time to reload the data manually before the DWH load starts.
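Catching errors, as in the last two points, comes down to checking the level of each parsed record before notifying anyone. A minimal Python sketch, assuming records shaped like the JSON shown earlier with a `Level` field; the set of levels worth an alert and the function name are assumptions, and how this check is wired into the flow is not covered here.

```python
# Assumed set of levels that deserve a notification.
ALERT_LEVELS = {"ERROR", "WARN"}


def should_alert(record, levels=ALERT_LEVELS):
    """Return True if the record's log level is one we want to be told about."""
    return str(record.get("Level", "")).upper() in levels
```

For example, `should_alert({"Level": "INFO"})` is false, so routine checkpoint messages would not reach the channel.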

Useful links:

- Apache NiFi documentation.
- A useful YouTube channel, where I saw the principle of building such a flow.
- Telegram community.

All the best.