An improved version of the Nvidia A100 AI accelerator is being sold freely in China. What model is it?

Nvidia's Ampere A100 was the most powerful AI accelerator until the Hopper H100 arrived, to say nothing of the H200 and the upcoming Blackwell GB200. But, as it turns out, there is a more advanced version of the A100 that outperforms the regular model. It is being sold freely in China despite US sanctions. Nvidia may have been experimenting with the accelerator, or it may have been modified specifically for China.

Features of the advanced model

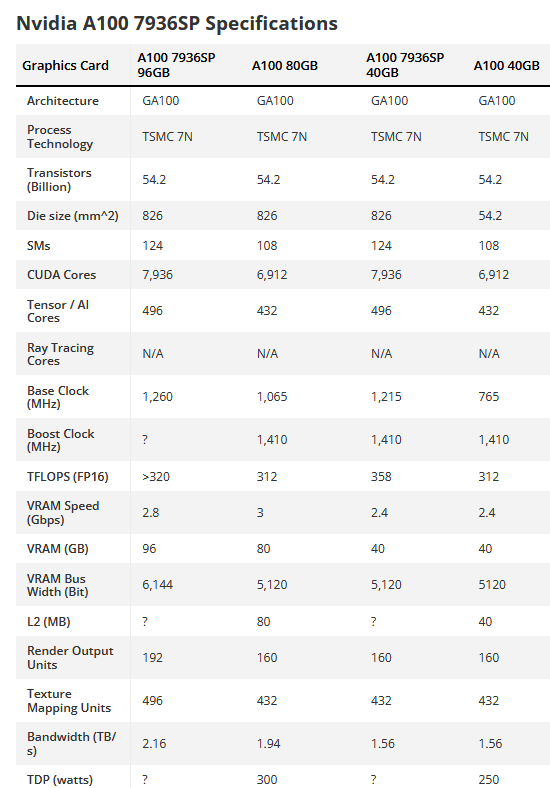

Despite its improved characteristics, the A100 7936SP uses the same GA100 chip as the regular A100. However, it has 124 of the GA100's 128 SMs (Streaming Multiprocessors) enabled, compared with 108 on the standard A100. While this is not the full configuration, the A100 7936SP has 15% more CUDA cores than the standard A100, which should translate into a significant performance boost.

The number of Tensor cores also grows in proportion to the number of SMs. Based on specs alone, 15% more SMs, CUDA cores, and Tensor cores could raise AI performance by a similar 15% or so.
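The 15% figure follows from simple per-SM arithmetic, and the model name appears to encode the resulting CUDA core count. A minimal sketch, assuming the publicly documented GA100 layout of 64 FP32 CUDA cores and 4 Tensor cores per SM (these per-SM numbers are not stated in the listing):

```python
# Assumption: GA100 (Ampere) SMs each contain 64 FP32 CUDA cores and 4 Tensor cores.
CUDA_CORES_PER_SM = 64
TENSOR_CORES_PER_SM = 4

def core_counts(enabled_sms: int) -> tuple[int, int]:
    """Return (CUDA cores, Tensor cores) for a given number of enabled SMs."""
    return enabled_sms * CUDA_CORES_PER_SM, enabled_sms * TENSOR_CORES_PER_SM

standard_cuda, standard_tensor = core_counts(108)  # regular A100: 108 SMs enabled
variant_cuda, variant_tensor = core_counts(124)    # A100 7936SP: 124 of 128 SMs enabled

# Relative increase in CUDA cores (Tensor cores scale by the same ratio).
uplift_pct = (variant_cuda / standard_cuda - 1) * 100

print(standard_cuda, standard_tensor)  # 6912 432
print(variant_cuda, variant_tensor)    # 7936 496
print(f"{uplift_pct:.1f}%")            # 14.8%
```

The roughly 14.8% increase is where the rounded "15%" comes from. This is a count of execution units only; actual AI throughput also depends on clocks, memory bandwidth, and power limits.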

Nvidia offers the A100 in 40GB and 80GB configurations, and the A100 7936SP likewise comes in two variants. The A100 7936SP 40GB has a 59% higher base clock than the A100 80GB while keeping the same 1,410MHz boost clock. The A100 7936SP 96GB, meanwhile, has an 18% higher base clock than the regular A100 and carries up to 96GB of memory.

The memory subsystem of the A100 7936SP 40 GB is identical to that of the A100 40 GB: 40GB of HBM2 running at 2.4 Gbps per pin over a 5,120-bit memory interface built from five HBM2 stacks, for a maximum memory bandwidth of about 1.56 TB/s. The A100 7936SP 96 GB model is more interesting. Thanks to a sixth HBM2 stack, it carries 20% more HBM2 memory than the largest A100 Nvidia offers. Training very large language models can require a considerable amount of memory, so the extra capacity is far from superfluous.

The A100 7936SP 96GB appears to have an upgraded memory subsystem compared to the A100 80GB. Its HBM2 runs at 2.8 Gbps instead of 3 Gbps, but it sits on a wider 6,144-bit memory bus, which more than compensates for the difference. As a result, the A100 7936SP 96GB offers approximately 11% more memory bandwidth than the A100 80GB.
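The capacity and bandwidth figures in the two paragraphs above follow from one formula: bus width times per-pin data rate, divided by eight bits per byte. The sketch below reproduces them, reading the quoted memory speeds as per-pin rates in Gbit/s. The 1,024-bit-per-stack interface width and the 1,935 GB/s published figure for the A100 80GB PCIe are assumptions added for illustration, not details from the listing.

```python
# Peak HBM bandwidth = bus width (bits) x per-pin data rate (Gbit/s) / 8 (bits per byte).
def hbm_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits * data_rate_gbps / 8

# Assumption: each HBM2 stack contributes a 1,024-bit interface,
# so 5 stacks -> 5,120 bits and 6 stacks -> 6,144 bits.
a100_40gb = hbm_bandwidth_gbs(5 * 1024, 2.4)
a100_80gb = hbm_bandwidth_gbs(5 * 1024, 3.0)
a100_7936sp_96gb = hbm_bandwidth_gbs(6 * 1024, 2.8)

# Assumption: Nvidia's published bandwidth for the A100 80GB PCIe, which implies
# a per-pin rate slightly above the rounded 3.0 Gbit/s used above.
A100_80GB_PUBLISHED_GBS = 1935.0

print(f"A100 40GB:         {a100_40gb:.1f} GB/s")
print(f"A100 80GB:         {a100_80gb:.1f} GB/s")
print(f"A100 7936SP 96GB:  {a100_7936sp_96gb:.1f} GB/s")
print(f"vs rounded 80GB:   {(a100_7936sp_96gb / a100_80gb - 1) * 100:.0f}% more bandwidth")
print(f"vs published 80GB: {(a100_7936sp_96gb / A100_80GB_PUBLISHED_GBS - 1) * 100:.0f}% more bandwidth")
print(f"capacity:          {(96 / 80 - 1) * 100:.0f}% more memory")
```

With the rounded 3.0 Gbit/s rate the estimate lands near 12%. Against the published 1,935 GB/s figure it comes out at about 11%, matching the number quoted above. Likewise, 5,120 bits at 2.4 Gbit/s gives about 1.54 TB/s, slightly under the 1.56 TB/s quoted for the 40GB model, which suggests the real per-pin rate is a little above 2.4 Gbit/s.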

The A100 40 GB and 80 GB are rated at 250 W and 300 W TDP, respectively. Given its more advanced specs, the A100 7936SP will most likely have a higher TDP. There is a caveat, though: since the A100 7936SP is likely an engineering prototype, it may not use all three of its power connectors, yet it should still consume more power than the standard A100 because of its additional CUDA cores and HBM2 memory.

Interestingly, many Chinese sellers are listing the A100 7936SP on eBay, with the 96 GB model priced from $18,000 to $19,800.

Where do these cards come from? One can only guess whether they are engineering samples that left Nvidia's laboratories or specialized models the chipmaker developed for a specific client.

How do these chips get to China?

Since 2022, the United States has prohibited supplies of the A100 and more powerful chips to China. But there are ways around the ban, and Chinese buyers are actively using them. These are small channels that deliver relatively modest batches of chips to China, mainly to research centers and universities. The suppliers remain unknown, and the manufacturers are not among them: in 2023, for instance, an Nvidia representative stated that the company complies with all export control laws.

Last year, Reuters journalists found evidence of small quantities of AI accelerators being supplied to the country. For example, the Harbin Institute of Technology purchased six Nvidia A100 chips to train a deep learning model, and in December 2022 the University of Electronic Science and Technology of China purchased one A100 for undisclosed purposes. This is only what lies on the surface; there are probably shadow channels through which larger quantities reach China. Tsinghua University, for its part, managed to buy more than 80 A100 chips after the 2022 sanctions, and Chongqing University, Shandong Chengxiang Electronic Technology, and others have also purchased chips.

In general, there are hundreds of small supply channels that could be traced if anyone wanted to. One thing is certain: the chips are being used in China for their intended purpose, the development of AI technologies.

Manufacturers are also making their own attempts to avoid losing Chinese customers. For example, Nvidia recently announced the GeForce RTX 4090D, a card built specifically to meet the requirements of the Chinese market without violating export regulations. Companies in the PRC and their partners are probably using other ways to get around export controls as well.