4 types of common errors in Event-Driven systems

Over the past few years, large companies have seen a significant increase in the implementation of event-driven systems. What are the main reasons for this trend? Is this pure hype or are there compelling reasons to implement this architecture? From our point of view, the main reasons why many companies choose this path are:

Loose coupling between components

Having separate components interact asynchronously through events provides loose coupling. We can seamlessly modify, deploy, and operate these components at different times independently of each other; this is a huge advantage in terms of maintenance costs and productivity.

“Fire and Forget” concept

This benefit is largely a consequence of loose coupling, but we thought it was worth mentioning separately because of its importance. One of the biggest advantages of these systems is that we can fire an event without caring how or when it is processed, as long as it is durably stored in the relevant topic.

Once an event type is registered in a topic, new consumers interested in those events can subscribe to them and start processing them. The producer will not have to do any work to integrate new consumers, since the producer and consumers are completely separate.

With traditional synchronous HTTP communication between components, the producer would have to call each consumer independently. Given that every call to every consumer has the potential to fail, the cost of implementing and maintaining this approach is much higher than with an event-driven approach.

No time dependence

One of the main advantages is that the components do not have to run at the same time. For example, one component may be unavailable without affecting the operation of another (as long as the latter still has work to do).

A number of business models fit very easily into event-driven systems

Some models, especially those in which entities move through the stages of a life cycle, fit very well into event-driven systems. Defining a model as a set of cause-and-effect relationships can be quite simple and quite reasonable. Each “cause” generates an event that leads to a specific “effect” for a specific entity or even for several entities in the system.

Considering all the above advantages, it is easy to see why this architecture has become increasingly popular in recent years. Flexibility and low maintenance costs matter a great deal to companies, and the choice of architecture can significantly affect a company's efficiency. In the coming years many companies will have to rely on technology more and more, which is why our work and our architectural decisions are so important to their success.

At the same time, we should not forget that creating event-driven systems is not an easy task. There are several common mistakes that newbies often make when working with such systems. Let's look at some of them.

4 common mistakes

As with any system where asynchronous operations occur, a lot can go wrong. However, we have identified a small set of errors that are quite easy to detect in projects in the early and middle stages of development.

No guarantee of order

The first problem we'll look at arises when we overlook the fact that our business model requires certain operations on a particular entity to be performed in a specific order.

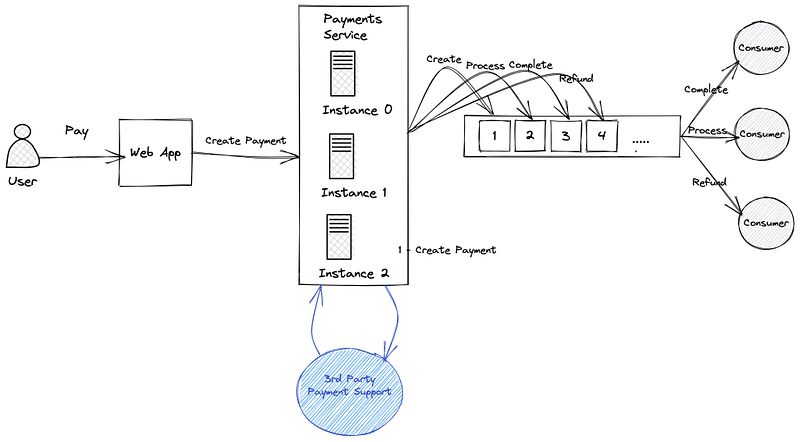

Let's say we have a payment system where each payment goes through different states based on certain events. We may have a ProcessPayment event, a CompletePayment event, and a RefundPayment event; each of these events (or commands, in this case) moves the payment into the appropriate state.

What happens if we don't guarantee execution order? We may find ourselves in a situation where, for some payment, the RefundPayment event is processed before the CompletePayment event. This means that the payment will remain completed even though we intended to refund it.

This happens because the payment is still in a non-refundable state when the RefundPayment event is processed. We could work around this in other ways, but that would be inefficient. Let's look at this situation in a diagram to better understand the problem!

In the illustration above, we see how consumers can simultaneously consume events for the same payment ID. This is a problem for several reasons, the main one being that, within a single payment, we always want one event to finish processing before the next one starts.
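To make the failure mode concrete, here is a minimal sketch of a payment state machine. The `Payment` class, the `PaymentState` values, and the transition rules are assumptions made for illustration; only the three event names come from the scenario above.

```kotlin
// Hypothetical states; the article does not name them explicitly.
enum class PaymentState { CREATED, PROCESSING, COMPLETED, REFUNDED }

// Hypothetical payment aggregate used only for this illustration.
class Payment(val id: String) {
    var state: PaymentState = PaymentState.CREATED
        private set

    // Applies an event by name and returns true if it caused a transition.
    // An event that is not valid for the current state is silently ignored.
    fun apply(event: String): Boolean {
        val next = when (event) {
            "ProcessPayment" -> if (state == PaymentState.CREATED) PaymentState.PROCESSING else null
            "CompletePayment" -> if (state == PaymentState.PROCESSING) PaymentState.COMPLETED else null
            "RefundPayment" -> if (state == PaymentState.COMPLETED) PaymentState.REFUNDED else null
            else -> null
        }
        if (next != null) state = next
        return next != null
    }
}
```

Applying ProcessPayment, CompletePayment, and RefundPayment in that order ends in REFUNDED. If RefundPayment arrives before CompletePayment, the refund is ignored and the payment ends in COMPLETED, which is the inconsistency described above.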

Pub/Sub messaging systems such as Kafka or Pulsar provide mechanisms that make this easy to implement. For example, in Apache Pulsar you can use Key_Shared subscriptions to ensure that events for a particular payment ID are always processed by the same consumer, in order. In Kafka, you would use partitions and ensure that all events for a specific payment ID are assigned to the same partition, typically by using the payment ID as the message key.

In our new scenario, all events related to an existing payment will be processed by the same consumer.
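The idea behind key-based routing can be sketched without any broker. The `PaymentEvent` type and the `partitionFor` and `route` functions below are hypothetical, and the hash is a simplified stand-in: real brokers use their own hash functions, but the property that matters is the same.

```kotlin
// Hypothetical event type used only for this illustration.
data class PaymentEvent(val paymentId: String, val type: String)

// Simplified stand-in for a broker's key-based partitioner.
// The same key always maps to the same partition index.
fun partitionFor(key: String, partitionCount: Int): Int =
    Math.floorMod(key.hashCode(), partitionCount)

// Routes events to partitions by payment ID. groupBy keeps the original
// arrival order inside each partition.
fun route(events: List<PaymentEvent>, partitionCount: Int): Map<Int, List<PaymentEvent>> =
    events.groupBy { partitionFor(it.paymentId, partitionCount) }
```

Because every event of a given payment lands in one partition, and a partition is read by one consumer at a time, the events of that payment are handled sequentially in the order in which they were published.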

Non-atomic multiple operations

Another common mistake is to perform several actions at once in a critical section and assume that each operation will always complete successfully. Always remember: if something can go wrong, sooner or later it will.

For example, one typical scenario is when we save an entity and dispatch an event immediately after the entity has been saved. Let's look at a specific example to better understand the essence of the problem:

    import java.util.UUID

    class UserService(private val userRepository: UserRepository, private val eventsEngine: UserEventsEngine) {
        fun create(user: User): User {
            // Step 1: persist the user.
            val savedUser = userRepository.save(user)
            // Step 2: publish the event. If this throws, the user stays saved.
            eventsEngine.send(UserCreated(UUID.randomUUID().toString(), user))
            return User.create(savedUser)
        }
    }

What happens if sending the UserCreated event fails? The user will still be saved, so there will be an inconsistency between our system and the systems of our consumers. Some of you might suggest sending the UserCreated event first. But what happens if, after sending the event, the user is not saved for some reason?

Consumers will think that the user has already been created, but this is not the case! Now the main question is: what should we do? There are different ways to solve this problem. Let's look at some of them.

Using Transactions

One simple way to solve the inconsistency problem is to take advantage of our database system and use transactions, if they are supported. Let's assume we are given a method withinTransaction, which starts a new transaction and rolls it back if anything wrapped in it fails.

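    fun create(user: User): User {
        // Anything that throws inside the block rolls the transaction back.
        return withinTransaction {
            val savedUser = userRepository.save(user)
            eventsEngine.send(UserCreated(UUID.randomUUID().toString(), user))
            User.create(savedUser)
        }
    }

Keep in mind that this rolls back the save when sending fails; it does not help if the event is sent successfully and the commit itself then fails. Please also note that in some cases transactions can have a significant performance impact, so check your database documentation before making a decision.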

Transactional Outbox pattern

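Another way to solve this problem is to send the event in the background after the user has been saved, using the transactional outbox pattern. With this pattern, the event is written to an outbox table in the same database transaction as the entity, and a separate process later publishes it to the messaging system.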
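As a rough illustration of the outbox idea, the sketch below uses an in-memory stand-in for the database. Everything here (`Database`, `OutboxRecord`, `OutboxRelay`, and the simplified `User`) is hypothetical and only meant to show the flow: the user and the pending event are stored together, and a background relay publishes pending events later.

```kotlin
import java.util.UUID

data class User(val id: String, val name: String)
data class OutboxRecord(val id: String, val type: String, val payload: String, var published: Boolean = false)

// In-memory stand-in for a database in which both tables share one transaction.
class Database {
    val users = mutableListOf<User>()
    val outbox = mutableListOf<OutboxRecord>()

    // Runs the block and restores both tables if it throws.
    fun <T> withinTransaction(block: () -> T): T {
        val usersBefore = users.toList()
        val outboxBefore = outbox.toList()
        return try {
            block()
        } catch (e: Exception) {
            users.clear(); users.addAll(usersBefore)
            outbox.clear(); outbox.addAll(outboxBefore)
            throw e
        }
    }
}

class UserService(private val db: Database) {
    // The user and the pending event are written together or not at all.
    fun create(user: User): User = db.withinTransaction {
        db.users.add(user)
        db.outbox.add(OutboxRecord(UUID.randomUUID().toString(), "UserCreated", user.id))
        user
    }
}

// Background relay: publishes pending records and marks each one as
// published only after a successful send.
class OutboxRelay(private val db: Database, private val publish: (OutboxRecord) -> Unit) {
    fun publishPending(): Int {
        var sent = 0
        for (record in db.outbox.filter { !it.published }) {
            try {
                publish(record)
                record.published = true
                sent++
            } catch (e: Exception) {
                // Leave the record unpublished so the next run retries it.
                break
            }
        }
        return sent
    }
}
```

A relay like this may publish the same record more than once (for example, if it crashes between sending and marking), so consumers should be able to handle duplicates.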

Sending multiple events

A similar problem occurs when we try to send multiple events at once in a critical section. What happens if sending an event fails after other events have been sent successfully? Again, different systems may end up in an inconsistent state.

There are several ways to avoid this problem. Let's see how.

Chains of events

Probably the best way to solve this problem is to simply not send multiple events at once. You can always build a chain of events so that they are processed sequentially rather than in parallel.

For example, consider this scenario. When the user is created, we need to send an email indicating that the registration was successful.

    fun create(user: User): User {
        return withinTransaction {
            val savedUser = userRepository.save(user)
            // Two events are sent from the same critical section.
            eventsEngine.send(UserCreated(UUID.randomUUID().toString(), user))
            eventsEngine.send(SendRegistrationEmailEvent(UUID.randomUUID().toString(), user))
            User.create(savedUser)
        }
    }

If sending SendRegistrationEmailEvent fails, we cannot recover simply by retrying, so the registration email will never be sent. What if we did this instead?
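As a rough sketch of that alternative, `create` could publish only UserCreated, and a separate handler could react to UserCreated by sending the email. The `Topic` class below is a hypothetical in-memory stand-in for a real topic, `RegistrationEmailHandler` is an illustrative name, and saving the user is omitted for brevity.

```kotlin
import java.util.UUID

data class User(val id: String, val email: String)
data class UserCreated(val eventId: String, val user: User)

// Hypothetical in-memory topic: an event stays queued until a handler succeeds.
class Topic<E> {
    private val pending = ArrayDeque<E>()

    fun send(event: E) { pending.addLast(event) }

    // Delivers queued events in order and stops at the first failure, so the
    // failed event is retried on the next call. Returns how many were handled.
    fun poll(handler: (E) -> Unit): Int {
        var handled = 0
        while (pending.isNotEmpty()) {
            try {
                handler(pending.first())
            } catch (e: Exception) {
                return handled
            }
            pending.removeFirst()
            handled++
        }
        return handled
    }
}

class UserService(private val userCreatedTopic: Topic<UserCreated>) {
    // The critical section now sends exactly one event.
    fun create(user: User): User {
        userCreatedTopic.send(UserCreated(UUID.randomUUID().toString(), user))
        return user
    }
}

// Second link of the chain: reacts to UserCreated by sending the email.
class RegistrationEmailHandler(private val sendEmail: (User) -> Unit) {
    fun handle(event: UserCreated) = sendEmail(event.user)
}
```

If the mail server is down, `poll` leaves the UserCreated event in the queue and the handler runs again on the next poll, without `create` being involved.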

By splitting the events, we allow other consumers to continue working after the UserCreated event is dispatched, and at the same time we move the sending of the registration email into a separate function, which can be retried as many times as needed, independently of everything else.

Transaction support

If your messaging system supports transactions, you can also use them to roll back all events if something goes wrong. For example, Apache Pulsar supports transactions.

Changes that break backward compatibility

The last common problem we'll look at is neglecting the fact that events should be backwards compatible when we change anything about them.

For example, let's say we add multiple fields to an existing event and simultaneously remove an existing field from that event.

In the image below we see the wrong and right ways to make changes like the ones we just described.

In the first case, we immediately remove the middleName field and add a new dateOfBirth field. Why is this bad practice?

This first change is sure to cause problems, leaving some existing events stuck and unprocessable. Why?

Imagine that when we start deploying our new version, there are several UserCreated events in our Users topic. The most common way to deploy applications without downtime is a rolling update, so from here on we will assume that our application is deployed this way.

During deployment, it may happen that some of our nodes will already contain code changes for the new version of events. However, some nodes will still be running the old version of our code and therefore will not support the new version of events.

We also need to keep in mind that the code released in our first update must treat the new fields as optional in order to handle old events still pending in the topic. As soon as we are 100% sure that there are no old events left in our topics, we can remove this restriction and make them mandatory.
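One way to picture the safer, intermediate version of the event is shown below. Only middleName and dateOfBirth come from the example above; the other fields and the `decodeUserCreated` helper are assumptions made for illustration. The old field is kept for now and the new one is optional, so both old and new payloads decode.

```kotlin
// Hypothetical intermediate version of the event, deployed before any field is removed.
data class UserCreated(
    val id: String,
    val firstName: String,
    val middleName: String? = null,  // still accepted so old events keep decoding
    val dateOfBirth: String? = null  // new field, optional while old events remain
)

// Hypothetical decoder working on an already-parsed payload.
// Keys that the class does not know about are simply ignored.
fun decodeUserCreated(payload: Map<String, String?>): UserCreated =
    UserCreated(
        id = requireNotNull(payload["id"]) { "id is required" },
        firstName = requireNotNull(payload["firstName"]) { "firstName is required" },
        middleName = payload["middleName"],
        dateOfBirth = payload["dateOfBirth"]
    )
```

Once no old events remain in the topic and no node runs the old code, a later release can drop middleName and make dateOfBirth mandatory.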

Conclusion

We've seen how useful event-driven architectures can be. However, implementing them properly is not an easy task and requires some experience. Pair programming with someone who has experience with a similar architecture is especially helpful here and can save a lot of valuable time.

If you are looking for a good book to better understand event-driven systems, we highly recommend “Building Event-Driven Microservices”.
