Stop calling containerization virtualization

Virtualization: what is it really?

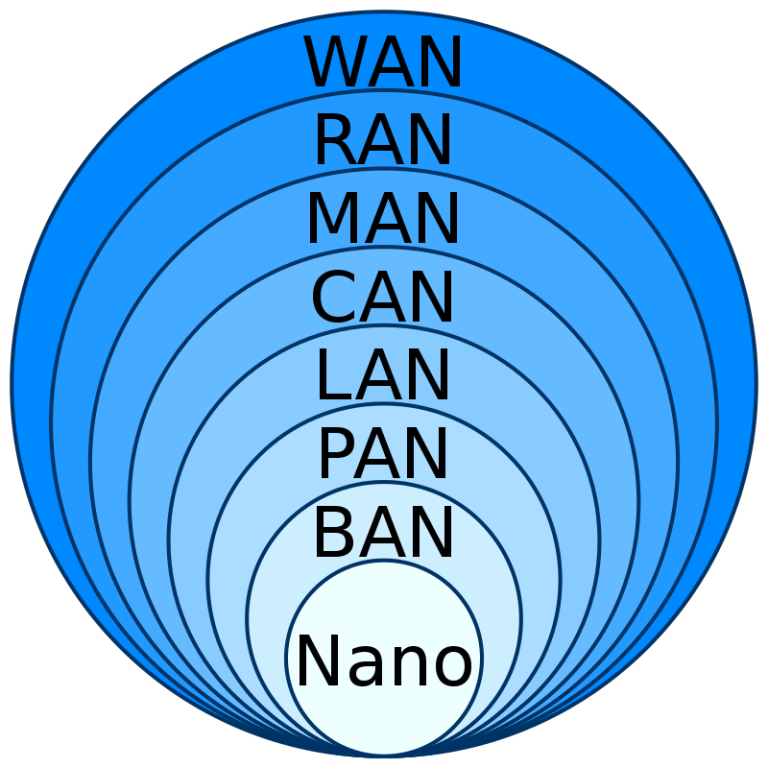

Virtualization is a technology that creates software-based copies of physical devices: servers, workstations, networks and data storage. It covers everything from the processor to the video card, from RAM to network interfaces.

Virtualization as a formal discipline dates back to the 1970s, when Gerald Popek and Robert Goldberg set out its operating principles in their 1974 article “Formal Requirements for Virtualizable Third Generation Architectures”. Its focus is not file systems or processes but how to isolate privileged processor instructions: how to make one physical machine pretend to be several independent computers, each with its own environment.
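
The core condition from that paper can be shown with a toy model. Popek and Goldberg call an instruction "sensitive" if it touches or depends on privileged machine state, and "privileged" if it traps when executed in user mode. A classic trap-and-emulate hypervisor is possible when every sensitive instruction is privileged, that is, when the sensitive set is a subset of the privileged set. The Python sketch below checks exactly that subset relation. The instruction sets are a small, illustrative sample of x86 before VT-x/AMD-V, not a complete list, and the function names are hypothetical.

```python
from __future__ import annotations


def is_classically_virtualizable(sensitive: set[str], privileged: set[str]) -> bool:
    """Popek-Goldberg condition: every sensitive instruction must trap in user mode."""
    return sensitive <= privileged


def non_trapping_sensitive(sensitive: set[str], privileged: set[str]) -> list[str]:
    """Sensitive instructions that a trap-and-emulate hypervisor would never see."""
    return sorted(sensitive - privileged)


# Illustrative sample of classic x86 before VT-x/AMD-V (not exhaustive).
X86_PRIVILEGED = {"LGDT", "LIDT", "MOV to CR3", "HLT"}  # fault outside ring 0
X86_SENSITIVE = X86_PRIVILEGED | {
    "POPF",  # silently ignores changes to the interrupt flag in user mode
    "SGDT",  # reads the GDT register without trapping
    "SIDT",  # reads the IDT register without trapping
    "SMSW",  # reads part of CR0 without trapping
}

if __name__ == "__main__":
    print(is_classically_virtualizable(X86_SENSITIVE, X86_PRIVILEGED))  # False
    print(non_trapping_sensitive(X86_SENSITIVE, X86_PRIVILEGED))  # ['POPF', 'SGDT', 'SIDT', 'SMSW']
```

This gap is why x86 hypervisors relied on binary translation or paravirtualization before hardware extensions arrived.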

Going further back in history, computers of that era were huge, expensive machines that had to be shared among many users and tasks. The resources of one powerful computer needed to be divided between different operating systems and applications while keeping them isolated and secure. Virtualization solved this problem by letting multiple virtual machines run on the same physical server, each behaving as if it had the hardware to itself.

Modern virtualization rests on the same principles, but with more advanced technology and hardware support. Processors now include dedicated virtualization extensions, such as Intel VT-x and AMD-V, which let hypervisors run virtual machines efficiently without significant performance loss.
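
On Linux running on x86, you can check whether the CPU advertises these extensions: Intel VT-x shows up as the `vmx` flag and AMD-V as `svm` in `/proc/cpuinfo`. Below is a minimal Python sketch; the function name is hypothetical. Keep in mind that the flag only reflects what the CPU (or, inside a VM, the hypervisor) advertises, and firmware settings may still keep the feature switched off.

```python
from __future__ import annotations

from pathlib import Path


def hw_virt_extension(cpuinfo_text: str) -> str | None:
    """Return 'vmx' (Intel VT-x), 'svm' (AMD-V) or None for x86 /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() != "flags":  # also skips the "vmx flags" line on newer kernels
            continue
        flags = set(value.split())
        if "vmx" in flags:
            return "vmx"
        if "svm" in flags:
            return "svm"
        return None  # the first CPU's flags line is enough for this check
    return None


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    print(hw_virt_extension(text) or "no VT-x/AMD-V flag visible")
```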

Hypervisors: theory, practice and harsh reality

In the world of virtualization, everything revolves around hypervisors – programs that manage virtual machines. They come in two types. And this is where the fun begins.

By the textbook definition, type 1 or “bare-metal” hypervisors supposedly run directly on the hardware, with no host operating system in between. Type 2 hypervisors run as ordinary programs inside an operating system. A beautiful theory, isn't it? But reality, as always, is more complicated.

Take KVM in Linux and Hyper-V in Windows, for example. Both are positioned as type 1 hypervisors, yet both are effectively part of the operating system. KVM is integrated into the Linux kernel as loadable kernel modules that give the kernel itself virtualization capabilities. Hyper-V is built into Windows as well, but once it is enabled, the hypervisor starts first and Windows itself runs on top of it in a privileged “root partition”, effectively a virtual machine. The result is a kind of nesting doll, in which the host OS becomes a guest inside its own hypervisor.
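
The fact that KVM lives inside the kernel is visible from user space: the module exposes a `/dev/kvm` character device, and programs such as QEMU drive it through `ioctl` calls. The sketch below asks the kernel for the KVM API version. It assumes Linux on x86-64, where the `KVM_GET_API_VERSION` request from `<linux/kvm.h>` encodes to `0xAE00`; the helper name is hypothetical. Opening the device often requires root or membership in the `kvm` group.

```python
from __future__ import annotations

import fcntl
import os

# _IO(KVMIO, 0x00) with KVMIO = 0xAE, as in <linux/kvm.h> (x86-64 ioctl encoding).
KVM_GET_API_VERSION = 0xAE00


def kvm_api_version(device: str = "/dev/kvm") -> int | None:
    """Return the KVM API version reported by the kernel, or None if KVM is unavailable."""
    try:
        fd = os.open(device, os.O_RDWR | os.O_CLOEXEC)
    except OSError:  # module not loaded, no such device, or no permission
        return None
    try:
        # With no argument, fcntl.ioctl returns the integer result of the call.
        return fcntl.ioctl(fd, KVM_GET_API_VERSION)
    finally:
        os.close(fd)


if __name__ == "__main__":
    version = kvm_api_version()
    print("KVM not available" if version is None else f"KVM API version {version}")
```

The stable KVM API reports version 12, and the kernel's KVM documentation tells applications to refuse to run if they get any other value.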

Remember Intel VT-x and AMD-V? They were designed to provide hardware support for virtualization at the processor level. Thanks to them, modern hypervisors can manage virtual machines efficiently without resorting to complex software techniques such as binary translation or emulating sensitive instructions one by one. This significantly improves performance and reduces overhead.

But this raises a question: if type 1 hypervisors must work directly with the hardware and type 2 hypervisors run inside an OS, where do KVM, which is a kernel module, and Hyper-V, which is built into Windows, belong? Even VMware ESXi, usually filed under type 1, is not standalone: it runs on its own kernel, the VMkernel, which is suspiciously reminiscent of Linux.

The reality is that the boundaries between the two types are blurred, and the classification is largely a matter of convention. What matters is how a hypervisor works in practice and what capabilities it provides.

What about containers?

Containers have a completely different origin: the chroot system call, which appeared in Version 7 Unix in 1979. Its task was simple: change a process's root directory so that one subtree looks like the entire file system. No processor magic, no hardware emulation, just a clever trick with the file system.
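
The idea behind chroot can be illustrated with a toy model of path resolution. After a process calls chroot (in Python, `os.chroot(new_root)`, which needs root or `CAP_SYS_CHROOT` and is normally followed by `os.chdir("/")`), every absolute path it uses is looked up under the new root, and `..` at that root goes nowhere. The hypothetical `jail_path` function below computes which host path a jailed process would actually reach. It resolves paths purely lexically and ignores symlinks and mounts, so it is an illustration, not a security check.

```python
import posixpath


def jail_path(new_root: str, path: str) -> str:
    """Host path reached by `path` inside a chroot at `new_root` (toy, lexical only).

    Assumes the jailed process's working directory is its new "/".
    """
    # Resolve the path as the jailed process sees it. normpath() drops '..'
    # components at the root of an absolute path, mirroring how '..' at the
    # chroot root stays at that root.
    inner = posixpath.normpath(posixpath.join("/", path))
    # Re-anchor the result under the real directory that serves as the root.
    return posixpath.normpath(posixpath.join(new_root, inner.lstrip("/")))


if __name__ == "__main__":
    for p in ("/etc/passwd", "../../etc/shadow", "/"):
        print(f"{p!r:22} -> {jail_path('/srv/jail', p)}")
```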

Later, more mechanisms appeared: pivot_root, namespaces for isolating processes, and cgroups for limiting and accounting for resource use. But the essence has not changed: all of this runs on top of a single operating system kernel. Containers provide an isolated execution environment for applications, but they share a common kernel. This is both their strength, since they are lightweight and efficient, and their weakness: the limitations and risks that come with a shared environment.
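
Both mechanisms are visible through `/proc` on any Linux system. Each entry in `/proc/<pid>/ns/` is a symbolic link whose target, such as `pid:[4026531836]`, identifies the namespace a process belongs to, and `/proc/<pid>/cgroup` lists its control groups. Two processes in the same container typically show the same identifiers, while a host process shows different ones for every namespace type the container runtime created; the same kernel answers all of these queries. A minimal, Linux-only Python sketch follows; the helper names are hypothetical.

```python
from __future__ import annotations

import os
from pathlib import Path

# Namespace types commonly found under /proc/<pid>/ns on modern kernels.
NS_TYPES = ("mnt", "pid", "net", "uts", "ipc", "user", "cgroup")


def namespace_ids(pid: str = "self") -> dict[str, str]:
    """Map namespace type -> link target like 'pid:[4026531836]' for a process."""
    ids: dict[str, str] = {}
    for ns in NS_TYPES:
        try:
            ids[ns] = os.readlink(f"/proc/{pid}/ns/{ns}")
        except OSError:  # no /proc, unsupported namespace type, or no permission
            pass
    return ids


def cgroup_lines(pid: str = "self") -> list[str]:
    """Raw lines of /proc/<pid>/cgroup, e.g. '0::/user.slice/...' on cgroup v2."""
    try:
        return Path(f"/proc/{pid}/cgroup").read_text().splitlines()
    except OSError:
        return []


if __name__ == "__main__":
    for ns, ident in namespace_ids().items():
        print(f"{ns:7} {ident}")
    print(*cgroup_lines(), sep="\n")
```

Running it on the host and inside a container (for example via `docker exec`) and comparing the output shows which namespaces differ.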

Containers became popular because they make applications quick to deploy, keep environments consistent, and use resources efficiently on a single host that can run many containers at once. Docker and similar technologies made containerization accessible and convenient by providing tools for managing containers and their images.

However, it is important to understand that containers do not provide full isolation at the kernel level. They are more like ordinary processes running in isolated namespaces with limited resources. This means that a vulnerability in the shared kernel could potentially be exploited to escape the container and affect the host system or other containers.
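
A quick way to see the shared kernel for yourself is to ask the kernel to identify itself. On Linux, `os.uname()` reports the kernel that is actually executing the process. Run the sketch below on a host and then inside an ordinary container on that host (for example `docker run --rm -i python:3 python - < script.py`, using Docker's default runc runtime): the release is the same, because there is only one kernel. Inside a virtual machine it reports the guest's own kernel instead. Sandboxed runtimes that interpose their own kernel layer are an exception, and on Docker Desktop for macOS or Windows the containers share the kernel of Docker's Linux VM, not the host OS. The function name is hypothetical.

```python
import json
import os


def kernel_identity() -> dict:
    """Identify the kernel executing this process (Unix only)."""
    info = os.uname()
    return {
        "sysname": info.sysname,  # e.g. 'Linux'
        "release": info.release,  # kernel release string
        "version": info.version,  # build string of the running kernel
    }


if __name__ == "__main__":
    print(json.dumps(kernel_identity(), indent=2))
```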

Why is this important?

The difference between virtualization and containerization has practical implications for security, performance, and compatibility.

Virtualization creates a complete virtual environment. Each virtual machine receives its own set of virtual hardware: processor, memory, disks, network interfaces. And, most importantly, its own operating system kernel. This provides a high degree of isolation: if one virtual machine fails or is compromised, it does not directly affect the host system or other virtual machines.

Containers, on the other hand, all run on one shared kernel. They may have their own file systems, libraries and applications, but the kernel underneath is common to all of them. It's like apartments in a multi-story building: each has its own layout and furniture, but the foundation and utilities are shared. If something goes wrong with the foundation, every resident suffers.

So what now?

First, stop comparing these technologies as competing solutions. Containers and virtualization solve different problems. Containers package an application with all its dependencies, so that it does not pollute the host system and stays portable. Virtualization creates full-fledged isolated environments, for when you need several independent machines from one physical server.

Virtualization is needed where complete isolation is required, where different operating systems or their versions are needed, where security and compatibility are more important than performance. Virtual machines allow you to run completely different environments on the same physical server, providing a high level of isolation and flexibility.

Put simply, most of the world's hosting runs on virtual machines, because they let a provider turn one physical server into dozens of independent ones. Developers use containers so that their application, with all its libraries and dependencies, is guaranteed to run on the customer's system the same way it does on their own machine.

Treating virtualization and containerization as equivalent, or claiming that they compete, is like confusing packing goods into boxes with dividing a supermarket into departments. Both seem to be about organization, but they solve completely different problems.

Conclusion

In IT, buzzwords and marketing terms often overshadow what a technology actually does. Containers and virtualization are a prime example of this confusion. By understanding the fundamental differences between them, we can choose tools for specific problems more deliberately, avoiding unnecessary complexity and making better use of resources.

Let's call a spade a spade and use technology where it is truly appropriate. This will allow us to create more efficient, reliable and productive systems.

Have you seen these concepts conflated? What consequences did it have for your projects? Maybe you have interesting stories about how a misunderstanding of a technology led to unexpected results or problems. I look forward to hearing them in the comments!