Setting up CI

It is commonly believed that building CI/CD is a job for DevOps. Broadly speaking, this is true, especially when it comes to the initial setup. But developers often need to adjust particular stages of the process themselves. Being able to fix something minor on your own saves you the time of going to colleagues (and waiting for them to respond). In general, it makes work more comfortable and helps you understand why everything happens the way it does.

GitLab pipelines have a great many settings. In this article, without going deep into tuning, we'll look at what a pipeline script looks like, which blocks it consists of, and what it can contain.

The article was written based on materials from an internal introductory meeting for developers.

Hi all! My name is Denis, and I am a DevOps engineer on Mondiad, an internal project of our company. In this article we will talk about how to write pipelines for GitLab CI/CD, which is what our project mainly uses. We will go through the contents of the pipeline script: how to read it, understand it and debug it.

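The CI script is written in YAML and lives in a single file, .gitlab-ci.yml, placed directly in the project root. By default GitLab does not look for it in any other folder: a file placed elsewhere is simply not read, and the pipeline will not run. (The path can be changed in the project's CI/CD settings, but the root is the standard location.)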

Diagnostic Tools

To debug and understand a pipeline, you can use the Pipeline Editor built into the GitLab interface: Build -> Pipeline Editor.

The tool interface has four tabs:

The Edit tab lets you modify the pipeline right in the browser. In principle, you do not even have to commit every change to check it; you can keep working here.

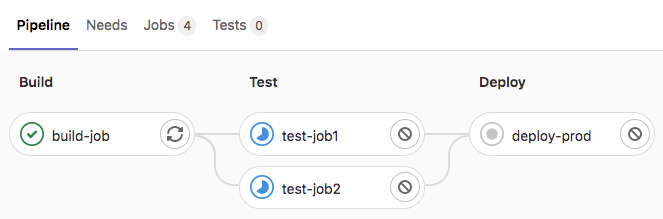

The Visualize tab shows all the stages and jobs included in the pipeline.

The Validate tab checks the syntax. Without this check it is easy to write a pipeline that GitLab cannot understand and rejects with an error.

The last tab, Full configuration, displays the complete text of the pipeline script. When we use various tricks to shorten the code (such as extends or YAML anchors, covered at the end of this article), Full configuration shows the result with all of them expanded. It is convenient to write a script in the editor and then check Full configuration to see how GitLab assembled it.

Minimal script

A minimal GitLab CI script looks like this:

```yaml
stages:
  - build

TASK_NAME:
  stage: build
  script:
    - ./build_script.sh
```

The pipeline stage and task name are indicated here.
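Stages run one after another in the order they are listed under stages, and jobs within the same stage run in parallel if enough runners are available. A slightly larger sketch of the same idea; test_script.sh and lint_script.sh are hypothetical scripts, just like build_script.sh:

```yaml
stages:
  - build
  - test

# Runs first, because build is the first stage in the list
build-job:
  stage: build
  script:
    - ./build_script.sh

# Both test-stage jobs start only after build-job succeeds,
# and they can run at the same time
unit-tests:
  stage: test
  script:
    - ./test_script.sh   # hypothetical script

lint:
  stage: test
  script:
    - ./lint_script.sh   # hypothetical script
```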

Features of the .gitlab-ci.yml file

Stage

https://docs.gitlab.com/ee/ci/yaml/#stages

In addition to the stages that we create ourselves, there are two hidden stages:

.pre – always executed first;

.post – always executed last. It is typically used for cleanup, for example to remove infrastructure that was deployed for test jobs. Note that, like any other job, a .post job by default runs only if no job in an earlier stage failed; for it to run after a failure as well, set when: always on it.

These stages do not need to be declared in stages. However, a pipeline cannot consist of .pre and .post jobs alone: it needs at least one job in another stage.
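A minimal sketch of a .post cleanup job, assuming hypothetical scripts that create and remove a test environment. By default a job runs only if no job in an earlier stage failed, so a cleanup job that must also run after a failure needs when: always:

```yaml
stages:
  - provision
  - test

create-test-env:
  stage: provision
  script:
    - ./create_test_env.sh   # hypothetical script

run-tests:
  stage: test
  script:
    - ./run_tests.sh         # hypothetical script

# .post does not have to be listed in stages.
# With the default when: on_success this job would be skipped
# if run-tests failed; when: always makes it run in any case.
remove-test-env:
  stage: .post
  script:
    - ./remove_test_env.sh   # hypothetical script
  when: always
```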

```yaml
stages:
  - build

job1:
  stage: build
  script:
    - echo "This job runs in the build stage."

first-job:
  stage: .pre
  script:
    - echo "This job runs in the .pre stage, before all other stages."

last-job:
  stage: .post
  script:
    - echo "This job runs in the .post stage, after all other stages."
```

Default section

https://docs.gitlab.com/ee/ci/yaml/#default

This section sets global default values for job options; individual jobs can override them.

The most common:

before_script

after_script

cache

image

services

tags

retry

```yaml
default:
  image: ruby:3.0
  retry: 2
```

This section is mainly used to shorten the body of the script itself.
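To illustrate the override, a sketch assuming a Ruby project that uses RSpec and RuboCop (the job names are hypothetical). No stages list is given, so both jobs land in GitLab's default test stage:

```yaml
default:
  image: ruby:3.0
  retry: 2
  before_script:
    - bundle install

# Inherits image, retry and before_script from default
rspec:
  script:
    - bundle exec rspec

# Overrides image and retry; before_script is still inherited
rubocop:
  image: ruby:3.2
  retry: 0
  script:
    - bundle exec rubocop
```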

Variables section

https://docs.gitlab.com/ee/ci/yaml/#variables

To control the build process, scripts can use variables predefined by GitLab itself. The most commonly used ones are:

$CI_PROJECT_DIR – full path to the project inside the container.

$CI_COMMIT_REF_NAME – the name of the branch or tag for which the project is built.

$CI_COMMIT_TAG – the tag name. This variable is set only in pipelines run for a tag.

$CI_PROJECT_NAMESPACE – namespace of the project.

$CI_PROJECT_NAME – project name.

$CI_REGISTRY – address of the GitLab Container Registry.

$CI_REGISTRY_USER – username for pushing to the GitLab Container Registry.

$CI_REGISTRY_PASSWORD – password for the GitLab Container Registry.

A complete list of predefined variables is in the documentation: https://docs.gitlab.com/ee/ci/variables/predefined_variables.html
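A short sketch of how the predefined variables are used inside jobs. The job names are hypothetical, and since no stages list is given, GitLab's default stages (build, test, deploy) apply:

```yaml
show-context:
  stage: build
  script:
    # Predefined variables are available as ordinary environment variables
    - echo "Project $CI_PROJECT_NAMESPACE/$CI_PROJECT_NAME is checked out in $CI_PROJECT_DIR"
    - echo "Building branch or tag $CI_COMMIT_REF_NAME"

release:
  stage: deploy
  script:
    - echo "Releasing version $CI_COMMIT_TAG"
  rules:
    # CI_COMMIT_TAG is set only in tag pipelines,
    # so this job is added to the pipeline only for tags
    - if: $CI_COMMIT_TAG
```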

If these variables are not enough, you can set your own in the variables section and use them in tasks.

```yaml
variables:
  DEPLOY_SITE: "https://example.com/"
```

Workflow section

https://docs.gitlab.com/ee/ci/yaml/#workflow

The next section defines launch rules for the pipeline as a whole: workflow:rules decide whether a pipeline is created at all. In effect, this is also a way to shorten the script, since conditions that would otherwise be repeated in every job can be stated once here.
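A common use of workflow:rules is to avoid running two pipelines (a branch pipeline and a merge request pipeline) for the same push. The sketch below is modeled on the pattern from the GitLab documentation and uses two predefined variables not listed above, $CI_COMMIT_BRANCH and $CI_OPEN_MERGE_REQUESTS. Rules are checked from top to bottom, the first match decides, and if nothing matches, no pipeline is created:

```yaml
workflow:
  rules:
    # Merge request pipelines
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    # Skip the branch pipeline when the branch has an open merge request,
    # so that one push does not start two pipelines
    - if: $CI_COMMIT_BRANCH && $CI_OPEN_MERGE_REQUESTS
      when: never
    # Branch pipelines for all other branches
    - if: $CI_COMMIT_BRANCH
    # Tag pipelines
    - if: $CI_COMMIT_TAG
```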

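```yaml
variables:
  PROJECT1_PIPELINE_NAME: 'Default pipeline name'

workflow:
  name: '$PROJECT1_PIPELINE_NAME'
  rules:
    - if: '$CI_PIPELINE_SOURCE == "merge_request_event"'
      variables:
        PROJECT1_PIPELINE_NAME: 'MR pipeline: $CI_MERGE_REQUEST_SOURCE_BRANCH_NAME'
    - if: '$CI_MERGE_REQUEST_LABELS =~ /pipeline:run-in-ruby3/'
      variables:
        PROJECT1_PIPELINE_NAME: 'Ruby 3 pipeline'
    - when: always # Other pipelines can run, but use the default name
```

In this example the rules do not filter any pipelines out; they only choose the name the pipeline gets in the GitLab interface.

image option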

https://docs.gitlab.com/ee/ci/yaml/#image

Our project mainly uses runners that execute jobs in Docker containers. The image keyword specifies the Docker image in which the job runs. For example, if the project uses Ruby, we need an image with Ruby installed.
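Besides a plain image name, image accepts an extended form. Some images define an entrypoint that is a command rather than a shell, which prevents the runner from executing the job's script; setting entrypoint to an empty value overrides it. A sketch, using alpine/git purely as an example of such an image (the job name is hypothetical):

```yaml
show-git-version:
  image:
    name: alpine/git:latest
    # Clear the image's own entrypoint so the runner can start a shell
    entrypoint: [""]
  script:
    - git --version
```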

```yaml
rspec:
  image: registry.example.com/my-group/my-project/ruby:2.7
  script: bundle exec rspec
```

Job Options

Option Tags

https://docs.gitlab.com/ee/ci/yaml/#tags

```yaml
job:
  tags:
    - ruby
    - postgres
```

tags determine which runner executes the job: only a runner that has all of the listed tags picks it up. For example, if the job needs a runner in a test environment with a particular database, you must specify that runner's tag.

before_script / script / after_script options

https://docs.gitlab.com/ee/ci/yaml/#before_script

https://docs.gitlab.com/ee/ci/yaml/#script

https://docs.gitlab.com/ee/ci/yaml/#after_script

The main body of our task can be divided into three parts:

before_script is a set of commands executed immediately before the job body, but after all artifacts and caches have been restored. It typically contains commands that prepare the environment for the job.

script is the body of the job. It consists of the commands that we would, roughly speaking, type in a shell to do the job, for example running an Ansible playbook as in the example below.

after_script is a set of commands executed after the job body. Importantly, it runs even if the job fails, including when before_script fails, because before_script and script are executed together as one step. Keep in mind that after_script runs in a separate shell, so variables exported in before_script or script are not available in it.

Another important point is that after_script applies only to its own job. If you need something to run at the end of the pipeline, use the .post stage.

after_script typically holds commands that clean up sensitive data. In the example below, this is the SSH key used for access. It can also hold commands that prepare test data (reports, for example) or do any other work that must happen regardless of the job's outcome.

Long commands in scripts

Scripts can use long commands that start with | or >. These two indicators are interpreted differently:

| : line breaks are preserved, so each new line is a separate command (as in a shell script);

> : line breaks are folded into spaces, so consecutive lines are joined into a single command; a new line starts only after an empty line.

Both are standard YAML block scalar styles, and both can be used in GitLab scripts.
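The difference is easy to see on a small sketch (the job name is hypothetical):

```yaml
block-styles:
  script:
    # "|" keeps line breaks: these are two separate commands
    - |
      echo "first command"
      echo "second command"
    # ">" folds line breaks into spaces: this is a single command,
    # echo "one long command split over several lines"
    - >
      echo "one long command
      split over several lines"
```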

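```yaml
test-stage:
  stage: deploy
  image: ansible:2.9.18
  before_script:
    - mkdir -p ~/.ssh
    - echo "$DEPLOY_KEY" > ~/.ssh/id_rsa
    - chmod 600 ~/.ssh/id_rsa
  script:
    # "|" keeps the line breaks, so these are two separate commands
    - |
      echo "run"
      ansible-playbook -i inventory.ini playbook.yaml
  after_script:
    # Remove the key even if the job failed
    - rm -f ~/.ssh/id_rsa
```

Artifacts option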

https://docs.gitlab.com/ee/ci/yaml/#artifacts

Next come artifacts. They store data created while a job runs: compiled binaries, logs for further processing, and anything else that would otherwise be lost when the job finishes.

In paths we list the files and directories where this data is collected. A directory is collected together with all of its contents. Paths can contain wildcards: * matches any characters within one directory level, while ** (two asterisks) matches any number of nested directories, as in binaries/**/*.o. exclude removes matching files from the artifact.

```yaml
job:
  artifacts:
    paths:
      - binaries/
      - .config
    exclude:
      - binaries/**/*.o
    expire_in: 1 week
```

Another useful keyword is expire_in. It keeps the artifact for the specified time, after which GitLab deletes it automatically so that it does not take up space.
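By default, jobs in later stages automatically download the artifacts of all jobs in earlier stages. A minimal sketch of handing a build result over to a test job; the job and file names are placeholders:

```yaml
stages:
  - build
  - test

build-job:
  stage: build
  script:
    - mkdir -p binaries
    - echo "build output" > binaries/app.txt
  artifacts:
    paths:
      - binaries/
    expire_in: 1 week

test-job:
  stage: test
  script:
    # Artifacts from earlier stages are downloaded before the script starts,
    # so binaries/app.txt is already present here
    - cat binaries/app.txt
```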

cache option

https://docs.gitlab.com/ee/ci/yaml/#cache

The next useful option is cache. It stores data created while a job runs that should be reused later to speed up the pipeline (for example, so that Gradle does not download its dependencies every time). Caching is very often used for npm packages. paths specifies which files or directories to cache.

By default, every job uses the same cache key. This is where key is useful: it gives the cache its own name. That helps when, for example, you need a separate cache for each branch because branches use different package versions.
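For npm, a convenient alternative to a branch-based key is cache:key:files, which derives the key from the contents of a file, so the cache is rebuilt only when the lock file changes. A sketch assuming a Node.js project with a package-lock.json; the job name and the node:20 image tag are just examples:

```yaml
install-deps:
  image: node:20
  cache:
    key:
      # A new cache key is generated whenever package-lock.json changes
      files:
        - package-lock.json
    paths:
      - .npm/
  script:
    # Keep npm's download cache inside the project so it can be cached
    - npm ci --cache .npm --prefer-offline
```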

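```yaml
job:
  variables:
    # Make Gradle keep its caches inside the project directory,
    # because cache paths must be inside the project
    GRADLE_USER_HOME: "$CI_PROJECT_DIR/.gradle"
  script:
    - echo "This job uses a cache."
  cache:
    key: binaries-cache-$CI_COMMIT_REF_SLUG
    paths:
      - .gradle/caches
```

Option needs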

https://docs.gitlab.com/ee/ci/yaml/#needs

needs lets a job depend on other jobs and their artifacts. For example, if an artifact is produced by a job in an earlier stage, the job that uses it can declare a dependency on that job.

It also speeds up the pipeline: a job with needs starts as soon as the jobs it lists have finished, without waiting for the whole previous stage to complete.
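A sketch of both effects, with hypothetical job names: a job with needs starts as soon as the jobs it lists finish, and needs: [] lets a job start right away, without waiting for any earlier stage:

```yaml
stages:
  - build
  - test

build_slow:
  stage: build
  script:
    - sleep 300

build_fast:
  stage: build
  script:
    - echo "fast build"

# Starts as soon as build_fast finishes, without waiting for build_slow
test_fast:
  stage: test
  needs: [build_fast]
  script:
    - echo "testing the fast build"

# Empty needs: starts immediately when the pipeline is created
lint:
  stage: test
  needs: []
  script:
    - echo "linting"
```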

```yaml
test-job1:
  stage: test
  needs:
    - job: build_job1
      artifacts: true

test-job2:
  stage: test
  needs:
    - job: build_job2
      artifacts: false

test-job3:
  needs:
    - job: build_job1
      artifacts: true
    - job: build_job2
    - build_job3
```

Services option

https://docs.gitlab.com/ee/ci/yaml/#services

This option starts additional Docker containers alongside the job, for example a Postgres database or docker:dind (Docker-in-Docker), which is needed to build Docker images. Since the job itself runs inside a Docker container, it cannot build images there directly, so it sends the build to this service instead.
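Services can also be configured in more detail. A sketch assuming a test job that needs Postgres: alias sets the host name the service is reachable at, and job variables are passed to the service container as well, so the official postgres image uses them to create the database. The job, database and user names are hypothetical, and trust authentication is only acceptable for a throwaway test database:

```yaml
db-test:
  stage: test
  # The postgres image is used for the job too, because it ships psql
  image: postgres:16
  services:
    - name: postgres:16
      alias: db
  variables:
    POSTGRES_DB: app_test
    POSTGRES_USER: runner
    # Allow connections without a password (test database only)
    POSTGRES_HOST_AUTH_METHOD: trust
  script:
    - psql -h db -U "$POSTGRES_USER" -d "$POSTGRES_DB" -c "SELECT 1;"
```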

```yaml
test-job1:
  stage: test
  services:
    - name: docker:dind
    - name: postgres:9.6
```

When option

https://docs.gitlab.com/ee/ci/yaml/#when

The next option, when, sets the simplest conditions for starting a job, for example when we want it to run only manually.
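Besides manual, when accepts other values. A sketch with hypothetical job and script names: delayed starts a job automatically after the pause set by start_in, and always runs a job regardless of whether earlier jobs failed:

```yaml
deploy_with_delay:
  stage: deploy
  script:
    - make deploy
  # Starts automatically 30 minutes after the previous stage completes
  when: delayed
  start_in: 30 minutes

collect_logs:
  stage: test
  script:
    - ./collect_logs.sh   # hypothetical script
  # Runs whether earlier jobs succeeded or failed
  when: always
```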

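```yaml
stages:
  - build
  - cleanup_build
  - deploy

deploy_job:
  stage: deploy
  script:
    - make deploy
  when: manual

cleanup_build_job:
  stage: cleanup_build
  script:
    # Placeholder for the real cleanup commands
    - echo "Cleaning up after a failed build"
  # Runs only if a job in an earlier stage failed
  when: on_failure
```

Rules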

https://docs.gitlab.com/ee/ci/yaml/#rules

More complex launch rules can be built using rules. Here you can use a simple if condition (the task will run if, say, it was launched from a specific branch) or track changes in files using changes (the task will run if a specific file has changed).
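Rules are checked from top to bottom, and the first matching rule decides what happens to the job; if no rule matches, the job is not added to the pipeline. A sketch with a hypothetical deployment job (make deploy is a placeholder, as in the when example):

```yaml
deploy-prod:
  stage: deploy
  script:
    - make deploy
  rules:
    # Tag pipelines: the job is added but waits for a manual start
    - if: $CI_COMMIT_TAG
      when: manual
    # Default branch: the job runs automatically (when: on_success)
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
    # Any other pipeline matches no rule, so the job is not added
```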

```yaml
job:
  script: echo "Hello, Rules!"
  rules:
    - if: $CI_MERGE_REQUEST_SOURCE_BRANCH_NAME =~ /^feature/
      when: never
      allow_failure: true
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
      changes:
        - Dockerfile
      when: manual
      allow_failure: true
```

How to shorten the script

Let's move on to the final topic: how to shorten the script without losing much of its readability. There are two approaches:

Use GitLab's built-in extends keyword https://docs.gitlab.com/ee/ci/yaml/#extends. It lets us create something like a function that holds frequently repeated options, which is convenient if the script contains many jobs of the same type. Options from extends are merged with the job's own options: hashes such as variables are merged key by key, while other keys, such as script, are taken from the job itself.

```yaml
.my_extend:
  stage: build
  variables:
    USERNAME: my_user
  script:
    - echo "extend script"

TASK_NAME:
  extends: .my_extend
  variables:
    VERSION: 123
    PASSWORD: my_pwd
  script:
    - echo "task script"
```

The resulting job, as GitLab sees it:

```yaml
TASK_NAME:
  stage: build
  variables:
    VERSION: 123
    PASSWORD: my_pwd
    USERNAME: my_user
  script:
    - echo "task script"
```
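extends also accepts a list of templates. When several of them define the same key, the template listed last wins. A sketch with hypothetical template names and a hypothetical docker runner tag:

```yaml
.alpine_job:
  image: alpine:3.19
  tags:
    - docker   # hypothetical runner tag

.main_only:
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH

# Gets image and tags from .alpine_job and rules from .main_only
publish:
  stage: deploy
  extends:
    - .alpine_job
    - .main_only
  script:
    - echo "Publishing from $CI_COMMIT_BRANCH"
```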

Use YAML anchors (&) and aliases (*) with the merge key <<. This is almost the same: we also insert a link to a shared block, but keys defined in the job overwrite the anchor's keys entirely instead of being merged with them. In some cases, for example when you need to completely replace the default set of variables, this approach is convenient. Anchors can be nested: an anchored block can itself merge another anchor, and at each level the locally defined keys overwrite the merged ones.

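```yaml
.my_extend: &my_extend
  stage: build
  variables:
    USERNAME: my_user
  script:
    - echo "extend script"

TASK_NAME:
  <<: *my_extend
  variables:
    VERSION: 123
    PASSWORD: my_pwd
  script:
    - echo "task script"
```

The resulting job, as GitLab sees it (USERNAME is gone, because variables was replaced as a whole):

```yaml
TASK_NAME:
  stage: build
  variables:
    VERSION: 123
    PASSWORD: my_pwd
  script:
    - echo "task script"
```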
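A sketch of nested anchors with hypothetical names. .build_base merges .base and replaces its variables, and build-app in turn merges .build_base, so the job gets the alpine image, the build stage and LOG_LEVEL set to debug:

```yaml
.base: &base
  image: alpine:3.19
  variables:
    LOG_LEVEL: info

.build_base: &build_base
  <<: *base
  stage: build
  # Replaces the whole variables block from .base
  variables:
    LOG_LEVEL: debug

build-app:
  <<: *build_base
  script:
    - echo "LOG_LEVEL=$LOG_LEVEL"
```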

Author: Denis Palaguta, Maxilect.

Thanks to the Maxilect DevOps team for their help in preparing and commenting on the article.

P.S. We publish our articles on several Runet sites. Subscribe to our page on VK or to our Telegram channel to learn about all publications and other news from Maxilect.