Introduction to Apple Spatial Computing

I have been doing iOS development for quite a long time and have personal experience developing for VR devices and building our own complex photogrammetry, yet this time I was frankly impressed by the long-awaited announcement of Apple's Vision Pro mixed reality headset at WWDC 2023. By and large, the company presented a new platform built on all the familiar modern iOS development tools. The ARKit, RealityKit, and SwiftUI puzzle pieces have finally come together, and I'm happy to share a selection of links to resources and starter materials. Before reading, of course, it is useful to dream a little about future applications that are sure to change the world. Get ready to dive in, or add this material to your bookmarks (which you will never read). Let's go!

Apple recently published detailed tutorials and videos for mixed reality (MR) developers, ahead of the SDK release planned for the end of June 2023. You can create multiple windows, add 3D content, or turn your app into a fully immersive VR/AR/MR scene. Your app can appear side by side with other apps in the Shared Space, or on its own in a Full Space. Vision Pro relies on frameworks such as SwiftUI, RealityKit, and ARKit to extend spatial computing and help developers build immersive apps and scenes. You can develop applications for Vision Pro with tools such as Xcode, Reality Composer Pro, and Unity. Apple will soon release the visionOS SDK, related tools, and documentation to give developers comprehensive technical support.

Apple has already made a large number of well-made videos available, such as this one:

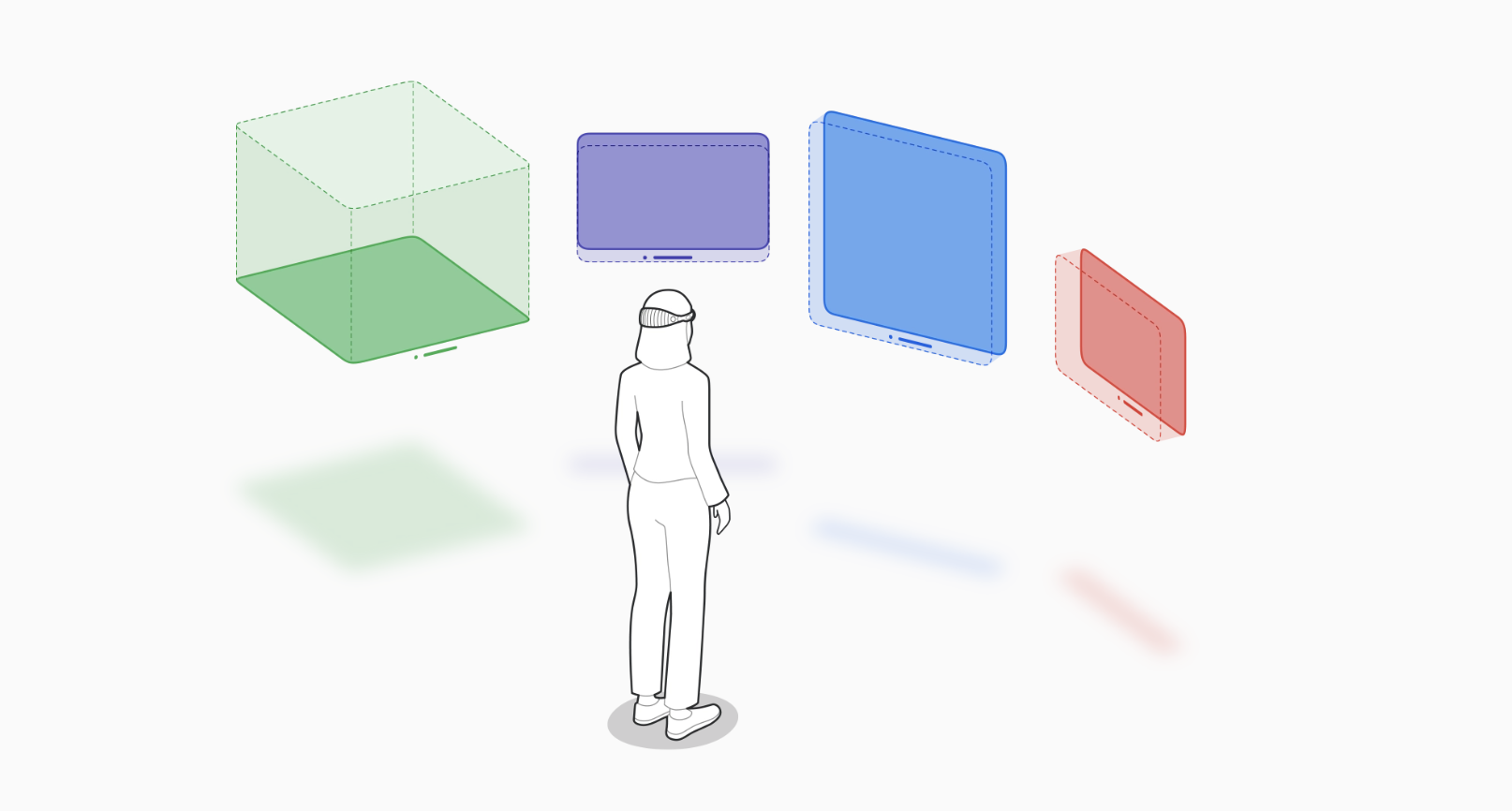

The course covers all aspects of mixed reality (MR) development, including the fundamentals of spatial computing. You will learn the basic elements that make up spatial computing (windows, volumes, and spaces) and how to use them to create immersive experiences.

An Overview of Spatial Computing Capabilities

visionOS provides an endless 3D space for creating new interactive and immersive experiences. You can discover the basics of spatial computing (windows, volumes, and spaces), learn how to use these elements in application development, and explore new Apple tools such as Xcode 15 and the new Reality Composer Pro.

Developers can create multiple windows, add 3D content, or turn an app into a fully immersive scene. The platform builds on SwiftUI, RealityKit, and ARKit to extend spatial computing, helping developers build compelling, deeply immersive apps. visionOS also emphasizes accessible design, providing a comfortable and seamless experience for all users.
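To make windows, volumes, and spaces more concrete, here is a minimal SwiftUI sketch of how the three scene types might be declared in a visionOS app. It assumes the visionOS SDK that ships with Xcode 15; the names `SpatialDemoApp`, `LauncherView`, `SceneID`, and `makeSphere` and the sphere content are hypothetical placeholders, not code from Apple's sessions.

```swift
import SwiftUI
import RealityKit

// Hypothetical identifiers used to open scenes by name.
enum SceneID {
    static let volume = "GlobeVolume"
    static let immersive = "ImmersiveSpace"
}

// In a real project, add @main to make this type the app's entry point.
struct SpatialDemoApp: App {
    // Lets the space switch between mixed and full immersion.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        // Window: a regular 2D SwiftUI window shown in the Shared Space.
        WindowGroup {
            LauncherView()
        }

        // Volume: a bounded 3D container that can be viewed from any angle.
        WindowGroup(id: SceneID.volume) {
            RealityView { content in
                content.add(makeSphere(radius: 0.2))
            }
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)

        // Space: while it is open, the app runs in a Full Space.
        ImmersiveSpace(id: SceneID.immersive) {
            RealityView { content in
                let sphere = makeSphere(radius: 0.5)
                // Roughly eye level, two meters in front of the user.
                sphere.position = [0, 1.5, -2]
                content.add(sphere)
            }
        }
        .immersionStyle(selection: $immersionStyle, in: .mixed, .full)
    }
}

// Builds a simple blue sphere entity (hypothetical helper).
@MainActor
func makeSphere(radius: Float) -> ModelEntity {
    ModelEntity(
        mesh: .generateSphere(radius: radius),
        materials: [SimpleMaterial(color: .blue, isMetallic: false)]
    )
}

struct LauncherView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 20) {
            Button("Open Volume") {
                openWindow(id: SceneID.volume)
            }
            Button("Enter Immersive Space") {
                Task {
                    // Opening a space is asynchronous and can fail; the result is ignored here.
                    _ = await openImmersiveSpace(id: SceneID.immersive)
                }
            }
        }
        .padding()
    }
}
```

The volume and the space are opened by identifier from ordinary SwiftUI buttons, so the 2D window acts as a launcher for the 3D content.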

Design for visionOS

Developing applications, games, and experiences for spatial computing in visionOS requires an understanding of its new elements and components.

Learn how to create great spatial computing apps, games, and experiences, and discover new input options and components. Whether it's your first time creating spatial experiences or you've been developing fully immersive apps for years, you'll be able to create magical hero moments, captivating soundscapes, human-centric user interfaces, and more with visionOS. The goal is to help users stay aware of their surroundings while exploring completely new worlds.

Development with Xcode 15

Many iOS developers dislike Xcode (and for good reason), but until alternative IDEs arrive, it is the tool to start developing for visionOS with. The sessions show how to add visionOS to your existing projects or create a completely new app, prototype in Xcode Previews, and import content from Reality Composer Pro. You can also use the visionOS simulator to evaluate your experiences in various simulated scenes and lighting conditions. Learn how to create tests and visualizations for collisions, occlusion, and scene understanding in your spatial content, and how to optimize its performance and efficiency.

Dive into SwiftUI and RealityKit

To get the most out of visionOS, you'll need to learn SwiftUI and RealityKit. These frameworks let developers build rich experiences with the spatial elements of visionOS: windows, volumes, and spaces. From the Model3D API to rendering 3D content with RealityView, these in-depth sessions prepare developers to create immersive experiences:

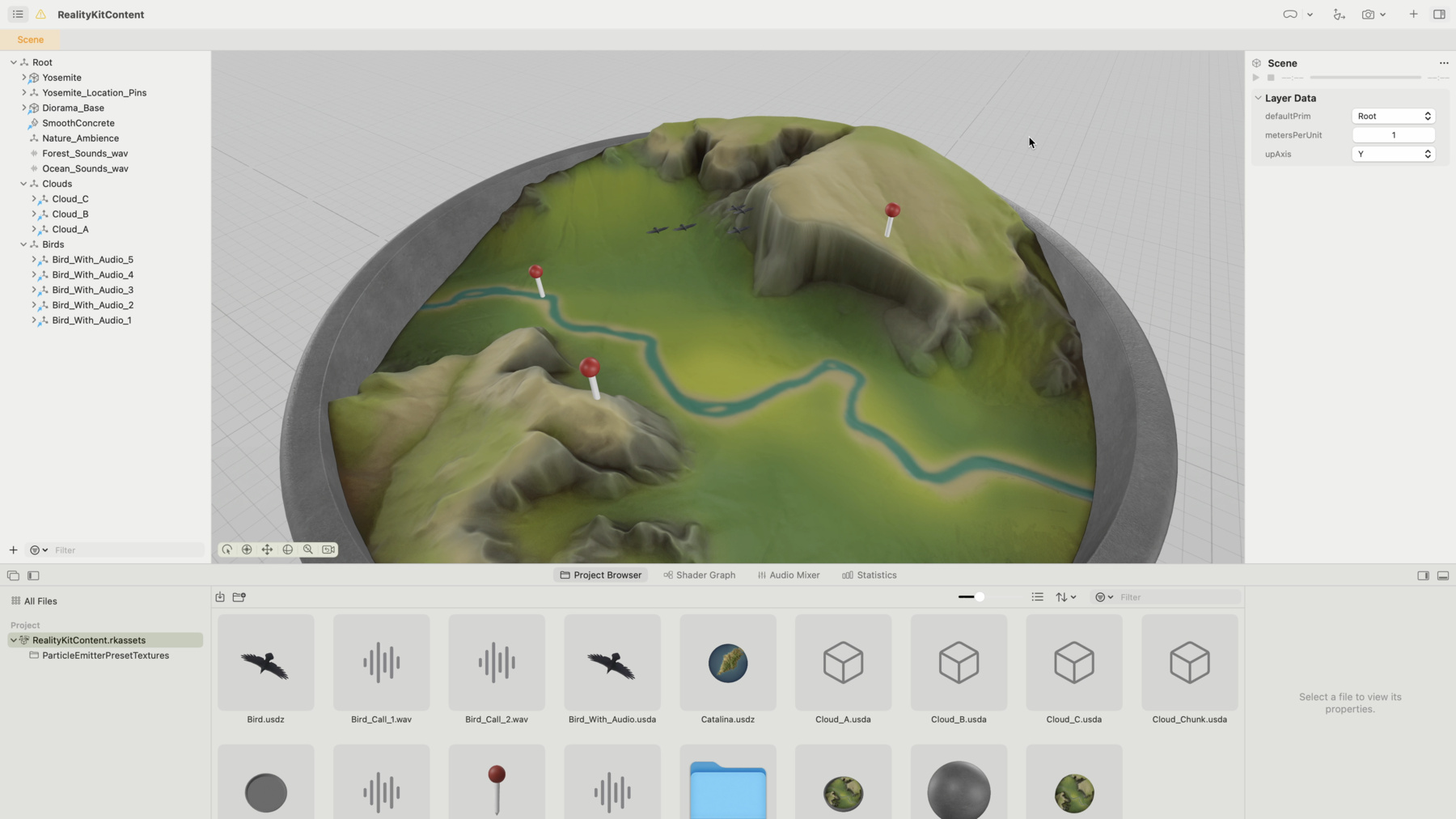
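As a rough illustration of the Model3D and RealityView APIs, the sketch below shows `Model3D` loading a bundled USDZ asset asynchronously, and a `RealityView` whose `update` closure reacts to SwiftUI state when the user taps an entity. It assumes the visionOS SDK; the asset name `"Robot"` and the view names are hypothetical.

```swift
import SwiftUI
import RealityKit

// Model3D loads a USDZ file asynchronously and shows a placeholder meanwhile.
// "Robot" is a hypothetical asset name bundled with the app.
struct RobotPreview: View {
    var body: some View {
        Model3D(named: "Robot") { model in
            model
                .resizable()
                .aspectRatio(contentMode: .fit)
        } placeholder: {
            ProgressView()
        }
    }
}

// RealityView builds RealityKit content once, then updates it as SwiftUI state changes.
struct TappableSphereView: View {
    @State private var isHighlighted = false

    var body: some View {
        RealityView { content in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            // An entity needs an input target and a collision shape to receive gestures.
            sphere.components.set(InputTargetComponent())
            sphere.generateCollisionShapes(recursive: false)
            content.add(sphere)
        } update: { content in
            // Runs again whenever isHighlighted changes.
            guard let sphere = content.entities.first as? ModelEntity else { return }
            let color: SimpleMaterial.Color = isHighlighted ? .orange : .blue
            sphere.model?.materials = [SimpleMaterial(color: color, isMetallic: false)]
        }
        .gesture(
            TapGesture()
                .targetedToAnyEntity()
                .onEnded { _ in isHighlighted.toggle() }
        )
    }
}
```

Keeping the tap state in SwiftUI and letting `update` apply it to the entity keeps the RealityKit scene a function of view state, which is the usual division of labor between the two frameworks.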

Reality Composer Pro

Reality Composer Pro is a new tool for previewing and preparing 3D content for visionOS apps. It ships with Xcode and helps you import and organize assets such as 3D models, materials, and sounds. Best of all, it integrates tightly with the Xcode build process to preview and optimize your visionOS assets. Developers can learn how to use it to create immersive content, add materials to objects, and bring Reality Composer Pro content into Xcode. You can also check out the latest Universal Scene Description (USD) updates on Apple platforms.
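For a sense of how Reality Composer Pro content reaches your code, here is a minimal sketch that loads an authored scene into a `RealityView`. It assumes the `RealityKitContent` Swift package and its `realityKitContentBundle` constant that Xcode 15's visionOS app template generates; the scene name `"Scene"` and the entity name `"Sphere"` are hypothetical and must match what you created in Reality Composer Pro.

```swift
import SwiftUI
import RealityKit
import RealityKitContent // Assumed: package generated by Xcode's visionOS app template

struct ComposerSceneView: View {
    var body: some View {
        RealityView { content in
            do {
                // Load a scene authored in Reality Composer Pro from the package bundle.
                let scene = try await Entity(named: "Scene", in: realityKitContentBundle)
                // Optionally find a named entity inside the scene and adjust it in code.
                if let sphere = scene.findEntity(named: "Sphere") {
                    sphere.scale = [0.5, 0.5, 0.5]
                }
                content.add(scene)
            } catch {
                print("Failed to load Reality Composer Pro scene: \(error)")
            }
        }
    }
}
```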

Getting started with visionOS in Unity

visionOS apps can be created directly in Unity. Thanks to deep integration between Unity and Apple frameworks, Unity developers can use their existing 3D scenes and assets to build apps or games for visionOS. Unity offers all the benefits of developing for Apple platforms, including access to native input and mixed reality features.

Beyond Unity, visionOS offers other ways to create immersive moments in games and media apps. Developers will learn how to use RealityKit to display 3D content, explore design considerations for visuals and motion, and use Metal or Unity to create fully immersive experiences.

Developing collaborative and productivity apps

Sharing and collaboration are an important part of visionOS: apps and games can make people feel as if they are together in the same space. By default, people can share any app window with other participants in a FaceTime call, just like on a Mac. With the GroupActivities framework, however, you can build the next generation of collaborative experiences.

Get started designing and developing for SharePlay on Apple Vision Pro by learning about the types of shared activities you can create in your app. Learn how to establish a common context between participants in your experiences and how you can support even more meaningful interactions in your app:

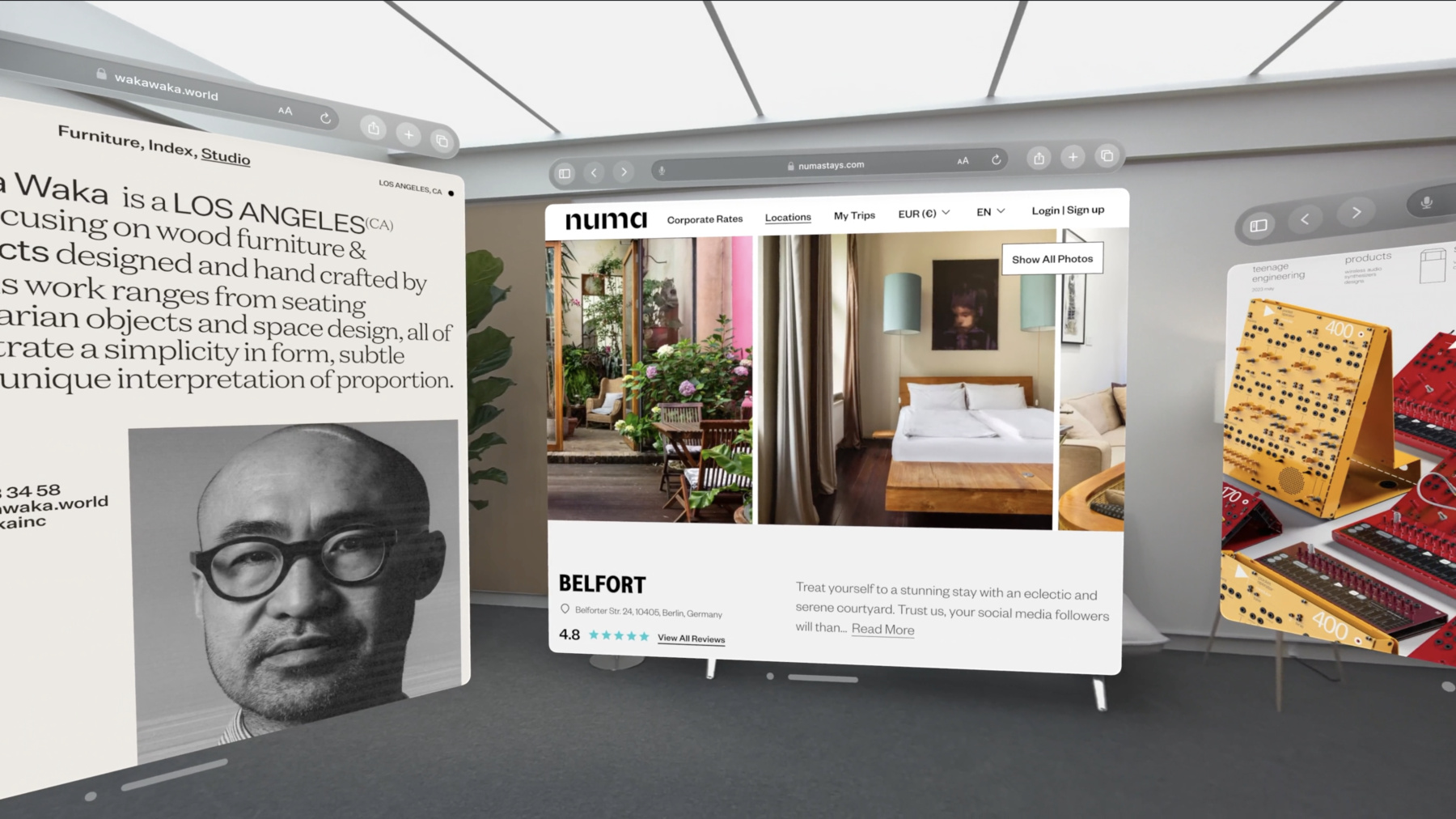
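To illustrate what a shared activity looks like in code, here is a minimal GroupActivities sketch: an activity type, activating it during a FaceTime call, joining sessions, and keeping a small piece of state in sync with `GroupSessionMessenger`. The activity identifier, `RotationMessage`, and `SharedSceneCoordinator` are hypothetical names, and a real app also needs the Group Activities capability enabled for its target.

```swift
import Foundation
import GroupActivities

// A hypothetical activity: exploring a 3D scene together over FaceTime.
struct ExploreSceneActivity: GroupActivity {
    static let activityIdentifier = "com.example.explore-scene"

    // Describes the activity in the system's SharePlay UI.
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.title = "Explore the Scene Together"
        metadata.type = .generic
        return metadata
    }
}

// A hypothetical message: the rotation of a shared model, in radians.
struct RotationMessage: Codable {
    var angle: Float
}

@MainActor
final class SharedSceneCoordinator {
    private var session: GroupSession<ExploreSceneActivity>?
    private var messenger: GroupSessionMessenger?
    private(set) var angle: Float = 0

    // Asks the system to start the activity for everyone on the call.
    func startSharing() async {
        do {
            // activate() reports whether the activity was started; ignored here.
            _ = try await ExploreSceneActivity().activate()
        } catch {
            print("Could not activate the activity: \(error)")
        }
    }

    // Joins each session as it arrives, whether started locally or by another participant.
    func observeSessions() async {
        for await session in ExploreSceneActivity.sessions() {
            let messenger = GroupSessionMessenger(session: session)
            self.session = session
            self.messenger = messenger
            session.join()

            // Apply rotation updates sent by other participants.
            Task {
                for await (message, _) in messenger.messages(of: RotationMessage.self) {
                    self.angle = message.angle
                }
            }
        }
    }

    // Broadcasts a local change so all participants share the same context.
    func rotate(to newAngle: Float) async {
        angle = newAngle
        try? await messenger?.send(RotationMessage(angle: newAngle))
    }
}
```

Sending small Codable messages like this is one way to establish the common context between participants; what state to share is up to the app.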

Working with the Web

With visionOS, web content can be experienced in a whole new way. Developers will learn how to optimize their sites for spatial computing, understand the platform's input model, and use Safari's developer features to prototype and test their experiences.

There are also changes to Quick Look, the system viewer for 3D content.

Running iPhone and iPad apps on visionOS

Existing iOS and iPadOS apps can run on visionOS. Developers will learn about framework dependencies and how people interact with apps designed for iPad. They will also learn how to optimize the overall experience of their iPad and iPhone apps on visionOS and improve their visuals.
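Once you add visionOS as a native destination (rather than running the unmodified iPad build), shared SwiftUI code can adapt per platform with conditional compilation. The sketch below, with hypothetical `LibraryView` and `LibraryItem` types, moves a filter control into an ornament below the window on visionOS and keeps it in the navigation bar on iPhone and iPad.

```swift
import SwiftUI

struct LibraryItem: Identifiable {
    let id = UUID()
    let name: String
    let isFavorite: Bool
}

struct LibraryView: View {
    let items: [LibraryItem]
    @State private var favoritesOnly = false

    // Pure filtering logic, shared by every platform.
    static func visible(_ items: [LibraryItem], favoritesOnly: Bool) -> [LibraryItem] {
        favoritesOnly ? items.filter(\.isFavorite) : items
    }

    var body: some View {
        NavigationStack {
            List(Self.visible(items, favoritesOnly: favoritesOnly)) { item in
                Text(item.name)
            }
            .navigationTitle("Library")
            #if os(visionOS)
            // visionOS only: the control floats in an ornament below the window.
            .ornament(attachmentAnchor: .scene(.bottom)) {
                Toggle("Favorites Only", isOn: $favoritesOnly)
                    .padding()
                    .glassBackgroundEffect()
            }
            #else
            // iPhone and iPad: the control stays in the navigation bar.
            .toolbar {
                Toggle("Favorites Only", isOn: $favoritesOnly)
            }
            #endif
        }
    }
}
```

Keeping the data logic platform-neutral and branching only on presentation keeps most of the code shared between the iPad and visionOS builds.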

Conclusion

Over the past 10 to 15 years, mobile phones have not fundamentally changed. We have watched smartphones get thinner and more powerful and their cameras sharper, then grow thick and heavy again, with screen sizes swelling to obscene proportions. Mobile phones and apps have become deeply embedded in people's lives and have followed the same path as the Web. It is too early to predict whether the new platform will succeed or fail; however, it is a genuinely interesting and far-reaching technology that will shape the development of mobile technologies and computing for many years to come. This is a unique moment, and conclusions can only be drawn years from now.

In any case, for many people visionOS is a breath of fresh air: a place where the most unusual app ideas, built around spatial experience, can become super-interesting projects with dizzying stories. In my opinion, the starting point is right now.

In my Telegram channel, I also periodically post about aspects of developing for visionOS and product case studies in a more concise format.