Integration Testing Pitfalls in Spring Boot

Some examples from a collection of problems that developers often encounter in the world of microservices testing.

Introduction – the testing pyramid, microservices and mortgages

In modern IT, microservice architecture has become the norm. It has many advantages, including flexibility and scalability, but fully testing products built on it is difficult and expensive. The cost of testing grows as we move from unit tests of individual services to broader acceptance tests and beyond. As a result, engineers carry additional responsibility for the quality of each service in the system, and the cost of a defect missed at a lower level of the testing pyramid increases many times over. The main principle of the pyramid: at the base are cheap unit tests, and there are many of them; the higher we go, the broader and more expensive the tests become, and the fewer of them there are.

In practice, this means that high-quality, reliable unit and integration tests for each service are vital to the success of the project; without them, development becomes much harder. Integration tests are like a building inspector checking the quality and integrity of a building after construction is completed: they verify that all components of the software are connected correctly and work together as they should, as in a well-built house.

And speaking of real estate: my name is Alexander Tanoshkin, and I have been a lead software engineer at Cian since 2018. I work on the marketplace of mortgage offers from banks, the Cian.Mortgage service, which lets clients choose the best mortgage conditions from various banks. The product architecture is built on microservices; we work with technologies such as Java/Kotlin/Spring, PostgreSQL, Kafka, Kubernetes and Yandex.Cloud. A lot of time has passed since the release of the first version of our product, and over that time we have accumulated an impressive collection of integration testing “traps”: problems that usually show up as unpredictable and non-obvious test failures, whose investigation can be exciting but extremely expensive. In this article, I will share some examples from the collection and offer practical recommendations on how to avoid them, so that you can focus on the main task: quality assurance.

To illustrate the testing pitfalls, I wrote an example microservice (CRUD API + asynchronous logic) on the now quite common Gradle/Spring Boot/Kotlin/Postgres/Kafka stack. The problems described here and their solutions are relevant for other technologies as well; only the implementation details will differ. The example service consists of the layers typical for such software: controllers, validators, business logic, repositories, etc. For the sake of brevity, this article contains only fragments of test code illustrating one trap or another; the full version of the code is available in the example project, integration-testing-pitfalls.

For testing, I use Spring, JUnit 5 and Testcontainers. The big advantage of this stack is the automated launch of realistic environments. The well-known @SpringBootTest annotation lets you test the application as a whole: it raises a context that unites all components, so the application is checked under the most realistic conditions. This is far from the only advantage: following the convention-over-configuration principle, we also get a dedicated backing service (for example, a full-fledged database) with just a few annotations. In the fragment below we declaratively request a container with a Postgres database and ask Spring Boot to configure the connection to it:

    @Container
    @ServiceConnection
    var postgresContainer = PostgreSQLContainer(DockerImageName.parse("postgres:13"))

    @SpringBootTest(webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)
    @Testcontainers
    class DirtyDbPitfall

In some tests, to reproduce a particular problem, I changed the default test execution order. Doing this is not recommended unless absolutely necessary:

    @TestMethodOrder(MethodOrderer.MethodName::class)

To run the examples from the project, you will need Java 21, a Docker environment, and the following commands:

Run all project tests:

    ./gradlew clean test

Granular launch (individual classes or methods):

    ./gradlew clean test --tests '*DirtyDbPitfall.createApiTest'
    BUILD SUCCESSFUL in 21s

    ./gradlew clean test --tests '*DirtyDbPitfall.removeApiTest'
    BUILD SUCCESSFUL in 21s

    ./gradlew clean test --tests '*DirtyDbPitfall'
    DirtyDbPitfall > Removal API test FAILED
        org.opentest4j.AssertionFailedError at DirtyDbPitfall.kt:79
    BUILD FAILED in 21s

The symptoms of a testing pitfall almost always include flaky ("blinking") tests, or two tests that are green when run separately but turn red when run together.

Let's start examining the collection.

At the end of the article, I have compiled a table with a brief summary of each trap, how to bypass it and the tools used.

Trap collection

Dirty database (DirtyDbPitfall.kt in the example)

The essence of the trap: multiple tests that make up a single test suite (for example, multiple @Test methods within one test class) exercise functionality tied to something external that has state (the most obvious example being a database), without clearing that state before each test runs.

How can this happen? For example, two tests appeared in one class as a result of merging two branches into the main one. Initially, each of them worked correctly both when run individually and when running all tests of the project, but after the merger they became incompatible due to mutual “claims” on the state of, for example, the database.

Example

One of the tests checks the create/read API: first, POST requests create the resources, and then the list is read:

    @Test
    @DisplayName("Creation API test")
    fun createApiTest() {
        // given
        val wantedEntitiesCount = 4
        // when
        val creationResponses = (1..4).map {
            restClient.post().uri("/persons").body(mapOf("name" to "John Doe")).retrieve().toBodilessEntity()
        }
        assertThat(creationResponses).allMatch { it.statusCode == HttpStatusCode.valueOf(200) }
        // then
        val businessEntities = restClient.get().uri("/persons").retrieve().body<List<Person>>()
        assertThat(businessEntities?.size).isEqualTo(wantedEntitiesCount)
    }

Another test also uses the API to create a new resource, but then deletes it and checks that no resources remain after the deletion:

    @Test
    @DisplayName("Removal API test")
    fun removeApiTest() {
        // given
        val creationResponse =
            restClient.post().uri("/persons").body(mapOf("name" to "John Doe")).retrieve().toEntity<Person>()
        assertThat(creationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
        val createdId = creationResponse.body?.id ?: fail { "Created person ID could not be null" }
        // when
        val removalResponse =
            restClient.delete().uri("/persons/{createdId}", createdId).retrieve().toBodilessEntity()
        assertThat(removalResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
        // then
        val businessEntities = restClient.get().uri("/persons").retrieve().body<List<Person>>()
        assertThat(businessEntities?.size).isEqualTo(0)
    }

If we run both tests, the build will fail with the following error:

    expected: 0
     but was: 4
        at java.base/jdk.internal.reflect.DirectConstructorHandleAccessor.newInstance(DirectConstructorHandleAccessor.java:62)
        at java.base/java.lang.reflect.Constructor.newInstanceWithCaller(Constructor.java:502)
        at tano.testingpitfalls.dirtydb.DirtyDbPitfall.removeApiTest(DirtyDbPitfall.kt:79)

The reason is that the tests use a real database running in a container and do not clean it before running. As a result, the data created by the previously executed test (creation API) remains in the database, and the deletion API test turns red.

Bypass

Clean the database using JUnit hooks (the @BeforeEach annotation). The cleaning logic can be encapsulated in a separate test component (in the example it is called SystemUnderTest). If the system later becomes more complex (for example, if soft delete is introduced), this keeps the test scenario code from being cluttered with cleanup implementation details. Even if a test scenario requires a certain initial state, it is better not to rely on the results of other tests, but to bring the system to the desired state explicitly (using the same JUnit hooks, or in the preparation step of the test method itself).

    @BeforeEach
    fun setUp() {
        systemUnderTest.cleanDb()
    }

Variations on this problem

Not only the database, but any resource that outlives a single test can become “contaminated.” The general principle is the same: clean it (if that cannot be done through a programmatic interface, you can re-create the container instead).
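
As a framework-free illustration of why execution order matters, here is a minimal sketch in plain Kotlin. FakePersonDb, createScenario, removeScenario and runSuite are hypothetical names that only imitate the shared Postgres container and the two tests above; the real SystemUnderTest.cleanDb() in the example project may well be implemented differently.

```kotlin
// Hypothetical in-memory stand-in for the containerized database shared by a test class.
class FakePersonDb {
    private val persons = mutableMapOf<Long, String>()
    private var nextId = 1L

    fun insert(name: String): Long {
        val id = nextId++
        persons[id] = name
        return id
    }

    fun delete(id: Long) { persons.remove(id) }
    fun count(): Int = persons.size
    fun clean() = persons.clear()
}

// Imitates createApiTest: four inserts, then expect exactly four rows.
fun createScenario(db: FakePersonDb): Boolean {
    repeat(4) { db.insert("John Doe") }
    return db.count() == 4
}

// Imitates removeApiTest: insert, delete, then expect an empty table.
fun removeScenario(db: FakePersonDb): Boolean {
    val id = db.insert("John Doe")
    db.delete(id)
    return db.count() == 0
}

// Runs both scenarios against one shared database, optionally cleaning before each one.
fun runSuite(cleanBeforeEach: Boolean): List<Boolean> {
    val db = FakePersonDb()
    return listOf(::createScenario, ::removeScenario).map { scenario ->
        if (cleanBeforeEach) db.clean()
        scenario(db)
    }
}

fun main() {
    println(runSuite(cleanBeforeEach = false)) // [true, false]
    println(runSuite(cleanBeforeEach = true))  // [true, true]
}
```

Without the cleanup step the second scenario sees the four rows left by the first one, which is the same "expected: 0 but was: 4" failure as in the real test.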

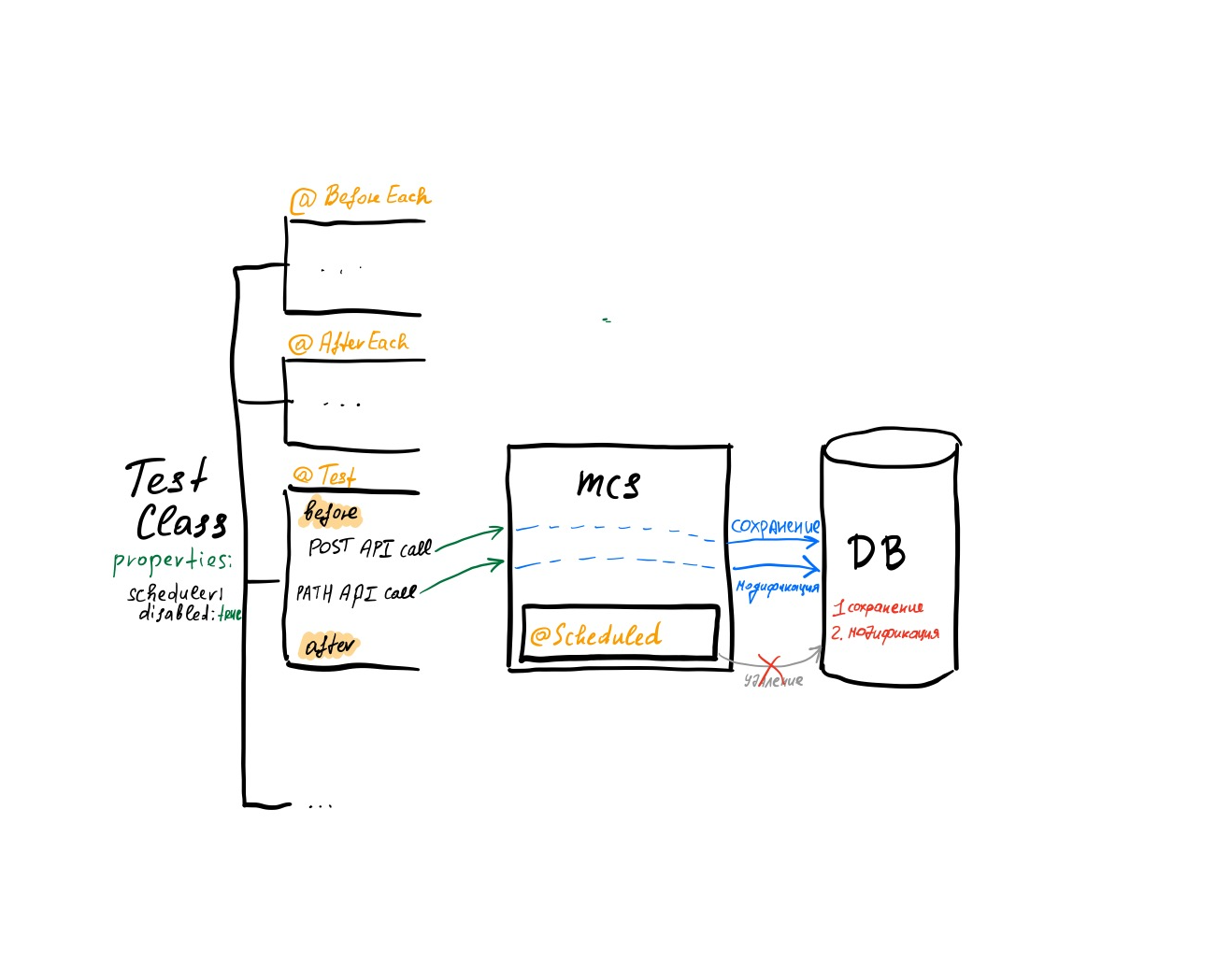

Unexpected scheduler (SchedulerPitfall.kt)

The essence of the trap: The service has a scheduler that runs operations on a schedule or at a fixed frequency. As part of a test that tests something not directly related to the work of the scheduler, we are faced with side effects of the functions initiated by the scheduler (for example, the deletion of data that we intended to check in the test).

How can this happen? For example, the scheduler was added after the tests it now affects had already been written. Another option is that its launch parameters changed.

Example

The example project contains a class LeadRemovalSchedulerService that initiates the removal of users with a certain status. For demonstration purposes it is configured to run very frequently (once every 10 milliseconds):

    class LeadRemovalSchedulerService(
        private val personService: PersonService,
    ) {
        private val logger = KotlinLogging.logger {}

        @Scheduled(fixedRate = 10)
        fun removeLeads() {
            val count = personService.removeLeads()
            if (count > 0) {
                logger.info { "Removed $count leads" }
            }
        }
    }

The integration test creates an entry via the API and then tries to modify it:

    @Test
    fun testModification() {
        // given
        val creationResponse =
            restClient.post().uri("/persons").body(mapOf("name" to "John Doe")).retrieve().toEntity<Person>()
        Assertions.assertThat(creationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
        val createdId = creationResponse.body?.id ?: fail { "Created person ID could not be null" }
        // when
        val modificationResponse =
            restClient.patch().uri("/persons/{createdId}", createdId).body(mapOf("status" to "CLIENT")).retrieve()
                .toBodilessEntity()
        // then
        Assertions.assertThat(modificationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
    }

But the test fails: between the two requests, the scheduler deleted the record from the database:

    org.springframework.web.client.HttpServerErrorException$InternalServerError: 500 Internal Server Error: "{"timestamp":"2024-03-14T06:41:38.650+00:00","status":500,"error":"Internal Server Error","path":"/persons/1"}"

    Servlet.service() for servlet [dispatcherServlet] in context with path [] threw exception [Request processing failed: tano.testingpitfalls.domain.IllegalInputDataException: No person with ID 1 found] with root cause
    tano.testingpitfalls.domain.IllegalInputDataException: No person with ID 1 found
        at tano.testingpitfalls.service.PersonService.modifyPersonStatus(PersonService.kt:30)

Bypass

The scheduler's behavior should be predictable. To achieve this, you can introduce a configuration property that allows you to disable it (at least for test purposes):

    @Configuration
    @ConditionalOnProperty(prefix = "scheduler", name = ["disabled"], havingValue = "false", matchIfMissing = true)
    @EnableScheduling
    class SchedulerConfiguration

    @SpringBootTest(
        webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT,
        properties = ["scheduler.disabled=true"]
    )

If you need to check the operation of the scheduler itself, write a separate test for it. The scheduler's parameters (frequency, schedule) should also be moved into the configuration and checked separately from other test scenarios.
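
The effect of such a switch can be reproduced without Spring. The following is only a plain-Kotlin simulation under stated assumptions: PersonStore and modificationSucceeds are hypothetical names, a ScheduledExecutorService plays the role of @Scheduled(fixedRate = 10), and Thread.sleep stands in for the latency between the two HTTP calls.

```kotlin
import java.util.concurrent.ConcurrentHashMap
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit

// Hypothetical in-memory stand-in for the persons table.
class PersonStore {
    private val statuses = ConcurrentHashMap<Long, String>()

    fun create(id: Long) { statuses[id] = "LEAD" }

    // Imitates the idea of PersonService.removeLeads(): delete every LEAD, return the count.
    fun removeLeads(): Int {
        val leadIds = statuses.filterValues { it == "LEAD" }.keys
        leadIds.forEach { statuses.remove(it) }
        return leadIds.size
    }

    // Returns false when the person no longer exists.
    fun modifyStatus(id: Long, status: String): Boolean =
        statuses.computeIfPresent(id) { _, _ -> status } != null
}

// Runs the "create, then modify" scenario with the background job switched on or off.
fun modificationSucceeds(schedulerEnabled: Boolean): Boolean {
    val store = PersonStore()
    val executor = Executors.newSingleThreadScheduledExecutor()
    if (schedulerEnabled) {
        // Same cadence as the article's scheduler: every 10 milliseconds.
        executor.scheduleAtFixedRate({ store.removeLeads() }, 0, 10, TimeUnit.MILLISECONDS)
    }
    try {
        store.create(1L)
        Thread.sleep(200) // stands in for the gap between the POST and the PATCH
        return store.modifyStatus(1L, "CLIENT")
    } finally {
        executor.shutdownNow()
    }
}

fun main() {
    println("scheduler on:  " + modificationSucceeds(schedulerEnabled = true))
    println("scheduler off: " + modificationSucceeds(schedulerEnabled = false))
}
```

With the background job running, the freshly created lead is almost certainly gone by the time the modification arrives; with the job disabled, the scenario depends only on its own actions.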

What time is it now?! (ClockPitfall.kt)

The essence of the trap: the service has functionality that depends on the current date and/or time, but this is not controlled at the test level, so the test result depends on when the test runs.

How can this happen? The most likely scenario is that time-dependent functionality was added after a basic scenario and its tests had already been implemented.

Example

In the example project, the PATCH API (@PatchMapping("/{personId}")) lets you modify the status field of a person. In the corresponding service (PersonService) this causes an additional side effect: sending a greeting. We record the result of this step in the database:

    @Transactional
    fun modifyPersonStatus(personId: Long, newPersonStatus: PersonStatus): PersonEntity {
        val personForModification = personEntityRepository.findByIdOrNull(id = personId)
            ?: throw IllegalInputDataException("No person with ID $personId found")
        personForModification.status = newPersonStatus
        if (newPersonStatus == PersonStatus.CLIENT) {
            val greetingSent = greetingService.greet(name = personForModification.name)
            personForModification.greetingSent = greetingSent
        }
        return personForModification
    }

The integration test checks the operation of the corresponding API by checking the value of the greetingMessageSent field:

    @Test
    fun testGreetings() {
        // given
        val creationResponse =
            restClient.post().uri("/persons").body(mapOf("name" to "John Doe")).retrieve().toEntity<Person>()
        assertThat(creationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
        val createdId = creationResponse.body?.id ?: fail { "Created person ID could not be null" }
        // when
        val modificationResponse =
            restClient.patch().uri("/persons/{createdId}", createdId).body(mapOf("status" to "CLIENT")).retrieve()
                .toEntity<Person>()
        assertThat(modificationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
        // then
        assertThat(modificationResponse.body?.greetingMessageSent).isEqualTo(true)
    }

To see where the trap is, let's look at the greet method of GreetingService. The logic is simple: if the user has become our client, we congratulate them by sending a message:

    fun greet(name: String): Boolean {
        if (clock.workingHours()) {
            // We will skip the sending logic itself for the sake of simplicity
            logger.info { "Greeting message for $name was sent successfully" }
            return true
        } else {
            logger.info { "Greeting message for $name was not sent because it's not working hours" }
            return false
        }
    }

The key point here is the call to clock.workingHours(). This is an extension function that determines whether it is currently working hours:

    fun Clock.workingHours(): Boolean {
        val hour = this.instant().atZone(this.zone).hour
        return hour in 9..18
    }

Please note: in our example the clock bean is already defined and configured (ClockConfiguration), and GreetingService uses it to decide whether to disturb the user or not:

    @Bean
    fun clock(): Clock {
        // To reproduce the clock pitfall, we create a fixed clock set to nighttime;
        // in real life we would use the system default clock.
        val nightTime = ZonedDateTime.now().withHour(2).withMinute(0).withSecond(0).withNano(0)
        return Clock.fixed(nightTime.toInstant(), ZoneId.systemDefault())
    }

To make the trap reproducible, the clock here is always configured to show nighttime. In reality, you may encounter situations where there is no clock component in the context at all, or where it is defined and configured according to the time zone adopted in the project, or in UTC.
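
To see how strongly the outcome depends on the clock, here is a small plain-Kotlin sketch. The workingHours extension is copied from the article; clockFixedAt is a hypothetical helper, and the fixed date and the UTC zone are arbitrary assumptions chosen only to keep the example deterministic.

```kotlin
import java.time.Clock
import java.time.LocalDate
import java.time.LocalTime
import java.time.ZoneOffset
import java.time.ZonedDateTime

// Same extension as in the article: any hour from 9 to 18 inclusive counts as working time.
fun Clock.workingHours(): Boolean {
    val hour = this.instant().atZone(this.zone).hour
    return hour in 9..18
}

// Hypothetical helper: a clock frozen at the given hour (UTC, arbitrary fixed date).
fun clockFixedAt(hour: Int): Clock {
    val moment = ZonedDateTime.of(LocalDate.of(2024, 3, 14), LocalTime.of(hour, 0), ZoneOffset.UTC)
    return Clock.fixed(moment.toInstant(), ZoneOffset.UTC)
}

fun main() {
    println(clockFixedAt(2).workingHours())  // false: the greeting would not be sent
    println(clockFixedAt(10).workingHours()) // true: the greeting would be sent
    println(clockFixedAt(19).workingHours()) // false: just after the working day
}
```

The same code path returns different results purely because of the injected clock, which is why a test that does not control the clock passes or fails depending on when it runs.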

Bypass

Setting the clock should be part of preparing the test scenario: we can override the clock component in the test configuration and adjust it to the needs of a specific test (TestClockConfiguration.kt):

    @Bean
    @Primary
    fun workingHoursClock(): Clock {
        val workingTime = ZonedDateTime.now().withHour(10).withMinute(0).withSecond(0).withNano(0)
        return Clock.fixed(workingTime.toInstant(), ZoneId.systemDefault())
    }

Here the clock always shows working hours. Import this configuration in the tests, and the problem is solved:

    @SpringBootTest(webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)
    @Testcontainers
    @ActiveProfiles("test")
    @Import(TestClockConfiguration::class)
    class ClockPitfall

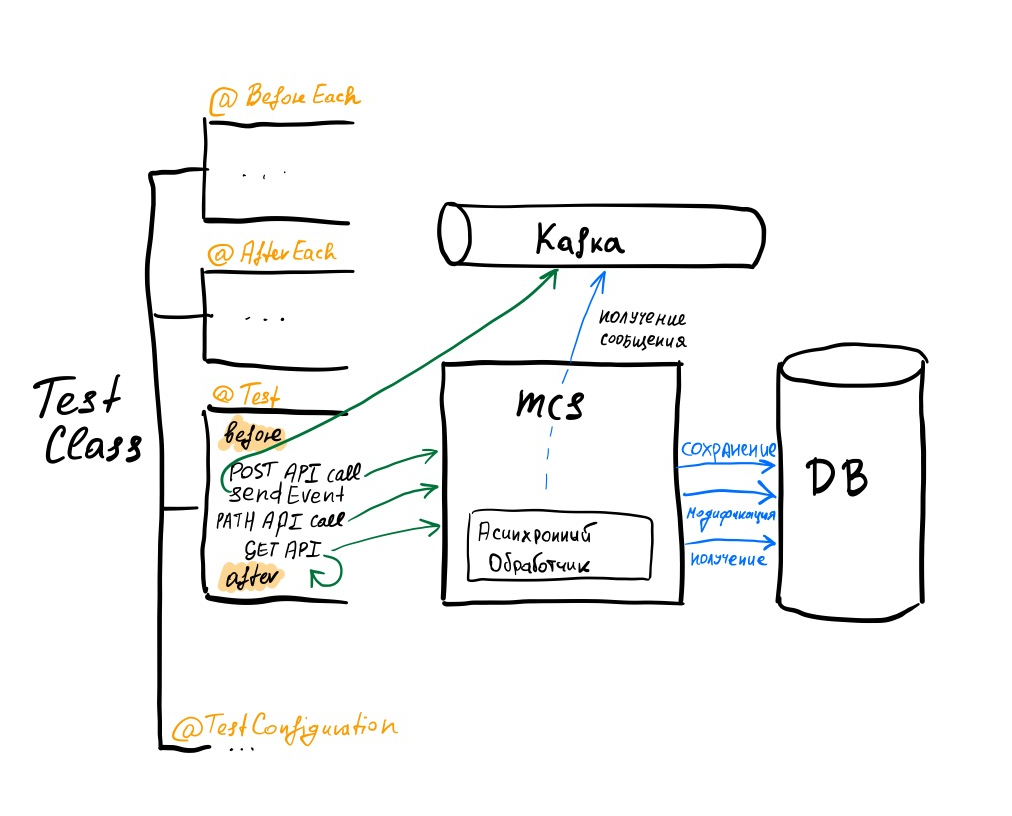

We couldn’t wait… (DidNotWaiPitfall.kt)

The essence of the trap: the service has an asynchronous handler subscribed to events that arrive from other services through a message broker (RMQ/Kafka). In integration tests, we test the handler by programmatically sending messages to the broker (as if they came from an external service). Since the event handler is asynchronous, we cannot make the assertion right away; we need to wait.

How can this happen? It is easy to imagine a situation where the asynchronous handler initially manages to finish in time even with a synchronous assertion in the test (for example, because additional actions preceded the check of the final state), but later it no longer does, and the tests begin to fail or become flaky.

Example

The example project has the LoyaltyProgramEnteredHandler service:

    @Service(EVENT_TYPE_LOYALTY_PROGRAM_ENTERED)
    class LoyaltyProgramEnteredHandler(
        private val personService: PersonService
    ) : EventHandler {
        private val logger = KotlinLogging.logger {}

        override fun handleEvent(event: Event) {
            val personId = event.personId
            val welcomeBonuses = personService.accrueWelcomeBonuses(personId = personId)
            logger.info { "Successfully handled event $event, added $welcomeBonuses" }
        }
    }

It is called by a functional Consumer bean:

    @Bean
    fun processEvents() = Consumer<Event> {
        eventDispatcher.processEvent(event = it)
    }

This bean, in turn, receives messages from a Kafka topic (using Spring Cloud Stream with the Kafka binder):

    spring:
      cloud:
        function:
          definition: processEvents
        stream:
          bindings:
            processEvents-in-0:
              group: ${spring.application.name}
              destination: events

The test configuration also has an additional binding, which allows messages to be sent from a test scenario:

    ...
    spring:
      cloud:
        function:
          definition: processEvents
        stream:
          bindings:
            ...
            events-out-0:
              destination: events

In the test itself (DidNotWaiPitfall.waitingPitfall) we check the side effect of the handler's work.

First, we create some data, bring it into the required state, and check the initial value of the field that we expect the handler to change:

    val creationResponse =
        restClient.post().uri("/persons").body(mapOf("name" to "John Doe")).retrieve().toEntity<Person>()
    assertThat(creationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
    val createdId = creationResponse.body?.id ?: fail { "Created person ID could not be null" }
    val modificationResponse =
        restClient.patch().uri("/persons/{createdId}", createdId).body(mapOf("status" to "CLIENT")).retrieve()
            .toEntity<Person>()
    assertThat(modificationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
    assertThat(modificationResponse.body?.bonusBalance).isEqualTo(0)

Next, we send a message (supposedly from an external service) to Kafka using the binding described above:

    fun sendEvent(eventType: String, userId: Long) {
        streamBridge.send("events-out-0", Event(personId = userId, type = eventType))
    }

Then we check the side effect by calling the GET API:

    val resultingPerson = restClient.get().uri("/persons/{createdId}", createdId).retrieve().toEntity<Person>()
    assertThat(resultingPerson.body?.bonusBalance).isEqualTo(100)

As a result, our test fails with the following error:

    Expected :100L
    Actual   :0L

    org.opentest4j.AssertionFailedError:
    expected: 100L
     but was: 0L

Bypass

One way out of this situation: if the assertion does not succeed on the first attempt, wait and try again, repeating until the assertion passes or a timeout expires.

You don't need to write a lot of boilerplate code for this; just use a library such as Awaitility:

    await untilAsserted {
        val resultingPerson = restClient.get().uri("/persons/{createdId}", createdId).retrieve().toEntity<Person>()
        assertThat(resultingPerson.body?.bonusBalance).isEqualTo(100)
    }

Here, with fairly reasonable defaults (Awaitility polls every 100 ms and gives up after 10 seconds), the GET request and the assertion are repeated until the assertion passes or the timeout expires.
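
To make the mechanism concrete, here is a rough plain-Kotlin sketch of what such a retry loop does. This is not Awaitility's implementation or API: retryUntilAsserted and awaitBonus are hypothetical names, and a background thread with a 300 ms delay stands in for the asynchronous Kafka handler.

```kotlin
import java.time.Duration
import java.util.concurrent.atomic.AtomicLong
import kotlin.concurrent.thread

// Hypothetical helper: re-runs the assertion until it stops throwing or the timeout expires.
fun retryUntilAsserted(
    timeout: Duration = Duration.ofSeconds(10),
    pollInterval: Duration = Duration.ofMillis(100),
    assertion: () -> Unit,
) {
    val deadline = System.nanoTime() + timeout.toNanos()
    while (true) {
        try {
            assertion()
            return // the assertion passed, stop polling
        } catch (e: AssertionError) {
            if (System.nanoTime() >= deadline) throw e // give up and report the last failure
            Thread.sleep(pollInterval.toMillis())
        }
    }
}

// Simulates the scenario: the balance is updated asynchronously, the check polls for it.
fun awaitBonus(): Long {
    val bonusBalance = AtomicLong(0)
    // Stands in for the asynchronous handler that accrues the welcome bonus a bit later.
    thread {
        Thread.sleep(300)
        bonusBalance.set(100)
    }
    retryUntilAsserted {
        val actual = bonusBalance.get()
        if (actual != 100L) throw AssertionError("expected: 100 but was: $actual")
    }
    return bonusBalance.get()
}

fun main() {
    println("balance = ${awaitBonus()}")
}
```

An immediate check right after starting the background work would see 0; the loop simply gives the asynchronous side time to finish while still failing the test if it never does.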

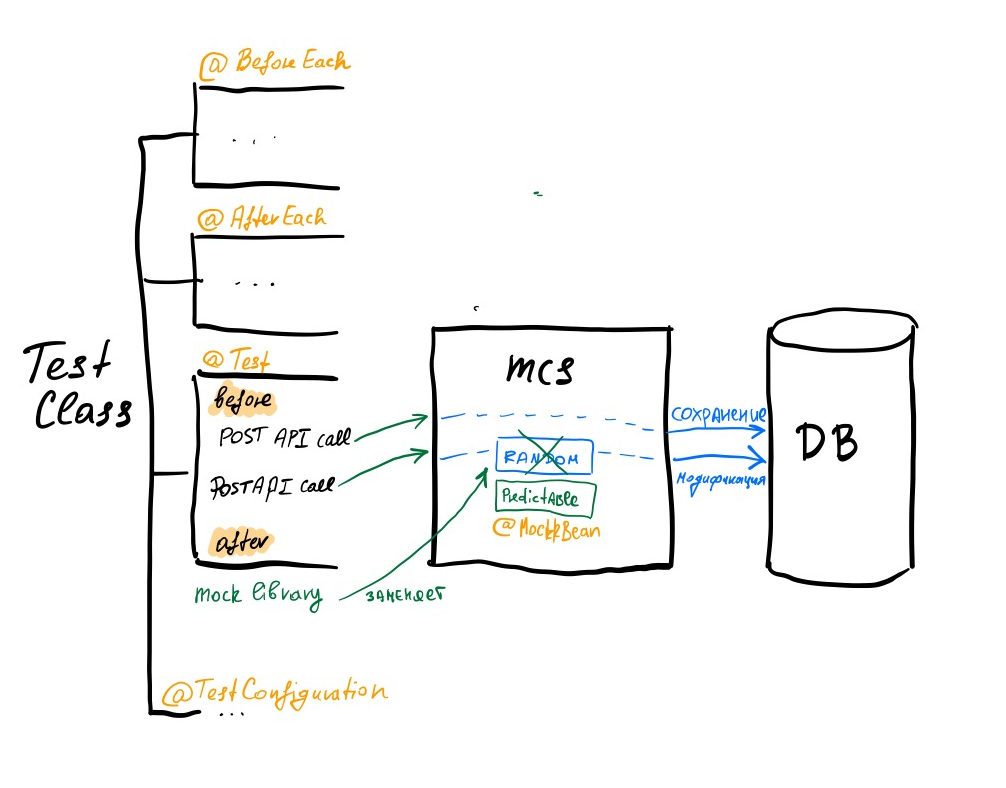

Unpredictable component (UnpredictablePitfall.kt)

The essence of the trap: in the service there is a component that generates unpredictable values, but in the test you want to predetermine its behavior. In a sense, the previous example with a clock can also be classified in this category, but here different tools will be used to bypass it, so it is highlighted separately.

How can this happen? Perhaps as a result of the evolution of the test, it became necessary to test a field related directly to the “unpredictable”.

Example

The integration-testing-pitfalls project has functionality for confirming a user's contact details:

    @Transactional
    fun confirmEmail(personId: Long, email: String): EmailInformation {
        val foundPerson = personEntityRepository.findByIdOrNull(id = personId)
            ?: throw IllegalInputDataException("No person with ID $personId found")
        return foundPerson.emails.getOrElse(email) {
            val confirmationId = confirmationIdGenerator.generateConfirmationId()
            val emailInformation = EmailInformation(
                email = email,
                confirmationId = confirmationId,
                isVerified = false
            )
            foundPerson.emails[email] = emailInformation
            // We will skip the confirmation logic for now
            return emailInformation
        }
    }

Here we are interested in how the confirmation ID is generated:

    fun generateConfirmationId() = UUID.randomUUID().toString()

In the controller test, we want to check the confirmationCode field, which is impossible without overriding the behavior of the generator:

    @Test
    fun testUnpredictable() {
        // given
        val creationResponse =
            restClient.post().uri("/persons").body(mapOf("name" to "John Doe")).retrieve().toEntity<Person>()
        Assertions.assertThat(creationResponse.statusCode).isEqualTo(HttpStatusCode.valueOf(200))
        val createdId = creationResponse.body?.id ?: fail { "Created person ID could not be null" }
        val email = "john@domain.com"
        val wantedConfirmationId = "CONFIRMATION_ID"
        // when
        val emailResponse =
            restClient.post().uri("/persons/{createdId}/email", createdId).body(mapOf("email" to email)).retrieve()
                .toEntity<EmailInfo>()
        // then
        Assertions.assertThat(emailResponse.body?.isVerified).isEqualTo(false)
        Assertions.assertThat(emailResponse.body?.email).isEqualTo(email)
        Assertions.assertThat(emailResponse.body?.confirmationCode).isEqualTo(wantedConfirmationId)
    }

Unlike the clock example, this time we will use the capabilities of the mocking library and define a bean in the context with the following construct:

    @MockkBean
    private lateinit var confirmationIdGenerator: ConfirmationIdGenerator

The advantage of this approach is the ability to use the full power of the mock library to dynamically override the behavior of our component:

    every { confirmationIdGenerator.generateConfirmationId() } returns wantedConfirmationId
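
The underlying idea, replacing the unpredictable collaborator with a predictable one, can also be sketched without Spring or MockK. In this simplified illustration the generator is modelled as a fun interface and confirmEmail is reduced to a pure function; both are assumptions made for the example, not the project's actual code.

```kotlin
import java.util.UUID

// Assumed shape of the generator; `fun interface` lets a test pass a lambda instead of a mock.
fun interface ConfirmationIdGenerator {
    fun generateConfirmationId(): String
}

// Production-like implementation: a new random UUID on every call.
val randomGenerator = ConfirmationIdGenerator { UUID.randomUUID().toString() }

data class EmailInformation(val email: String, val confirmationId: String, val isVerified: Boolean)

// Simplified, hypothetical stand-in for PersonService.confirmEmail (no repository, no person lookup).
fun confirmEmail(email: String, generator: ConfirmationIdGenerator) =
    EmailInformation(email = email, confirmationId = generator.generateConfirmationId(), isVerified = false)

fun main() {
    // A hand-written stub makes the "unpredictable" value predictable.
    val stub = ConfirmationIdGenerator { "CONFIRMATION_ID" }
    println(confirmEmail("john@domain.com", stub))

    // With the random generator only the format can be asserted, not the value itself.
    println(confirmEmail("john@domain.com", randomGenerator).confirmationId.length)
}
```

A mock bean does the same substitution inside the Spring context and additionally lets each test redefine the returned value on the fly.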

Conclusions

At each level of the testing pyramid, it is necessary to use appropriate, available technologies and automation tools and to follow industry best practices. Only such an integrated approach delivers high quality and creates a reliable foundation for the development of the project. During integration testing, a developer may run into the difficulties and pitfalls described in this article. Below is a table with a brief description of the traps and ways to bypass them:

| Trap | The essence | How to get around | Tools |
|---|---|---|---|
| Dirty database | Several tests check service functions that work with state, but the state is not cleared between tests | Use test framework mechanisms to clean up state before executing a test | @BeforeEach |
| Unexpected scheduler | Tests interact with a specific entity via the API, while the scheduler intervenes and changes the entity's attributes or even deletes it, which is why the tests fail | Test the scheduler and the main functionality of the service separately: make the scheduler switchable at the configuration level, and test its work separately, calling it explicitly | @ConditionalOnProperty, @ActiveProfiles("test") |
| What time is it now?! | Tests check an API whose implementation contains an algorithm that depends on the current time/date | Redefine the clock component and set it up to ensure predictable tests | @TestConfiguration |
| Didn't wait… | There is an asynchronous handler; the test tries to check the results of its work without knowing whether it has already completed | Using declarative means, organize a waiting loop with a timeout | testImplementation("org.awaitility:awaitility-kotlin:4.2.1") |
| Unpredictable component | There is a component in the system (for example, a random number generator) whose result we want to check by changing its behavior dynamically in different tests | Use a mock library to place a component in the context whose behavior can be changed dynamically through the library's API | @MockkBean |


I hope the information was useful and interesting. I invite you to comment – let's discuss difficult situations with integration tests. Thanks for your support!