How not to drown in a sea of analytics events

A large product with many services and many teams is always hard to work on. The larger the product, the more specialists work on it, and the narrower each specialist's area of responsibility becomes. As a result, different specialists looking at the same feature can see very different nuances.

My name is Evgeniy Mochalin. I work in the front-end technical team of the medical company SberHealth. In this article, I want to share how we built processes within the team so that work on all of the company's products is transparent, standardized and clear to every employee.

Our context

SberHealth is one of the most popular medical services in Russia. The company provides services at the intersection of medicine and IT, including:

online consultations with doctors of various profiles, including narrow specializations;

prompt online consultations with on-duty therapists and pediatricians—you can contact them within a few minutes;

booking, in a few clicks, of an in-person appointment with the chosen doctor, as well as diagnostics, medical procedures and tests;

remote monitoring of patients with chronic diseases, with continuous tracking of their health indicators and prompt response to any changes.

The entire pool of available services is developed by:

more than 10 product teams;

about 30 front-end developers who work with more than 60 front-end repositories.

Such a large team gives us more opportunities to improve our own services.

Each product has its own cross-functional team, and for a large product there may be several. This separation helps each employee effectively concentrate on their product.

Horizontally, there is a division into specialties: front end, back end, analytics and others. This makes it possible to form guilds and share knowledge between teams, and to develop best practices for using technology and solving problems.

However, in some aspects this can create a number of difficulties: the more products and events, the more difficult it is to track them.

For clarity, let's look at a simple example.

Analysis of an example close to reality

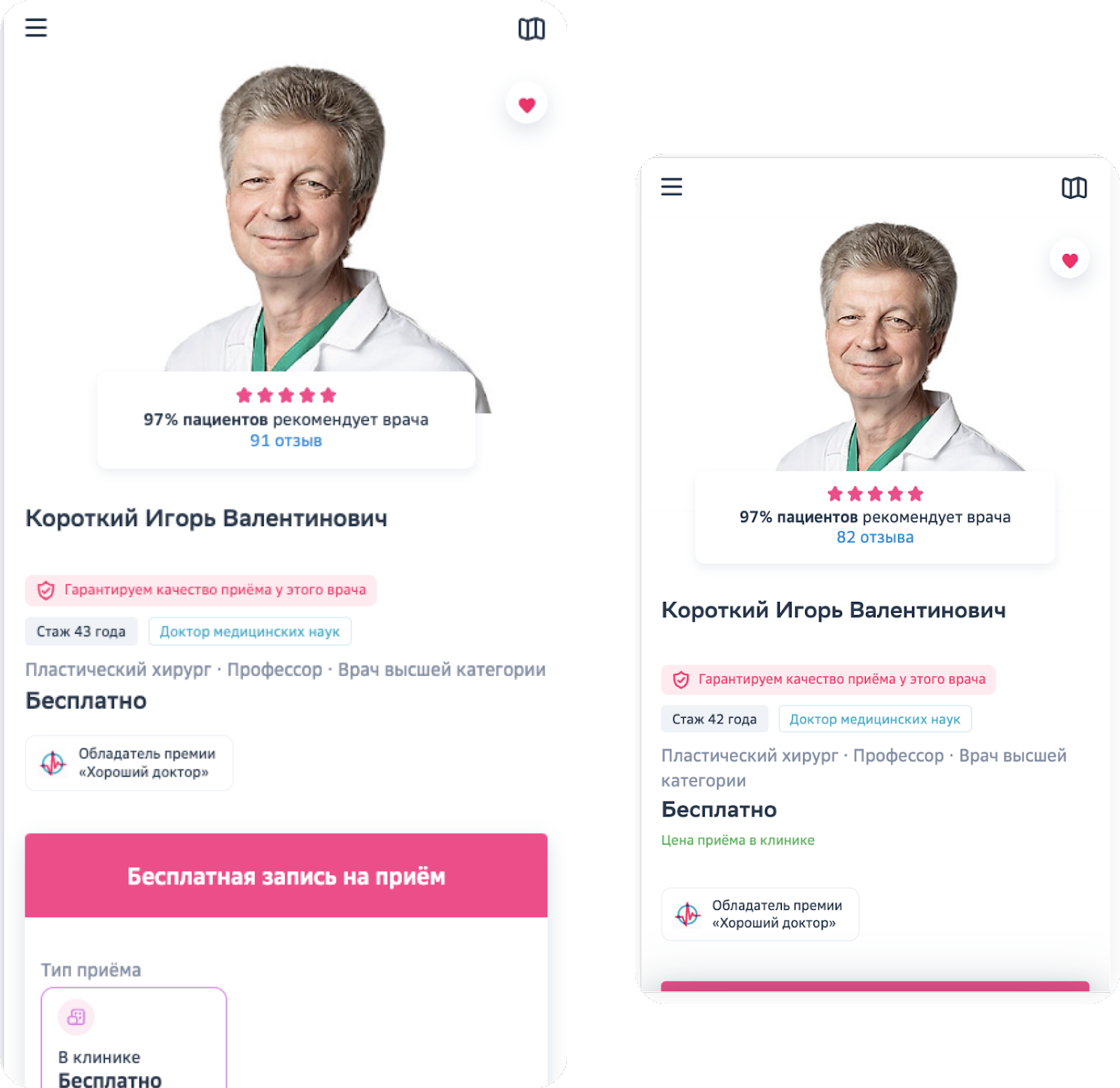

One of our main services is a website where users can read about a doctor and book an appointment.

Let's say a product manager discovers that on smartphones with a small display (for example, iPhone SE or similar), the appointment button does not fit on the first screen. From this, the product manager can assume: “Users who arrive from the browser and immediately see the button actively make appointments, while those who do not see the button are likely to simply return to the search engine and click on the next link.” Based on this scenario, the product manager forms a hypothesis: “If there is a button on the first screen, the conversion to order will increase.” That is good work, but does the product manager see the whole picture?

With the idea of adding a button, the product manager goes to the designer. One solution is to add a large green button so that it definitely catches the user’s eye.

But what if the user comes to a familiar resource to choose a doctor, read reviews, and look at the doctor's experience and training records? Such a user already knows how to book and does not want to be distracted by that big green button on every page. This leads to the second hypothesis: “The conversion will be higher if you add a button in the corner, but make it small and gray, so that it does not get annoying.” Both options are viable, so they are considered on equal terms.

That gives us two hypotheses and two layouts with different buttons. Next, an analyst joins the discussion and decides that clicks on the buttons will send a 'click_button' event with the attribute 'type: big' or 'type: small'.

Then the project manager creates a typical development task, which says that a 'click-button' event should be sent when a button is clicked.

The developer reads the task and adds the event dispatch.

<button
  onClick={() => {
    window.ym(12345678, 'reachGoal', 'clickButton', {
      category: 'request',
      type: 'big',
    })
  }}
>
  Sign up
</button>

Next, QA checks that everything is in order: when this button is clicked, the 'click' event is sent.

After this, a week-long A/B test is launched to test the hypothesis and choose the most successful option.

Let's assume that the initial conversion was 10%, that is, out of 100 people who came to the page, 10 clicked on the button and made an appointment. After adding the big green button, we expect it to be clicked much more often and the conversion to rise, for example, to 30% (yes, we are not being modest). We have little hope for the small gray one, since it probably attracts less attention. For it we do not expect more clicks; we even assume that the conversion will drop to 7%.

We set our expectations and wait for the results.

Then we look at the real data and get a shock: zero events.

Why? What happened? Was our hypothesis not that good after all?

What are the difficulties? What are we talking about?

There may be several reasons for this behavior:

people don't click at all (possible, but unlikely);

the metrics are wrong (more realistic).

Moreover, a problem with metrics is not always somebody's mistake. It is quite possible that each specialist working on the task simply saw the implementation differently. For example, the event names may simply differ:

the analyst uses “click_button”;

the project manager specified “click-button” when setting the task;

the front-end developer wrote “clickButton” in the code.

In the end, everything was done correctly, but nothing works.
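The mismatch is easy to reproduce in a few lines. The sketch below is only an illustration: the array of sent events and the helper names are hypothetical, and a real report would be built inside the analytics system rather than in application code.

```typescript
// The three spellings of the same event from the story above.
const spellings = ['click_button', 'click-button', 'clickButton']

// What the site actually sent: the developer's spelling (hypothetical sample data).
const sentEvents: string[] = ['clickButton', 'clickButton', 'clickButton']

// The report counts events by exact name, as analytics tools typically do.
const countEvents = (events: string[], eventName: string): number =>
  events.filter((sent) => sent === eventName).length

// Splits a name on "_", "-" and camelCase boundaries and lowercases the words.
const toWords = (eventName: string): string[] =>
  eventName
    .replace(/([a-z0-9])([A-Z])/g, '$1 $2')
    .split(/[\s_-]+/)
    .map((word) => word.toLowerCase())

// The analyst looks for 'click_button' and finds nothing.
const reportedClicks = countEvents(sentEvents, 'click_button')

// Yet all three spellings describe the same two words: "click button".
const sameIntent = spellings.every(
  (spelling) => toWords(spelling).join(' ') === 'click button',
)

console.log({ reportedClicks, sameIntent })
```

Everyone meant the same event, but an exact string comparison cannot know that, which is why the report stays empty.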

Thus, we have identified two bottlenecks:

no single point of truth – there is no single publicly accessible repository that clearly describes what events should be called and what attributes they should have, so that at any stage of development anyone interested can check that they understood and remembered the agreements correctly;

manual labor – the more operations are performed manually, the higher the risk of human error, including common typos, inattention, habits and banner blindness.

So, to prevent the problems described in this case, we need to deal with their root causes.

To do this, we decided to design our own system.

Designing the system

When designing the system, we needed to answer questions about:

data format;

storage systems;

client lib;

code generation;

CI;

CD.

Data format

We want to be able to change the analytics system used, so we will store some configs that will be used to generate events for a specific analytics system (yaMetrika, ga, snowplow, …). It is these configs that will be our main, single point of truth.

You can write configs in any convenient format: JSON, YAML, TOML. In our implementation, we chose YAML. At the same time, it was immediately determined that the event must include:

description – a clear, human-readable description of what this event is and how it is planned to be used;

id – a unique identifier of the event (in our case it is event_category + event_action);

attributes – the attributes of a specific event (name, type, whether it is required, and the list of possible options).

description: "Click on the appointment button"
definition:
  event_category: request
  event_action: clickButton
attributes:
  type:
    description: "Button type"
    options: ["big", "small"]
    type: str
    required: true
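To make the shape of such a config explicit, here is a minimal sketch of how it could be typed and how the identifier could be derived. The type names, the ":" separator in the id and the duplicate check are assumptions made for illustration; the article only states that the id is event_category + event_action.

```typescript
// Only the attribute types that appear in this article.
type AttributeType = 'str' | 'int'

interface AttributeConfig {
  description: string
  type: AttributeType
  required: boolean
  options?: string[]
}

interface EventConfig {
  description: string
  definition: { event_category: string; event_action: string }
  attributes: Record<string, AttributeConfig>
}

// id = event_category + event_action; the ":" separator is an assumption.
const eventId = (config: EventConfig): string =>
  `${config.definition.event_category}:${config.definition.event_action}`

// Returns the ids that occur more than once in a set of configs.
const findDuplicateIds = (configs: EventConfig[]): string[] => {
  const counts: Record<string, number> = {}
  for (const config of configs) {
    const id = eventId(config)
    counts[id] = (counts[id] ?? 0) + 1
  }
  return Object.keys(counts).filter((id) => counts[id] > 1)
}

// The config from the example above, already parsed from YAML.
const clickButtonConfig: EventConfig = {
  description: 'Click on the appointment button',
  definition: { event_category: 'request', event_action: 'clickButton' },
  attributes: {
    type: {
      description: 'Button type',
      options: ['big', 'small'],
      type: 'str',
      required: true,
    },
  },
}

console.log(eventId(clickButtonConfig))
```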

Storage system

We have decided that we work with YAML configs. It is important to us that they are easy to read and to add to. The simplest and most obvious option in our case was git, because it has everything we need out of the box:

storage and versioning;

discussion of changes in merge requests and pull requests;

convenient CI/CD integration.

Client lib

On the front end, we want a client lib that contains all the available events, so that developers can use them without unnecessary customization. The most obvious format for the front end is an npm package from which you can import JS event objects. Better yet, TypeScript. But we also have projects where the front end is pure JS: they keep running and hardly need any support. Therefore, the most suitable option for us is a build that produces .js files with .d.ts files next to them for typing.

const clickButtonEvent = {
  category: 'request',
  action: 'clickButton',
  attributes: {
    type: {
      value: 'big',
    },
  },
}

Code generation

We have event configs in YAML, but we need to produce .ts files with interface descriptions from them. Event storage and lib code generation are the analysts' responsibility, so it is most convenient to implement this part in Python + Jinja2. For the front end, the main thing is to get TypeScript that can be built into .js + .d.ts.

export interface IProps {

{%- if attributes.attributes %}

{%- for attribute in attributes.attributes %}

{{attribute.name | to_lower_camel}}

{%- if (attribute.required != true)

or (attribute.options and attribute.options | length == 1)

%}?{%- endif %}:

{%- if attribute.options %}

{%- for option in attribute.options %} '{{option}}'

{%- if not loop.last %} |{% endif %}

{%- endfor %}

{%- elif attribute.type == 'int' %} number

{%- else %} string

{%- endif %},

{%- endfor %}

{%- endif %}

}

...

It is worth noting that in this article we are talking about the frontend in the context of web and browsers, but in this way you can generate code for any language, so the concept fits perfectly into libs for iOS and Android.
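For readers who do not use Jinja2, the rules of the template fragment can be restated as ordinary code. This is a sketch in TypeScript, not the Python + Jinja2 generator described above; the AttributeSpec shape and the exact behavior of toLowerCamel are assumptions inferred from the template.

```typescript
// Assumed shape of one item the template iterates over.
interface AttributeSpec {
  name: string
  type: 'str' | 'int'
  required: boolean
  options?: string[]
}

// snake_case or kebab-case to lowerCamelCase (assumed behavior of the to_lower_camel filter).
const toLowerCamel = (attributeName: string): string =>
  attributeName.replace(/[_-]+([a-zA-Z0-9])/g, (_match, char: string) =>
    char.toUpperCase(),
  )

// Maps an attribute to its TypeScript type: a union of options, number, or string.
const renderType = (attribute: AttributeSpec): string => {
  const options = attribute.options ?? []
  if (options.length > 0) {
    return options.map((option) => `'${option}'`).join(' | ')
  }
  return attribute.type === 'int' ? 'number' : 'string'
}

// A property is optional when it is not required or has exactly one option.
const renderProperty = (attribute: AttributeSpec): string => {
  const optional =
    !attribute.required || (attribute.options ?? []).length === 1
  const mark = optional ? '?' : ''
  return `  ${toLowerCamel(attribute.name)}${mark}: ${renderType(attribute)}`
}

const renderInterface = (attributes: AttributeSpec[]): string =>
  ['export interface IProps {', ...attributes.map(renderProperty), '}'].join('\n')

console.log(
  renderInterface([
    { name: 'type', type: 'str', required: true, options: ['big', 'small'] },
  ]),
)
```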

CI

To reduce routine work, we set up CI:

validate – check the YAML configs against all the recorded agreements;

generate – generate the front-end lib code from the YAML configs;

lint – run linters (the code is auto-generated, so lint problems are unlikely, but the linters are cheap to run, so we keep them);

build – build .js and .d.ts from the .ts files;

test – run the necessary tests.

stages:
  - validate
  - generate
  - lint
  - build
  - test
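As an illustration of what the validate stage might check, here is a minimal sketch of a validator for one parsed config. The rules, the error messages and the list of allowed types are assumptions; the real agreements live in the repository with the configs.

```typescript
// A parsed YAML config before validation: every field may be missing.
interface RawAttribute {
  description?: string
  type?: string
  required?: boolean
  options?: unknown[]
}

interface RawEvent {
  description?: string
  definition?: { event_category?: string; event_action?: string }
  attributes?: Record<string, RawAttribute>
}

// Assumption: only the types that appear in this article.
const ALLOWED_TYPES = ['str', 'int']

// Returns a list of human-readable problems; an empty list means the config is valid.
const validateEvent = (event: RawEvent): string[] => {
  const errors: string[] = []
  if (!event.description) errors.push('description is required')
  if (!event.definition?.event_category) {
    errors.push('definition.event_category is required')
  }
  if (!event.definition?.event_action) {
    errors.push('definition.event_action is required')
  }

  const attributes = event.attributes ?? {}
  for (const attributeName of Object.keys(attributes)) {
    const attribute = attributes[attributeName]
    const path = `attributes.${attributeName}`
    if (!attribute.type || ALLOWED_TYPES.indexOf(attribute.type) === -1) {
      errors.push(`${path}.type must be one of: ${ALLOWED_TYPES.join(', ')}`)
    }
    if (typeof attribute.required !== 'boolean') {
      errors.push(`${path}.required must be true or false`)
    }
    if (attribute.options !== undefined && attribute.options.length === 0) {
      errors.push(`${path}.options must not be empty`)
    }
  }
  return errors
}

// A config with a missing action and an unknown attribute type.
const problems = validateEvent({
  description: 'Click on the appointment button',
  definition: { event_category: 'request' },
  attributes: { type: { type: 'text', required: true } },
})
console.log(problems)
```

A CI job could fail the pipeline whenever the returned list is not empty.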

CD (npm publish)

In the Continuous Delivery part, we need to put the npm package in the internal registry and assign it the correct version using semver.
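One possible way to pick the version automatically is to compare the event ids before and after a change. The policy below (a removed event is a breaking change, a new event is a minor one) is an assumption for illustration, not the rule the article's pipeline necessarily follows.

```typescript
type Bump = 'major' | 'minor' | 'patch'

// Assumed policy: removing an event breaks consumers, adding one does not.
const chooseBump = (previousIds: string[], nextIds: string[]): Bump => {
  const removed = previousIds.filter((id) => nextIds.indexOf(id) === -1)
  const added = nextIds.filter((id) => previousIds.indexOf(id) === -1)
  if (removed.length > 0) return 'major'
  if (added.length > 0) return 'minor'
  return 'patch'
}

// Applies the bump to a plain "major.minor.patch" version string.
const bumpVersion = (version: string, bump: Bump): string => {
  const [major, minor, patch] = version.split('.').map(Number)
  if (bump === 'major') return `${major + 1}.0.0`
  if (bump === 'minor') return `${major}.${minor + 1}.0`
  return `${major}.${minor}.${patch + 1}`
}

// A new event was added to the configs, so the minor version grows.
const nextVersion = bumpVersion(
  '1.4.2',
  chooseBump(['request:clickButton'], ['request:clickButton', 'request:openForm']),
)
console.log(nextVersion)
```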

Such an implementation with the entire set of described stages and components gives us:

A single point of truth. There is a git repository with YAML configs from which the client lib is generated. Therefore, we can guarantee that all projects use only these events and no others.

Reduction of manual labor. CI removes 90% of routine work, reducing the risk of human errors.

Front-end implementation

Events

The core of the system is ready-made objects that we can use to send standardized events to different analytics systems. In our button example there is a “clickButton” event. The button click event has one attribute “type” and it is either “big” or “small”. To create such an object, the following function is suitable:

interface IProps {
  type: 'big' | 'small'
}

export const clickButton = ({ type }: IProps) => ({
  category: 'request',
  action: 'clickButton',
  attributes: {
    type: {
      value: type,
    },
  },
})

In this case, TypeScript flags a problem immediately, while the code is being written, if we misname a property or try to use an invalid value.
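A short sketch of what that looks like in practice. The function is repeated from the example above so that the snippet is self-contained; the `@ts-expect-error` comments mark the lines that the compiler rejects, and the wrong values 'medium' and 'size' are made up for the illustration.

```typescript
interface IProps {
  type: 'big' | 'small'
}

const clickButton = ({ type }: IProps) => ({
  category: 'request',
  action: 'clickButton',
  attributes: { type: { value: type } },
})

// Valid: 'small' is one of the declared options.
const validEvent = clickButton({ type: 'small' })

// @ts-expect-error 'medium' is not in the list of options
clickButton({ type: 'medium' })

// @ts-expect-error the property is called "type", not "size"
clickButton({ size: 'big' })

console.log(validEvent.attributes.type.value)
```

Without the `@ts-expect-error` comments, both calls would stop the build, so a misspelled event never reaches production.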

Adapters

Next comes the question of sending events. We used to work with Google Analytics and GTM, and they suited us completely. Some projects worked with Yandex Metrica, which also suited us. Over time, we began to miss access to raw data, because both tools provide data only in aggregated form. To get raw data, we moved to our own solution based on Snowplow.

As a result, a typical front-end project may:

work with one of the listed systems;

work with several analytics systems at once;

need to migrate from an external solution to an internal one.

Therefore, it was important for us to highlight the adapter layer.

The essence of the adapter is two functions: init and pushEvent.

init initializes the adapter, loads the script, and sets the necessary parameters.

For example, init for a Google Analytics adapter might look like this:

const initGA = (id) => {
  // Vendor loader snippet, abbreviated here
  !(function (i, s, o, g, r, a, m) { i['GoogleAnalyticsObject'] ...);
  window.ga('create', id, 'auto')
  window.ga('send', 'pageview')
}

For Yandex Metrica, like this:

const initYaMetrika = (id, enableWebvisor = false) => {
  // Vendor loader snippet, abbreviated here
  !(function (m, e, t, r, i, k, a) { m[i] = m[i] || function () {...} );
  window.ym(id, 'init', {
    id,
    clickmap: true,
    trackLinks: true,
    accurateTrackBounce: true,
    webvisor: enableWebvisor,
    childIframe: true,
  });
};

pushEvent sends an event to the analytics system. For example, for GA:

const pushEvent = (event) => {
  window.ga('send', {
    hitType: 'event',
    eventCategory: event.category,
    eventAction: event.action,
    ...event.attributes,
  })
}

For Yandex Metrica:

const pushEvent = (id, event) => {
  const goalParams = {
    event: {
      [event.category]: {
        [event.action]: {
          attributes: JSON.stringify(event.attributes),
        },
      },
    },
  }
  event.yaGoalNames.forEach((goalName) => {
    window.ym(id, 'reachGoal', goalName, goalParams)
  })
}
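The contract shared by all adapters can be written down as a type. The sketch below is an assumption about how that contract might look: the Adapter and AnalyticsEvent names are hypothetical, and the in-memory adapter is not part of the lib described here, it only shows that anything with init and pushEvent fits the same slot (which is handy in unit tests).

```typescript
// Assumed shape of a generated event object.
interface AnalyticsEvent {
  category: string
  action: string
  attributes: Record<string, { value: string | number }>
}

// The two functions every adapter is expected to provide.
interface Adapter {
  init: () => void
  pushEvent: (event: AnalyticsEvent) => void
}

// A hypothetical adapter that records events in memory instead of calling a vendor script.
const createMemoryAdapter = () => {
  const sent: AnalyticsEvent[] = []
  let ready = false
  const adapter: Adapter = {
    init: () => {
      ready = true
    },
    pushEvent: (event) => {
      if (!ready) throw new Error('adapter is not initialized')
      sent.push(event)
    },
  }
  return { adapter, sent }
}

const { adapter, sent } = createMemoryAdapter()
adapter.init()
adapter.pushEvent({
  category: 'request',
  action: 'clickButton',
  attributes: { type: { value: 'big' } },
})
console.log(sent.length)
```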

Actions

We have several analytics systems, and each of them has its own adapter. Now the resulting structure needs to be fixed in place. To do this, we define a set of standard actions that every adapter is guaranteed to support.

These are the same init and pushEvent (and possibly some others). Let's call them Actions. The init action initializes all connected adapters, and the pushEvent action sends an event to each of them.

In a simplified form it might look like this:

const initAllAdapters = (adapters) => {
  adapters.forEach((adapter) => {
    // Defer each init with setTimeout so that it does not block the calling code
    setTimeout(() => {
      adapter.init()
    }, 0)
  })
}
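The pushEvent action can be sketched in the same spirit. The types and the function name below are hypothetical, and catching errors per adapter is a design assumption rather than something stated in the article: the idea is that one failing analytics system should not prevent the others from receiving the event.

```typescript
interface AnalyticsEvent {
  category: string
  action: string
}

interface Adapter {
  init: () => void
  pushEvent: (event: AnalyticsEvent) => void
}

// Sends the event to every adapter and returns how many of them accepted it.
const pushEventToAllAdapters = (
  adapters: Adapter[],
  event: AnalyticsEvent,
): number => {
  let delivered = 0
  adapters.forEach((adapter) => {
    try {
      adapter.pushEvent(event)
      delivered += 1
    } catch (error) {
      // A failing analytics system must not break the other adapters or the page.
      console.warn('analytics adapter failed to send an event', error)
    }
  })
  return delivered
}

// Two stand-in adapters: one works, one always fails.
const workingAdapter: Adapter = { init: () => {}, pushEvent: () => {} }
const brokenAdapter: Adapter = {
  init: () => {},
  pushEvent: () => {
    throw new Error('script was blocked')
  },
}

const deliveredCount = pushEventToAllAdapters([brokenAdapter, workingAdapter], {
  category: 'request',
  action: 'clickButton',
})
console.log(deliveredCount)
```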

Thus, the front-end implementation reinforces the solutions to both root problems:

It gives a single point of truth – there is an npm package that contains the events that need to be used. It is best not to over-customize or use anything outside of this package.

It minimizes manual labor – events and adapters for all necessary systems have already been written; they just need to be imported and used.

The lib in a project

In the project, the implemented client lib works approximately as follows.

Installation

Install the npm package using any standard method:

$ npm install @sh/analytics

$ yarn add @sh/analytics

$ bun install @sh/analytics

Initialization

You need to collect a set of necessary adapters; each can be configured if necessary. For example, for a project that is in the process of migrating from GA to Yandex Metrica, the initialization could be like this:

import { init, createYaAdapter, createGAAdapter } from '@sh/analytics'

export const initAnalytics = (yaId: string, gaId: string) => {
  init({
    adapters: [
      createGAAdapter(gaId),
      createYaAdapter(yaId, { webVisor: true })
    ],
  })
}

In 90% of cases this is enough. The remaining 10% may require additional parameters that we can pass with the options object.

All this is passed to an action called init, which initializes all adapters.

At the same time, the cognitive load on developers is minimal – the implementation is written once and works stably without the need to study everything “under the hood.”

Usage

Our button has three main properties:

color: green or gray;

size: big or small;

label: the text “Sign up” or a question mark.

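import { pushEvent, clickButton } from '@sh/analytics'

// Props of the project button; the values match the options of the clickButton event
interface IProps {
  type: 'big' | 'small'
}

export const ProjButton = ({ type }: IProps) => {
  const className = type === 'big' ? 'green' : 'gray'
  const buttonText = type === 'big' ? 'Sign up' : '?'

  // Sends the standardized event through the pushEvent action
  const handleClick = () => {
    pushEvent(clickButton({ type }))
  }

  return (
    <button className={className} onClick={handleClick}>
      {buttonText}
    </button>
  )
}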

Thus, at the project level we also get what we were looking for:

a single point of truth in the form of .js + .d.ts files for each YAML config in the repository;

minimal manual labor thanks to ready-made adapters, autocomplete, typing and the CI/CD of the lib.

Summary

Previously, we often ran into the side effects of a complex product and a large team: we wrote a lot of code, kept a lot of small .xls files, and because of minor errors in releases we had to go back and walk through the entire flow again, involving a large team of specialists.

Unifying the process through system design helped us avoid these problems. Our system has been in operation for more than two years, and now we have:

a single, understandable, publicly accessible point of truth (YAML in git);

no obvious errors, thanks to type checking during development and to CI/CD that takes over the routine: YAML validation, codegen, lint, tests;

a simple architecture that is open to extension (event, adapter, action);

understandable and well-known technologies (js, d.ts, lint, npm).

It is worth noting that such a system is not tied to a particular company: it can be flexibly extended, changed and adapted to the needs of a specific product. So anyone can use the patterns we have developed to try to implement something similar in their own company.