Making ML experiments easy to change and run

Introduction

Working on ML projects typically involves a large number of experiments with various datasets, model architectures, parameters and optimization methods. To manage all these changes and results effectively, you need tools that help organize and structure the process.

It is desirable to have a simple and quick way to change parameters, look at the results, and record the changes if they are successful. In some cases, someone who was not originally involved in the project has to do the same. The simpler this is, the better.

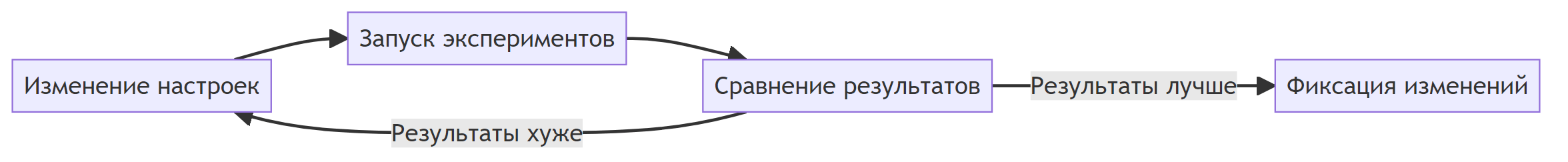

An approximate work cycle is shown in Fig. 1.

It is assumed that the project already uses Git for version control and that the reader has some familiarity with the tools discussed.

The remaining question is how best to store configuration files and organize experiment runs.

Running experiments

To run experiments, you can write your own logic, but that takes time, and if the approach is to be reused across projects, you also have to make it generic. Another option is to use ready-made tools from more advanced MLOps solutions. These are usually needed when experiments must run in a distributed way or complex scenarios must be supported.

This article discusses running experiments locally with minimal setup and few dependencies on external systems. DVC is well suited for this. It can be used for several purposes:

- Storing and versioning data.
- Running pipelines.
- Tracking and comparing metrics and parameters.
- Managing and running experiments.

This article focuses on managing and running experiments. In DVC, experiments can be organized as a task queue, where each task runs stages from dvc.yaml with a particular set of changes. When all tasks are completed, all that remains is to select the best experiment and apply its changes to the main code base. With a suitable project organization, most of this can be automated. Some information on working with experiments is available in the article.

Suppose a model has a parameter alpha. The code is already written, and a stage named train has been defined. We need to run train with different values of this parameter.

The basic way to configure the experiments themselves is to change settings in configuration files. To keep this simple, as much behavior as possible should be configurable through configuration files without code changes, which requires a flexible application configuration system.

Project configuration management

Configuration is typically stored as a set of files in popular formats such as JSON, YAML, TOML or INI. These files hold settings for the entire project. In general, one file is easier to manage than a collection of them.

The requirements for a configuration management solution are as follows:

- All final settings should end up in one file.
- Files can be grouped logically, and the final file is assembled from parts. It is usually easier for a person to navigate and change individual settings in small files than in one large file.
- Values can be reused within the configuration to reduce duplication.

Hydra does exactly this, and DVC has a built-in integration with it.

This works as follows. Suppose the configuration files are organized as shown below:

```
├───configs
│   │   train.yaml
│   │
│   ├───dataset
│   │       bin_cls.yaml
│   │       bin_cls_noise.yaml
│   │
│   └───model
│           dec_tree.yaml
│           log_reg.yaml
```

The file train.yaml specifies which parts to use:

```yaml
...
defaults:
  - model: log_reg
  - dataset: bin_cls
  - _self_
...
```

Hydra takes this set of files and combines them as if they were a single file with the following structure:

```yaml
model:
  ...  # contents of log_reg.yaml
  ...
dataset:
  ...  # contents of bin_cls.yaml
  ...
```

Hydra is built on OmegaConf, so OmegaConf features also work in Hydra.

For example, values can be reused across different parts of the configuration.

More details about other aspects can be found in the documentation.

By adding new files to the appropriate groups, you can extend the project, within certain limits, without rewriting the code.

Case Study

The entire example is available in the GitHub repository.

The repository has a small example of organizing a project with DVC and Hydra to solve a binary classification problem on a synthetic data set. The structure of the configuration files is as follows:

```
│   train.yaml
│
├───dataset
│       bin_cls.yaml
│       bin_cls_noise.yaml
│
└───model
        dec_tree.yaml
        log_reg.yaml
```

There are two datasets for binary classification: a basic one (bin_cls) and one with noisy class labels (bin_cls_noise). There are two models: a decision tree (dec_tree) and logistic regression (log_reg). The main configuration file is train.yaml.

We want to test how well different models perform with different parameters on the bin_cls or bin_cls_noise dataset. There are two ways to organize this in DVC:

- Use Hydra composition.
- Don't use Hydra composition; instead, add an intermediate stage that creates the final configuration file.

Both options have advantages and disadvantages. In either case, a new model can be added easily as long as it follows the agreed interface.

Hydra composition

When this mode is enabled (with dvc config hydra.enabled True), DVC uses Hydra to compose the final configuration file and saves it to params.yaml before running the specified stage.

Pros:

- The complete configuration file is generated automatically.
- In dvc.yaml you can use the values of all parameters in that file. For example:

  ```yaml
  stages:
    train:
      cmd: python ./train.py
      deps:
        - ${data_dir}  # from params.yaml
      outs:
        - ${exp_dir}  # from params.yaml
  ```

- When any parameter changes, the full configuration file is updated automatically, and DVC knows when to rerun the stage if params.yaml is listed under deps in dvc.yaml.
- You can use the params section to indicate which parts of the configuration file are parameters.
- When running dvc exp run -S ... with a list of parameter values, params.yaml contains the values of the current experiment.

Cons:

- There is no full control over how the configuration file is composed and how the interpolations in it are resolved.
- Some values cannot be set in advance, for example because they are determined dynamically from the data. In this case you have to set them to ???, and they stay that way in params.yaml, so you have to handle them in code.
- If there are several stages, all of them have to accept the complete configuration file and take the part they need. In some cases you can get by with changing values in dvc.yaml.

Without Hydra composition, with an intermediate stage

Here you write an additional stage that emulates what DVC does with Hydra composition.

Sample code for generating the full configuration file:

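```python
import hydra
from omegaconf import DictConfig, OmegaConf


@hydra.main(version_base="1.3", config_path="configs", config_name="main")
def main(config: DictConfig) -> None:
    ...  # other processing (elided in the original)
    # Save the composed config; resolve=True replaces ${...} interpolations
    # with their values. The output path is elided ("...") in the original.
    OmegaConf.save(config, ..., resolve=True)


if __name__ == "__main__":
    main()
```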

Next, in dvc.yaml, the resulting file is listed as a dependency and in the vars section so that its values can be used:

```yaml
stages:
  train:
    vars:
      - path_to_generated_file
    cmd: python ./train.py
    deps:
      - path_to_generated_file
```

The main advantages are the same as with Hydra composition. In addition, it becomes possible to define some dynamic values and to influence how the final configuration file is built.

This approach can be combined with separate stages that are not tied to the main configuration file. For example, dvc.yaml might consist of the stages shown in Fig. 2.

Stages A and B can accept their own configuration files; for example, they perform actions common to the whole project. Stage C generates the complete configuration file. Stage D is the one launched via dvc exp run ...

Cons:

- When starting experiments with dvc exp run, you need to specify which file to change; otherwise a configuration file with the same values will be generated.
- If there are several stages, all of them have to accept the complete configuration file and take the part they need.
- You may have to run dvc exp run with --force-downstream so that the stage that creates the configuration file is always executed and produces an up-to-date file.

The example in the repository uses the Hydra composition option, as it is a good starting point.

We need to check how models with different sets of parameters perform. Since the structure of the configuration file changes and each model has different parameters, the experiments cannot all be queued with a single command: at the time of writing, the only supported mode was a full grid search over every combination of the listed parameter values. It can, however, be done with several commands:

```bash
dvc exp run --queue -S model=log_reg -S model.C=1.0e-2,100 train
dvc exp run --queue -S model=dec_tree -S model.max_depth=6,12 train
```

The output will be something like this:

```
Queueing with overrides '{'params.yaml': ['model=log_reg', 'model.C=0.01']}'.
Queued experiment 'lyric-feel' for future execution.
Queueing with overrides '{'params.yaml': ['model=log_reg', 'model.C=100']}'.
Queued experiment 'kooky-taka' for future execution.
```
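When there are many models, per-model commands like the two above can be generated from a small table. The sketch below is a hypothetical helper, not part of DVC: it builds the same argument lists as those commands and only passes them to subprocess.run when dry_run=False. Expanding the comma-separated values is left to DVC.

```python
import subprocess

# Hypothetical per-model grids; values are joined with commas, as in the commands above.
GRIDS = {
    "log_reg": {"model.C": ["1.0e-2", "100"]},
    "dec_tree": {"model.max_depth": ["6", "12"]},
}


def queue_commands(grids: dict, stage: str = "train") -> list:
    """Build one `dvc exp run --queue` argument list per model."""
    commands = []
    for model, params in grids.items():
        cmd = ["dvc", "exp", "run", "--queue", "-S", f"model={model}"]
        for key, values in params.items():
            cmd += ["-S", f"{key}={','.join(values)}"]
        commands.append(cmd + [stage])
    return commands


def queue_all(grids: dict, dry_run: bool = True) -> None:
    """Print the commands; with dry_run=False, also run them to queue the experiments."""
    for cmd in queue_commands(grids):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    queue_all(GRIDS)
```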

All experiments are now in the task queue. Their status can be viewed with dvc queue status. Sample output:

```
Task     Name        Created   Status
52dbbd4  lyric-feel  01:21 PM  Queued
6ef169b  kooky-taka  01:21 PM  Queued
610131c  meaty-name  01:21 PM  Queued
3e6aab7  famed-life  01:21 PM  Queued

Worker status: 0 active, 0 idle
```

There are currently no active workers. To start processing the queue, add workers:

```bash
dvc queue start -j 2
```

Here two workers are started to speed up the computation. After that, dvc queue status shows something like this:

```
Task     Name        Created   Status
52dbbd4  lyric-feel  01:21 PM  Running
6ef169b  kooky-taka  01:21 PM  Running
610131c  meaty-name  01:21 PM  Queued
3e6aab7  famed-life  01:21 PM  Queued

Worker status: 2 active, 0 idle
```

When a task finishes successfully, its status becomes Success; if an error occurs during a run, the status reflects that instead. Sample output once all tasks have completed:

```
Task     Name        Created   Status
52dbbd4  lyric-feel  01:21 PM  Success
6ef169b  kooky-taka  01:21 PM  Success
610131c  meaty-name  01:21 PM  Success
3e6aab7  famed-life  01:21 PM  Success

Worker status: 0 active, 0 idle
```

To view the logs, run dvc queue logs <name>, where <name> is the unique name of the task. Inside the experiment, this name is also available as the environment variable DVC_EXP_NAME, which can be useful when an external logging system is used in parallel.
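For example, a training script could tag its runs in an external logging system with this name. A minimal sketch; run_tag and the local-run fallback are hypothetical, and the env parameter exists only so that the function can be tested without a real DVC run.

```python
import os
from typing import Mapping, Optional


def run_tag(env: Optional[Mapping[str, str]] = None, default: str = "local-run") -> str:
    """Return the DVC experiment name when available, otherwise a fallback tag."""
    env = os.environ if env is None else env
    return env.get("DVC_EXP_NAME", default)


if __name__ == "__main__":
    # Inside a DVC experiment this should print the experiment name, e.g. "lyric-feel".
    print(f"logging run as: {run_tag()}")
```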

Experiments can be compared with each other. In dvc.yaml, a metric was specified: accuracy, the proportion of correct answers. It is shown during comparison and indicates which experiment turned out better. For a quick report, run:

```bash
dvc exp show --only-changed --sort-by accuracy --sort-order desc
```

The report can also be saved in other formats, for example with the --md or --csv option. Saved as Markdown, it gives a table roughly like the one below (not all columns are shown):

| Experiment | Created | accuracy | model._target_ | model.penalty | model.solver | model.C | model.max_depth |
|---|---|---|---|---|---|---|---|
| workspace | – | 0.87667 | sklearn.linear_model.LogisticRegression | l1 | liblinear | 0.01 | – |
| master | 01:20 PM | 0.92333 | sklearn.linear_model.LogisticRegression | l1 | liblinear | 0.01 | – |
| ├── 998d475 [famed-life] | 01:26 PM | 0.97333 | sklearn.tree.DecisionTreeClassifier | – | – | – | 12 |
| ├── 266d93e [meaty-name] | 01:26 PM | 0.97 | sklearn.tree.DecisionTreeClassifier | – | – | – | 6 |
| ├── 7a03577 [lyric-feel] | 01:26 PM | 0.92333 | sklearn.linear_model.LogisticRegression | l1 | liblinear | 0.01 | – |
| └── c5f9a0b [kooky-taka] | 01:26 PM | 0.87 | sklearn.linear_model.LogisticRegression | l1 | liblinear | 100 | – |

The table lists the experiments sorted by accuracy, together with existing branches and the current workspace. If you actively use VS Code, you can install the DVC extension, which shows the same basic information in the editor interface.

Once an experiment is selected, you can quickly save it as a separate branch:

```bash
dvc exp branch wormy-yate branch-from-exp
```

After creating the branch, you can see that the new parameters appear only in params.yaml; the diff looks like this:

```diff
diff --git a/params.yaml b/params.yaml
index 36234f8..7218b6e 100644
--- a/params.yaml
+++ b/params.yaml
@@ -1,8 +1,6 @@
 model:
-  _target_: sklearn.linear_model.LogisticRegression
-  penalty: l1
-  solver: liblinear
-  C: 0.01
+  _target_: sklearn.tree.DecisionTreeClassifier
+  max_depth: 6
 dataset:
   n_samples: 1000
   n_features: 10
```

The values in the original configuration files in configs remain unchanged. This can cause errors if the configuration file is rebuilt from configs instead of using the values from params.yaml, so you need to keep track of which files a stage uses when it is rerun. The author does not know of any simple way to handle this other than manually updating the original files so that they always reflect the current state. Alternatively, the files in configs can be treated as a starting point that defines the overall structure, while the modified parameter values live in params.yaml.
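The divergence can at least be detected by comparing params.yaml with a config rebuilt from configs, for example after converting both to plain dicts with OmegaConf.to_container. The sketch below is a hypothetical helper that only reports differing keys; it does not keep the files in sync. The values mirror the diff above.

```python
def diff_configs(expected: dict, actual: dict, prefix: str = "") -> list:
    """Return dotted keys whose values differ between two nested dicts."""
    changed = []
    for key in sorted(set(expected) | set(actual)):
        path = f"{prefix}{key}"
        left, right = expected.get(key), actual.get(key)
        if isinstance(left, dict) and isinstance(right, dict):
            # Recurse into sections such as model.* and dataset.*
            changed += diff_configs(left, right, prefix=f"{path}.")
        elif left != right:
            changed.append(path)
    return changed


# Config rebuilt from configs/ (unchanged) vs. params.yaml after `dvc exp branch`.
from_configs = {
    "model": {
        "_target_": "sklearn.linear_model.LogisticRegression",
        "penalty": "l1",
        "solver": "liblinear",
        "C": 0.01,
    },
    "dataset": {"n_samples": 1000, "n_features": 10},
}
from_params = {
    "model": {"_target_": "sklearn.tree.DecisionTreeClassifier", "max_depth": 6},
    "dataset": {"n_samples": 1000, "n_features": 10},
}

print(diff_configs(from_configs, from_params))
```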

To remove experiments and queued tasks that are no longer needed, run:

```bash
dvc exp remove -A
dvc queue remove --all
```

Conclusions

This article looked at an example of organizing an ML project with DVC and Hydra so that basic settings can be changed quickly and local experiments with various parameters can be launched easily. The approach helps manage changes and experiment results, making the process more organized and structured and making it easier to record changes when they succeed.

The approach has some drawbacks: you have to adapt to how DVC works, keep all configuration files in the project up to date, and accept some performance overhead, since a separate process is launched for every combination of parameters. In some cases this may be inefficient; in others, such as projects that train neural networks, the cost is acceptable. Often it is not possible to run several trainings in parallel anyway, so a task queue forms naturally. With this approach, you can queue training runs that test different ideas, leave them to run overnight, and during the day check the results and see what remains to be computed.

DVC and Hydra have more functionality than discussed here; for more information, see their documentation. Readers may well find their own way of combining these tools to organize their work better.