Data Catalog by the Example of DataHub. Part I

In today’s companies, the amount of data generated and collected is growing at an astonishing rate, making it necessary to organize and manage it. Data catalogs are becoming part of information systems, providing organizations with a convenient and efficient tool for storing, accessing and managing various types of data.

The data catalog is a central repository of information about the structure, properties, and relationships between data. It allows different users to easily find, understand and use data to make decisions and perform tasks, and will be useful to data analysts, business analysts, DWH and data management specialists.

The need to implement a Data Catalog depends on the size of the company and the complexity of the business. Large companies tend either to buy expensive vendor software (the list can be found here) or to develop their own solution (as was done, for example, at Magnit and Tinkoff).

If you don’t have the resources for either, but you have already felt all the pain of “bad” data, struggled to maintain documentation in Confluence, and had trouble understanding what data you have at all, you can start with open-source projects.

The most popular open-source Data Catalog tools are:

The requirements that a data catalog must meet may vary from company to company, but there are a few basic ones:

automatic discovery of data sets from various sources;

clear user interface;

data lineage visualization;

the ability to search by keywords, business terms;

display of the data structure;

display of the data owner;

the ability to integrate with other data management tools (Airflow, Great Expectations, dbt, etc.);

access control.

Welcome to DataHub!

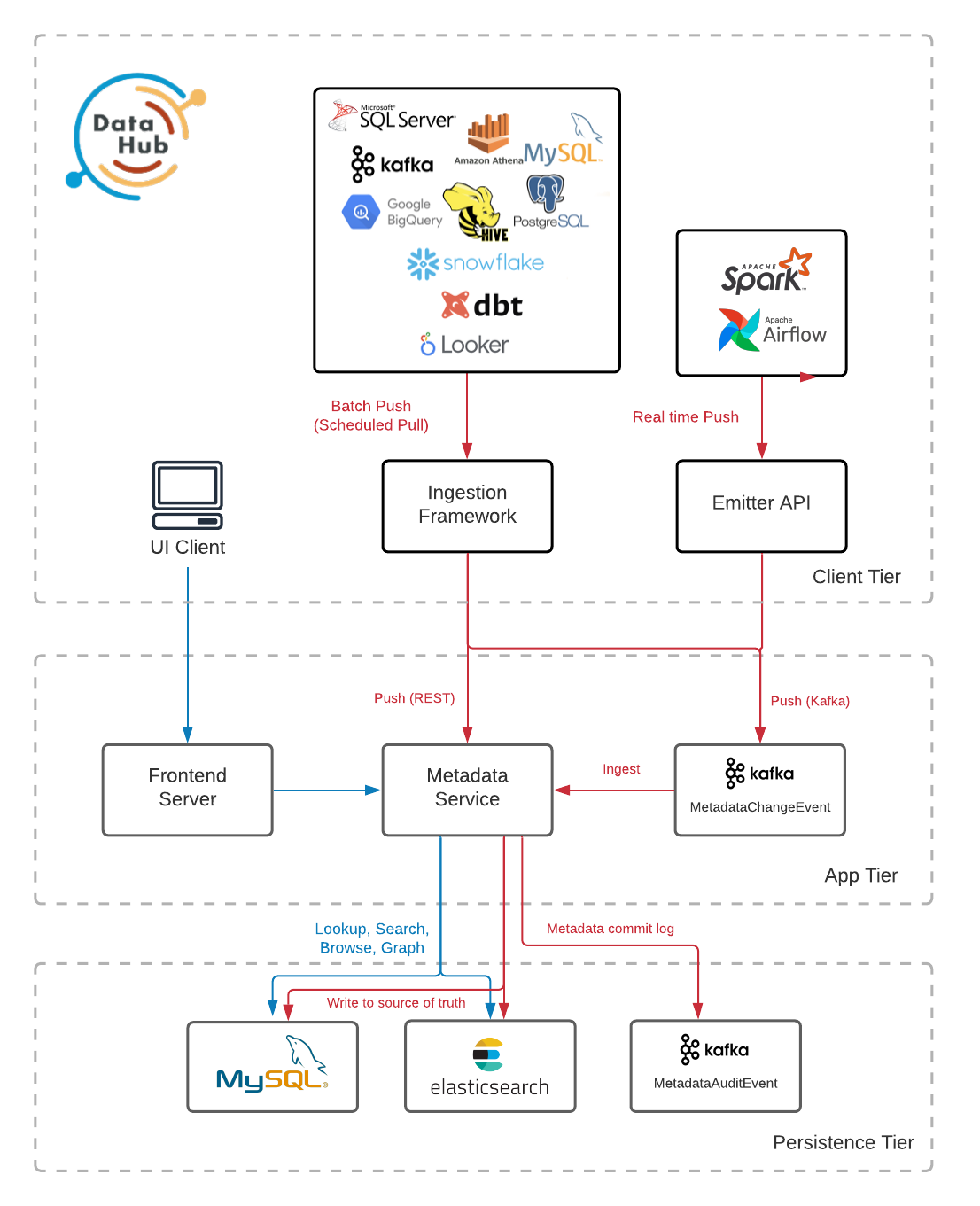

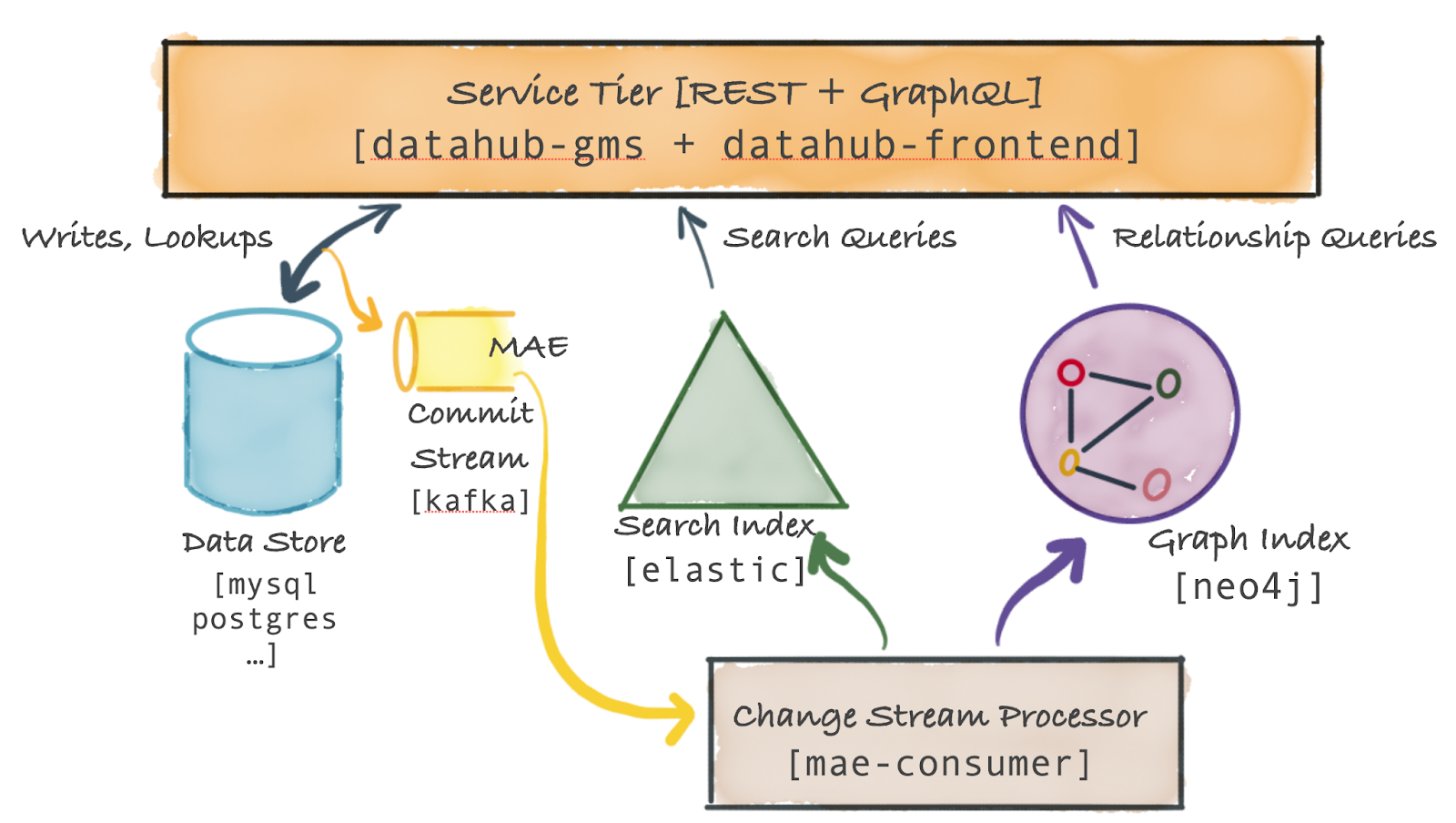

The expanded DataHub architecture is presented below:

DataHub has a flexible metadata ingestion architecture that supports both push and pull integration models.

Pull-based integration is provided through an extensible Python library for extracting metadata from external systems (Postgres, Snowflake, MySQL, etc.).

Push-based integration allows metadata to be updated as soon as it changes (e.g. Airflow, Great Expectations). We can push changes using Kafka, REST, or a Python emitter.
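As a rough illustration of the push model, the sketch below sends a single dataset description through the Python emitter that ships with the acryl-datahub package. The table name shop.public.orders, the description text, and the metadata service address http://localhost:8080 are assumptions made for the example. The dataset_urn helper is hypothetical and only reproduces the URN layout DataHub uses for datasets.

```python
def dataset_urn(platform: str, name: str, env: str = "PROD") -> str:
    """Build a DataHub-style dataset URN (hypothetical helper for illustration)."""
    return f"urn:li:dataset:(urn:li:dataPlatform:{platform},{name},{env})"


def push_description(urn: str, description: str,
                     gms_server: str = "http://localhost:8080") -> None:
    """Push one dataset-properties aspect to the metadata service over REST."""
    # Imported lazily so the URN helper above works without acryl-datahub installed.
    from datahub.emitter.mcp import MetadataChangeProposalWrapper
    from datahub.emitter.rest_emitter import DatahubRestEmitter
    from datahub.metadata.schema_classes import DatasetPropertiesClass

    emitter = DatahubRestEmitter(gms_server=gms_server)
    proposal = MetadataChangeProposalWrapper(
        entityUrn=urn,
        aspect=DatasetPropertiesClass(description=description),
    )
    emitter.emit(proposal)


if __name__ == "__main__":
    urn = dataset_urn("postgres", "shop.public.orders")  # assumed table name
    print(urn)
    # Uncomment once a DataHub instance is running and acryl-datahub is installed:
    # push_description(urn, "Orders placed in the online shop")
```

The point of the push model is visible here: the system that owns the change decides when to send it, instead of waiting for a scheduled crawl.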

Metadata goes to the so-called service layer. The central component of this layer is the metadata service.

The metadata service provides a REST API and a GraphQL API to perform metadata operations. Full-text and advanced search queries are sent to the search index, while complex graph queries, such as lineage, are sent to the graph index.

Any change to the metadata is committed to persistent storage. An event about this goes to the Metadata Change Log (Kafka), which is created by the metadata service. The stream of these events is a public API that external systems can subscribe to, allowing you to respond to changes in metadata (for example, through notifications in Slack).

The Metadata Change Log is consumed by a Spring job, which applies the corresponding changes to the graph and search indexes.
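To make this flow easier to picture, here is a toy in-memory model of it. This is not DataHub code: the class, the aspect names "description" and "upstreams", and the dataset names are all invented for the sketch. A plain queue stands in for Kafka and two dictionaries stand in for the search and graph indexes.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEvent:
    """One entry in the toy change log: an aspect of an entity was written."""
    urn: str
    aspect: str
    value: dict


class ToyMetadataService:
    """In-memory stand-in for the service layer (illustration only)."""

    def __init__(self) -> None:
        self.storage = {}                     # persistent storage: (urn, aspect) -> value
        self.change_log = deque()             # stands in for the Kafka topic
        self.search_index = defaultdict(set)  # word -> urns
        self.graph_index = defaultdict(set)   # upstream urn -> downstream urns

    def write(self, urn: str, aspect: str, value: dict) -> None:
        # First commit the change to storage, then publish an event about it.
        self.storage[(urn, aspect)] = value
        self.change_log.append(ChangeEvent(urn, aspect, value))

    def run_index_job(self) -> int:
        """Drain the change log and update both indexes; returns events processed."""
        processed = 0
        while self.change_log:
            event = self.change_log.popleft()
            if event.aspect == "description":
                for word in event.value["text"].lower().split():
                    self.search_index[word].add(event.urn)
            elif event.aspect == "upstreams":
                for upstream in event.value["urns"]:
                    self.graph_index[upstream].add(event.urn)
            processed += 1
        return processed

    def search(self, word: str) -> list:
        return sorted(self.search_index.get(word.lower(), set()))

    def downstream_of(self, urn: str) -> list:
        return sorted(self.graph_index.get(urn, set()))


if __name__ == "__main__":
    service = ToyMetadataService()
    service.write("orders", "description", {"text": "Customer orders table"})
    service.write("daily_revenue", "upstreams", {"urns": ["orders"]})
    service.run_index_job()
    print(service.search("customer"))        # full-text query goes to the search index
    print(service.downstream_of("orders"))   # lineage query goes to the graph index
```

Note that the indexes lag behind storage until the job has processed the log, which is the usual trade-off of updating indexes from an event stream.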

DataHub setup

Deploying DataHub is not difficult. Just install the necessary libraries:

pip install 'acryl-datahub'

and deploy a DataHub instance in Docker:

datahub docker quickstart

The interface can be accessed through a browser (http://localhost:9002).

By default, a root user named datahub is created with the password datahub. How you change the default user depends on how you deployed DataHub. You can read more about this here.

About supporting the Data Mesh concept

Data Mesh is a decentralized approach to data management. The data is stored in different domains and managed by subject matter experts. At the same time, the data must remain available across the organization.

DataHub allows teams to map groups of their data assets to Domains: top-level categories, such as all the data that belongs to a sales organization.

Also, teams or business groups can link their data to industry standard business terms using the Business Glossary.

Let’s look at these concepts with an example.

P.S. The example reflects my own understanding, so if I’m wrong somewhere, please correct me.

Let’s say we want to create a Financial Transactions domain. In the upper right corner we see the Govern tab:

To create a domain, click + New Domain:

Add some description to our domain and save:

We can add some data products to the domain. Usually the name corresponds to the logical purpose of the data product, for example, “Credit Transactions”, “Payment Transactions”, etc., and is also associated with an asset or set of data assets:

Next, we want to complete our understanding of the data and define a group of business terms that are relevant to our domain. Go to the Glossary tab:

Let’s create a Finance term group and add a few other terms to this group:

You can move terms to other parent groups, link multiple glossary terms using inheritance relationships, and break a term into subterms.
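The structure described here can be pictured as a small tree with extra links. The sketch below is a toy model, not DataHub’s own classes: GlossaryNode and its fields are names invented for the example, and the terms Transaction, Refund and Payments are made up. Each node has one parent group, and a term may additionally inherit from other terms.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GlossaryNode:
    """A term or a term group in a toy glossary (hypothetical, for illustration)."""
    name: str
    parent: Optional["GlossaryNode"] = None  # the group this node lives in
    inherits: List["GlossaryNode"] = field(default_factory=list)  # "is a" links

    def path(self) -> str:
        """Full dotted path from the top-level group down to this node."""
        parts = []
        node = self
        while node is not None:
            parts.append(node.name)
            node = node.parent
        return ".".join(reversed(parts))

    def move_to(self, new_parent: Optional["GlossaryNode"]) -> None:
        """Move the node to another parent group; inheritance links are kept."""
        self.parent = new_parent


if __name__ == "__main__":
    finance = GlossaryNode("Finance")
    transaction = GlossaryNode("Transaction", parent=finance)
    refund = GlossaryNode("Refund", parent=finance, inherits=[transaction])
    print(refund.path())

    payments = GlossaryNode("Payments", parent=finance)
    refund.move_to(payments)
    print(refund.path())
```

Keeping the parent group and the inheritance links separate is what lets a term be moved between groups without losing its relationships to other terms.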

Let’s create a few more terms and link them together:

We can verify that the subterms correctly display the parent term:

It should be noted that the creation of a business glossary and domains requires the development of guidelines that data management teams can follow in order to use metadata concepts in the same way.

In general, the set of metadata concepts provided by DataHub is a good starting point and allows you to create complex data models.

We can now easily associate our data asset with the domain and business terms we created.

Adding a data source

There is nothing complicated here: DataHub supports many integrations out of the box. On the Ingestion tab, we can see the available ingestion sources:

We can also add metadata using a recipe. This is the main configuration file that tells the ingestion scripts where to fetch data from and where to put it.
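A recipe has two parts, a source and a sink. The sketch below builds one for a Postgres source as a Python dictionary and, optionally, runs it through the ingestion Pipeline class from acryl-datahub. The connection values (host, database, user, password) and the metadata service address http://localhost:8080 are placeholders assumed for the example, not values from this article’s setup.

```python
import json


def build_postgres_recipe(host_port: str, database: str,
                          username: str, password: str,
                          gms_server: str = "http://localhost:8080") -> dict:
    """Return a recipe: where to read metadata from (source) and where to send it (sink)."""
    return {
        "source": {
            "type": "postgres",
            "config": {
                "host_port": host_port,
                "database": database,
                "username": username,
                "password": password,
            },
        },
        "sink": {
            "type": "datahub-rest",
            "config": {"server": gms_server},
        },
    }


def run_recipe(recipe: dict) -> None:
    """Run the recipe programmatically (needs acryl-datahub with the postgres plugin)."""
    # Imported lazily so the recipe can be built and inspected without the package.
    from datahub.ingestion.run.pipeline import Pipeline

    pipeline = Pipeline.create(recipe)
    pipeline.run()
    pipeline.raise_from_status()


if __name__ == "__main__":
    # All connection values below are placeholders.
    recipe = build_postgres_recipe("localhost:5432", "postgres",
                                   "postgres", "example-password")
    print(json.dumps(recipe, indent=2))
    # run_recipe(recipe)  # uncomment with a running DataHub and database
```

The same structure is normally kept in a YAML file and run with datahub ingest -c followed by the file name; the dictionary form is just convenient when the recipe is generated from code.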

Let’s deploy a local Postgres database with a small dataset (from here) and connect it to DataHub. Clone the repository from here.

docker compose -f "simple-postgres-container/docker-compose.yaml" up -d --build

Our database is available at localhost:5432. Now we need to connect our container to the datahub_network network and find out its IP address (otherwise I could not connect):

docker network connect datahub_network postgresdb

docker inspect -f '{{ .NetworkSettings.Networks.datahub_network.IPAddress }}' postgresdb

Choose Postgres as the data source and follow the connection steps:

Our database has successfully connected:

And if we return to the start page, we will see that information about the platform has been added to our data:

Now we can open our table and assign it a domain and an owner responsible for the asset, as well as add terms, tags and documentation:

It should be noted that at the moment one data asset can belong to only one domain.

DataHub also supports tags: informal, loosely controlled labels that help with search and discovery. They are designed to make it easy to label data without having to associate it with a broader business glossary.
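The difference between a domain, glossary terms and tags can be summarized in a toy model. This is only a sketch of the rules described above, not DataHub’s schema: the DataAsset class, the GLOSSARY set and all names in it are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical set of terms already defined in the business glossary.
GLOSSARY = {"Finance.Transaction", "Finance.Refund"}


@dataclass
class DataAsset:
    """Toy model of the metadata attached to one asset (illustration only)."""
    name: str
    domain: Optional[str] = None                   # at most one domain per asset
    owners: Set[str] = field(default_factory=set)
    terms: Set[str] = field(default_factory=set)
    tags: Set[str] = field(default_factory=set)

    def set_domain(self, domain: str) -> None:
        # Assigning a new domain replaces the old one rather than adding to it.
        self.domain = domain

    def add_term(self, term: str) -> None:
        # Glossary terms are controlled: only terms defined in the glossary are accepted.
        if term not in GLOSSARY:
            raise ValueError(f"unknown glossary term: {term}")
        self.terms.add(term)

    def add_tag(self, tag: str) -> None:
        # Tags are free-form labels, so any string is accepted.
        self.tags.add(tag)


if __name__ == "__main__":
    asset = DataAsset("public.transactions")
    asset.set_domain("Financial Transactions")
    asset.add_term("Finance.Transaction")
    asset.add_tag("needs-review")
    print(asset)
```

In short: a domain says where the asset belongs, glossary terms say what it means in agreed business language, and tags are whatever helps people find it.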

Conclusion

The data catalog is a great tool for solving problems related to data discovery, understandability, and use. Data should be well documented to show who is responsible for its authoring, detailing, management and quality.

DataHub is a great starting point for implementing a data catalog in a company, with flexible functionality and the ability to expand it to fit your needs.

In the next part, we will talk a little about access policies, data lineage, and how you can track metadata changes on the platform.