Creating a DSL in Python with the textX library

Domain-specific languages (DSLs) are a popular way to describe objects and processes in terms of business logic and to configure the structure and behavior of complex systems. A DSL can be implemented through the syntactic features of a host programming language (for example, metaprogramming tools, annotations/decorators, operator overloading, and infix functions, as in Kotlin DSLs) or with specialized development tools and compilers (such as JetBrains MPS, or general-purpose parser generators such as ANTLR or Bison). A third approach is to parse the DSL text and generate executable code from the parsed model. In this article we will look at some examples of using the textX library to create a DSL in Python that way.

textX is a tool for building domain-specific languages (DSLs) in Python. It lets you define the grammar of a language quickly and obtain a parser for that language from the grammar. textX is open source, integrates easily with other Python tools, and can be used in any project where text-based languages need to be defined and processed.

With textX, you can define various kinds of language constructs, such as keywords, identifiers, numbers, and strings, and specify their properties, such as data types or value constraints. The grammar of a language is written in a text file using textX's own meta-language (registered as the textX language and associated with the *.tx file mask). From the grammar textX builds a metamodel, which is used to check the syntactic correctness of a DSL model and to build an object tree for use in a Python code generator. Such a generator can produce Python code, source code in any other programming language, a PDF document, or a dot file for Graphviz that visualizes the metamodel.

textX is suitable for both simple and complex DSLs: for example, configuration file formats or domain-specific languages for business rules, scientific computing, natural language processing, and many other fields.

To use textX, first of all, install the necessary modules:

pip install textX click

After installation, the textx console utility becomes available. It is used to check the correctness of metamodels and DSL models (against the grammar of their language). textX uses setuptools entry points to discover registered components, so its capabilities can be extended by adding languages (list them with textx list-languages) and generators (textx list-generators). Extensions are installed as ordinary packages via pip install, for example:

textx-jinja – use the jinja templating engine to convert a DSL model into a text document (e.g. HTML)

textx-lang-questionnaire – DSL for defining questionnaires

PDF-Generator-with-TextX – PDF generator based on DSL description

We will create our own language without additional extensions in order to see the whole process. Let’s start with a simple task: interpreting a text file that consists of lines of the form “Hello <name>” and generating executable Python code that prints personalized greetings. We will put the language description and the generator in the hello package, and define a setup.py file that registers the entry points for the language metamodel and the code generator.

The description (hello.tx) might look like this:

    DSL:
        hello*=Hello
    ;

    Hello:
        'Hello' Name
    ;

    Name:
        name=/[A-Za-z\ 0-9]+/
    ;

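The definition uses the following conventions:

hello*=Hello – zero or more Hello elements, collected in the hello list (the list is empty if none are present)

hello+=Hello – one or more elements, also collected in a list

hello?=Hello – a boolean assignment: hello is True if the match succeeds and False otherwise (to make an element optional, write (hello=Hello)?, which leaves hello as None when nothing matches)

hello=Hello – exactly one element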

The definition may also include string constants, regular expressions, and groupings of them (for example, a vertical bar denotes a choice between alternatives) with modifiers: +, ?, and * have their usual regular-expression meaning, and # allows the grouped elements to appear in arbitrary order.

Let’s create a language definition in hello/__init__.py:

    import os.path
    from os.path import dirname

    from textx import language, metamodel_from_file


    @language('hello', '*.hello')
    def hello():
        """Sample hello language"""
        return metamodel_from_file(os.path.join(dirname(__file__), 'hello.tx'))

Here we define a new language with the id hello, which applies to any file matching the mask *.hello. Next, let’s add setup.py:

    from setuptools import setup, find_packages

    setup(
        name="hello",
        packages=find_packages(),
        # Ship the grammar file together with the Python code
        package_data={"": ["*.tx"]},
        version='0.0.1',
        # The package itself only imports textX
        install_requires=["textX"],
        description='Hello language',
        entry_points={
            'textx_languages': [
                'hello = hello:hello'
            ]
        }
    )

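And install our module (run this from the directory that contains setup.py; calling python setup.py install directly is deprecated in current setuptools):

pip install .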

And check if the language is installed:

    textx list-languages
    textX (*.tx) textX[3.1.1] A meta-language for language definition
    hello (*.hello) hello[0.0.1] Sample hello language
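The registration can also be inspected from Python. The sketch below uses only the standard library to list the entry point names in the textx_languages group declared in setup.py. The helper name registered_names is ours, and the result depends on what is installed in the current environment, so no particular output is shown.

```python
from importlib.metadata import entry_points


def registered_names(group):
    """Return the sorted entry point names registered under `group`."""
    eps = entry_points()
    if hasattr(eps, "select"):
        # Python 3.10+: selectable entry point collection
        selected = eps.select(group=group)
    else:
        # Python 3.8/3.9: plain dict mapping group name -> entry points
        selected = eps.get(group, [])
    return sorted(ep.name for ep in selected)


print(registered_names("textx_languages"))
```

If the hello package is installed, its language name should appear in this list next to the languages that ship with textX.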

Now let’s create a test model based on the grammar (test.hello):

    Hello World
    Hello Universe

Let’s check the correctness of the metamodel and our DSL model (for compliance with the metamodel):

    textx check hello/hello.tx
    hello/hello.tx: OK.
    textx check test.hello
    test.hello: OK.

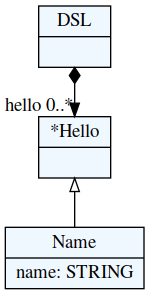

We can also get a visual diagram of the structure of the DSL (the description can be generated for Graphviz or for PlantUML):

    textx generate hello/hello.tx --target dot
    dot -Tpng -O hello/hello.dot

Now let’s add the ability to generate code, but first we will programmatically parse a DSL model using the metamodel:

    from textx import metamodel_from_file

    metamodel = metamodel_from_file('hello/hello.tx')
    dsl = metamodel.model_from_file('test.hello')

    for entry in dsl.hello:
        print(entry.name)

This prints the list of names (World, Universe) that belong to the Hello entries (the entries themselves are available through the hello list on the root object of the parsed model). Now let’s add code generation. To do this, we add a function with the @generator decorator to hello/__init__.py:

    from textx import generator


    @generator('hello', 'python')
    def python(metamodel, model, output_path, overwrite, debug, **custom):
        """Generate python code"""
        # Default to the package directory when no output path is given
        if output_path is None:
            output_path = dirname(__file__)
        target = os.path.join(output_path, "hello.py")
        with open(target, "wt") as p:
            for entry in model.hello:
                p.write(f"print('Generated Hello {entry.name}')\n")
        print(f"Generated file: {target}")

The generator is created for the hello language and will be available as the target python. Register it in entry_points in setup.py:

    'textx_generators': [
        'python = hello:python'
    ]

And now let’s run the code generation:

textx generate test.hello --target python

The generation result will look like this:

    print('Generated Hello World')
    print('Generated Hello Universe')
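One way to make such a generator easier to test is to keep the rendering step separate from file output. The sketch below is a standalone variant of that idea, not the registered generator: render_hello_script is a hypothetical helper that takes plain names instead of a textX model, and it uses repr() so that a name is always emitted as a valid string literal.

```python
import contextlib
import io


def render_hello_script(names):
    """Return Python source that prints one greeting per name."""
    # repr() produces a correctly quoted string literal for any name.
    lines = [f"print({'Generated Hello ' + name!r})" for name in names]
    return "\n".join(lines) + "\n"


source = render_hello_script(["World", "Universe"])
print(source, end="")

# Run the generated code and capture what it prints.
captured = io.StringIO()
with contextlib.redirect_stdout(captured):
    exec(source)
print(captured.getvalue(), end="")
```

For the two names from test.hello the rendered source matches the generated file shown above, and running it prints the two greetings.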

Now let’s complicate the task a bit and implement a state machine. A state machine description in our DSL might look like this:

    states {
        RED,
        YELLOW,
        RED_WITH_YELLOW,
        GREEN,
        BLINKING_GREEN
    }

    transitions {
        RED -> RED_WITH_YELLOW (on1)
        RED_WITH_YELLOW -> GREEN (on2)
        GREEN -> BLINKING_GREEN (off1)
        BLINKING_GREEN -> YELLOW (off2)
        YELLOW -> RED (off3)
    }

Here we list the possible states and the transitions between them (with transition IDs). The grammar can be defined as follows:

    StateMachine:
        'states' '{'
            states+=State
        '}'
        'transitions' '{'
            transitions+=Transition
        '}'
    ;

    State:
        id=ID (',')?
    ;

    TransitionName:
        /[A-Za-z0-9]+/
    ;

    Transition:
        from=ID '->' to=ID '(' name=TransitionName ')'
    ;

The grammar uses the optional modifier for the comma after a state ID. The resulting metamodel can be used directly by applying it to the description of the traffic light states:

    from textx import metamodel_from_file

    metamodel = metamodel_from_file('statemachine/statemachine.tx')
    dsl = metamodel.model_from_file('test.sm')


    # Description of a single transition
    class Transition:
        state_from: str
        state_to: str
        action: str

        def __init__(self, state_from, state_to, action):
            self.state_from = state_from
            self.state_to = state_to
            self.action = action

        def __repr__(self):
            return f"{self.state_from} -> {self.state_to} on {self.action}"


    # Build lists rather than lazy map objects: states is indexed below and
    # transitions is iterated more than once
    states = [state.id for state in dsl.states]
    # 'from' is a Python keyword, so the attribute is read with getattr
    transitions = [Transition(getattr(t, 'from'), t.to, t.name)
                   for t in dsl.transitions]

    # Extract the action names (for the menu)
    actions = [t.action for t in transitions]

And now we implement the state machine:

    current_state = states[0]

    while True:
        print(f'Current state is {current_state}')
        print('0. Exit')
        for p, a in enumerate(actions):
            print(f'{p + 1}. {a}')
        i = int(input('Select option: '))
        if i == 0:
            break
        if 0 < i <= len(actions):
            a = actions[i-1]
            for t in transitions:
                if t.state_from == current_state and t.action == a:
                    current_state = t.state_to
                    break

An alternative solution would be to generate, from the DSL, a transition function that takes an enum value from the state space and a transition (from the list of possible ones) and either returns the new state or raises an exception if no such transition is defined.
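A rough sketch of that alternative is shown below. To keep it self-contained it works on plain lists of state names and (from, to, action) tuples rather than on a parsed textX model. The names render_state_machine, State, TRANSITIONS, and next_state are our own, and raising ValueError for an undefined transition is one possible choice.

```python
def render_state_machine(states, transitions):
    """Return Python source defining a State enum and a next_state() function."""
    lines = ["from enum import Enum", "", "", "class State(Enum):"]
    lines += [f"    {name} = {name!r}" for name in states]
    lines += ["", "", "TRANSITIONS = {"]
    lines += [
        f"    (State.{src}, {action!r}): State.{dst},"
        for src, dst, action in transitions
    ]
    lines += [
        "}",
        "",
        "",
        "def next_state(current, action):",
        "    try:",
        "        return TRANSITIONS[(current, action)]",
        "    except KeyError:",
        "        raise ValueError(",
        "            f'no transition {action!r} from {current.name}'",
        "        ) from None",
    ]
    return "\n".join(lines) + "\n"


STATES = ["RED", "YELLOW", "RED_WITH_YELLOW", "GREEN", "BLINKING_GREEN"]
EDGES = [
    ("RED", "RED_WITH_YELLOW", "on1"),
    ("RED_WITH_YELLOW", "GREEN", "on2"),
    ("GREEN", "BLINKING_GREEN", "off1"),
    ("BLINKING_GREEN", "YELLOW", "off2"),
    ("YELLOW", "RED", "off3"),
]

# Execute the generated source in its own namespace and pick out the results.
namespace = {}
exec(render_state_machine(STATES, EDGES), namespace)
State, next_state = namespace["State"], namespace["next_state"]

print(next_state(State.RED, "on1").name)
```

In a real generator the rendered source would be written to a file, as in the hello example, instead of being executed in place.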

Similarly, a more complex grammar can be implemented both for representing data and for describing algorithmic language constructs (such as branches or loops).
