Why is the kernel community replacing iptables with BPF?

Author’s Note: This is a post by longtime Linux kernel networking developer and creator of the Cilium project, Thomas Graf.

The Linux kernel development community recently announced bpfilter, which will replace the long-standing in-kernel implementation of iptables with high-performance network filtering powered by Linux BPF, while guaranteeing a seamless transition for Linux users.

From its humble beginnings as the packet filtering engine at the heart of popular tools like tcpdump and Wireshark, BPF has evolved into a rich framework for extending Linux in a highly flexible way, without sacrificing key properties such as performance and security. This powerful combination has led forward-looking users of Linux kernel technology such as Google, Facebook and Netflix to choose BPF for a wide range of use cases, from network security and load balancing to performance monitoring and troubleshooting. Brendan Gregg of Netflix was the first to call BPF “superpowers for Linux”. In this post, we’ll explore how these “superpowers” make long-standing kernel subsystems like iptables obsolete, while enabling new in-kernel use cases that few could have imagined before.

Having spent the last 15 years in the Linux kernel community writing code for many subsystems, including the TCP/IP stack and iptables, I’ve had the opportunity to watch the development of BPF up close. I soon realized that BPF was not just another feature, but a fundamental technological shift that would eventually change almost every aspect of Linux networking and security. I have contributed to BPF and become one of its biggest supporters, working alongside Alexei Starovoitov and Daniel Borkmann, who now maintain BPF. From this point of view, the transition from iptables to bpfilter is simply the next logical step in BPF’s journey to modernize the Linux networking stack. To understand why this transition is so exciting, let me take you on a brief tour of the history of iptables in the kernel.

iptables and the basics of sequential filtering

iptables has been the go-to tool for implementing firewalls and packet filters on Linux for many years. iptables and its predecessor ipchains have been part of my personal Linux journey from the very beginning, first as a user and then as a kernel developer. Over the years, iptables has been both a blessing and a curse: a blessing for its flexibility and quick fixes, and a curse when debugging an iptables setup with 5K rules in an environment where several system components are fighting over who gets to install which iptables rules.

Jérôme Petazzoni once overheard a quote that is perfectly true:

Overheard: “In any team you need a tank, a healer, a damage dealer, someone with crowd control abilities and another one who knows iptables”

— Jérome Petazzoni (@jpetazzo) June 27, 2015

When iptables came into existence 20 years ago, replacing its predecessor ipchains, its firewall functionality was designed with a simple scope:

Protecting local applications from receiving unwanted network traffic (INPUT chain)

Protecting local applications from sending unwanted network traffic (OUTPUT chain)

Filtering network traffic forwarded/routed by a Linux system (FORWARD chain).

Network speeds were low in those days. Remember the sound a modem made when dialing a number? That was the era in which iptables was originally designed and developed. The standard practice for implementing access control lists (ACLs), as iptables does, was a sequential list of rules: each packet received or transmitted is matched against the list of rules, one by one.

However, linear processing has an obvious major drawback: packet filtering overhead can increase linearly with the number of rules added.
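To make the linear cost concrete, here is a minimal Python sketch of first-match evaluation over a sequential rule list. It is a simplified model, not iptables code: the `Rule` type, its fields, and the default `DROP` policy are assumptions made for illustration, and `evaluate` also reports how many rules were checked before a verdict was reached.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    # Hypothetical, simplified rule: match on source network and destination port.
    src: IPv4Network
    dport: Optional[int]  # None matches any port
    verdict: str          # "ACCEPT" or "DROP"


def evaluate(rules, src_ip, dport, default="DROP"):
    """Walk the rule list in order; the first matching rule decides.

    Returns (verdict, number_of_rules_checked)."""
    addr = IPv4Address(src_ip)
    for checked, rule in enumerate(rules, start=1):
        if addr in rule.src and (rule.dport is None or rule.dport == dport):
            return rule.verdict, checked
    # No rule matched: apply the (assumed) default policy after checking every rule.
    return default, len(rules)


# 5000 per-address DROP rules, followed by one rule accepting port 80.
rules = [Rule(IPv4Network(f"10.0.{i // 256}.{i % 256}/32"), None, "DROP") for i in range(5000)]
rules.append(Rule(IPv4Network("0.0.0.0/0"), 80, "ACCEPT"))

print(evaluate(rules, "192.168.1.1", 80))  # ('ACCEPT', 5001)
```

A legitimate packet that only matches the final rule pays for a comparison against every rule in front of it, so each rule added to the list makes every such packet slower.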

Intermediate workaround: ipset

Time passed, network speeds increased, and iptables rule sets grew from a dozen rules to thousands. Traversing iptables rules sequentially became unbearable in terms of performance and latency.

The community quickly identified the bottleneck: long lists of rules that denied or allowed specific combinations of IP addresses and ports. This led to the creation of ipset. ipset lets you compress a list of rules matching IP addresses and/or IP/port combinations into a hash table, reducing the total number of iptables rules. It has served as a workaround ever since.
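The same kind of policy can be sketched with an ipset-style set in front of a short rule list. This is a hypothetical Python model in the spirit of an ipset of type `hash:ip` referenced by a single `-m set --match-set ... src -j DROP` rule; the set name `blocklist`, the default `DROP` policy, and both functions are made up for illustration. Set membership is a hash lookup, so the number of rules checked no longer depends on how many addresses are blocked.

```python
from ipaddress import IPv4Address


def build_blocklist(ips):
    """Collapse many per-address DROP rules into one hash-based set
    (hypothetical stand-in for an ipset of type hash:ip)."""
    return frozenset(IPv4Address(ip) for ip in ips)


def evaluate(blocklist, src_ip, dport):
    """Return (verdict, number_of_rules_checked) for a two-rule chain."""
    # Rule 1: drop any source in the set; one average-case O(1) hash lookup.
    if IPv4Address(src_ip) in blocklist:
        return "DROP", 1
    # Rule 2: accept traffic to port 80.
    if dport == 80:
        return "ACCEPT", 2
    # No rule matched: fall back to an assumed DROP policy.
    return "DROP", 2


# The same 5000 addresses that would otherwise need 5000 separate rules.
blocklist = build_blocklist(f"10.0.{i // 256}.{i % 256}" for i in range(5000))

print(evaluate(blocklist, "192.168.1.1", 80))  # ('ACCEPT', 2)
print(evaluate(blocklist, "10.0.19.135", 22))  # ('DROP', 1)
```

The rule list stays short, but the approach only helps when the policy boils down to "is this address (or address/port pair) in a set", which is exactly the case ipset was built for.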

Unfortunately, ipset is not the answer to every problem. A prime example is kube-proxy, a Kubernetes component that uses iptables rules and -j DNAT to load-balance services. It installs several iptables rules for each backend behind a service. With every service added to Kubernetes, the list of iptables rules that must be traversed keeps growing.
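In its iptables mode, kube-proxy spreads connections across a service’s backends with a cascade of rules that use the `statistic` match in random mode: with n backends, rule i (counting from 0) matches with probability 1/(n - i), so the last rule always matches. The Python sketch below is a model of that cascade, not kube-proxy code; the function names are hypothetical. It checks that every backend is picked with probability 1/n and computes how many of these rules a packet is evaluated against on average.

```python
from fractions import Fraction


def endpoint_rules(n):
    """Match probability of each rule in a kube-proxy-style cascade for n backends:
    rule i matches with probability 1/(n - i), so the last rule always matches."""
    return [Fraction(1, n - i) for i in range(n)]


def selection_probabilities(probs):
    """Probability that each rule is the one that fires, given that rules are
    evaluated in order and a rule is only reached if no earlier one matched."""
    reach = Fraction(1)  # probability that evaluation reaches the current rule
    result = []
    for p in probs:
        result.append(reach * p)
        reach *= 1 - p
    return result


def expected_rules_checked(probs):
    """Average number of rules a packet is evaluated against before one fires."""
    reach = Fraction(1)
    total = Fraction(0)
    for p in probs:
        total += reach  # this rule is evaluated only if no earlier rule fired
        reach *= 1 - p
    return total


print([str(p) for p in selection_probabilities(endpoint_rules(3))])  # ['1/3', '1/3', '1/3']
print(expected_rules_checked(endpoint_rules(3)))  # 2
```

The selection is uniform, but the average number of rules evaluated is (n + 1) / 2, so a service with 1,000 backends costs about 500 rule evaluations per new connection in this chain alone. A hash set cannot replace this cascade, because the rules encode a probabilistic choice rather than a membership test.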

A recent KubeCon talk examined kube-proxy performance in detail. It presents measurements showing unpredictable latency and degraded performance as the number of services grows. It also highlights another major weakness of iptables: the lack of incremental updates. The entire list of rules has to be replaced every time a new rule is added. As a result, installing 160K iptables rules representing 20K Kubernetes services takes 5 hours.
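A back-of-the-envelope sketch shows why full replacement hurts. Assume, purely for illustration, that the 160K rules are spread evenly at 8 rules per service (160K / 20K) and that adding a service rewrites the whole table. The k-th addition then writes 8k rules, for a total of 8 · N(N + 1) / 2 over N services. The helper names are hypothetical, and this counts rule writes only; it is not a model of the 5-hour measurement.

```python
def rules_written_full_replace(services, rules_per_service):
    """Total rule writes when every service addition rewrites the entire table.

    The k-th addition writes k * rules_per_service rules, so the total is
    rules_per_service * (1 + 2 + ... + services)."""
    return rules_per_service * services * (services + 1) // 2


def rules_written_incremental(services, rules_per_service):
    """Total rule writes when each addition only installs its own rules."""
    return rules_per_service * services


# Assumption: 8 rules per service across 20K services (160K rules in total).
print(f"{rules_written_full_replace(20_000, 8):,}")  # 1,600,080,000
print(f"{rules_written_incremental(20_000, 8):,}")   # 160,000
```

Under these assumptions, full replacement performs roughly 10,000 times more rule writes than incremental updates would, and the gap grows linearly with the number of services.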

IP/port-based mechanisms in general have many other obvious drawbacks, especially in the age of application containers. Containers are frequently deployed and torn down, which can make individual IP addresses short-lived. An IP address may be used by one container for a few seconds and then reused by another a couple of seconds later. This puts a strain on systems that rely on IP addresses for security filtering, because every node in the cluster must know the latest IP-to-container mapping at all times. While this is manageable within a single cluster, it becomes an incredibly hard problem across clusters. A detailed discussion of this issue is beyond the scope of this article, so we will save it for a future post.
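The hazard can be illustrated with a toy model in Python: a remote node enforces an IP-based allow rule using its own copy of the IP-to-container mapping. The `Node` class, the workload names, and the address `10.0.0.5` are all hypothetical. Until the node receives the updated mapping, a new container that inherits a departed container’s IP also inherits its permissions.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical node enforcing an IP-based policy from its own,
    possibly stale, copy of the IP -> workload mapping."""
    ip_to_workload: dict = field(default_factory=dict)

    def allows(self, src_ip, allowed_workloads):
        # Unknown IPs map to None, which is not in a set of workload names.
        return self.ip_to_workload.get(src_ip) in allowed_workloads


cluster_state = {"10.0.0.5": "frontend"}
remote = Node(dict(cluster_state))  # the remote node's synced copy

# The frontend container exits and an unrelated container reuses its IP.
cluster_state["10.0.0.5"] = "untrusted-job"

print(remote.allows("10.0.0.5", {"frontend"}))  # True: stale mapping still grants access
remote.ip_to_workload.update(cluster_state)     # the mapping update finally arrives
print(remote.allows("10.0.0.5", {"frontend"}))  # False
```

The window between the IP being reused and every node learning about it is exactly where IP-based filtering makes the wrong decision, and that window widens as the mapping has to propagate further, for example across clusters.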

BPF Development

In recent years, BPF has been developing at a breakneck pace, unlocking capabilities that were previously out of reach inside the kernel. This is made possible by the incredibly powerful and efficient programmability that BPF provides. Tasks that previously required building and recompiling a custom kernel can now be accomplished with efficient BPF programs within the safe confines of the BPF sandbox.

Below is a list demonstrating the use of BPF in various projects and companies:

Cilium brings BPF to the container world and provides powerful and efficient networking, security, and L3-L7 load balancing. Read the introduction to Cilium to get started.

“What @ciliumproject does with eBPF and XDP is the cleanest networking plugin I’ve seen, big respect”

— jessie frazelle (@jessfraz) August 16, 2017

Facebook presented its work on BPF/XDP-based load balancing to replace IPVS, which also includes DDoS mitigation logic. While IPVS is an attractive next step beyond iptables, Facebook has already moved from IPVS to BPF, seeing roughly a 10x performance improvement.

“The performance of eBPF is amazing! A simple ingress firewall I wrote using XDP handles 11Mpps. Time for new optimizations!”

— Diptanu Choudhury (@diptanu) August 21, 2017

Netflix, in particular Brendan Gregg, has harnessed BPF for performance profiling and tracing. The bcc project exposes BPF’s capabilities to users, for example to generate flame graphs.

More information, including many examples of using BPF for application tracing, can be found on Brendan Gregg’s blog.

“As I have told many people looking for a job in systems engineering: BPF experience is in demand, and getting more so.”

— Brendan Gregg (@brendangregg) May 20, 2017.

Google is working on bpfd, which enables powerful Linux tracing of remote targets using eBPF. Judging by their active involvement in BPF, they also appear to be considering migrating various internal projects to BPF.

Cloudflare uses BPF to mitigate DDoS attacks and has published several blog posts and given several public talks on the subject.

Suricata is an IDS that has started using BPF and XDP to replace nfqueue, an iptables-based framework for passing packets to user space for inspection. More information can be found in this Kernel Recipes talk.

Open vSwitch is working on an eBPF-enabled datapath.

There are too many examples to list them all in this post. A more complete list of projects using BPF can be found in the BPF handbook.

One BPF to rule them all

The most recent development in the evolution of BPF is an exciting proposal to replace the core of iptables with BPF in a way that is completely transparent to users: existing iptables client binaries and libraries will continue to work.

You can follow the progress of the discussion on the Linux kernel mailing list. The proposal comes from Daniel Borkmann (Covalent), networking maintainer David Miller (Red Hat), and Alexei Starovoitov (Facebook). It was covered in an LWN article, which provides an excellent summary of the initial discussion.

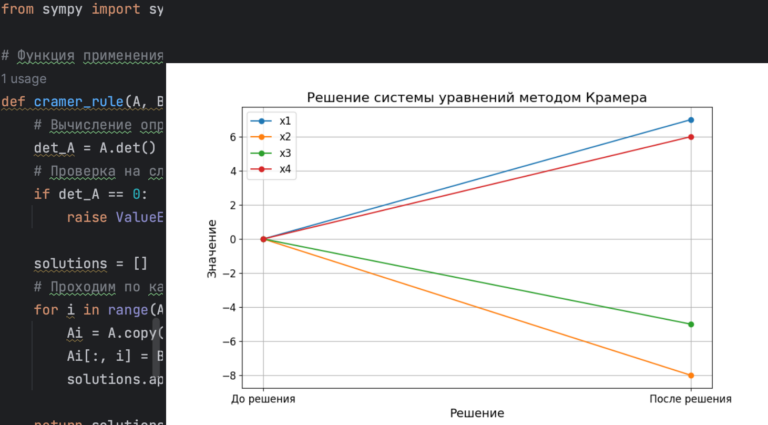

A graph presented by Quentin Monnet at FRnOG 30 shows some initial measurements of bpfilter versus iptables and nftables, covering both the software BPF implementation and a test with hardware offload.

These early performance numbers look promising and testify to the power of BPF. It should be noted, however, that bpfilter and BPF by themselves will not solve the performance problems that stem from iptables’ use of sequential rule lists. Solving those requires using BPF natively, as the Cilium project does.

How did the kernel development community react?

The Linux kernel mailing lists are known for their heated flame wars. Did one break out in this case? No. In fact, core iptables maintainers immediately came forward with proposals of their own that build on BPF.

Florian Westphal proposed a framework that would run on top of bpfilter and translate nftables to BPF. This would keep the domain-specific nftables language while gaining all the benefits of the BPF runtime: its JIT compiler, hardware offload, and tooling.

Pablo Neira Ayuso appears to have been working on a similar proposal and published a patch series that also translates nftables to BPF. The main difference seems to be that Pablo intended to perform the translation in the kernel. The community has since agreed that any loading of BPF programs must go through user space and the BPF verifier to guarantee safe BPF behavior.

Summary

I consider BPF the most exciting Linux development in recent years. We have only scratched the surface of its potential, and it keeps evolving. Replacing the core of iptables with BPF is a logical first step. The real shift will come from building BPF-native tools and moving away from traditional IP/port-oriented designs.

This material was prepared as part of the Linux Administrator specialization.
