Why deepfakes don’t bode well for the future

Researchers at the SAND Lab at the University of Chicago say that voice cloning programs available to the general public are progressing alarmingly fast. Voice deepfakes created with such tools can fool both people and voice-controlled smart devices. Voice is not the only concern: other kinds of deepfakes pose a threat as well. Deepfakes are already being used in advertising, fashion, journalism and education. However, more than 90% of fakes are created to damage someone’s reputation, for example through pornographic videos. According to an estimate made in 2019, total business losses due to deepfakes would approach the $250 million mark in 2020.

Examples of deepfake attacks

- Utility CEO attack: This 2019 deepfake scam is the first known attack using this technology and illustrates its dark side. As in a classic case of corporate extortion, the CEO of an energy firm received a phone call that appeared to come from his boss. In fact, it was a fake voice generated by artificial intelligence, but this was impossible to tell by ear, so the CEO calmly complied with the order to transfer $243,000 within an hour. The program was able to imitate not just the person’s voice, but also his tonality, intonation and German accent. The truth came to light when the scammers tried the same trick a second time. Unfortunately, the police could neither identify the criminals nor recover the money.

- Attack on a tech company: In this failed audio deepfake attempt in 2020, a tech company employee received a strange voicemail from someone whose voice was very similar to that of the company’s CEO. The message contained a request for “immediate assistance to complete an urgent business transaction.” The employee became suspicious and reported the message to the firm’s legal department. While this deepfake attack was ultimately unsuccessful, it is a prime example of the type of attack we expect to see in large volumes as technology advances and deepfake tools become more readily available. Emails and voice deepfakes turned out to be part of a deliberate fraudulent operation that involved at least 17 people from different countries, according to UAE police.

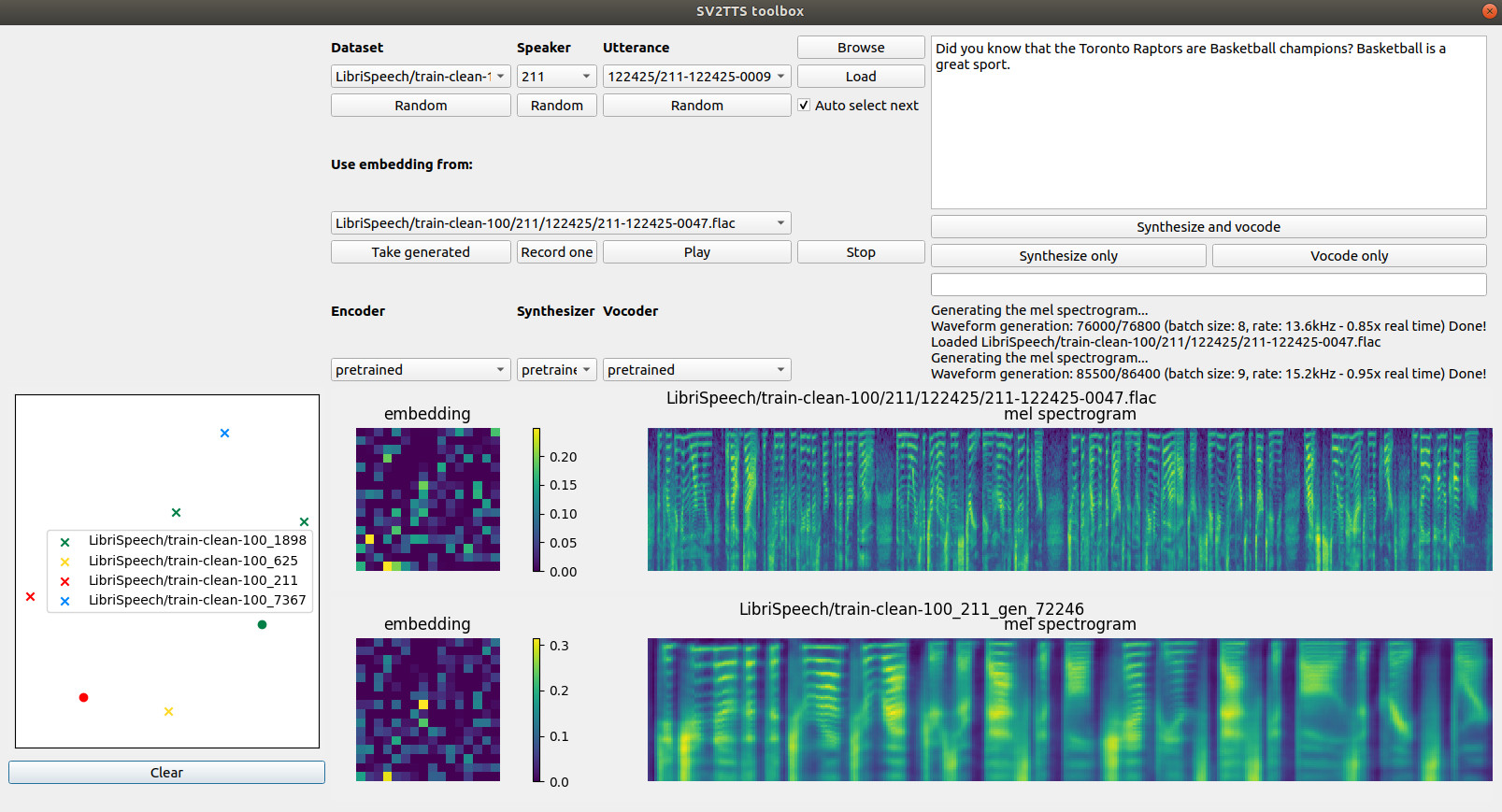

A team of experts from the SAND Lab (Security, Algorithms, Networking and Data Lab) tested voice cloning programs available on GitHub to see whether they could break through the voice recognition defenses of Alexa, WeChat and Microsoft Azure. Among the many programs, the researchers’ attention was drawn to SV2TTS, which its creators describe as “a tool for cloning voice in real time.” According to the developers, SV2TTS can synthesize full-fledged voice deepfakes from just 5 seconds of original voice recording. Voices synthesized with SV2TTS bypassed Azure’s protection in 30% of cases, and Alexa and WeChat were deceived even more often, in 63% of cases. An experiment with 200 volunteers produced equally striking results: in about half of the cases, people could not distinguish voice deepfakes from real voices.

In addition, the researchers concluded that, for some reason, speech synthesizers are much better at imitating female voices, as well as the speech of people for whom English is not a first language. According to the researchers, defenses against synthesized speech are developing more slowly than voice imitation technologies. In the wrong hands, such programs risk becoming a tool for carrying out criminal schemes. Both real people and smart devices can be targets of an attack. For example, WeChat uses voice recognition to grant access to paid features, such as making transactions in third-party applications like Uber or New Scientist.

The researchers’ interest in voice deepfakes was originally sparked by news of voice imitation technology being used to carry out large financial frauds, such as those described above.

Jake Moore, a cybersecurity expert at ESET, believes that voice and video deepfakes pose a real threat to information security, the safekeeping of money and business relationships at a global level. More businesses will fall victim to high-tech crime for the foreseeable future, Moore said. Fortunately, as awareness of the fraudulent use of AI has grown, so has demand for companies like Pindrop Security, which help customers identify calls made with voice simulation technology.

And what about deepfake photos and videos?

These are often used for pornographic blackmail. Celebrities are regularly targeted: the victims include Natalie Portman, Taylor Swift, Gal Gadot and others. But it is not only famous people whose appearance and voice are faked.

In 2021, India saw a rise in video call fraud in which a woman strips for the camera and encourages a man to do the same. The victim is recorded on video and then blackmailed, with extortion demands reaching 2 million rupees. Police in the city of Ahmedabad found that in 60% of cases, victims were speaking not to real women but to deepfakes. The scammers use text-to-speech programs and, presumably, video taken from porn sites.

A common fear associated with deepfakes is that they will be used for political purposes. Such examples already exist, and this is not about the famous Obama deepfake in which he calls Trump names. In February 2020, the day before the Delhi Legislative Assembly elections, deepfake videos of the head of one of India’s leading nationwide parties went viral on WhatsApp. In one of them, he spoke in Haryanvi, a dialect of Hindi. This video was used to dissuade migrant workers from voting for another party. The deepfakes were distributed to 5,800 WhatsApp groups in Delhi and the National Capital Region, reaching approximately 15 million people.

Another interesting example is a video of former Belgian Prime Minister Sophie Wilmès published by Extinction Rebellion Belgium. This deepfake is a modified version of an earlier address to the nation about the pandemic. In the fictional speech, she says that recent global epidemics are directly related to “the exploitation and destruction of the natural environment by people.” AI was used to reproduce her voice and manner of speech.

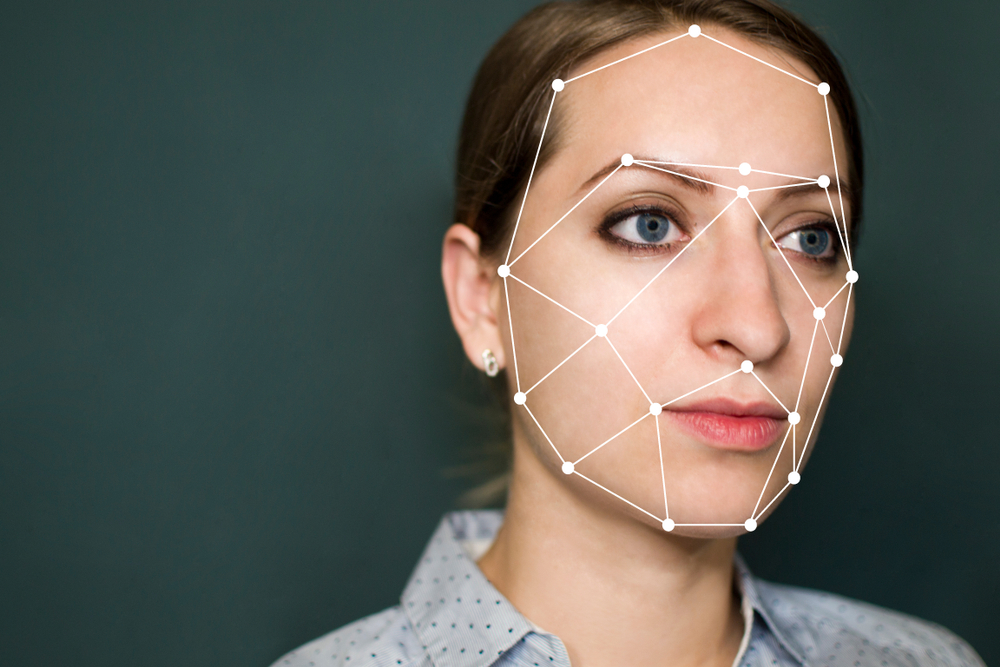

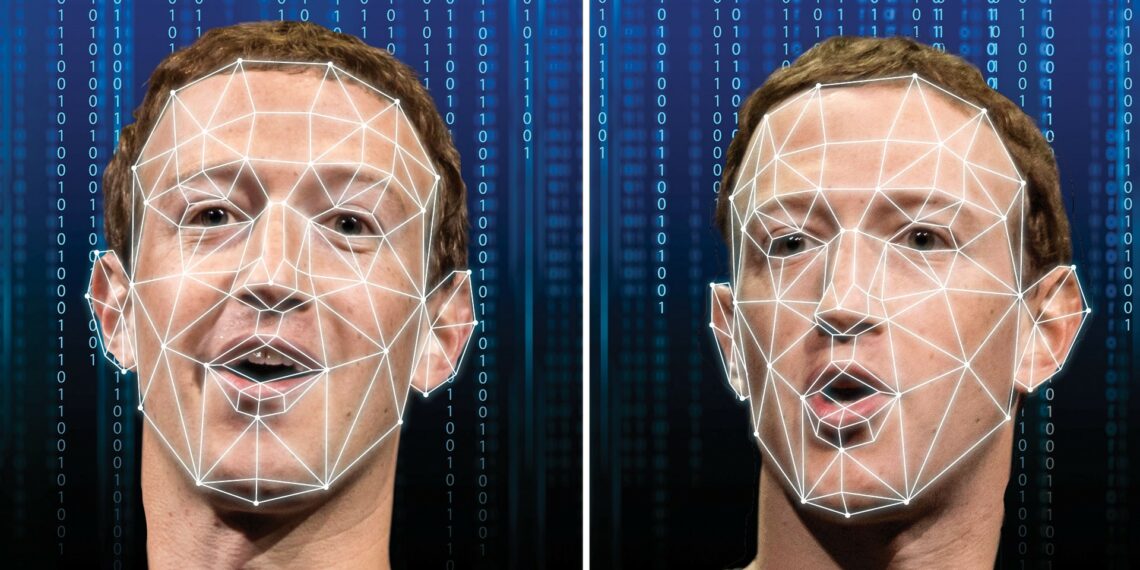

Can you tell a deepfake from a real person?

Previously, a deepfake could be easily spotted by a number of telltale signs, but these are no longer reliable:

- Unnatural intonation of speech

- Robotic tone

- Unnatural blinking or movement of the person in the video

- Lip movements are out of sync with speech

- Poor audio or video quality

So yes, it is possible, but it is now difficult. Countering deepfakes is a cat-and-mouse game, because scammers are improving their technology too. For example, in 2018 it was noticed that people in deepfakes did not blink, or blinked strangely. Improved models took this into account immediately.

To spur the creation of technologies for detecting deepfakes, Facebook and Microsoft organized the Deepfake Detection Challenge. In 2020, more than 2,000 people took part in it. The developers achieved more than 82% recognition accuracy on a standard test dataset, but on a harder one (with distracting elements such as overlaid text) accuracy dropped to just over 65%.

Deepfake detection software can be tricked by slightly modifying the input data, so researchers continue to work in this area. In June 2021, scientists from Facebook and Michigan State University announced a new development. Usually detectors determine which of the known AI models generated the deepfake. The new solution is better suited for practical applications: it can recognize forgeries created using methods that the algorithm did not encounter during training.
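To make the point about slightly modified input concrete, here is a minimal sketch in Python. It does not reproduce any real detector: the linear model, its weights, the three-number feature vector and the function names are all hypothetical, chosen only to show how a small, bounded change to the input can flip a classifier’s decision.

```python
# Toy illustration only: a hypothetical linear "detector", not a real deepfake model.

def detector_score(weights, bias, features):
    """Return a raw score; a value above 0 means 'flagged as fake'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def is_flagged(weights, bias, features):
    """True if the toy detector classifies the sample as a deepfake."""
    return detector_score(weights, bias, features) > 0


def perturb(weights, features, eps):
    """Shift each feature by exactly eps in the direction that lowers the score."""
    def sign(v):
        return (v > 0) - (v < 0)
    return [x - eps * sign(w) for w, x in zip(weights, features)]


# Hypothetical detector parameters and a sample it correctly flags.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1
fake_sample = [0.4, 0.2, 0.3]

# Every feature moves by only 0.15, yet the decision changes.
adversarial = perturb(WEIGHTS, fake_sample, eps=0.15)

print(is_flagged(WEIGHTS, BIAS, fake_sample))   # original sample: flagged
print(is_flagged(WEIGHTS, BIAS, adversarial))   # perturbed sample: not flagged
```

Real detectors are deep neural networks rather than three-weight linear models, but the same idea applies to them: the attacker nudges the input in the direction that most reduces the detector’s score.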

Another approach is to look for digital artifacts. In deepfakes, a person’s left and right eyes may differ in color, in the distance from the center of the eye to the edge of the iris, or in the reflections they show. Teeth may be poorly rendered, and the outlines of the nose and face may be unrealistically dark. In modern fakes, however, only a machine can see such artifacts. So the most important thing in dealing with deepfakes is to stay on your guard and pay attention to photos, videos and audio that seem suspicious.

Deepfake as a commodity

The availability of datasets and pre-trained neural network models, falling computational costs, and competition between deepfake creators and detectors are driving the market. Deepfake tools have become a commodity: programs and training materials for creating fakes are freely distributed online. There are simple smartphone apps that don’t require any technical skills at all.

The increased demand for deepfakes has led to the emergence of companies that offer them as a product or service, such as Synthesia and Rephrase.ai. At Ernst & Young, deepfakes made with Synthesia are already being used in client presentations and correspondence. Sonantic, a startup specializing in voice acting for video games, offers a no-code platform for generating voice clones.

The future of AI-powered media

As the market for commercial deepfakes grows, so will the number of fraudulent uses. There are already online marketplaces where requests for fakes are posted, for example for porn videos featuring actresses. Some algorithms can generate a deepfake video from a single image or recreate a human voice from an audio clip several seconds long. Because of this, almost any Internet user can become a victim of scammers.

Deepfakes are being fought not only with technology, but also through legislation. In the United States and China, laws regulating their use are emerging, and in Russia, the fight against them was included in one of the Digital Economy roadmaps in July 2021. Regulation in this area will only increase.