Which is more reliable, SSD or HDD? The holy war continues…

Almost seven years ago (August 13, 2015), Samsung introduced the world's first 16 TB SSD, but since then rapid progress seems to have stalled. Where are the super-cheap SSDs with tens of terabytes, and why don't we see them in every computer around us? Are the myths about SSD unreliability still alive?

❯ Memory for intercontinental ballistic missiles

A bit of history. Programmable non-volatile memory was invented back in the 1950s by Wen Tsing Chow, a scientist and digital computer pioneer at American Bosch Arma, who created PROM (programmable read-only memory) for the on-board computers of Atlas intercontinental ballistic missiles.

The memory was a grid of intersecting wires. Each intersection had a fusible link, which represented a logical “one”. Burning it out with a high voltage turned it into a “zero”. Data could be added to such a memory, but never erased.

This architecture later became one of the first prototypes that led to the invention of flash memory, which Toshiba engineer Fujio Masuoka presented in 1984.
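The write-once behavior of that early memory can be sketched as a toy Python model. The class and method names are hypothetical and chosen only for illustration. Every intact link reads as 1, and programming can only burn links. A new value is therefore effectively ANDed into the stored one, and a 0 can never turn back into a 1.

```python
class FusePROM:
    """Toy model of a one-time programmable (fusible-link) memory.

    Hypothetical illustration: an intact link reads as 1, a burned link as 0,
    and a burned link can never be restored.
    """

    def __init__(self, words: int, width: int = 8) -> None:
        self.mask = (1 << width) - 1
        # A blank memory: every link intact, so every word reads as all ones.
        self.cells = [self.mask] * words

    def program(self, addr: int, value: int) -> None:
        # Zero bits in `value` burn links; one bits leave links untouched.
        # Burning is irreversible, so the result is old AND new.
        self.cells[addr] &= value & self.mask

    def read(self, addr: int) -> int:
        return self.cells[addr]


rom = FusePROM(words=4)
rom.program(0, 0b1010_0101)
rom.program(0, 0b1111_1111)  # an attempt to "erase": nothing changes
print(f"{rom.read(0):08b}")  # 10100101
```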

❯ How is flash memory structured?

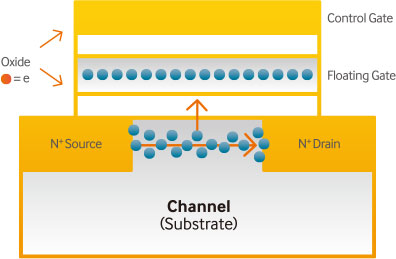

The arrays are built from transistor cells, each with a channel, a control gate, a floating gate, and a very thin dielectric layer. When a positive voltage is applied to the control gate, electrons from the channel enter the floating gate (through Fowler-Nordheim tunneling or channel hot electron (CHE) injection), and the cell becomes programmed. By the usual convention, this state is a logical “zero”. When the polarity is reversed and the voltage is applied on the channel side, the floating gate discharges, and the erased cell reads as a “one”. To read a cell, a positive voltage is applied to the control gate. If the floating gate holds no charge, current flows through the channel. If it is charged, no current flows.
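A toy Python model of a single-bit cell may make the read rule easier to follow. The threshold and read voltages are made-up illustrative values, not figures for any real chip. The code assumes the usual convention: an erased (uncharged) cell reads as 1, and a programmed (charged) cell reads as 0.

```python
from dataclasses import dataclass

# Hypothetical, illustrative voltages (volts); real values vary by chip and process.
VT_ERASED = -1.0      # threshold with no charge on the floating gate
VT_PROGRAMMED = 2.0   # trapped electrons raise the threshold voltage
V_READ = 0.5          # positive voltage applied to the control gate to read


@dataclass
class FloatingGateCell:
    vt: float = VT_ERASED  # current threshold voltage of the transistor

    def program(self) -> None:
        # Electrons enter the floating gate and raise the threshold.
        self.vt = VT_PROGRAMMED

    def erase(self) -> None:
        # Reversed field removes the electrons from the floating gate.
        self.vt = VT_ERASED

    def conducts(self, v_gate: float) -> bool:
        # The channel opens only if the gate voltage exceeds the threshold.
        return v_gate > self.vt

    def read(self) -> int:
        # Current flows -> no charge -> logical 1 (usual convention).
        return 1 if self.conducts(V_READ) else 0


cell = FloatingGateCell()
print(cell.read())  # 1: erased
cell.program()
print(cell.read())  # 0: programmed
```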

The main problem lies in the memory's design: the number of rewrite cycles is limited. Over time, the dielectric layer degrades and accumulates negative charge. The controller then applies higher voltages to such cells, which accelerates their wear. Gradually, the spread of charge levels between cells grows, and this leads to the failure of individual blocks or of the entire device. The constant shrinking of chip geometries only makes the developers' job harder and shortens memory life.
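The number of rewrite cycles is finite, so a drive's lifetime can be roughly estimated with simple arithmetic. The sketch below assumes perfect wear leveling, meaning writes are spread evenly over all cells. It ignores the accelerated wear of aging cells described above. The function name, the write-amplification parameter, and the example numbers are all hypothetical. Write amplification here means the extra internal writes the controller performs for each host write.

```python
def estimated_lifetime_years(capacity_tb: float, pe_cycles: int,
                             host_writes_tb_per_day: float,
                             write_amplification: float = 1.0) -> float:
    """Rough lifetime estimate for an SSD limited by rewrite cycles.

    Assumes ideal wear leveling: every cell can be rewritten `pe_cycles` times,
    so the NAND can absorb about capacity * pe_cycles of writes in total.
    Each TB written by the host costs `write_amplification` TB of NAND writes.
    """
    total_nand_writes_tb = capacity_tb * pe_cycles
    nand_writes_per_year_tb = host_writes_tb_per_day * write_amplification * 365
    return total_nand_writes_tb / nand_writes_per_year_tb


# Hypothetical drive: 1 TB, 1000 rewrite cycles per cell,
# 100 GB of host writes per day, controller doubling the writes internally.
years = estimated_lifetime_years(1.0, 1000, 0.1, write_amplification=2.0)
print(f"{years:.1f} years")
```

Halving the write amplification in this model doubles the estimate. That is why anything that spares the controller unnecessary internal copying extends drive life.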

❯ Memory types

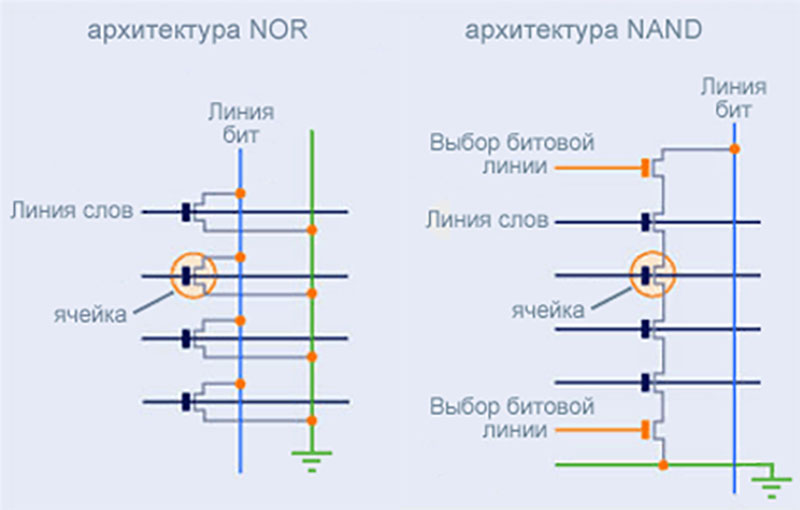

Two flash memory architectures are in use today. In NOR, cells are connected in parallel. In NAND, they are connected in series into strings. NAND is more complex in design and operation. NOR chips are used in various ruggedized embedded systems that need little memory, while NAND is used in SSDs and flash drives.

NAND memory comes in several types:

- Single-level (SLC) – each cell stores one bit of data. This makes SLC faster than the other types, but it stores much less data per cell.

- Multi-level (MLC) – each cell stores two bits. MLC is cheaper than SLC but has a shorter life. It must distinguish four charge levels instead of two, which leaves less margin for the wear described above.

- Triple-level (TLC) – each cell stores three bits. Today this is the most common type of memory.

- Quad-level (QLC) – each cell stores four bits and is cheaper than TLC.

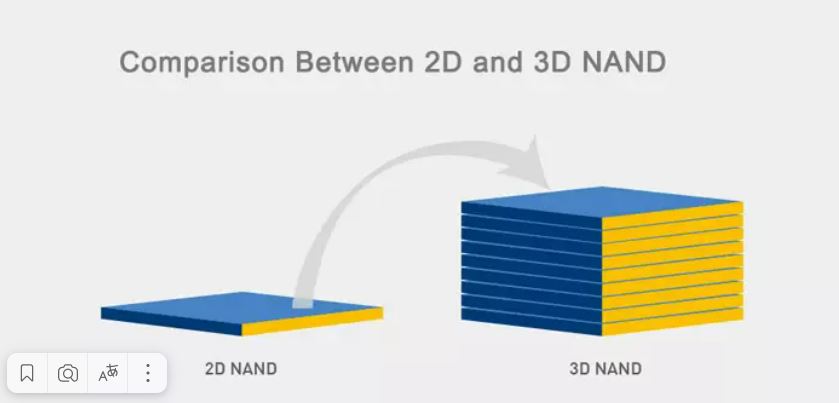

- NAND 3D – in order to increase the memory capacity, manufacturers have developed multilayer chips (now they already include hundreds of layers).
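A little arithmetic illustrates the trade-off between these cell types. A cell that stores n bits must hold 2**n distinguishable charge levels, and telling them apart takes 2**n - 1 reference voltages. The sketch below also makes a simplifying assumption: the levels are spaced evenly inside a fixed voltage window. Under that assumption, the margin between neighboring levels shrinks quickly as bits per cell increase, which helps explain why denser cells tolerate less wear.

```python
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}  # bits per cell


def charge_levels(bits_per_cell: int) -> int:
    # n bits need 2**n distinguishable charge (threshold-voltage) states.
    return 2 ** bits_per_cell


def reference_voltages(bits_per_cell: int) -> int:
    # Separating 2**n states requires 2**n - 1 comparison thresholds.
    return charge_levels(bits_per_cell) - 1


def relative_margin(bits_per_cell: int) -> float:
    # Simplifying assumption: states evenly spread over a fixed voltage window,
    # so the gap between neighbors is window / (levels - 1); SLC's gap is 1.0.
    return 1 / (charge_levels(bits_per_cell) - 1)


for name, bits in CELL_TYPES.items():
    print(f"{name}: {charge_levels(bits):2d} levels, "
          f"{reference_voltages(bits):2d} read references, "
          f"margin {relative_margin(bits):.3f} of SLC's")
```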

To manage the memory, drives have built-in NAND controllers. The operating system tells them which data is no longer needed through the TRIM command, or Deallocate for NVMe drives.

❯ Everyone needs terabytes

As we said, the first 16 TB SSD was introduced seven years ago. The next milestone is 300 TB, and reaching it will require doubling the number of layers in the chips to 400 or more. Pure Storage CTO Alex McMullan has announced plans to build such SSDs by 2026. The company introduced a new type of memory module, the DirectFlash Module (DFM). As the datasheet shows, it is essentially a set of conventional NAND chips driven by a proprietary controller and operating system in Pure's FlashArray and FlashBlade systems.

Better-known competitors have much more modest plans. Toshiba, for example, intends to raise the capacity of its SSDs to 40 TB by the same year, 2026.

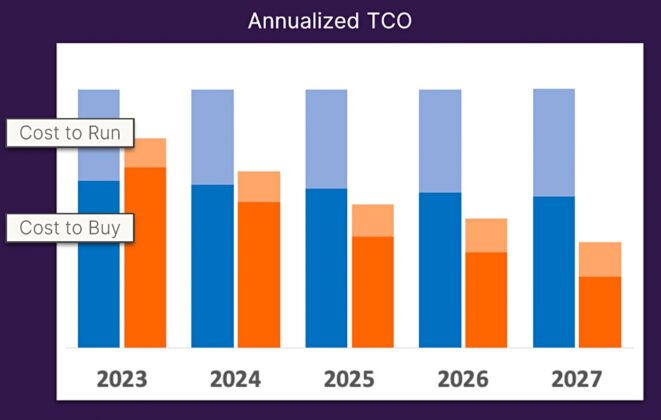

Seagate, meanwhile, expects to use HAMR (heat-assisted magnetic recording) technology to bring 50 TB HDDs to market by 2025 and 100 TB drives by 2030. In other words, Pure Storage's plans, and SSD technology in general, look far more promising than HDDs. According to Pure Storage's CTO, the total cost of ownership (TCO) of SSDs should also keep falling in the coming years, so HDDs will not stand a chance.

So why haven't high-capacity SSDs pushed conventional hard drives out of the market yet?

The answer is simple. As mentioned above, SSD technology has not yet fully outgrown its teething problems. When large storage systems are deployed, the risk of drive failure is taken into account. By that calculation, installing SSDs larger than 16 TB still does not pay off.

That is why solid-state drives are used mostly for “hot” data that needs fast access. Hard drives remain a reliable and more cost-effective option for storing large volumes of cold data that do not need expensive high-speed SSDs.

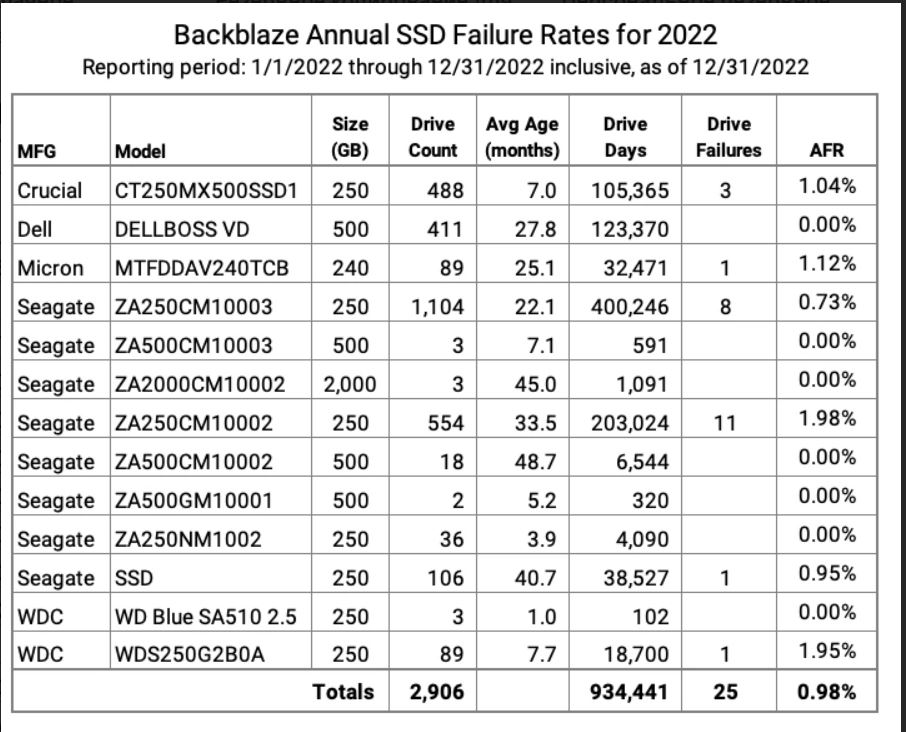

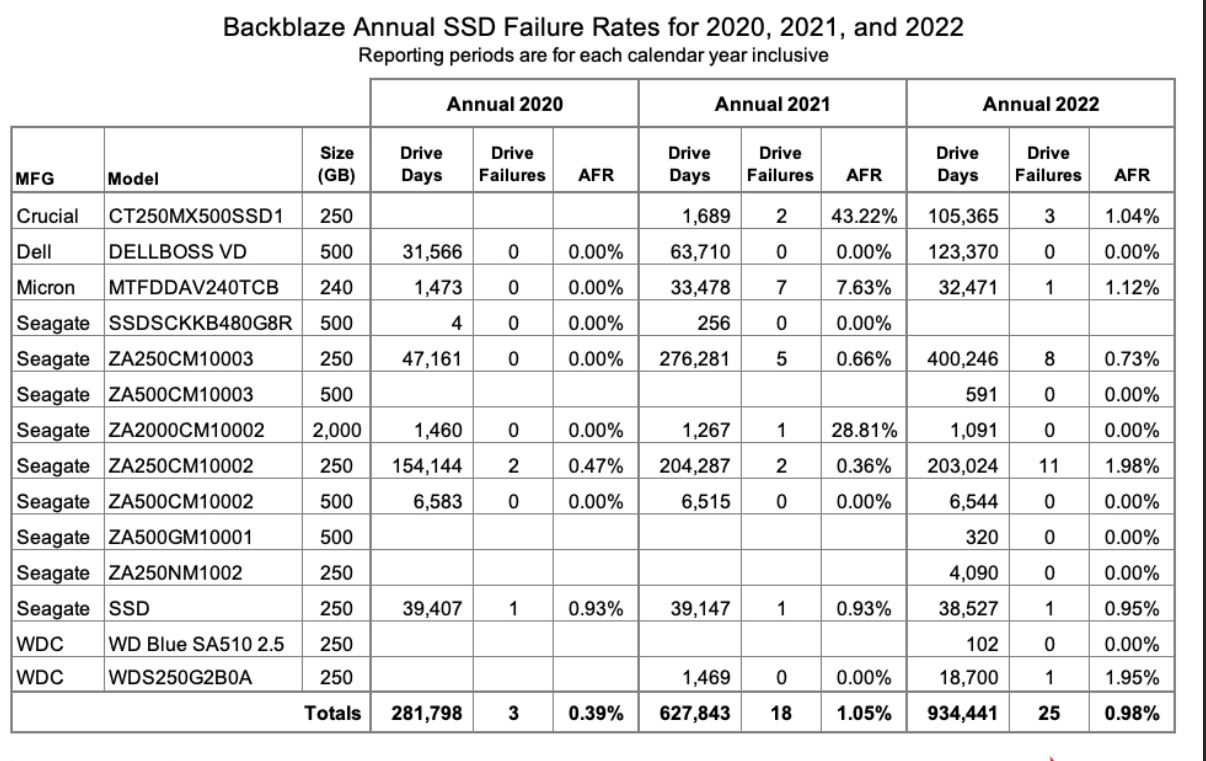

That said, the latest Backblaze statistics indicate that even inexpensive SSDs from the low and mid-range price segments are in fact no less reliable than HDDs. Perhaps it is time to revisit the myths about SSD unreliability?
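Backblaze reports reliability as an annualized failure rate (AFR). It divides failures by the total time the drives were in service rather than by the number of drives, because drives are added and retired during a reporting period. Below is a minimal sketch of that calculation. The fleet numbers in the example are hypothetical.

```python
def annualized_failure_rate(failures: int, drive_days: int) -> float:
    """AFR in percent: failures per drive-year of operation, times 100."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100


# Hypothetical fleet: 1,000 drives running for a full year, 10 failures.
print(f"{annualized_failure_rate(10, 1_000 * 365):.2f}%")  # 1.00%
```

The same formula gives 1% for 2,000 drives observed for only 73 days with 4 failures. Both fleets accumulate 400 or 1,000 drive-years, respectively, at the same failure rate per drive-year.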

HDD manufacturers acknowledge falling demand for their traditional products but attribute it to the unfavorable economic situation, and they continue to release new models. Seagate, for example, introduced a 22 TB HDD in the traditional 3.5-inch form factor with a SATA III interface. It has 10 platters and 20 heads, spins at 7200 rpm, and has a 512 MB cache and a transfer rate of up to 285 MB/s. The drive is designed for systems with an average workload of up to 550 TB per year.
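Some back-of-the-envelope arithmetic puts these figures in perspective. It assumes decimal units, as drive vendors use them (1 TB = 1,000,000 MB). It also treats 285 MB/s as a constant rate. That is a peak figure, and throughput drops on inner tracks, so an actual full write takes longer.

```python
CAPACITY_TB = 22              # Seagate drive capacity from the text
MAX_RATE_MB_S = 285           # peak transfer rate from the text
WORKLOAD_TB_PER_YEAR = 550    # rated annual workload from the text


def hours_to_fill(capacity_tb: float, rate_mb_s: float) -> float:
    # Decimal units: 1 TB = 1_000_000 MB; a constant rate is assumed.
    return capacity_tb * 1_000_000 / rate_mb_s / 3600


def full_drive_passes_per_year(workload_tb: float, capacity_tb: float) -> float:
    # How many times the rated workload equals the drive's full capacity.
    return workload_tb / capacity_tb


def tb_per_day(workload_tb_per_year: float) -> float:
    return workload_tb_per_year / 365


print(f"Filling the drive once: at least {hours_to_fill(CAPACITY_TB, MAX_RATE_MB_S):.1f} h")
print(f"Rated workload: {full_drive_passes_per_year(WORKLOAD_TB_PER_YEAR, CAPACITY_TB):.0f} "
      f"full-capacity passes per year, about {tb_per_day(WORKLOAD_TB_PER_YEAR):.2f} TB per day")
```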

Western Digital is also promoting a new development: a hard drive with two independent actuators, which can deliver up to twice the performance.

❯ Forget the myths, they won’t help

Many articles and manuals have been written about SSD optimization, and thousands of videos have been shot. But this abundance of information thoroughly confuses the average user. Worse, it gives rise to many “myths” that do not help at all and can even do harm. Let's figure out what is and isn't needed to optimize your own SSD. The main thing is not to overdo it.

It makes little sense to disable SysMain. Doing so may reduce overall system performance and can even increase the amount of data written to disk, because SysMain compresses and merges memory pages. Disable it only if your SSD manufacturer recommends it. Keep in mind that programs launched from a hard disk, if the system has one, may start somewhat more slowly, because their data will no longer be preloaded into RAM.

Disabling defragmentation won't help either. Starting with Windows 8, the scheduled drive optimization mainly sends the SSD controller TRIM commands (or Deallocate for NVMe SSDs) instead of defragmenting it. These commands tell the controller which memory blocks can be erased. If you disable this optimization, the SSD's write speed will gradually degrade. There is absolutely no need to turn it off: it does not run often enough to wear out the drive.

To save the SSD's write endurance, some users disable the swap file or move it to an HDD. But why have an SSD at all if you lose performance either way?

The swap file works very well on an SSD, since reads outnumber writes by roughly 40 to 1. Moreover, without a swap file it is harder to diagnose critical errors, because Windows can no longer create a kernel memory dump.

Disabling hibernation (on desktop PCs, hybrid sleep also relies on it) is a poor choice for laptops and other mobile computers, especially when no power outlet is available. It makes sense only on very low-end laptops or tablets, since the hiberfil.sys file takes up 75% of the RAM size. You can reduce it somewhat with the console command:

powercfg -h -size 50

Disabling system protection is also not recommended. You gain little from being unable to create a restore point and roll the system back if something goes wrong. But, as the saying goes, it's your computer and your call.

Disabling disk indexing and search, moving user folders and the AppData and ProgramData folders, reinstalling programs to another drive, and relocating the browser cache and temporary files can, of course, save some writes. But a system installed on an SSD without these tweaks will run much faster than one with some or all of them applied.

To genuinely extend an SSD's life, it makes sense to check whether TRIM is enabled. TRIM is an OS function that marks data that is no longer needed. The controller then does not have to move that data by rewriting it to other blocks, which significantly reduces the number of rewrite cycles.
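A deliberately simplified model of garbage collection illustrates the effect. Before erasing a block, the controller must copy every page it still considers valid to another block. Each such copy is an extra write that wears the cells. Without TRIM, the controller cannot know that the OS has deleted a file, so it keeps copying the dead data. The function name and page counts are hypothetical, and real blocks hold far more pages.

```python
from typing import Set


def pages_copied_by_gc(written: Set[int], deleted_by_os: Set[int], trim: bool) -> int:
    """Pages garbage collection must relocate before it can erase one block.

    written: indexes of pages in the block that hold host data
    deleted_by_os: pages belonging to files the OS has since deleted
    trim: whether the OS reported those deletions (TRIM / Deallocate)
    """
    still_valid = set(written)
    if trim:
        # The controller knows these pages are garbage and can simply drop them.
        still_valid -= deleted_by_os
    # Every page the controller still considers valid costs one extra write.
    return len(still_valid)


# Hypothetical 8-page block; the user has deleted files occupying 5 of its pages.
block = set(range(8))
deleted = {0, 1, 2, 3, 4}
print("without TRIM:", pages_copied_by_gc(block, deleted, trim=False))  # 8
print("with TRIM:   ", pages_copied_by_gc(block, deleted, trim=True))   # 3
```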

To check it, run:

fsutil behavior query disabledeletenotify

A value of DisableDeleteNotify = 0 means TRIM is enabled.
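To check the setting from a script, a small Python helper can interpret the command's output. The exact output format differs between Windows versions, so the sample text below is an assumed example rather than a verbatim capture. The parser relies only on lines of the form “<filesystem> DisableDeleteNotify = <value>”, where 0 means TRIM is enabled. The helper names are hypothetical.

```python
import re
import subprocess
import sys
from typing import Dict

# Matches e.g. "NTFS DisableDeleteNotify = 0" or a bare "DisableDeleteNotify = 0".
_SETTING_RE = re.compile(
    r"^[ \t]*(?:(?P<fs>\w+)[ \t]+)?DisableDeleteNotify[ \t]*=[ \t]*(?P<value>\d+)",
    re.MULTILINE,
)


def parse_trim_status(fsutil_output: str) -> Dict[str, bool]:
    """Map each filesystem to True if TRIM is enabled (DisableDeleteNotify = 0)."""
    status = {}
    for match in _SETTING_RE.finditer(fsutil_output):
        fs = match.group("fs") or "default"
        status[fs] = match.group("value") == "0"
    return status


def query_trim_status() -> Dict[str, bool]:
    """Run the fsutil query on Windows (it may require an elevated prompt)."""
    if sys.platform != "win32":
        raise OSError("fsutil is only available on Windows")
    result = subprocess.run(
        ["fsutil", "behavior", "query", "disabledeletenotify"],
        capture_output=True, text=True, check=True,
    )
    return parse_trim_status(result.stdout)


# Assumed sample output, for illustration only.
sample = """NTFS DisableDeleteNotify = 0
ReFS DisableDeleteNotify = 1
"""
print(parse_trim_status(sample))  # {'NTFS': True, 'ReFS': False}
```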

A more reliable check can be done with a dedicated utility by Vladimir Panteleev.

Enable TRIM using standard tools:

fsutil behavior set disabledeletenotify NTFS 0
fsutil behavior set disabledeletenotify ReFS 0

If TRIM is enabled but doesn't actually work, install the latest SATA driver.

❯ Conclusions

In theory, SSDs are indeed more reliable than hard drives, but only within their rated number of write cycles. In practice, SSD reliability depends on a number of factors, firmware bugs among them. In one known case, drives from the same batch with a certain firmware version failed quickly and almost simultaneously. The cause is either overly intensive writing to the cells or incompatibilities during certain operations, which turn the drive into useless junk. Unfortunately, SSD controller production still has too high a defect rate.

Probably because of such problems, SSDs have not yet completely taken over the market, and HDDs are still spinning their platters in our system units.