What a neural network saw in the first photo of a black hole

On April 10, 2019, scientists and engineers from the Event Horizon Telescope team made a real breakthrough in understanding the processes that take place in outer space: they presented the first image (photo) of a black hole. This further supports Einstein’s general theory of relativity, namely the prediction that “massive objects cause distortion in space-time, which is reflected in the form of gravitational changes”.

I am not a physicist or an astronomer, so I cannot explain how this works, but like millions of people working in various fields, I am fascinated by the cosmos and especially by black holes. The first image of a black hole caused a wave of excitement around the world. I am a deep learning specialist who works mainly with convolutional neural networks, and I became curious about what artificial intelligence algorithms “think” of the black hole image. That is what this article is about.

A passage from the Epoch Times describes black holes as consisting of “a large amount of matter packed in a very small space”, mostly formed from the “remnants of a large star that died during a supernova explosion”. A black hole has a gravitational field so strong that not even light can escape it. The resulting image of the black hole M87 is shown below. The phenomenon is explained well in the article “according to 2 astrophysicists”.

Black Hole – M87 – Event Horizon Telescope

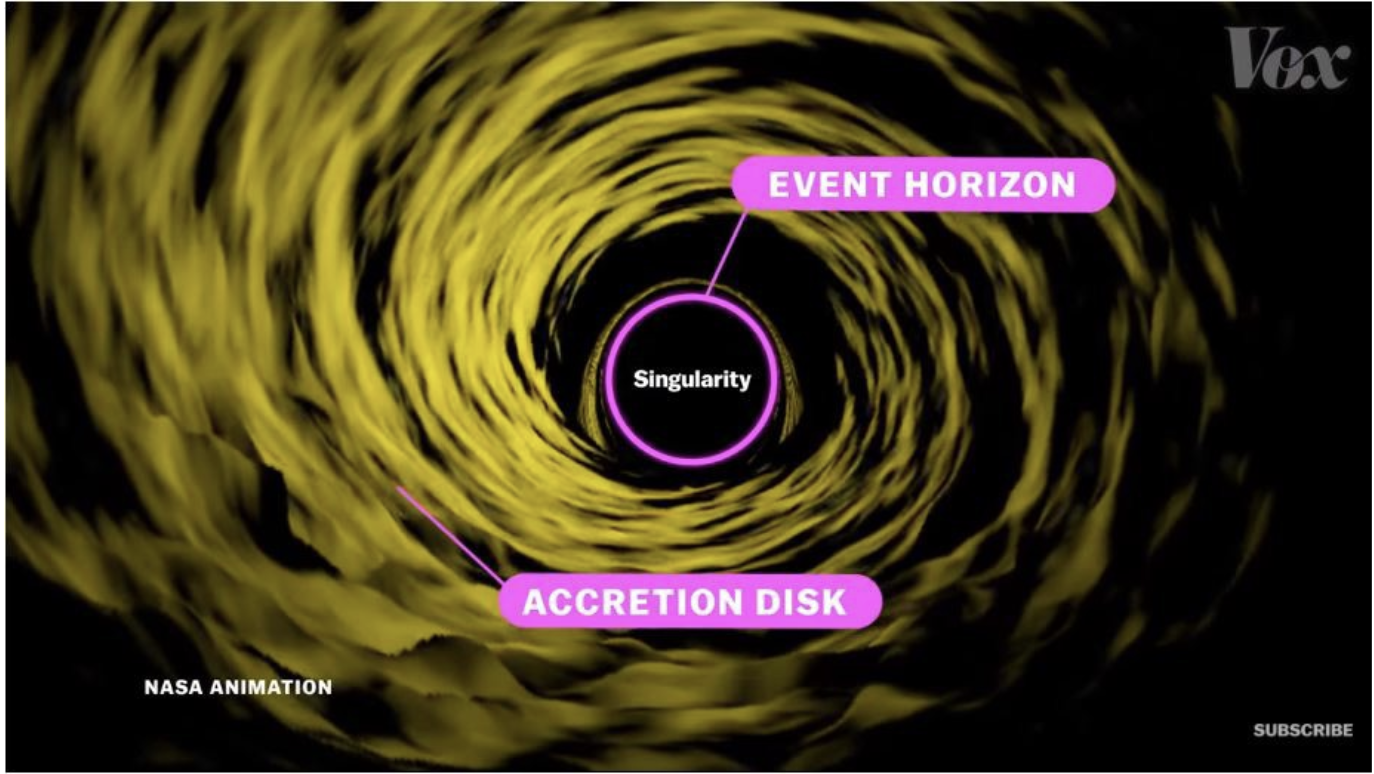

Different regions of the black hole. Screenshot from the Vox video “Why this black hole photo is such a big deal”

Also take a look at this article, which has a nice animation explaining why the image of a black hole looks the way it does.

1. What does a CNN see in the black hole image?

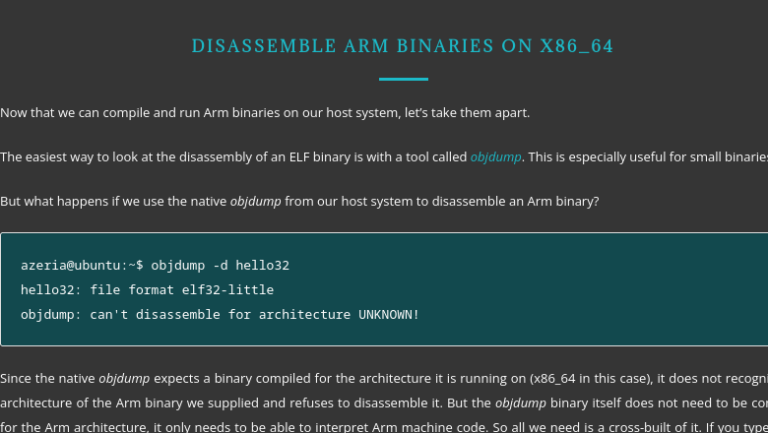

A CNN (convolutional neural network) belongs to a class of deep learning algorithms that are extremely effective at recognizing real-world objects. CNNs are among the best-performing neural networks for image interpretation and recognition. Such networks are trained on millions of pictures to recognize about 1000 different kinds of everyday objects. I decided to show the black hole image to a few pretrained convolutional neural networks and see how they classify it, that is, which everyday object the black hole resembles most. This is not the wisest idea, since the black hole image was generated by combining various signals received from space using special equipment, but I just wanted to know how the picture would be interpreted without any additional information about those signals.

VGG-16 prediction – matchstick

VGG-19 prediction – matchstick

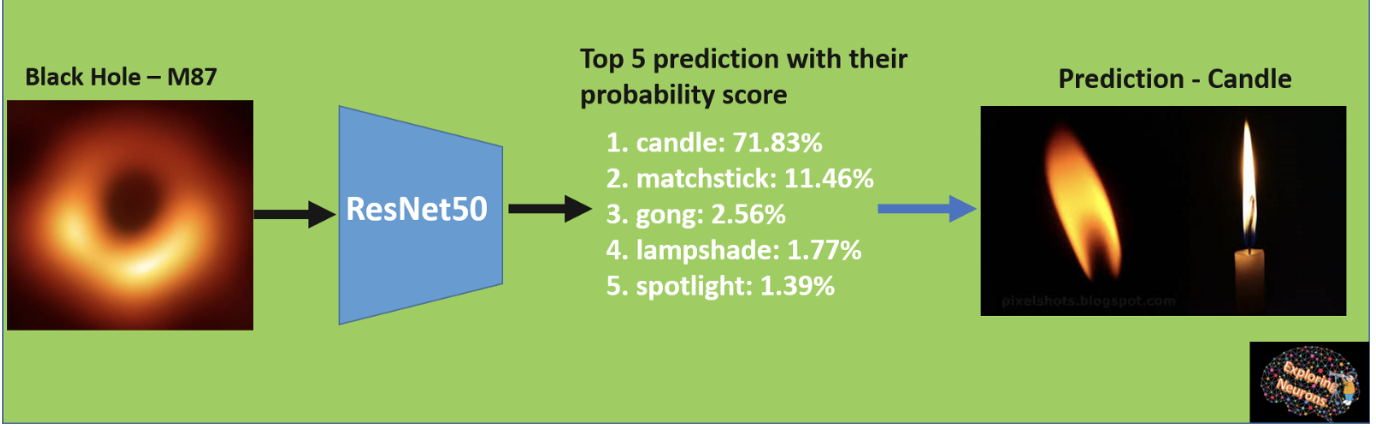

ResNet-50 prediction – candle

As the images above show, the pretrained VGG-16 and VGG-19 see the black hole as a matchstick, while ResNet-50 thinks it is a candle. If we compare the image with these objects, the predictions make some sense: both a burning match and a candle have a dark center surrounded by dense, bright yellow light.
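To make the idea of a "prediction" more concrete: a classifier of this kind outputs one raw score per class, and the reported label is simply the class with the highest probability after a softmax. The sketch below illustrates that last step only. The labels and scores are made up for illustration and are not the actual outputs of VGG-16, VGG-19 or ResNet-50.

```python
import math


def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(labels, scores, k=3):
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical labels and scores, invented for this example only.
labels = ["matchstick", "candle", "volcano", "spotlight"]
scores = [4.1, 3.3, 1.0, 0.2]

for label, prob in top_k(labels, scores):
    print(f"{label}: {prob:.3f}")
```

Looking at the runner-up classes as well as the winner is often informative: a close second place, like the candle in this made-up example, suggests the network finds both objects plausible.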

2. What features did the CNN extract from the black hole image?

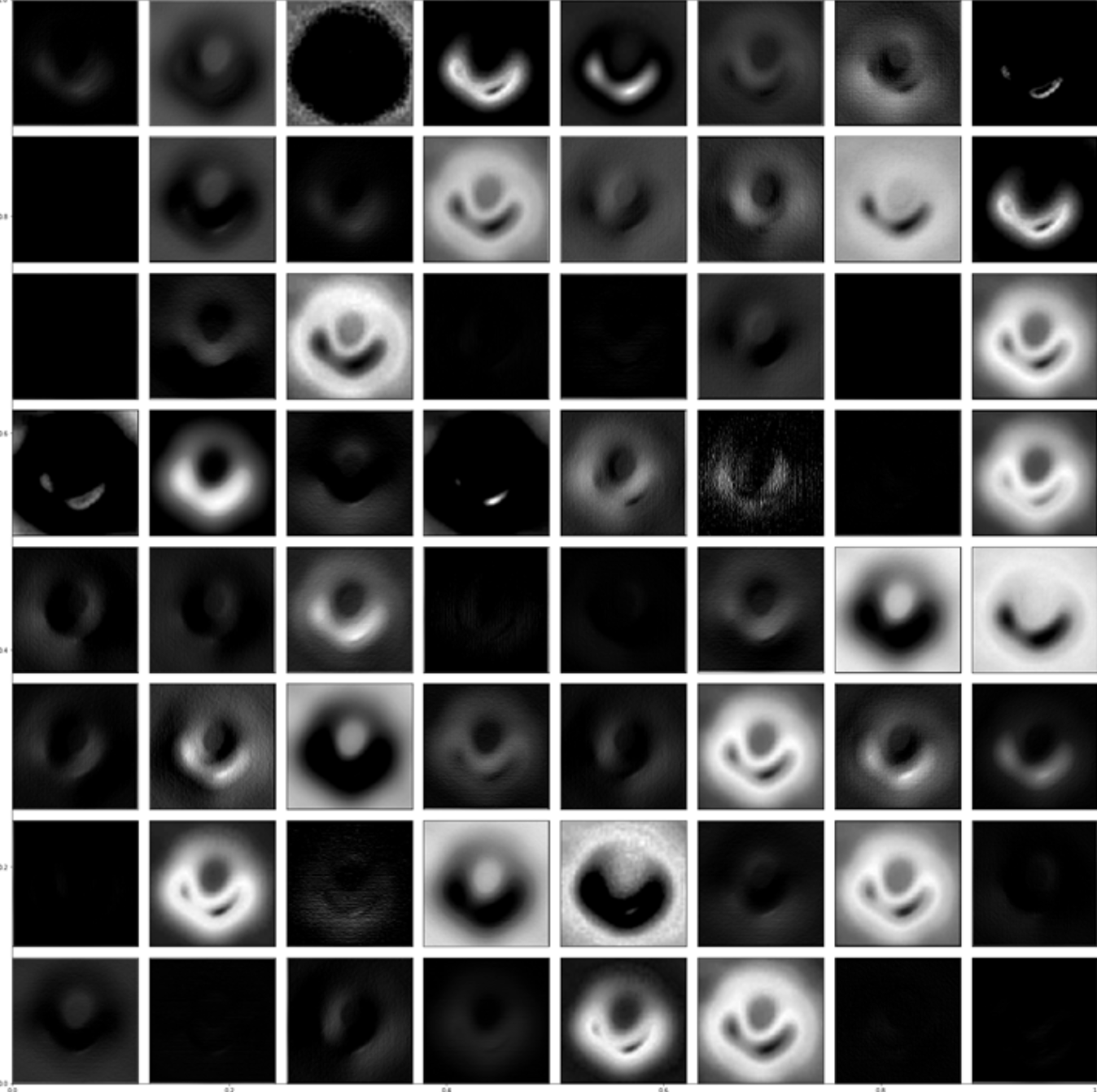

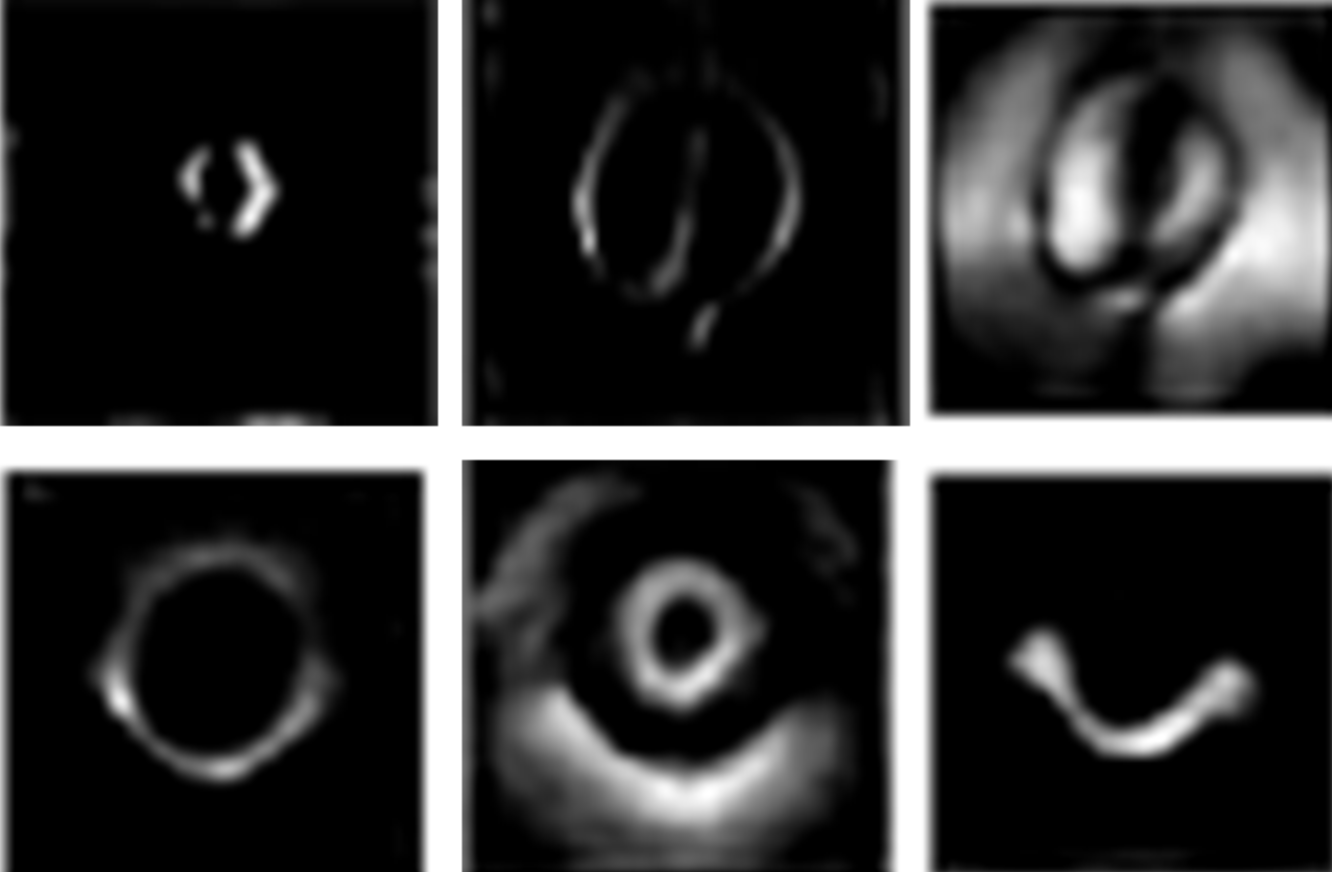

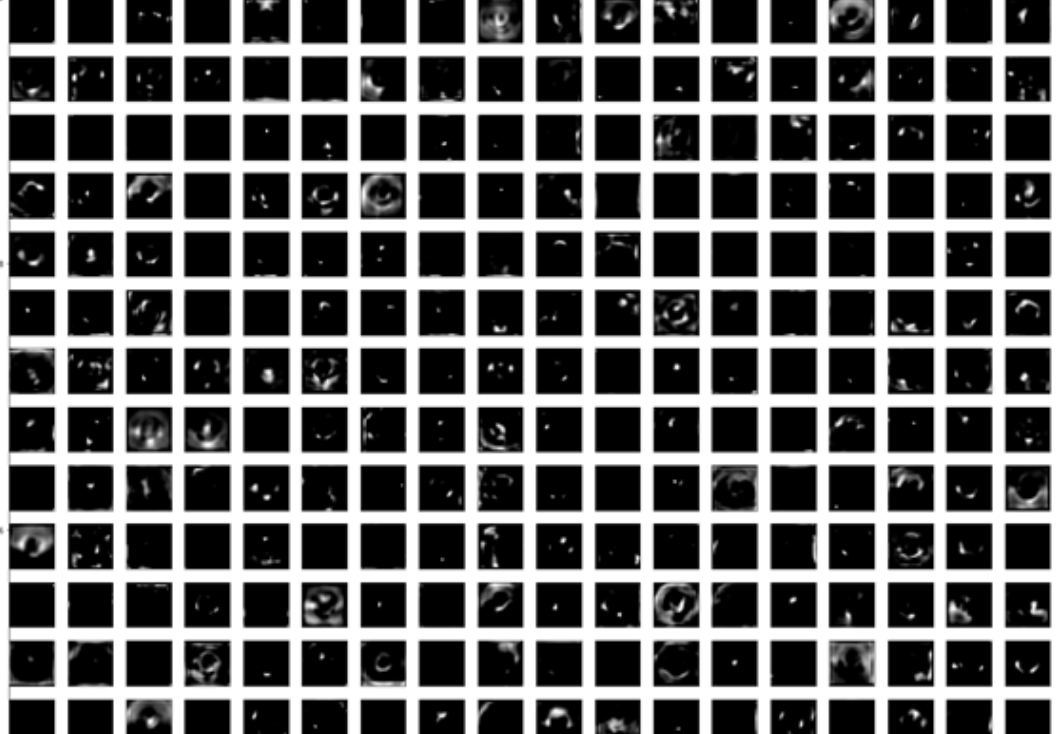

I did one more thing: I visualized what the intermediate layers of VGG-16 generate. Deep learning networks are called deep because they consist of many layers, each of which processes the representation and features of its input. Let's see what the different layers of the network pick up from the input image. The result was pretty beautiful.
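For readers who have not seen a feature map before, here is a minimal sketch of how a single one is produced: a small filter slides over the image, and the response at each position is passed through a ReLU. It uses a synthetic ring image and a hand-written edge filter (a Sobel kernel), both chosen for illustration. The real VGG-16 filters are learned during training and operate on three color channels.

```python
def make_ring(size=15, inner=3.0, outer=6.0):
    """Synthetic grayscale image: a bright ring around a dark center."""
    c = (size - 1) / 2
    image = []
    for y in range(size):
        row = []
        for x in range(size):
            r = ((x - c) ** 2 + (y - c) ** 2) ** 0.5
            row.append(1.0 if inner <= r <= outer else 0.0)
        image.append(row)
    return image


def conv2d_relu(image, kernel):
    """Valid 2-D cross-correlation (the 'convolution' of deep learning) plus ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(max(acc, 0.0))  # ReLU keeps only positive responses
        out.append(row)
    return out


# Hand-written filter that responds to dark-to-bright transitions, left to right.
vertical_edge = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

image = make_ring()
feature_map = conv2d_relu(image, vertical_edge)

# Crude text rendering: '#' where the filter fired, '.' where it stayed silent.
for row in feature_map:
    print("".join("#" if v > 0 else "." for v in row))
```

A convolutional layer applies many such filters in parallel, which is why the first layer of VGG-16 yields 64 maps rather than one.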

64 feature maps of the first convolutional layer VGG-16

If you take a closer look, you will see that the small bright region is a strong feature and that most filters pick it up. Some interesting filter outputs are shown below, and they already look like some kind of celestial object.

4 of 64 feature maps of the first convolutional layer

64 feature maps of the second convolutional layer VGG-16

Let's zoom in on some interesting feature maps of the second layer of the network.

6 of 64 feature maps of the second convolutional layer

Now we will go even deeper and look at the third convolutional layer.

128 feature maps of the third convolutional layer VGG-16

Zooming in, we find a familiar pattern.

8 of the third-layer feature maps shown above

Moving deeper, we get something like this.

6 of 128 feature maps of the fourth convolutional layer of VGG-16

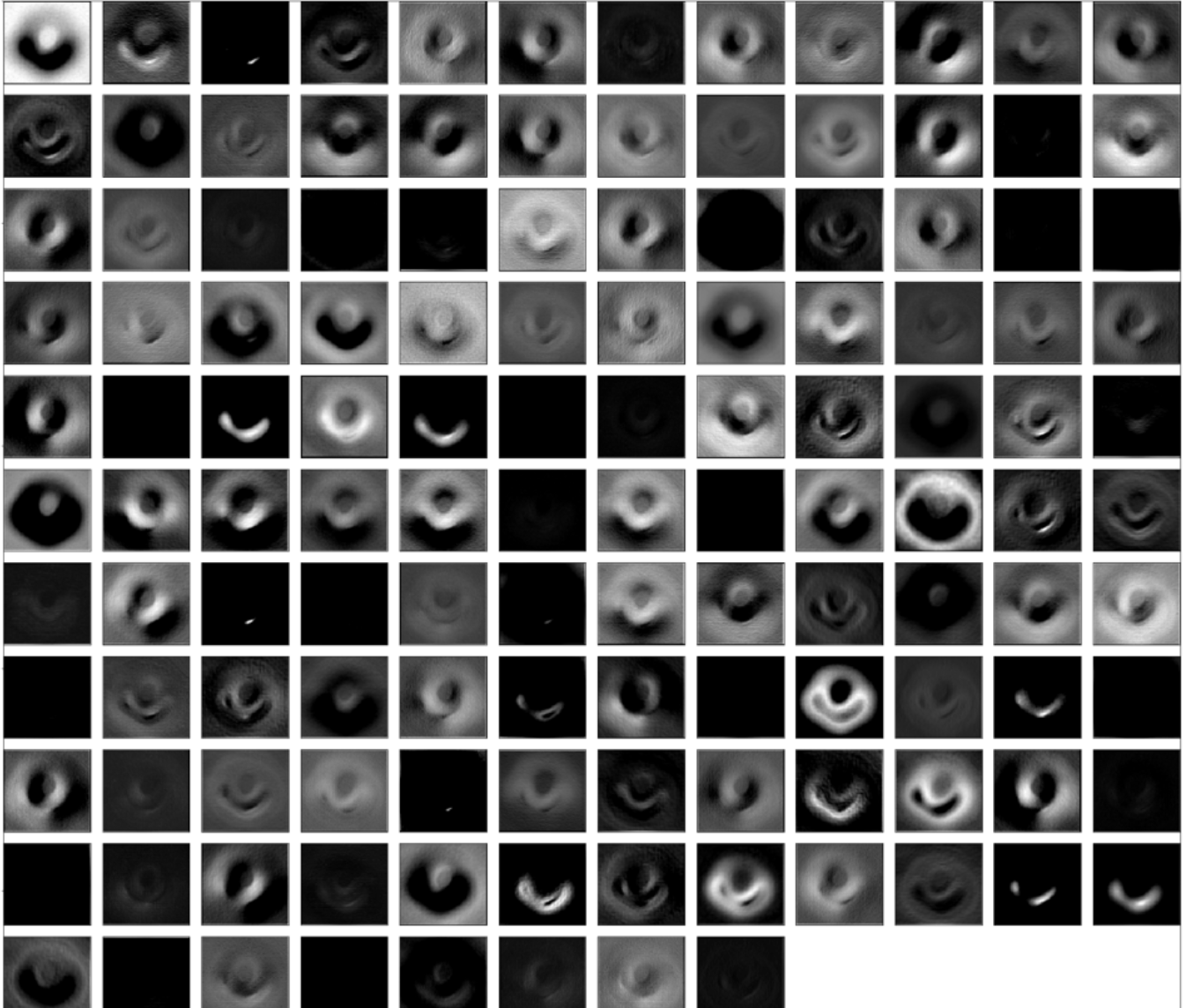

Going deeper, we get more abstract, higher-level information. When we visualize the 7th, 8th and 10th convolutional layers, we see only high-level information.

Feature maps of the 7th convolutional layer

As we can see, many of the feature maps are now dark: they respond only to the specific high-level features needed to recognize a particular class. This becomes even more pronounced in the deeper layers. Now let's zoom in and take a look at some of the filters.

6 of the feature maps
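The observation that many deep feature maps are dark can be put into numbers. One simple way, sketched below, is to count the share of maps whose mean activation falls below a small threshold. The tiny 2x2 maps and the threshold are hypothetical stand-ins chosen for illustration. They are not activations taken from VGG-16.

```python
def mean_activation(feature_map):
    """Average value of a single 2-D feature map."""
    values = [v for row in feature_map for v in row]
    return sum(values) / len(values)


def dark_fraction(feature_maps, threshold=1e-3):
    """Share of feature maps whose mean activation is below the threshold."""
    dark = sum(1 for fm in feature_maps if mean_activation(fm) < threshold)
    return dark / len(feature_maps)


# Hypothetical post-ReLU maps standing in for the channels of one deep layer.
layer = [
    [[0.0, 0.0], [0.0, 0.0]],  # silent filter
    [[0.0, 0.9], [0.4, 0.0]],  # filter that found its feature
    [[0.0, 0.0], [0.0, 0.0]],  # silent filter
    [[0.0, 0.0], [0.0, 0.0]],  # silent filter
]

print(f"dark maps: {dark_fraction(layer):.0%}")
```

Computing this fraction layer by layer would be one way to check whether the share of silent filters really grows with depth for a given input image.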

Now consider the 512 feature maps of the 10th convolutional layer.

Feature maps of the 10th convolutional layer

Now you can see that in most of the resulting feature maps only one region of the picture is picked up as a feature. These are the high-level features as seen by the neurons. Let's take a closer look at some of the feature maps shown above.

Some of the feature maps of the 10th convolutional layer, enlarged

Now that we have seen how the CNN tries to isolate the black hole in the image, let's feed this image to other popular neural network algorithms, such as Neural Style Transfer and DeepDream.

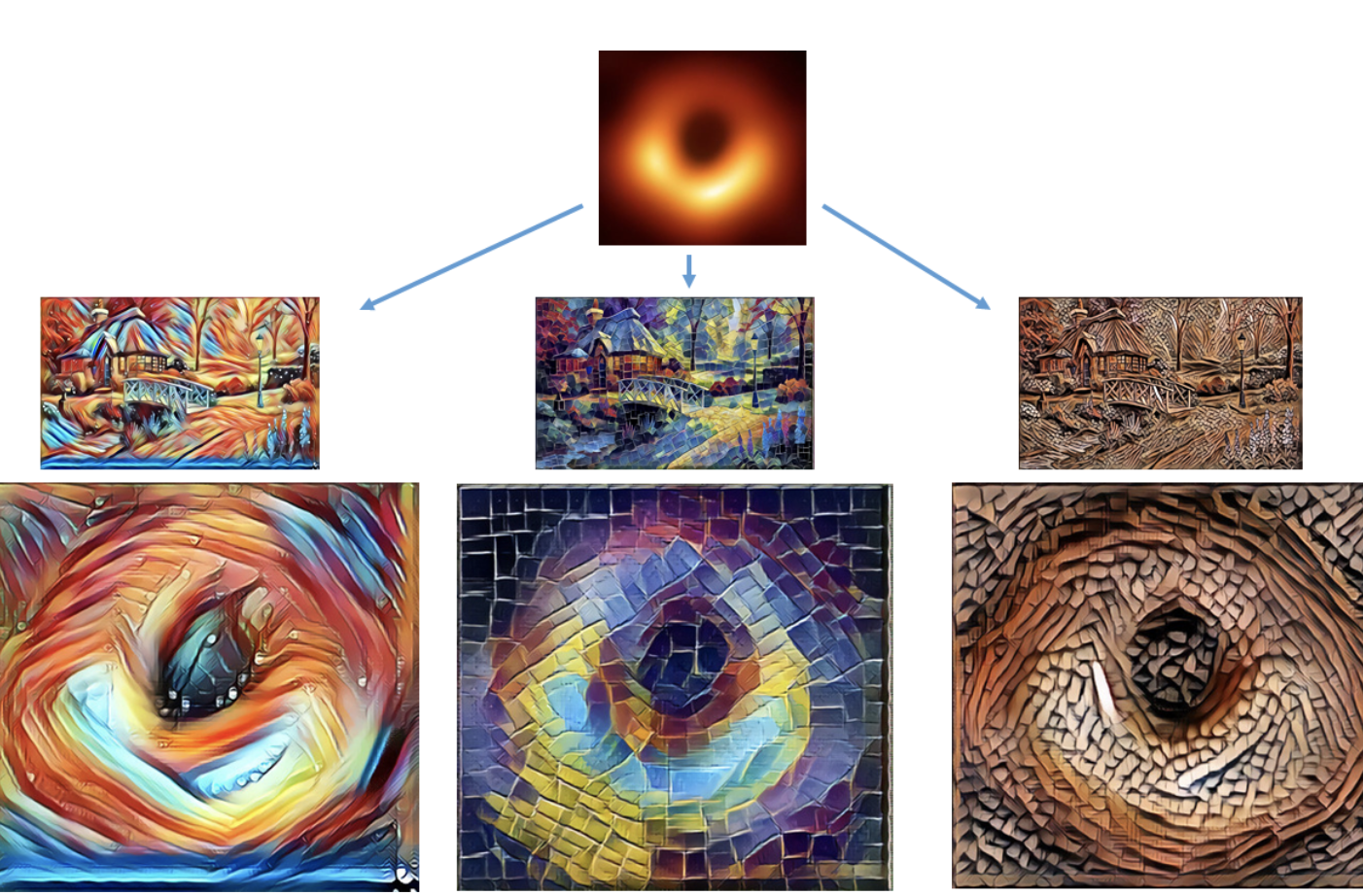

3. Trying Neural Style Transfer and DeepDream on the black hole image

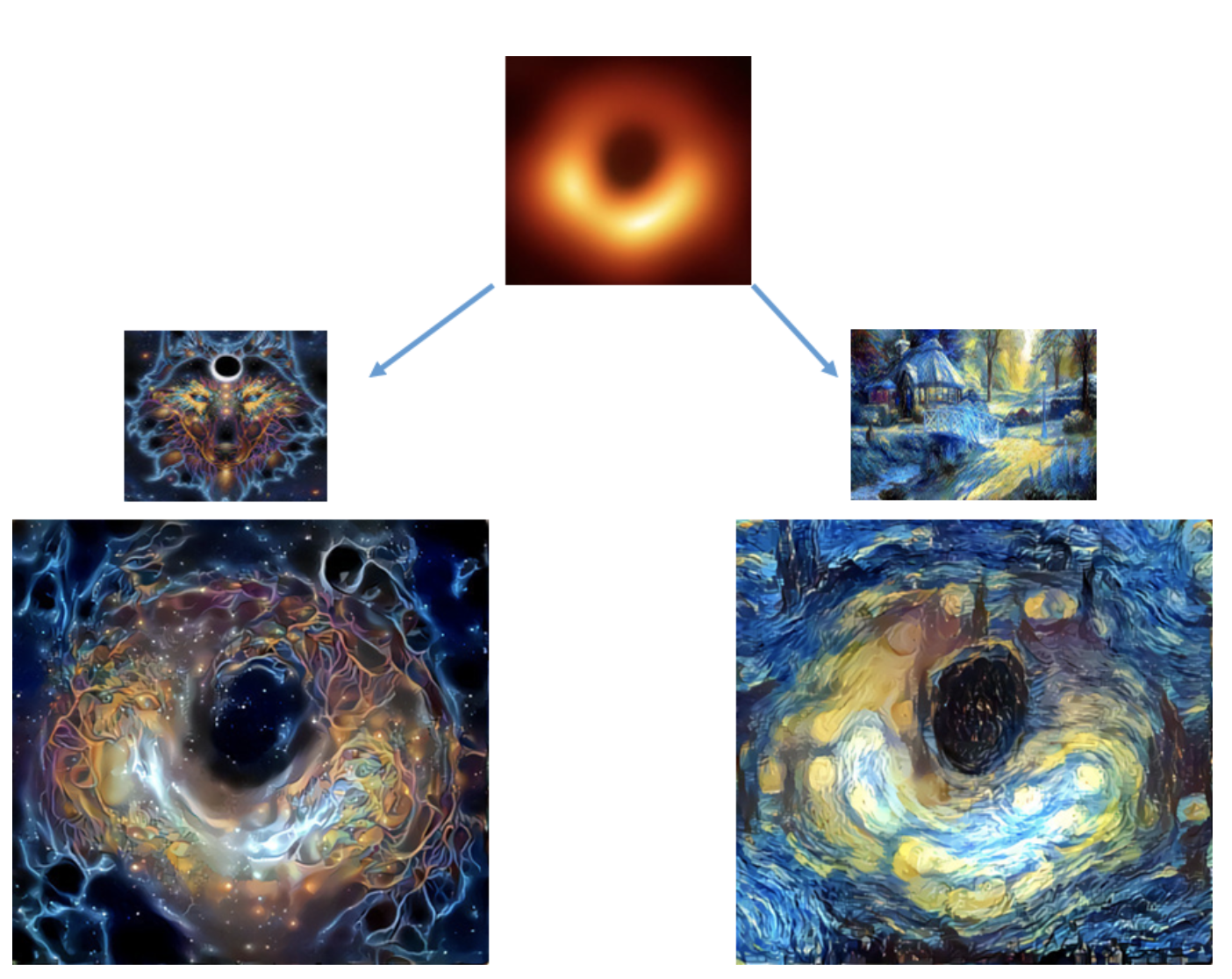

Neural style transfer is a neural network technique that applies the “style” of one image to another source image and, as a result, creates an artistic image. If that is still unclear, the images below will explain the concept. I used the site deepdreamgenerator.com to create various artistic images from the original black hole image. The pictures turned out quite interesting.

Style transfer. Images generated with deepdreamgenerator.com
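How deepdreamgenerator.com works internally is not covered here. In the widely used formulation of neural style transfer, though, the "style" of an image is summarized by the Gram matrix of a layer's feature maps, which records how strongly each pair of filters fires together while discarding where in the image they fire. The sketch below shows that idea on tiny hypothetical feature maps. The names `gram_matrix` and `style_distance` are my own and do not come from any library.

```python
def gram_matrix(feature_maps):
    """G[i][j] = sum over all positions of map_i * map_j (filter co-activation)."""
    flat = [[v for row in fm for v in row] for fm in feature_maps]
    n = len(flat)
    return [
        [sum(a * b for a, b in zip(flat[i], flat[j])) for j in range(n)]
        for i in range(n)
    ]


def style_distance(maps_a, maps_b):
    """Mean squared difference between the Gram matrices of two sets of maps."""
    ga, gb = gram_matrix(maps_a), gram_matrix(maps_b)
    n = len(ga)
    return sum((ga[i][j] - gb[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)


# Hypothetical 2x2 feature maps from two filters.
maps = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 2.0], [2.0, 0.0]],
]

# Same activations mirrored left to right: the layout changes, the "style" does not.
mirrored = [[row[::-1] for row in fm] for fm in maps]

# Here both filters fire in the same places, so the co-activation pattern differs.
correlated = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]

print(gram_matrix(maps))
print(style_distance(maps, mirrored))
print(style_distance(maps, correlated))
```

The mirrored maps have the same Gram matrix as the originals, which is why a style loss of this kind can carry textures and colors over to a new image without copying the layout of the style image.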

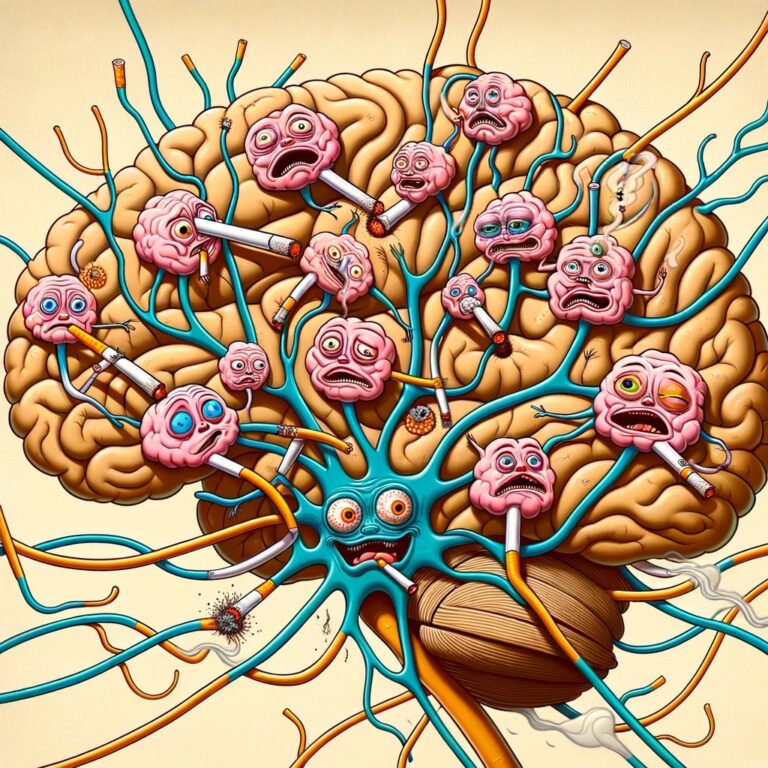

DeepDream is a computer vision program created by Google engineer Alexander Mordvintsev. It uses a convolutional neural network to find and enhance patterns in images through algorithmic pareidolia, producing a hallucinogenic appearance in deliberately over-processed images.

Deep Dream. Images generated with deepdreamgenerator.com
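The core trick behind DeepDream is gradient ascent on the input image: instead of adjusting the network to fit the picture, the picture is nudged step by step so that a chosen filter responds more strongly. The toy sketch below shows this with a single hypothetical linear filter on a four-pixel "image". It is an illustration of the principle only, not the DeepDream code or the method used by deepdreamgenerator.com.

```python
def response(filter_weights, pixels):
    """How strongly the filter fires on the image (a plain dot product)."""
    return sum(w * p for w, p in zip(filter_weights, pixels))


def dream_step(filter_weights, pixels, step=0.1):
    """One gradient ascent step on 0.5 * response**2 with respect to the pixels."""
    r = response(filter_weights, pixels)
    grad = [r * w for w in filter_weights]  # derivative of 0.5 * r**2 per pixel
    norm = max(abs(g) for g in grad) or 1.0  # normalize; avoid division by zero
    return [p + step * g / norm for p, g in zip(pixels, grad)]


# Hypothetical filter that likes alternating bright and dark pixels.
pattern = [1.0, -1.0, 1.0, -1.0]
# Almost flat image with only a faint hint of that pattern.
pixels = [0.55, 0.45, 0.5, 0.5]

before = response(pattern, pixels)
for _ in range(10):
    pixels = dream_step(pattern, pixels)
after = response(pattern, pixels)

print(f"response before: {before:.2f}, after: {after:.2f}")
print([round(p, 2) for p in pixels])
```

The faint hint of the pattern is amplified until it dominates the image, which is the pareidolia effect in miniature. A real implementation would also keep pixel values within a valid range, which this sketch skips.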

These DeepDream videos show just how hallucinatory the images it creates can be.

That's all! I was amazed when I saw the first photo of the black hole and immediately wrote this little article. The information in it may not be especially useful, but the images created while writing it and shown above are well worth it. Enjoy the photos!

Let us know in the comments what you thought of the material. We look forward to seeing everyone at the open house for the course "Neural networks in Python".