What is HDR10+? A breakdown

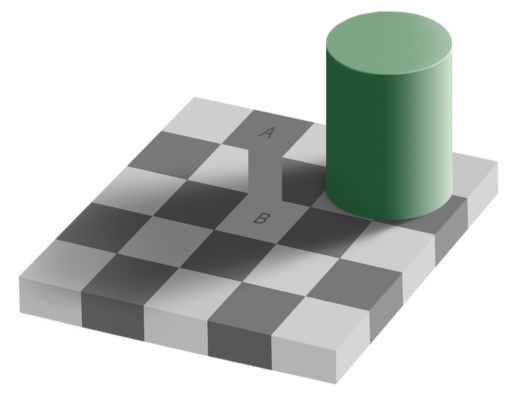

Let’s take a look at the picture. Nothing out of the ordinary. But what if I told you that cells A and B are exactly the same color?

In fact, we cannot always distinguish light from dark. You don’t have to go far for examples: remember the blue-black / white-gold dress or the sneakers that appeared a little later?

All modern screens take advantage of this quirk of human vision. Instead of real light and shadow, we are shown an imitation. We are so used to it that we can’t even imagine it could be otherwise. But it can, thanks to HDR, a technology that is much more complex and more interesting than you might think. So today we will look at what real HDR video is, go over the standards, and compare HDR10 and HDR10+ on the most advanced QLED TV!

The first thing to know about HDR is that it is not just a way of storing video. To see HDR content, we need two things: the content itself and a screen that properly supports it. That is why we will be watching on a Samsung QLED TV today.

6 stops of SDR

Our eyes face extreme changes in brightness every day. So, over the course of evolution, human vision has learned to see a fairly wide dynamic range (DR), that is, to pick up the difference between very different levels of brightness. Photographers and filmmakers know that DR is measured in exposure stops, or f-stops.

So how many stops does the human eye see? The answer is: it depends.

If we blindfold you, take you to an unfamiliar place and abruptly remove the blindfold, in that first second you will see 14 stops of exposure. That is quite a lot. The camera I shoot these videos with, for example, sees only 12 stops. And even 14 stops is nothing compared to what human vision can really do, because it can adapt.

After a couple of seconds, when your eyes get used to the brightness and look around the space, your vision will adjust and you will see an amazing play of light and shadow spanning 30 stops of exposure!

Wow! Beautiful! But when we watch video on a TV or a smartphone screen, we have to be content with only 6 stops of exposure, because standard dynamic range (SDR) video does not support any more than that.
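To put those stop counts in perspective, here is a small sketch. It assumes the usual photographic definition, where each stop is one doubling of light, so N stops correspond to a contrast ratio of 2 to the power of N. The function name `contrast_ratio` is made up for this illustration.

```python
def contrast_ratio(stops: float) -> float:
    """Return the brightest-to-darkest ratio covered by a number of stops.

    Assumption: one stop is one doubling of light.
    """
    return 2.0 ** stops


# Stop counts mentioned in the text.
examples = {
    "SDR video": 6,
    "camera": 12,
    "eye, first second": 14,
    "eye, after adapting": 30,
}

for name, stops in examples.items():
    print(f"{name}: {stops} stops is about {contrast_ratio(stops):,.0f}:1")
```

Under that assumption, 6 stops is a ratio of only 64:1, while 30 stops is over a billion to one.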

Brightness

Why so few? The answer is historical, and it comes down to two limitations.

Modern SDR video standards date back to the mid-20th century and the arrival of color television. Back then there were only CRT TVs, and they were very dim. The maximum brightness was 100 nits, or candelas per square meter. By the way, candela is Latin for candle, so 100 candelas per square meter roughly means the brightness of 100 candles spread over an area of 1 square meter. If you don’t like measuring brightness in candles, you can simply say nits instead of candelas per square meter. For comparison, our Samsung Q950T TV reaches 4000 nits.

This brightness limit was baked into the SDR standard. As a result, modern TVs showing SDR content essentially ignore the amazing adaptability of human vision and hand us a dull, flat picture, even though technology has advanced a great deal since then.

One of the features of QLED technology is its high peak brightness. These are the brightest TVs on the market, even brighter than OLED.

Today’s QLED TVs are capable of delivering a whopping 4000 nits of brightness, 40 times the SDR limit. Awesome, show whatever you want. But still 99% of the content we see is SDR, so when you watch YouTube on your stunning AMOLED display, you are actually watching an emulation of a CRT from a living room at the height of the Cold War. So it goes.

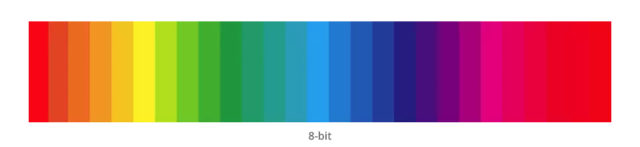

Color depth

The second limitation also comes from ancient times – the 1990s.

That was when a revolutionary standard appeared: high-definition digital television, or HDTV. Part of that standard was a color depth of 8 bits. This means that each of the base colors (red, green and blue) can take only 256 values, because 2 to the 8th power is 256.

Across all three channels, that gives a total of 16,777,216 shades, or about 16.8 million.

It seems like a lot. But a person can see far more colors. All those ugly stepped transitions you often see in videos and photos, and of course on YouTube, thanks to its codec, are exactly these 8-bit limitations showing through.

But the most interesting part is that these two restrictions, 6 stops of exposure and 8 bits per channel, keep SDR video from properly simulating the main feature of human vision: its nonlinearity. So let’s talk about how we perceive brightness.
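The arithmetic behind these numbers is easy to check. The sketch below uses two illustrative helper names, `levels_per_channel` and `total_shades`, which are not part of any standard.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can take at a given bit depth."""
    return 2 ** bits


def total_shades(bits: int, channels: int = 3) -> int:
    """Number of distinct colors when every channel varies independently."""
    return levels_per_channel(bits) ** channels


print(levels_per_channel(8))      # 256
print(f"{total_shades(8):,}")     # 16,777,216
```

The same two functions work for any other bit depth, so they can be reused whenever a different color depth comes up.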

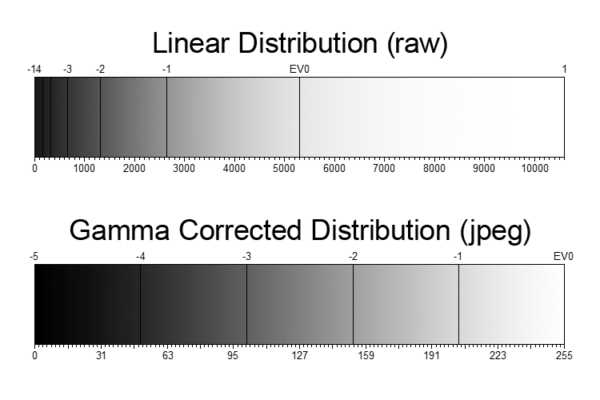

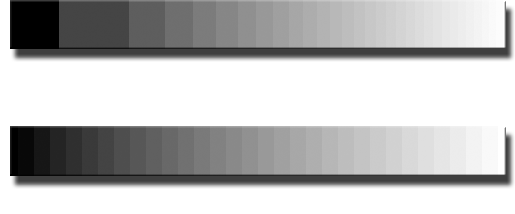

Perception of brightness

Evolution made it so that, for a human, what is hiding in the shade has always mattered more than what is out in the bright sun. As a result, the human eye is much better at distinguishing dark shades. That is why any digital image is encoded nonlinearly: to give as many bits of information as possible to the dark areas of the image.

Otherwise, to the human eye, the steps between brightness levels would be too large in the shadows and completely invisible in the highlights, especially when you only have the 256 values that SDR video has at its disposal.

But unlike SDR, HDR video is encoded with a color depth of at least 10 bits. That is 1024 values per channel, for a total of over a billion shades (1024 x 1024 x 1024 = 1,073,741,824).

And the peak image brightness in HDR video starts at 1000 nits and can go up to 10,000 nits. That is 100 times brighter than SDR!

That much headroom makes it possible to encode as much information as possible in the dark areas of the image and to show a picture that looks far more natural to the human eye.
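A rough way to see what nonlinear encoding buys you is to count how many of the 256 code values end up describing the darkest tenth of the brightness range. The sketch below assumes a plain power-law curve with a gamma of 2.2, which is a common approximation for SDR displays and not the exact curve of any particular standard. The function name `codes_below` is made up for this example.

```python
def codes_below(threshold: float, gamma: float, bits: int = 8) -> int:
    """Count code values whose decoded linear brightness is at or below threshold.

    Assumption: the display decodes a code as (code / max_code) ** gamma,
    where gamma = 1.0 means plain linear coding.
    """
    max_code = 2 ** bits - 1
    return sum(
        1
        for code in range(max_code + 1)
        if (code / max_code) ** gamma <= threshold
    )


linear_codes = codes_below(0.10, gamma=1.0)  # linear coding
gamma_codes = codes_below(0.10, gamma=2.2)   # assumed SDR-like gamma

print("codes for the darkest 10%, linear:", linear_codes)
print("codes for the darkest 10%, gamma 2.2:", gamma_codes)
```

With linear coding only about a tenth of the codes describe the shadows. With the assumed gamma curve it is roughly a third, which is where the eye needs the detail most.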
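The text does not name the curve, but HDR10 signals use the PQ transfer function from SMPTE ST 2084, which maps a signal value to an absolute brightness of up to 10,000 nits. The sketch below implements that curve with the constants from the specification. The function names `pq_to_nits` and `nits_to_pq` are my own, and the code ignores practical details such as limited-range video levels.

```python
# Constants of the PQ curve as defined in SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10_000.0


def pq_to_nits(signal: float) -> float:
    """Convert a normalized PQ signal (0.0 to 1.0) to brightness in nits."""
    p = signal ** (1.0 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)


def nits_to_pq(nits: float) -> float:
    """Convert brightness in nits (0 to 10,000) to a normalized PQ signal."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


# How much of the signal range is spent below the old 100-nit SDR ceiling?
share = nits_to_pq(100.0)
print(f"signal level for 100 nits: {share:.3f}")
print(f"10-bit codes at or below 100 nits: about {round(share * 1023)}")
```

Roughly half of the signal range covers everything up to 100 nits, even though that is only 1% of the 10,000-nit maximum. That is the "more information in the dark areas" idea in numbers.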

Metadata

So, we have found out that the capabilities of HDR are far superior to SDR, and that HDR shows a much richer, higher-contrast picture. But! Who cares whether your video is SDR or HDR if the image quality still depends on the screen you are looking at? On some screens SDR looks so rich and contrasty that HDR on a weaker screen can only dream of it. That’s how it is!

All displays are different. They are calibrated in different ways, they have different brightness levels and other parameters.

But HDR video, unlike SDR, not only puts the image on the screen, it can also tell the TV exactly how to show it! This is done using so-called metadata.

It comes in two types.

Static metadata contains settings for the brightness and contrast of the entire video. For example, someone who has made an atmospheric, dark movie can specify that the maximum brightness in the movie is only 400 nits. A TV with a brightness of 4000 nits will then not crank up the brightness and turn your horror movie into a children’s party. Or the other way around: a movie mastered at 4000 nits will look its best not only on a TV that can reach that brightness but also on a dimmer screen, because the picture will be correctly compressed to fit the TV’s capabilities.
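Here is a minimal sketch of that idea. It is not how any real TV does it: manufacturers use their own tone-mapping curves, and the function `tone_map_static`, its parameters and the simple "knee" approach are all assumptions made up for illustration. The only input taken from the metadata is a single peak brightness for the whole movie.

```python
def tone_map_static(
    pixel_nits: float,
    content_peak_nits: float,
    display_peak_nits: float,
    knee: float = 0.75,
) -> float:
    """Fit one pixel's brightness to the display using a single movie-wide peak.

    Hypothetical scheme: if the movie never exceeds what the display can do,
    show it untouched. Otherwise keep everything below the knee as is and
    squeeze the rest linearly into the remaining headroom.
    """
    if content_peak_nits <= display_peak_nits:
        return pixel_nits  # the display can show the whole movie as mastered

    knee_nits = knee * display_peak_nits
    if pixel_nits <= knee_nits:
        return pixel_nits  # shadows and midtones stay untouched

    # Map [knee_nits, content_peak_nits] onto [knee_nits, display_peak_nits].
    t = (pixel_nits - knee_nits) / (content_peak_nits - knee_nits)
    return knee_nits + t * (display_peak_nits - knee_nits)


# A dark movie (peak 400 nits) on a 4000-nit TV: nothing is brightened.
print(tone_map_static(300.0, 400.0, 4000.0))

# A 4000-nit movie on a 1000-nit TV: highlights are compressed to fit.
print(tone_map_static(4000.0, 4000.0, 1000.0))
```

The knee keeps most of the picture unchanged and sacrifices only the brightest highlights, which is one common way to think about compressing a picture to a dimmer screen.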

But there are some films that are generally dark, but they have scenes with bright flashes of light. Or, for example, a film about space in which the stars shine brightly. In such cases, it is necessary to adjust the brightness of each scene separately.

This is what dynamic metadata is for. It contains settings for individual scenes, or even for every frame of the movie. This metadata also records which display the content was mastered on. This means that your display can take these settings and adapt the image so that you get a picture as close as possible to the author’s intent.
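To see why per-scene settings help, here is a simplified comparison. Real dynamic metadata is much richer than one number per scene. The function `compress_to_display`, the scene names and all brightness values below are hypothetical and exist only to illustrate the difference.

```python
def compress_to_display(
    pixel_nits: float,
    source_peak_nits: float,
    display_peak_nits: float,
    knee: float = 0.75,
) -> float:
    """Hypothetical knee-style compression of one pixel to the display's range."""
    if source_peak_nits <= display_peak_nits:
        return pixel_nits
    knee_nits = knee * display_peak_nits
    if pixel_nits <= knee_nits:
        return pixel_nits
    t = (pixel_nits - knee_nits) / (source_peak_nits - knee_nits)
    return knee_nits + t * (display_peak_nits - knee_nits)


DISPLAY_PEAK = 1000.0  # assumed TV
MOVIE_PEAK = 4000.0    # static metadata: one value for the whole film

# Made-up dynamic metadata: a separate peak for each scene.
scene_peaks = {"dark corridor": 900.0, "explosion": 4000.0}

pixel = 900.0  # the brightest pixel in the dark corridor scene

# Static: the TV only knows the film peaks at 4000 nits, so it compresses.
static_result = compress_to_display(pixel, MOVIE_PEAK, DISPLAY_PEAK)

# Dynamic: the TV knows this scene peaks at 900 nits and leaves it alone.
dynamic_result = compress_to_display(pixel, scene_peaks["dark corridor"], DISPLAY_PEAK)

print(f"static: {static_result:.1f} nits, dynamic: {dynamic_result:.1f} nits")
```

In this toy example the static approach dims a highlight that the TV could have shown at full brightness, while the per-scene value lets it through untouched.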

HDR10 and HDR10+

The most common format with support for static metadata is HDR10. In fact, it is the most common HDR format of all. If you see an HDR sticker on your TV, you can be sure it supports HDR10. That is its strength.

But with only static metadata, it is hard to call it true HDR. So Samsung, together with 20th Century Fox and Panasonic, decided to fix this shortcoming and added support for dynamic metadata to HDR10, calling the new standard HDR10+.

The result is impressive: 10 bits, 4000 nits, more than a billion shades. But is the difference between HDR10 and HDR10+ visible in practice?

We have a Samsung Q950T QLED TV that supports both formats, so the comparison is as fair as it can be. We played movies mastered in HDR10 and in HDR10+. And you know what? I really did see the difference. HDR10 looks cool on this TV, and HDR10+ is simply mind-blowing. And it is not only down to HDR10+.

Adaptive picture

The fact is that HDR content is significantly more demanding of display quality than SDR. For example, brightness in HDR video is specified not in relative values, that is, percentages, but in absolute values, in nits. So, like it or not, if the video specifies that a particular area of the image should shine at 1000 nits, the TV has to be able to produce that brightness. Otherwise, it is no longer HDR.

And if, suddenly, you watch a video during the day, in a brightly lit room, then you also need to compensate for the ambient lighting. Most devices fail to do this. But Samsung QLED TVs have a huge advantage in this regard.

Firstly, they use Adaptive Picture technology, which adjusts the brightness and contrast of the image depending on the ambient light.

Secondly, as I said, the brightness reserve in QLED is 4000 nits. And this is enough to compensate for almost any external lighting.

OLED TVs, by contrast, can only deliver the required level of contrast with the curtains tightly drawn.

Other technologies

Naturally, this isn’t the only cool technology inside this TV. It has a powerful Quantum 8K neural processor that can upscale 4K content to 8K in real time. It does more than sharpen the image: it recognizes different types of textures and processes them separately. There are also ultra-wide viewing angles and excellent surround sound, which, by the way, also adapts to the noise level in the room in real time. And there is a host of other technologies exclusive to Samsung’s QLED TVs.

But today’s main technology is HDR10+, and best of all, it is not exclusive.

HDR10+ is an open and free standard, just like regular HDR10. That gives it a huge advantage over Dolby Vision, which does essentially the same thing but is paid. That is why HDR10+ is not limited to Samsung TVs and smartphones: it is supported by many manufacturers of TVs, smartphones and cameras, and, of course, films are shot and mastered in this format. This means that HDR10+ has every chance of becoming a true people’s HDR standard that you can enjoy on every screen in the country, large and small.