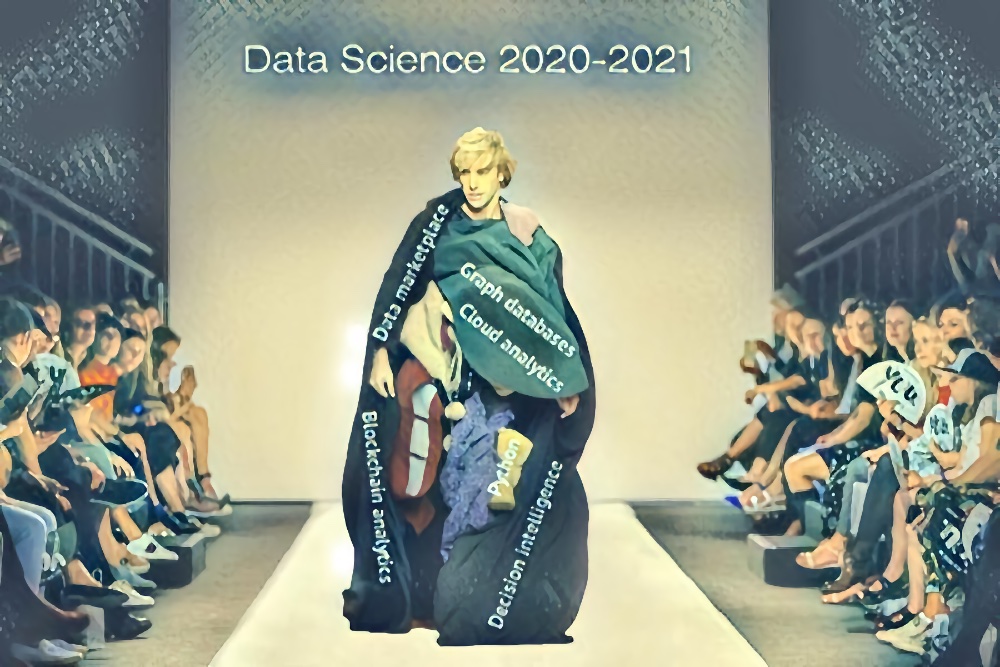

Trends in Data Science 2020-2021

I made the header image and then processed it with a neural network. If you recognized the film, well done! 🙂

AI and neural networks

Artificial intelligence still struggles to pass the Turing test, but the field has had notable successes.

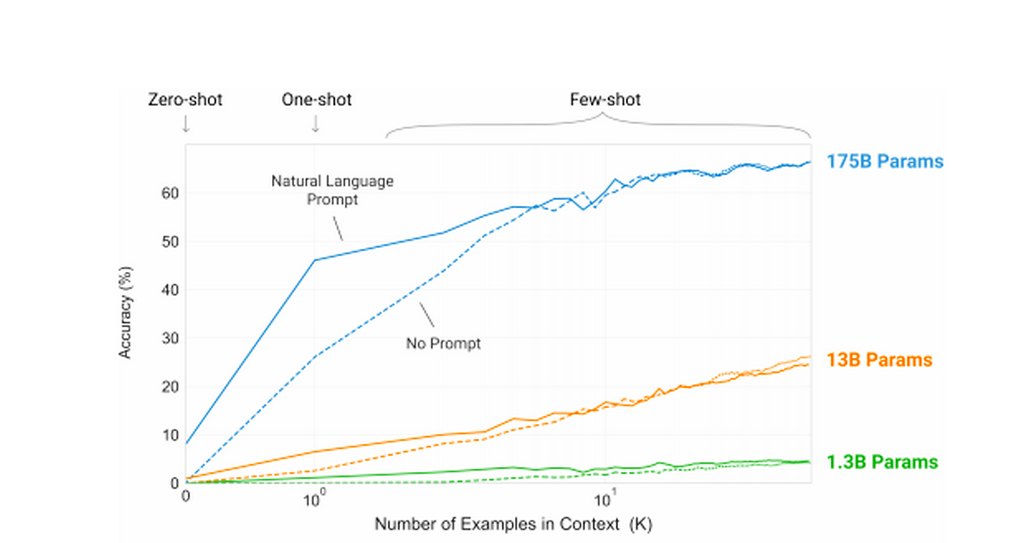

In May 2020, the OpenAI team unveiled GPT-3, a new natural language processing model. It is widely regarded as the most capable model of its kind available today.

The improvements over its predecessor, GPT-2, are enormous. The parameter count grew more than 100-fold: GPT-3 has 175 billion parameters, while GPT-2 has only 1.5 billion.

Where earlier neural networks produced text that only roughly resembled human writing, GPT-3's capabilities are much broader.

One student published articles written by GPT-3 on his blog, Apolos. They were not especially sophisticated, written in the style of a motivational coach, yet only one of the tens of thousands of readers suspected that they had not been written by a person.

This is one of the reasons OpenAI has not released the model publicly: it could be used to bury the Internet under an avalanche of fake news.

The potential applications of GPT-3 are enormous, from a new generation of voice assistants to adaptive game mechanics that could take RPGs to a whole new level.

By the way, have you tried AI Dungeon, the text adventure game powered by GPT-3? If not, give it a try; it is a very interesting experience. This article describes one such experience.

Decision intelligence

Decision intelligence (DI) is a fairly recent discipline that applies scientific theories of decision making. Its aim is for decisions to be based on the analysis and comparison of data rather than on the subjective experience or feelings of the decision maker.

DI makes it possible to automate routine and operational decisions, taking load off the decision maker.

The medical system InferVision, based on the AlphaGo algorithm, was launched in 2015, but it was in 2020 that it showed its full power. In China, the number of people undergoing computed tomography grew exponentially, and specialists simply could not keep up with the results: a physician needs 10 to 30 minutes to analyze a single CT scan.

InferVision came to the rescue by analyzing a CT scan in about 5 seconds. This made it possible to immediately screen out people with no pathological changes in the lungs, while for patients with pathologies the system immediately suggested a presumptive diagnosis. Naturally, all of this was done under the supervision of a specialist, who made the final decisions, but it cut the time needed to process a single scan several times over.
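The screening workflow described above can be sketched as a simple routing rule. This is a minimal, hypothetical illustration, not InferVision's actual method: it assumes an upstream model has already assigned each scan an abnormality score between 0 and 1, and the threshold and names (`Scan`, `triage`, `CLEAR_THRESHOLD`) are invented for the example. Only confidently normal scans are screened out; everything else goes to a specialist.

```python
from dataclasses import dataclass

# Hypothetical cut-off: scans scoring below it are treated as "no pathology".
CLEAR_THRESHOLD = 0.05


@dataclass
class Scan:
    patient_id: str
    abnormality_score: float  # assumed output of an upstream model, in [0, 1]


def triage(scans, threshold=CLEAR_THRESHOLD):
    """Split scans into a 'clear' queue and a 'specialist review' queue.

    Only confidently normal scans are screened out; the rest are sent to a
    physician, sorted so the most suspicious scans come first.
    """
    clear = [s for s in scans if s.abnormality_score < threshold]
    review = [s for s in scans if s.abnormality_score >= threshold]
    review.sort(key=lambda s: s.abnormality_score, reverse=True)
    return clear, review


if __name__ == "__main__":
    batch = [Scan("A", 0.01), Scan("B", 0.92), Scan("C", 0.40), Scan("D", 0.03)]
    clear, review = triage(batch)
    print("screened out:", [s.patient_id for s in clear])
    print("for review:", [s.patient_id for s in review])
```

A low threshold trades more specialist work for fewer missed pathologies, which keeps the physician, not the system, in charge of the decision.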

Decision intelligence relies on AI and deep learning. InferVision, for example, was trained on 100,000 cases.

Of course, at the current level of technology, AI still cannot make objectively better decisions in systems with many variables: it simply lacks the computing power and input data. But in many situations it helps eliminate human impulsivity, bias, and simple reasoning errors. It also automates routine decisions, freeing specialists' time for complex problems.

Cloud analytics

Cloud analytics systems existed before, but their development accelerated sharply in 2020.

Cloud analytics simplifies working with large, frequently updated datasets. A single analytics system shared by all divisions of a company keeps results up to date and speeds up their use.

Real-time analytics is the next step many companies are working toward. It is better to act on fresh results computed a few seconds ago, since an analysis made yesterday may already be out of date.
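To illustrate the difference between yesterday's report and a live metric, here is a minimal sketch of a sliding-window aggregate that only reflects events from the last few seconds. It uses only the Python standard library; the class name `SlidingWindowAverage` and the window length are assumptions for the example, and production systems would normally rely on a stream-processing engine instead.

```python
from collections import deque


class SlidingWindowAverage:
    """Running average over events from the last `window_seconds` seconds.

    Assumes events arrive with non-decreasing timestamps.
    """

    def __init__(self, window_seconds=60.0):
        self.window = window_seconds
        self.events = deque()  # (timestamp, value) pairs, oldest first
        self.total = 0.0

    def _evict(self, now):
        # Drop events that have fallen out of the window.
        while self.events and self.events[0][0] <= now - self.window:
            _, value = self.events.popleft()
            self.total -= value

    def add(self, timestamp, value):
        self.events.append((timestamp, value))
        self.total += value
        self._evict(timestamp)

    def average(self, now):
        self._evict(now)
        return self.total / len(self.events) if self.events else 0.0


if __name__ == "__main__":
    avg = SlidingWindowAverage(window_seconds=10)
    avg.add(0, 100.0)
    avg.add(5, 200.0)
    print(avg.average(now=6))   # 150.0: both events are still fresh
    print(avg.average(now=12))  # 200.0: the event at t=0 has expired
```

Keeping a running total means each update costs amortized constant time instead of re-scanning the whole window.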

Cloud analytics is a promising tool for business giants that have analytics departments in every branch, which is why large companies such as IBM are actively developing such systems.

Data marketplaces

Data marketplaces are related to cloud analytics, but they are a phenomenon in their own right.

Data quality is critical for analysis. If a startup cannot afford large-scale market research, it risks moving blindly, without knowing the real needs of its target audience.

Now, however, analytics can be bought. Data marketplaces are full-fledged information markets. The well-known Statista is one of the first such marketplaces, and the industry is now growing at a tremendous pace.

Naturally, no one sells personal data (legally, at least): names, residential addresses, phone numbers, and email addresses are protected by law. Anonymized data, however, can be sold, and it holds a lot of value for businesses: age and gender, social status, preferences, field of work, hobbies, nationality, and hundreds of other parameters you leave online, down to whether you choose iOS or Android devices. Remember the old truth: if something online is free, you yourself may be the payment.
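A simplified sketch of how direct identifiers might be stripped before data is shared: it drops fields such as name, address, phone, and email, and generalizes exact age into a ten-year band. The field names and banding rule are assumptions for illustration. Real anonymization is considerably harder, since combinations of the remaining attributes can still re-identify people, which is why formal techniques such as k-anonymity exist.

```python
# Hypothetical field names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "email"}


def age_band(age):
    """Generalize an exact age into a ten-year band, e.g. 34 -> '30-39'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def anonymize(record):
    """Return a copy of `record` without direct identifiers and with age banded."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "age" in cleaned:
        cleaned["age"] = age_band(cleaned["age"])
    return cleaned


if __name__ == "__main__":
    user = {
        "name": "Jane Doe",
        "email": "jane@example.com",
        "age": 34,
        "gender": "F",
        "platform": "Android",
        "hobbies": ["cycling"],
    }
    print(anonymize(user))
    # {'age': '30-39', 'gender': 'F', 'platform': 'Android', 'hobbies': ['cycling']}
```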

In 2020 the Big Data market was worth $138.9 billion, and experts predict it will grow to $229.4 billion by 2025. This is a colossal market, and the lion's share of it will come from selling information rather than collecting it.
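The forecast implies a compound annual growth rate (CAGR), which can be checked with the standard formula CAGR = (end / start) ** (1 / years) - 1. Assuming five compounding periods between 2020 and 2025, the figures above work out to roughly 10.6% per year.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Big Data market size in billions of USD, as quoted in the text.
rate = cagr(138.9, 229.4, years=5)
print(f"Implied annual growth: {rate:.1%}")  # Implied annual growth: 10.6%
```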

Blockchain in analytics

The blockchain hype has died down somewhat. In 2017 seemingly everyone wanted to launch their own cryptocurrency; by 2020 blockchain was being used for more pragmatic purposes.

The combination of blockchain and big data has been called the perfect union: blockchain focuses on extracting and recording reliable data, while data science analyzes large amounts of data to find patterns and make predictions.

Big data is quantity and blockchain is quality.

Integrating blockchain into big data analysis has several potential benefits:

- Improving the security of data and analytics results.

- Maintaining maximum data integrity.

- Preventing the use of false data.

- Real-time analytics.

- Improving the quality of big data.
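The integrity benefits in this list come from chaining records by cryptographic hash: each block stores the hash of the previous one, so altering any past record breaks the link that follows it. Below is a minimal, single-machine sketch of that idea using Python's standard `hashlib` and `json` modules. It is deliberately simplified (no consensus, signatures, or network) and does not reflect how any particular platform implements it; the function names are hypothetical.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 of a block's contents, serialized deterministically."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, data):
    """Append a new block that points to the hash of the previous block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})


def is_valid(chain):
    """Check that every block still references the unmodified previous block."""
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


if __name__ == "__main__":
    ledger = []
    append_block(ledger, {"sensor": "A", "reading": 21.5})
    append_block(ledger, {"sensor": "B", "reading": 19.0})
    append_block(ledger, {"sensor": "A", "reading": 22.1})
    print(is_valid(ledger))  # True

    ledger[1]["data"]["reading"] = 99.9  # tamper with a past record
    print(is_valid(ledger))  # False
```

On its own, this check cannot detect a change to the newest block; real systems also replicate the chain across many participants so that a single copy cannot be rewritten unnoticed.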

Another application is blockchain for KYC (know your customer). KYC procedures are used by banks and government agencies, but since organizations do not share a common data store, a customer must be identified separately by each of them. Blockchain solves this problem.

The Samsung Nexledger platform, launched in Korea, simplifies this scheme: a customer only needs to complete the full identification procedure at one bank or organization. Opening an account at another bank that participates in the system then takes just a few minutes. You go through all the circles of bureaucratic hell only once, and that's it.

Graph databases

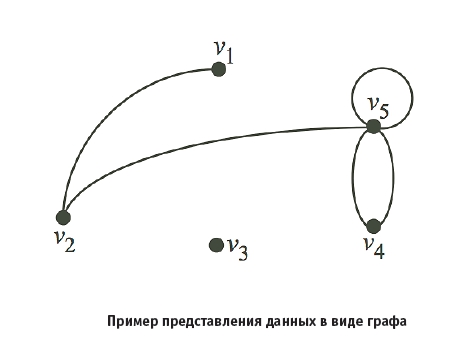

Graph databases are not the most popular or widespread type of DBMS. They are designed specifically for storing topologies made up of nodes and the relationships between them. This is not just a dataset in the classic table format; the underlying model is fundamentally different.

Graphs are built around the relationships between entities rather than the entities themselves.

And this is a real goldmine for marketing: graph analysis can be used to identify opinion leaders and influencers on social networks, personalize advertising and loyalty programs, analyze viral campaigns, improve SEO, and much more.
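As a toy illustration of influencer analysis, the sketch below stores a follower graph as an adjacency mapping and ranks accounts by in-degree, that is, by how many accounts follow them. In-degree is only the simplest centrality measure (graph tools also offer richer ones such as PageRank), and the account names are made up.

```python
from collections import Counter

# Hypothetical follower graph: each key follows the accounts in its list.
follows = {
    "ann": ["blogger", "chef"],
    "bob": ["blogger"],
    "cat": ["blogger", "chef", "ann"],
    "dan": ["chef"],
    "blogger": ["chef"],
}


def top_influencers(graph, k=3):
    """Rank accounts by in-degree (number of followers)."""
    followers = Counter(target for targets in graph.values() for target in targets)
    return followers.most_common(k)


print(top_influencers(follows, k=2))  # [('chef', 4), ('blogger', 3)]
```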

Graphs allow you to analyze complex hierarchical structures that would be problematic to model using relational databases.
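Hierarchies and chains of relationships are natural to traverse in a graph, whereas a relational database typically needs repeated self-joins or a recursive query for each additional level. The sketch below finds every node within a given number of hops of a starting node using breadth-first search, the same pattern that underlies contact tracing. The contact data and function name are hypothetical.

```python
from collections import deque

# Hypothetical undirected contact graph.
contacts = {
    "p1": ["p2", "p3"],
    "p2": ["p1", "p4"],
    "p3": ["p1"],
    "p4": ["p2", "p5"],
    "p5": ["p4"],
}


def within_hops(graph, start, max_hops):
    """Return {node: distance} for nodes reachable from `start` in <= max_hops edges."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in distances:
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    return distances


print(within_hops(contacts, "p1", max_hops=2))
# {'p1': 0, 'p2': 1, 'p3': 1, 'p4': 2}
```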

In 2020, graph analysis was actively used to track the spread of the coronavirus in China and beyond. One such study is based on dynamic data from 200 countries, which makes it possible to forecast how the global situation will develop and to take measures to mitigate the consequences. If you are interested, the full study is here…

In 2020, interest in graph DBMSs increased significantly. They are used by eBay, Airbnb, IBM, Adobe, NBC News, and dozens of other big companies, and specialists who know how to work well with graph databases are worth their weight in gold.

Python in Data Science

Python continues to conquer the global analytics and development market, and its position is only getting stronger. You can read more in this article.

Python confidently leads the PYPL ranking, which is based on Google Trends data.

Python ranks second on GitHub by number of pull requests, with 15.9% of all pull requests. For comparison, R, Python's perennial rival in analytics, is in 33rd place and accounts for only 0.09% of pull requests.

Employers also increasingly want analysts who know Python. We recently analyzed the Data Science job market in Russia and found that 81% of vacancies require Python, while R (without Python) is required in only 3%.

R remains a good language for analytics, but Python has almost completely captured the market. In 2012 the two were roughly on par; now Python's leadership is undeniable, and this has to be reckoned with.

2020 brought a lot of new developments to Data Science, as the field of big data analytics is itself evolving rapidly. Of course, these are far from all the trends worth mentioning. And a question for data scientists: which professional trends influenced your work most this year? We would love to hear from you.
