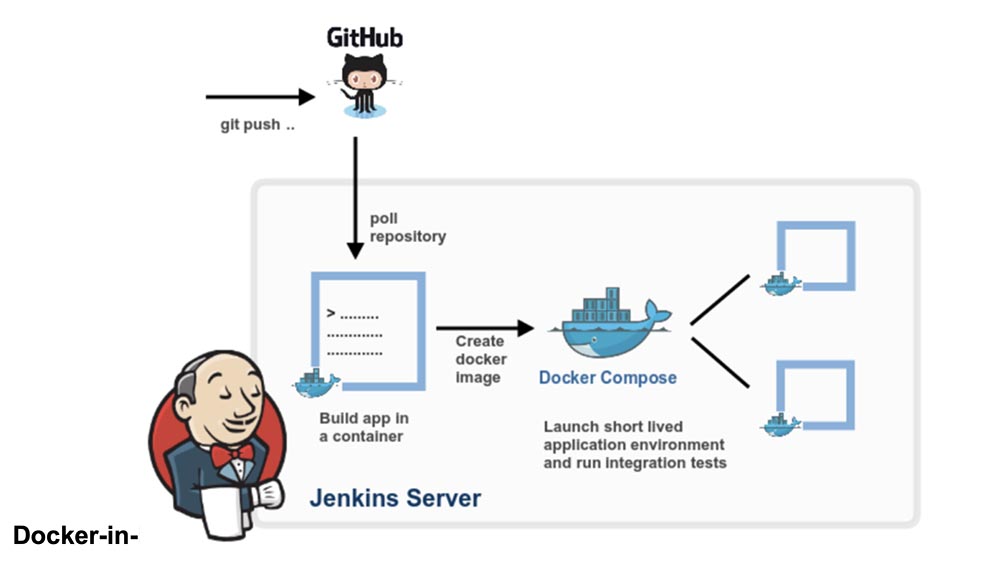

Think twice before using Docker-in-Docker for your CI or test environment.

Docker-in-Docker is a Docker daemon that runs inside a container, typically so that container images can be built from within it. Its original purpose was to help with the development of Docker itself. Many people use it to run Jenkins CI. At first this seems fine, but it leads to problems that can be avoided by giving the Jenkins CI container access to the host’s Docker socket instead. This article explains how. If you only want the final solution without the details, skip to the last section, “Solution.”

Docker-in-Docker: Good

More than two years ago, I contributed the --privileged flag to Docker and wrote the first version of dind. The goal was to help the core team develop Docker faster. Before Docker-in-Docker, a typical development cycle looked like this:

- hackity hack;

- build;

- stop the running Docker daemon;

- launch the new Docker daemon;

- test;

- repeat.

If you wanted a clean, reproducible build (that is, one done in a container), the cycle became more intricate:

- hackity hack;

- make sure that a working version of Docker is running;

- build the new Docker with the old Docker;

- stop the old Docker daemon;

- launch the new Docker daemon;

- test;

- stop the new Docker daemon;

- repeat.

With the advent of Docker-in-Docker, the process has been simplified:

- hackity hack;

- build + run in one step;

- repeat.

Isn’t that much better?

Docker-in-Docker: Bad

However, contrary to popular belief, Docker-in-Docker is not 100% composed of stars, ponies, and unicorns. I mean, there are several issues that a developer needs to know about.

One of them concerns LSMs (Linux Security Modules) such as AppArmor and SELinux: when a container starts, the “internal Docker” may try to apply security profiles that conflict with or confuse the “external Docker”. This was the hardest problem to solve when trying to merge the original implementation of the --privileged flag. My changes worked, and all the tests passed on my Debian machine and on the Ubuntu test virtual machines, but they would crash and burn on Michael Crosby’s machine (as far as I remember, he was running Fedora). I can’t remember the exact cause of the problem, but it was probably because Mike is a wise person who runs SELinux in enforcing mode (I was using AppArmor), and my changes did not take SELinux profiles into account.

Docker-in-Docker: Ugly

The second problem is related to Docker storage drivers. When you run Docker-in-Docker, the external Docker runs on top of a regular file system (EXT4, BTRFS, or whatever you have), while the internal Docker runs on top of a copy-on-write system (AUFS, BTRFS, Device Mapper, etc., depending on what the external Docker is configured to use). Many of these combinations will not work. For example, you cannot run AUFS on top of AUFS.

If you run BTRFS on top of BTRFS, it should work at first, but once nested subvolumes exist, removing the parent subvolume will fail. Device Mapper is not namespaced, so if several Docker instances use it on the same machine, they can all see (and affect) each other’s image and container backing devices. This is bad.
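To find out which combination applies on a given machine, it can help to ask each daemon which storage driver it uses. The following is a minimal sketch, assuming the docker CLI is on the PATH; the `DOCKER` override variable and the `storage_driver` function name are illustrative helpers, not part of Docker.

```shell
# DOCKER is a hypothetical override so the command can be dry-run (e.g. DOCKER=echo).
DOCKER="${DOCKER:-docker}"

# Print the storage driver of the daemon the CLI is talking to
# (for example aufs, btrfs, or devicemapper).
storage_driver() {
  "$DOCKER" info --format '{{.Driver}}'
}
```

Calling `storage_driver` once on the host and once inside the Docker-in-Docker container shows the external and the internal driver, respectively.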

There are workarounds for many of these problems. For example, if you want to use AUFS in the internal Docker, just make the /var/lib/docker directory a volume and everything will be fine. Docker has also added some basic namespacing to Device Mapper target names, so that multiple Docker instances running on the same machine won’t step on each other.
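As a rough illustration of the volume workaround, the sketch below starts an internal daemon with /var/lib/docker on an anonymous volume. It assumes the official `docker:dind` image; the `DOCKER` override variable, the `start_dind` function, and the container name passed to it are hypothetical.

```shell
# DOCKER is a hypothetical override so the command can be dry-run (e.g. DOCKER=echo).
DOCKER="${DOCKER:-docker}"

# Start an internal daemon whose /var/lib/docker lives on a volume instead of
# on the outer container's copy-on-write layer.
start_dind() {
  name="$1"   # container name chosen by the caller
  "$DOCKER" run --privileged -d --name "$name" -v /var/lib/docker docker:dind
}
```

For example, `start_dind ci-dind` would launch a privileged container named ci-dind. The volume only sidesteps the storage driver stacking problem; it does not address the other issues described in this article.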

However, this setup is not entirely straightforward, as you can see from the issues in the dind repository on GitHub.

Docker-in-Docker: It gets worse

What about the build cache? This can get tricky too. People often ask me, “If I am running Docker-in-Docker, how can I use the images located on my host, instead of pulling everything again in my internal Docker?”

Some enterprising people tried to bind-mount /var/lib/docker from the host into the Docker-in-Docker container. Sometimes they share /var/lib/docker with multiple containers.

Do you want data corruption? Because this is exactly how you get data corruption!

The Docker daemon was explicitly designed to have exclusive access to /var/lib/docker. Nothing else should touch, poke, or tickle any of the Docker files in this directory.

Why is this so? Because it is the result of one of the hardest lessons learned from developing dotCloud. The dotCloud container engine worked with several processes accessing /var/lib/dotcloud at the same time. Clever tricks, such as atomic file replacement (instead of editing in place), peppering the code with advisory and mandatory locks, and other experiments with reasonably safe systems such as SQLite and BDB, did not always work. When we redesigned our container engine, which eventually turned into Docker, one of the main design decisions was to gather all container operations under a single daemon and do away with all this concurrent-access nonsense.

Do not get me wrong: it is quite possible to build something good, reliable, and fast that involves several processes and proper concurrency control. But we think it is simpler and easier to write and maintain code with Docker as the only actor.

This means that if you share the /var/lib/docker directory between multiple Docker instances, you will have problems. Of course, it may appear to work, especially in the early stages of testing. “Look, Ma, I can docker run ubuntu!” But try something more complex, for example pulling the same image from two different instances, and you will see the world burn.

This also means that if your CI system does builds and rebuilds, then every time you restart the Docker-in-Docker container, you risk nuking its cache. This is not cool at all!

Solution

Let’s take a step back. Do you really need Docker-in-Docker, or do you just want to be able to run Docker (that is, build and run containers and images) from your CI system, while the CI system itself runs in a container?

I bet most people need the latter: they want a CI system like Jenkins to be able to start containers. The easiest way to do this is to expose the Docker socket to your CI container by bind-mounting it with the -v flag.

Simply put, when you launch your CI container (Jenkins or another), instead of hacking something together with Docker-in-Docker, start it with:

docker run -v /var/run/docker.sock:/var/run/docker.sock ...

Now this container will have access to the Docker socket and will therefore be able to start containers. Except that instead of starting “child” containers, it will start “sibling” containers.

Try this using the official docker image (which contains the Docker binary):

docker run -v /var/run/docker.sock:/var/run/docker.sock -ti docker

It looks and works like Docker-in-Docker, but it is not Docker-in-Docker: when this container creates additional containers, they are created in the top-level Docker. You will not experience the side effects of nesting, and the build cache will be shared across multiple invocations.
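The difference between “child” and “sibling” containers can be observed directly. The sketch below starts a container through the mounted socket and then lists it from the host. It assumes the official docker and busybox images; the `DOCKER` override variable, the function names, and the container name passed to them are hypothetical.

```shell
# DOCKER is a hypothetical override so the commands can be dry-run (e.g. DOCKER=echo).
DOCKER="${DOCKER:-docker}"
SOCK=/var/run/docker.sock

# From a container that has the socket mounted, start a short-lived container.
# The request goes to the host daemon, so the new container is a sibling.
launch_sibling() {
  name="$1"
  "$DOCKER" run --rm -v "$SOCK:$SOCK" docker \
    docker run -d --name "$name" busybox sleep 60
}

# On the host, list the container created above by name.
list_sibling() {
  "$DOCKER" ps --filter "name=$1"
}
```

After `launch_sibling demo`, running `list_sibling demo` on the host should show the busybox container next to the other top-level containers, rather than nested inside the one that created it.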

Note: previous versions of this article advised bind-mounting the Docker binary from the host into the container. This is no longer reliable, because the Docker Engine is no longer distributed as a static (or almost static) binary.

Thus, if you want to use Docker from Jenkins CI, you have two options:

- install the Docker CLI using your base image’s packaging system (for example, if your image is based on Debian, use the .deb packages);

- use the Docker API.
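For the API route, one possible sketch is to talk to the Docker Engine API over the mounted socket with curl, without installing the Docker CLI at all. This assumes a curl build that supports the --unix-socket option; the `CURL` and `SOCK` variables and the `docker_api_get` function are hypothetical helpers.

```shell
# CURL is a hypothetical override so the request can be dry-run (e.g. CURL=echo).
CURL="${CURL:-curl}"
SOCK="${SOCK:-/var/run/docker.sock}"

# Send a GET request for an Engine API path over the Unix socket.
# The host part of the URL is only a placeholder; the socket decides the target.
docker_api_get() {
  "$CURL" --silent --unix-socket "$SOCK" "http://localhost$1"
}
```

For example, `docker_api_get /version` returns the daemon version as JSON, and `docker_api_get /containers/json` lists the running containers.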
