The Path from Open Source to Enterprise

Our team develops information security products at the Digital Economy League. In this article, we would like to share our experience of building our product, the TsUP 2.0 infrastructure secrets management module, on top of open source software. In our case, this is “vanilla” Vault version 1.7, which was available under the free MPL license. Having taken it as a basis in 2021, we almost immediately ran into the following problems:

- lack of horizontal scaling;
- performance limitations under high load;
- inflexible monitoring;
- limited access control capabilities.

We had to solve all of these problems because our secrets module was to be deployed in a project where it would be integrated with Mission Critical+ systems. The rest of the article describes how we did it.

It all started with scaling. We knew that Enterprise Vault could work with Namespaces (or tenants), which largely solved the scaling problem, but open source Vault did not have this ability. In addition, access control in open source Vault is limited to access policies at the level of paths and request methods. That is useful, but we needed more: Namespaces, as in Enterprise.

“Challenge accepted,” we thought.

A couple of months into development, we had added Namespaces (or tenants) to our implementation. Our first-level tenant is the new Vault core.

Each Namespace also contains its own isolated objects of the following types:

- Secret Engines;
- Auth Methods (authentication methods);
- Policies;
- Identities (groups);
- Tokens.

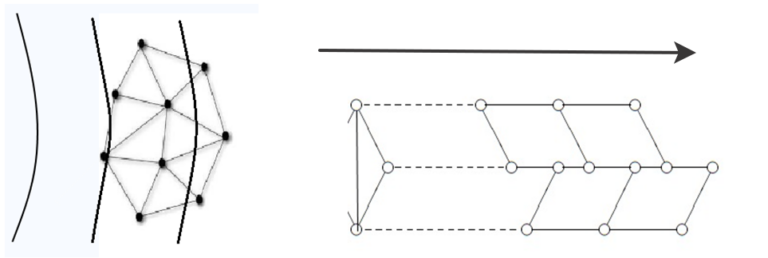

We made it so that a first-level Namespace (or tenant) could contain a nested second-level Namespace. The second level could contain a third, and so on.

Naturally, it was important to keep the API for managing these tenants compatible with the Enterprise Vault API. Therefore, if a project already has tenant management tools based on the Enterprise Vault API specification, they can be reused with our product.
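To make the nesting and isolation concrete, here is a minimal sketch of a tenant tree. It is an illustration only, not the product's code: the `Namespace` class, its fields, and the slash-separated path format are assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Namespace:
    """A tenant with its own isolated objects (hypothetical model)."""
    name: str
    policies: dict = field(default_factory=dict)   # isolated per tenant
    children: dict = field(default_factory=dict)   # nested tenants by name

    def create_child(self, name: str) -> "Namespace":
        if name in self.children:
            raise ValueError(f"namespace {name!r} already exists")
        child = Namespace(name)
        self.children[name] = child
        return child

    def resolve(self, path: str) -> "Namespace":
        """Walk a slash-separated path such as 'dept/team' from this tenant."""
        node = self
        for part in filter(None, path.split("/")):
            node = node.children[part]  # KeyError if the tenant is missing
        return node


root = Namespace("root")
dept = root.create_child("dept")
team = dept.create_child("team")
dept.policies["read-only"] = 'path "secret/*" { capabilities = ["read"] }'

print(root.resolve("dept/team").name)   # team
print("read-only" in team.policies)     # False: policies are not shared
```

A policy created in `dept` is not visible in the nested `team` tenant, which is the isolation property described above.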

A question may arise: what do you do with the large number of objects that have to be created so that multiple consuming systems can manage secrets under multi-tenant separation?

We introduced the concept of a Storage: a series of tenants nested within each other. Such Storages can be built based on departmental hierarchies, authentication methods, policies, groups, external groups in Active Directory, or depending on the engines used to manage secrets.

To control this large abstraction (the Storage), we implemented a so-called complex operations module (COM) in the product. We exposed the methods for managing Storages externally, while the low-level structures listed above are managed under the hood. Access to Storage management is thus granted to the automation system at the policy level, while direct access to the low-level structures is prohibited.

A separate schema in the DBMS acts as the backend for each tenant. Schemas can be placed either in the main DBMS cluster or in separate dedicated clusters. You can place a tenant in an isolated DB cluster either when creating a Storage or later, during operation, once it becomes clear that a specific tenant should live in a separate cluster. This feature allowed us to make significant progress on the scaling problem at the Storage Backend level (i.e. at the DB level).
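The placement rule can be pictured as a small registry that maps each tenant to a cluster and a schema. This is a sketch under assumptions: the class names, the `ns_` schema prefix, and the cluster identifiers are hypothetical, and the actual data migration is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Placement:
    cluster: str   # hypothetical cluster identifier
    schema: str    # one schema per tenant


class TenantPlacements:
    def __init__(self, main_cluster: str):
        self.main_cluster = main_cluster
        self._by_tenant = {}

    def create(self, tenant: str, cluster: Optional[str] = None) -> Placement:
        """Place a new tenant in the main cluster unless one is given."""
        placement = Placement(cluster or self.main_cluster, f"ns_{tenant}")
        self._by_tenant[tenant] = placement
        return placement

    def move(self, tenant: str, cluster: str) -> Placement:
        """Re-point an existing tenant at a dedicated cluster.

        Copying the schema's data is out of scope for this sketch.
        """
        old = self._by_tenant[tenant]
        self._by_tenant[tenant] = Placement(cluster, old.schema)
        return self._by_tenant[tenant]

    def lookup(self, tenant: str) -> Placement:
        return self._by_tenant[tenant]


placements = TenantPlacements(main_cluster="pg-main")
placements.create("billing")                         # main cluster by default
placements.create("crm", cluster="pg-dedicated-1")   # isolated at creation
placements.move("billing", "pg-dedicated-2")         # isolated later
```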

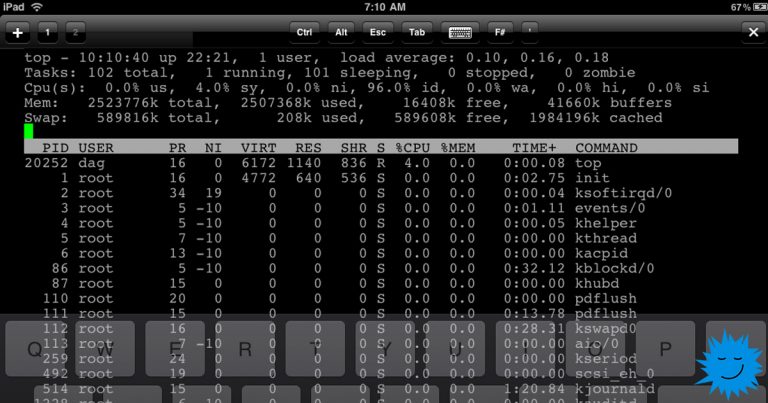

Then the question of performance arose. First, we had to figure out who was causing the load and when. For this purpose, the monitoring metrics had to be slightly modified.

To monitor the performance of our application and the load on each tenant, we added Namespace tags to the monitoring metrics.

This allowed us to:

- identify tenants that receive more requests than others;
- rank the consumers that put the most load on Vault;
- identify those who have likely misconfigured their systems' integration with secrets management;
- help them set up the integration and thus make better use of computing resources.
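As an illustration of what a Namespace tag on a metric buys you, the sketch below counts requests per (namespace, operation) pair and ranks tenants by total load. The function and metric names are hypothetical; a real deployment would export such labels to its monitoring system rather than keep them in memory.

```python
from collections import Counter

# Request counter labeled by namespace and operation (hypothetical metric).
requests_total = Counter()


def record_request(namespace: str, operation: str) -> None:
    requests_total[(namespace, operation)] += 1


def top_namespaces(n: int) -> list:
    """Sum the counter over operations and return the n busiest tenants."""
    per_namespace = Counter()
    for (namespace, _operation), count in requests_total.items():
        per_namespace[namespace] += count
    return per_namespace.most_common(n)


sample = [("billing", "read", 5), ("billing", "write", 1), ("crm", "read", 2)]
for ns, op, times in sample:
    for _ in range(times):
        record_request(ns, op)

print(top_namespaces(1))  # [('billing', 6)]
```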

In addition, when it comes to working with consumers, we were able to create request quotas (Rate Limits), which let us limit throughput (RPS) at the level of access to an entire tenant. This relies, among other things, on functionality we added for managing the response time to the client in order to control the flow of requests.

Coupled with monitoring metrics, quotas allow you to identify and limit parasitic load on the system, freeing up resources for other consumers.
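One common way to implement a per-tenant RPS quota is a token bucket, sketched below. This is not necessarily the algorithm used in the product: the class, its parameters, and the idea of returning a suggested retry delay are assumptions made for the example.

```python
import math


class TenantRateLimiter:
    """Token bucket per tenant: `rps` tokens per second, up to `burst`."""

    def __init__(self, rps: float, burst: int):
        self.rps = rps
        self.burst = burst
        self._buckets = {}  # tenant -> (tokens, last_timestamp)

    def check(self, tenant: str, now: float):
        """Return (allowed, retry_after_seconds) for one request."""
        tokens, last = self._buckets.get(tenant, (float(self.burst), now))
        # Refill according to elapsed time, capped at the burst size.
        tokens = min(self.burst, tokens + (now - last) * self.rps)
        if tokens >= 1:
            self._buckets[tenant] = (tokens - 1, now)
            return True, 0
        self._buckets[tenant] = (tokens, now)
        # Time until one full token is available, rounded up to seconds.
        return False, math.ceil((1 - tokens) / self.rps)


limiter = TenantRateLimiter(rps=2, burst=2)
print(limiter.check("billing", now=0.0))  # (True, 0)
print(limiter.check("billing", now=0.0))  # (True, 0)
print(limiter.check("billing", now=0.0))  # (False, 1)
print(limiter.check("crm", now=0.0))      # (True, 0): separate bucket
```

Each tenant has its own bucket, so a noisy consumer exhausts only its own quota.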

Because tenants are implemented as isolated cores, we ran into a problem with unsealing our application, since all of these cores are loaded during unsealing. At the scale of our large project, that means several thousand tenants. Unsealing that many tenants required significant computing resources and took noticeable time (about 5 minutes for ten thousand tenants). If a failure causes the Vault master to move to another cluster node, 5 minutes of unsealing tenants means 5 minutes of system unavailability. For the Mission Critical+ criticality class, this was unacceptable.

What did we do to solve this problem?

We implemented fast switching of a Vault cluster node from slave to leader, so that new releases can be deployed to production environments without prolonged unavailability.

As part of this functionality, a cluster performance node is now partially unsealed at startup. The unsealing is partial because the expiration manager is not started; otherwise it is the same as on the master node. While in this state, the performance node can already route all requests (just like a regular slave node). In response to a request, such a performance node returns an unsealed status (code 200), so the balancer can send requests to it during the unsealing process. We call such a node a “pre-seal” node.

If the user sends a request that changes data (changes to the router such as creating or deleting secrets, auth, or audit engines; operations on policies, groups, entities, identities), the request is executed on the master node. A state synchronization request is then sent to EVERY performance node in the cluster, and after that the response is returned to the user.

This maintains data consistency between master and performance nodes. Tokenstore synchronization does not occur; when switching masters, the tokenstore cache is cleared.

In general, when the master is switched, one of the performance nodes in the cluster becomes the new master. Its state (data) is already consistent with the old master's state. The step that must complete before this new master can process requests is called “do-unseal” (finishing the unseal): it consists of turning on the expiration manager and clearing the node's cache.

This makes switching the master much faster than fully unsealing a new master.

When the cache on the master changes (user changes some secrets), the master node sends a request to invalidate the cache to EVERY performance node. Each performance node periodically polls the master, so the master node has a list of performance nodes to which it sends messages about synchronization or invalidation of the cache. If an error occurs during synchronization of a performance node, this node enters the suspended state – that is, it leaves the balancing to prevent receiving outdated data.

While a performance node is unsealing, sync and cache invalidation requests are stored in a message buffer on that node. These messages are processed once the performance node is fully unsealed.
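The notification and buffering behaviour described in the last two paragraphs can be sketched as follows. The classes, the message format, and the in-process method calls are simplifications assumed for this example; in a real cluster the messages travel over the network.

```python
class PerformanceNode:
    def __init__(self, name):
        self.name = name
        self.unsealed = False
        self.suspended = False
        self.cache = {}
        self._buffer = []          # messages received while unsealing

    def receive(self, message):
        if not self.unsealed:
            self._buffer.append(message)   # defer until unseal completes
        else:
            self._apply(message)

    def finish_unseal(self):
        self.unsealed = True
        for message in self._buffer:       # replay in arrival order
            self._apply(message)
        self._buffer.clear()

    def _apply(self, message):
        kind, key = message
        if kind == "invalidate":
            self.cache.pop(key, None)
        else:
            raise ValueError(f"unknown message kind: {kind}")


class Master:
    def __init__(self, nodes):
        self.nodes = nodes

    def invalidate(self, key):
        for node in self.nodes:            # every performance node is notified
            try:
                node.receive(("invalidate", key))
            except Exception:
                node.suspended = True      # node leaves balancing


ready = PerformanceNode("ready")
ready.unsealed = True
ready.cache["secret/db"] = "old"
starting = PerformanceNode("starting")     # still unsealing
starting.cache["secret/db"] = "old"

Master([ready, starting]).invalidate("secret/db")
print(ready.cache, starting.cache)   # {} {'secret/db': 'old'}
starting.finish_unseal()
print(starting.cache)                # {}
```

The node that is still unsealing applies the invalidation only after `finish_unseal()`, so it never serves a stale entry once it is fully in service.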

When the master is switched, the old master goes through a process called pre-seal. It is the reverse of do-unseal: anything that is turned on during do-unseal is turned off during pre-seal.

The functionality for quickly switching from slave to leader complements our improvements to graceful shutdown of the vault server.

The essence of these improvements is that the process of removing a node from balancing, updating the server, initializing and entering it back into balancing is performed smoothly, without losing user requests.

Between the time the shutdown or paused status is set and the time the load balancer actually stops forwarding requests to the Vault server, some number of requests will be received. In the worst case, the time that requests are still being received is equal to the healthcheck polling time set in the load balancer settings.

If the node is a standby, all requests received from the balancer must still be processed.

If the node is a leader, the processing of all new requests is stopped immediately (including those received via redirection), and previously received but unprocessed requests are processed as provided by the leadership transfer logic (step down).

Redirected requests receive a reply that results in an HTTP response to the client with code 429 and the Retry-After header. We also added to the agent the ability to keep working after a reboot.

To correctly process responses with code 429 and the Retry-After header, we have modified the SDK and, accordingly, the agent.
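On the client side, handling such a response boils down to waiting for the advertised interval and retrying. The sketch below shows the idea and is not the modified SDK itself: `call_with_retry`, the `send` callable, and the default values are hypothetical, and only the numeric form of Retry-After is parsed.

```python
import time


def call_with_retry(send, max_attempts=5, default_delay=1.0, sleep=time.sleep):
    """Call send() until it returns something other than HTTP 429.

    send() is a hypothetical callable returning (status, headers, body).
    """
    for attempt in range(1, max_attempts + 1):
        status, headers, body = send()
        if status != 429:
            return status, body
        if attempt == max_attempts:
            break
        try:
            delay = float(headers.get("Retry-After", default_delay))
        except ValueError:
            # Retry-After may also be an HTTP date; fall back to the default.
            delay = default_delay
        sleep(max(0.0, delay))
    raise RuntimeError(f"server still busy after {max_attempts} attempts")


# Simulated server: busy once, then successful.
responses = iter([(429, {"Retry-After": "2"}, b""), (200, {}, b"ok")])
waits = []
print(call_with_retry(lambda: next(responses), sleep=waits.append))  # (200, b'ok')
print(waits)  # [2.0]
```

Passing `sleep` as a parameter keeps the function testable without real delays.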

We have learned to update application servers without unavailability. But what about the DB cluster servers? Here we had to rack our brains a little over implementing multiple connections (clustering at the DB level).

For this purpose, we developed a storage backend, hereinafter referred to as the postgresql_v2 storage backend, based on the original postgresql storage backend. It supports one or more Patroni-based PostgreSQL clusters as the DBMS, with asynchronous physical replication configured between the clusters. The postgresql_v2 storage backend exposes the set of metrics (telemetry) of the original postgresql storage backend and can be used as the system's storage backend.

As a result, we got the following supported configurations:

One PostgreSQL cluster:

- a cluster of two PostgreSQL nodes and an arbiter, with synchronous physical replication configured between the PostgreSQL nodes.

Two PostgreSQL clusters:

- a master cluster of two PostgreSQL nodes and an arbiter, with synchronous physical replication configured between its PostgreSQL nodes;
- a standby cluster of two PostgreSQL nodes and an arbiter, with synchronous physical replication configured between its PostgreSQL nodes and asynchronous physical replication configured between the two clusters.

Now, shutting down DB cluster nodes for routine update work or emergency situations on one of the nodes does not result in system unavailability.

In addition, if you specify the nodes of both the primary and the backup cluster in the connection string, switching to the backup DB cluster happens without reconfiguring or rebooting the Vault servers. It is enough to make the backup DB cluster reachable from Vault at the network level and to break the connection to the primary cluster, which, for example, is in an emergency state.
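The switching logic can be illustrated with a simple "first reachable host wins" loop. This is a sketch of the idea rather than the postgresql_v2 implementation: the function name, the host names, and the injected `connect` callable are assumptions.

```python
def connect_first_available(hosts, connect):
    """Try hosts in order; return (host, connection) for the first that works.

    `connect` is a hypothetical callable that raises ConnectionError
    when a host is unreachable.
    """
    errors = {}
    for host in hosts:
        try:
            return host, connect(host)
        except ConnectionError as exc:
            errors[host] = str(exc)
    raise ConnectionError(f"no database host reachable: {errors}")


# Simulated network: the primary cluster is cut off, the backup is reachable.
def fake_connect(host):
    if host.startswith("pg-main"):
        raise ConnectionError("network unreachable")
    return f"connection to {host}"


hosts = ["pg-main-1", "pg-main-2", "pg-standby-1", "pg-standby-2"]
print(connect_first_available(hosts, fake_connect))
# ('pg-standby-1', 'connection to pg-standby-1')
```

Because the host list already contains both clusters, cutting the network path to the primary is enough to make the next connection attempt land on the backup cluster.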

This feature can also be used when the storage system on the main DB cluster is degrading, to quickly switch the application over to the backup DB cluster.

This is what the complete cold standby database diagram looks like:

Thus, the cold standby database we built is a fully functional set of database components that asynchronously replicates data from the main PostgreSQL cluster.

The cold standby duplicates not only the functional components of the database, but also the components that implement the non-functional requirements for hot standby.

Thus, after switching to the cold standby DB, the system is still protected from failures by the cold standby's own hot standby. The cold standby can then act as the main capacity, and the capacity that was taken out of service should become the cold standby once it is restored. To keep the data copy up to date, the PostgreSQL servers of the cold standby must be active and configured for cascading replication from the servers of the main cluster.

All the described activities and improvements have significantly increased the availability of the application.

We have dealt with downtime during routine maintenance on application servers and DB cluster servers. But one very important and difficult question remains: how systems that consume secrets behave during the core procedure of a secrets management module, secret rotation. What is the problem here?

A consumer system receives a secret. At that very moment, Vault may rotate the password, and the system will no longer be able to connect with the secret it received, for example, to its DB.

To solve this problem, we implemented “TUZ Kits” (TUZ stands for technological accounts), naturally while maintaining backward compatibility of the methods for obtaining secrets.

The essence of the improvement is that our secrets module supports sets of ACS pairs, each consisting of an ACS and its secret, with validity periods offset from each other. Thus, when access parameters are requested, a current secret with a guaranteed remaining validity period is issued, and when secrets are delivered, an up-to-date secret with a guaranteed remaining validity period is delivered. Accordingly, rotation of ACS secrets is configured so that the ACSs and secrets for which delivery is configured are up to date on all the resources that use them.
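A small example shows why offset validity periods help. With two accounts whose secrets expire at different times, there is always one with enough time left. The sketch below is an illustration under assumptions: the `Credential` fields, the account names, and the selection rule (longest remaining validity) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Credential:
    account: str
    password: str
    expires_at: float   # seconds, on the same clock as `now`


def pick_credential(credentials, now, min_remaining):
    """Return the credential with the longest remaining validity,
    provided it has at least `min_remaining` seconds left."""
    best = max(credentials, key=lambda c: c.expires_at)
    if best.expires_at - now < min_remaining:
        raise LookupError("no credential with the guaranteed remaining validity")
    return best


# Two accounts, each valid for 100 s, rotated with a 50 s offset.
kit = [
    Credential("svc_a", "pw-a", expires_at=60.0),    # 30 s left at now=30
    Credential("svc_b", "pw-b", expires_at=110.0),   # 80 s left at now=30
]
print(pick_credential(kit, now=30.0, min_remaining=40.0).account)  # svc_b
```

A consumer that asks at `now=30` never receives `svc_a`, whose password is about to be rotated, so the secret it gets stays usable for at least the guaranteed interval.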

Everything is great! We deployed the project, provided flexible scalability, excellent performance and high availability, and configured and put our system into operation. “Accounts are rotating”, clients are connecting. But what does the support team see on the graphs of application server resource consumption? Uneven resource utilization.

Let's recall the architecture of vanilla open source Vault: client requests are processed on the Master node. A Slave node only forwards the request to the Master, waits for the response, and returns it to the client. Thus, in a cluster of 3, 5, or 7 nodes, only the Master node actually uses its resources; the Slave nodes are essentially idle. Scaling is only vertical: no matter how many nodes are added to the cluster, performance is limited by the capacity of the Master.

To redistribute the load, we implemented functionality for reading from standby nodes.

The improvement provides horizontal scaling of system performance for requests that do not write to the database (physical backend). Scaling becomes possible because such requests are processed on standby nodes, while “unsuitable” requests are redirected to the cluster leader.

Thus, the following functions are implemented:

- filtering requests on standby nodes and redirecting to the leader those that cannot be processed on a standby node (write requests);
- authorizing requests on the standby node side;
- updating the cache of standby nodes when the leader's cache changes.
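The filtering step can be sketched as a routing decision made on each node. Classifying requests purely by HTTP method is an assumption made for this example; a real filter has to be more careful, because some read-style requests can still cause writes to the backend.

```python
# Methods treated as read-only in this sketch (an assumption, see above).
READ_METHODS = {"GET", "LIST", "HEAD"}


def route(method: str, node_role: str) -> str:
    """Decide where a request is processed.

    Returns "handle" to process locally or "forward_to_leader".
    """
    if node_role == "leader":
        return "handle"                 # the leader processes everything
    if method.upper() in READ_METHODS:
        return "handle"                 # reads are served by the standby
    return "forward_to_leader"          # writes must go to the leader


print(route("GET", "standby"))    # handle
print(route("POST", "standby"))   # forward_to_leader
print(route("POST", "leader"))    # handle
```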

Finally, in addition to the complex non-functional improvements that increase the fault tolerance and performance of the application, we made a number of changes to the functional part of Vault:

- built an engine plugin for managing certificates issued by external certification authorities;
- developed a plugin for the SSH key generation engine;
- built an engine plugin for managing credentials and Keytab files of IPA-based systems, and improved the plugins for standard engines by adding authentication methods to them;
- and so on. If anyone is interested, we can cover this separately 🙂

These are the interesting tasks that our team has solved. But there are no less interesting ones planned for future releases.

Oksana Bubelo, Product Manager of TsUP 2.0 Secrets Management Module, Alexander Salkov, Head of Development Group, and Konstantin Ryzhov, Product Architect