Suno prompt (Style) = fishing… An alternative?

As always, I don't claim to be exhaustive, and I hope this helps someone. (My previous articles about Suno: 1, 2.)

Yes, this is an important, difficult and “painful” topic. Many users have complained about how unpredictable generation is and how hard it is to get what you want. Even in my first article about Suno I tried to understand how the system works, to define what style in music is, and to work out how to compose a prompt, that is, a set of key parameters for generation… Three and a half months have passed; on July 9, Suno support congratulated me on passing the threshold of 500 generations… Has the system become any clearer to me?

Before AI systems, everything was simpler: on almost every device, the parameter values determined the result. Naturally, we expected the same or even greater “understanding” from AI services, since “intelligence” is in the name. To get past the novelty effect and accept this property of AI algorithms, I find it useful to practice generating pictures from text.

Here I refine a prompt step by step (it works better in English):

A girl drinks coffee on the balcony – result: yes, but the girl is sad, Chinese or Filipina, shot from afar, with a glass on the table in semi-darkness.

Young European woman smiling and drinking coffee on the balcony. The sun is shining. Medium shot. – result: already better, but brunette, looking to the side, lots of light, a cup in each hand, a wall behind her.

Young European woman smiling and drinking coffee on the balcony. In one hand the woman holds a porcelain cup. The woman is looking at the camera. Behind the woman are the balcony railings and a view of the city. In the distance, over the roofs of houses, the evening sun is shining, sunset. Medium shot. – result: almost what I wanted.

Even this simple example (I have shortened the number of iterations) takes patience. Now try to get a picture in which “a man and a woman are hugging, the woman is looking at the camera, and only the man's back is visible”: the AI sometimes produces such horrors…

In the early 90s the press became freer, and beauty contests became fashionable in “Soviet Youth” (Novosibirsk) and other newspapers: girls sent in photos and readers voted… One morning the employees arrive at our office, three programmers take turns looking at the newspaper (there are three new girls in it), and each one says something like “well, one is okay, pretty, but the others…” And each of them meant a different girl!

It's all about semantics. The semantic content of the same words is often different for different people. Give two people two identical cakes and ask them to describe the taste. You may get different, even opposite, descriptions.

But in graphics it is simpler: we describe objects, and the semantics are more obvious. By the way, in some services you can name a specific artist, for example Kuindzhi or Monet. In Suno this would correspond to commands like [Snoop Dogg] or [Joe Cocker]. I suppose it is technically possible (explained below), but it would make the copyright issues even more tense.

Which elements of a musical description carry a clear semantic load? Here they are (in my opinion), in descending order:

1. Tempo (this also includes the rhythmic structure – 4/4, 12/8…, tempo changes (agogics), etc.).

2. Tonality, harmonic sequence.

3. Composition of instruments (solo, duet, ensemble, orchestra, etc.).

4. Individual instruments/voice (here there are also many sound-production techniques and performance styles).

5. Genres (primarily the rhythmic basis) – this covers both varieties of one genre and combinations with others.

Unfortunately, items 1 and 2 cannot be set directly in Suno's Style parameters, and the rest have a less precise meaning.

Analysis and systematization

I honestly tried to figure out how to specify Style and the additional commands in Lyrics:

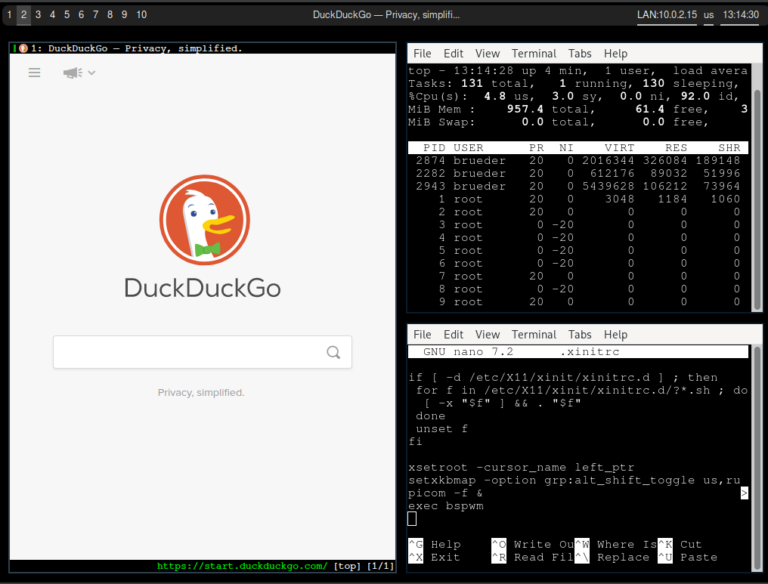

For each generation I saved the prompts in a separate file. (I delete tracks in Suno so as not to complicate my work.)

I looked at what other users were entering and copied the prompts that interested me.

I analyzed Lyrics – both the structure and the commands, and wrote down what seemed useful.

That is, I looked at “who fishes with what and what bait they use.” I thought I would collect statistics, put everything into a system, and then Suno would generate exactly what I needed… Naive.

There are some funny (or sad) comments on the Suno Wiki:

Is it possible to switch to male, female vocals and simultaneous singing (duet)? The answer is “yes and no”, it depends on …

Is it right to describe music in detail in Style? The answer is “very detailed – no”.

These answers do not seem quite logical, but only until you form an idea of how the system works, how Suno is built. As technology develops and specialization deepens, even many professionals no longer need to know the basics: an electrical repairman may not know Ohm's law, a violinist may not know musical acoustics… We are generally being weaned off understanding the “nature, physics” of devices even in general terms: everything is ready, you just press a button or a pedal. Yes, in the city you can get by without knowing how a car is built, but if you stall on a mountain pass or in the steppe 200 km from the nearest service station, that knowledge becomes extremely useful.

How does the system work (again)?

Taking into account the previous observations and after experimenting with Audio Input, I became even more convinced of the correctness of my guesses:

Suno uses real tracks (RT) to create and train Models*. Each Model most likely has:

ID and “description”,

a service comment, like “Sade / No Ordinary Love, 1992”, plus (possibly) a note about the license, copyright risks…

The description (essentially, Style on Suno's side) contains:

structure – musical form (Intro, Verse1, Chorus1, …);

tempo and notes on variations, if any;

key + (maybe) chords;

genre;

set of instruments, even models/series like Roland TR-808…;

vocals (m/f) and any changes between them, singing style;

emotional character;

functional purpose;

national/linguistic characteristics;

* I used to think that a Model is trained on several similar tracks. Now I think that ONE track is ONE Model… Yes, I think they downloaded everything they could find online.

Most likely, some of the description creation is automated, and some is done by specialists.

We set the Style and Lyrics. The system selects the Models with the most suitable parameters, then “figures out” how the text “fits” into these Models and selects a pair for generation. By the way, “fitting” the text to the structure, generating the melody itself, respecting the phonetics of the language, keeping the style of both the music and the singing manner intact, and not “landing on” the original vocals in the RT: all of this deserves, if not a Nobel Prize, then a dozen patents for sure!

When generating, the system can combine and modify individual parts of the Model (Verse, Chorus, etc.). I think the tempo and chord sequence are preserved from the original RT. That said, both the key and the tempo can be shifted slightly without much distortion, for example by ±2 semitones and ±10% of the bpm.
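To put rough numbers on that guess, here is a minimal Python sketch. The 2-semitone and 10% figures are the estimates from the paragraph above, not documented Suno limits; the function names are illustrative, and equal temperament is assumed for the pitch ratio.

```python
def semitone_ratio(semitones: float) -> float:
    """Frequency ratio for a shift of `semitones` in 12-tone equal temperament."""
    return 2.0 ** (semitones / 12.0)


def tempo_window(bpm: float, tolerance: float = 0.10) -> tuple[float, float]:
    """Lowest and highest tempo within +/- `tolerance` of `bpm`."""
    return bpm * (1.0 - tolerance), bpm * (1.0 + tolerance)


if __name__ == "__main__":
    low, high = tempo_window(90)
    print(f"90 bpm with 10% tolerance: {low:.0f} to {high:.0f} bpm")
    print(f"+2 semitones: frequency x{semitone_ratio(2):.3f}")
    print(f"-2 semitones: frequency x{semitone_ratio(-2):.3f}")
```

For a 90 bpm fragment this gives a window of roughly 81 to 99 bpm, and a two-semitone shift changes frequencies by about 12% upward or 11% downward.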

The system checks how closely the result matches the original RT and, if the match percentage is high, selects another Model (so as not to output something that is almost the original).

Thus, our new Suno track is a “drawing” of a new composition according to the rules of a real existing one (RT). That is, everything is literally repeated:

if part of the chorus was sung by a man and part by a woman, that is how it will be generated (see my example “Strange Girl” v9.0, even though [Male vocals] was specified);

there will be no [Distortion Guitar] solo, as requested, but a saxophone solo instead, if that is what the Model has;

there WILL BE NO introduction (even though you asked for one) if the Model does not have it;

and yes, there will be a modulation that you don't really like but that is in the Model…

So it becomes clear why the words of the verse sometimes “creep” into the music of the chorus (or vice versa), why the system “corrects” the text on its own, etc.

True, Suno can also “mix” different materials – more on that later.

So here, as in real fishing, there are no guarantees… If you accept this idea of how Suno is built, many questions disappear, right?

Regarding the detailed description. I listen to someone's cool example and think, “Now I'll copy his prompt and… And what's in the Style?” I look, and there is just [Pop] or [EDM]! There are, of course, interesting examples with a whole paragraph in Style. There are also many examples where nothing is specified in Style at all (this often happens in mine too).

When we do not describe the Style in detail, Suno has much more freedom: more Models are suitable, because there are fewer parameters to match. Maybe the text is easier to “fit in”, maybe fewer modifications to the structure are required. True, the resulting style may not suit you, but… you just need to generate more. In general, the “truth” is somewhere in the middle: with one parameter the scatter is very wide; with many parameters the search is narrow. The second seems “good”, but only if the Model description on Suno's side matches our Style, and how would we know that?
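The trade-off described above can be illustrated with a toy sketch. It is not Suno's actual mechanism, which is unknown; it only models the guess that each Model carries a tag description and that Style is matched against it. The catalog, its IDs and its tags are all invented for illustration.

```python
# Hypothetical catalog: IDs and tags are invented purely for illustration.
CATALOG = [
    {"id": "m1", "tags": {"pop", "female vocals", "upbeat"}},
    {"id": "m2", "tags": {"pop", "male vocals", "soft"}},
    {"id": "m3", "tags": {"punk", "male vocals", "aggressive"}},
    {"id": "m4", "tags": {"punk", "male vocals", "soft"}},
    {"id": "m5", "tags": {"edm", "instrumental", "upbeat"}},
]


def matching_models(style: list[str]) -> list[str]:
    """Return IDs of catalog entries whose tags include every requested tag."""
    wanted = {tag.strip().lower() for tag in style}
    return [model["id"] for model in CATALOG if wanted <= model["tags"]]


if __name__ == "__main__":
    for style in (
        [],
        ["Punk"],
        ["Punk", "Male vocals", "Soft"],
        ["Punk", "Lounge Singer"],
    ):
        print(style, "->", matching_models(style))
```

With an empty Style every entry is a candidate, each added tag can only shrink the list, and a tag that no description uses (here "Lounge Singer") leaves nothing to choose from, which is the risk of a detailed Style when we cannot see how Suno describes its Models.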

What you won't see from users, both in Style and in Lyrics commands! Recently (for the songs “Strange Girl” v1.0, v1.1) I wrote [Punk, Male vocals], wanting something “a little hooligan”, and got something different, too tough. I changed it to [Punk, Male vocals, Soft, Lounge Singer] and got soft acoustic rock. Where is the punk? And yet that parameter comes first… I am not claiming that I know better; I am just noting that everyone is searching, trying, experimenting and… probably getting used to this way of communicating with AI.

One of the problems is that after you adjust Style / Lyrics, the Model(s) change on the next generation. And in a different Model:

previous errors may not appear;

perhaps there will be some problems elsewhere.

So it is better to finish the track you already have: Suno will “finish drawing” it a little differently, but within the same Model. This brings us to Audio Input, an incomplete but effective alternative.

Audio Input as an alternative

Restrictions

When loading a fragment (6…60 sec.) in Audio Input mode, a warning is immediately displayed that if the material contains vocals, the generated tracks will be private and cannot be published in Suno.

Next comes an automatic check: if it is a known track, it is rejected and Suno will not work with it.

I couldn't resist: I took a 1-minute fragment of a track by one of our famous artists. It was in the database, i.e. rejected. I removed the vocals and it was accepted. I generated the songs “Someone Else's Wife” v6.0, 6.1.

I took a 1-minute fragment of a famous foreign song: it was also in the database and rejected. It was not accepted with the vocals removed either, probably because of the characteristic riff. I rearranged the bars, breaking up the melody, and it was accepted. The resulting generation: “Someone Else's Wife” v8.0.

Perhaps many will immediately hear a familiar character, a familiar sound… In general, you need to choose a prototype and try to get through this stage. It is better to fit a sparse part (verse), a rich part (chorus) and something from the instrumental interlude into the 1 minute. That way Suno has all the material it needs to generate a track with development.
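Before uploading, it can help to check that a prepared fragment fits the 6…60 second window mentioned above. The sketch below assumes the fragment is an uncompressed WAV file and uses only Python's standard `wave` module; the function names are illustrative, and the limits are the ones quoted in this article, not values read from Suno.

```python
import io
import wave

MIN_SECONDS = 6.0   # lower bound quoted in the article
MAX_SECONDS = 60.0  # upper bound quoted in the article


def wav_duration_seconds(source) -> float:
    """Duration of a WAV given a file path or a binary file-like object."""
    with wave.open(source, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def fits_audio_input(source) -> bool:
    """True if the WAV duration lies within the assumed 6..60 s window."""
    return MIN_SECONDS <= wav_duration_seconds(source) <= MAX_SECONDS


def make_silence(seconds: float, rate: int = 8000) -> io.BytesIO:
    """Build an in-memory mono 16-bit WAV of silence, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(seconds * rate))
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    for seconds in (3, 42, 61):
        print(seconds, "s ->", fits_audio_input(make_silence(seconds)))
```

In practice you would pass the path of your own fragment, for example `fits_audio_input("fragment.wav")` (a hypothetical file name), instead of the generated silence.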

Tempo, tonality, harmony

I was interested in how far I could “impose” on Suno the tempo, key and harmony I needed (things that cannot be set directly). I had used Audio Input before and noticed that almost everything was preserved, but I had not analyzed the results in detail. In examples 1 (“Someone Else's Wife” v6.0, 6.1) and 2 (“Someone Else's Wife” v8.0), the tempo and tonality are preserved, but the harmony only 70%: Suno developed it, but within reasonable limits, as a musician would have done.

It should be noted that, unlike previous experiments that used the [Use the same style] command, this time I also filled in Style. Apparently Suno, having selected a Model, brought in some of the harmony from that Model. By the way, this is exactly the case where the Model could well have been adjusted in tempo and tonality to the fragment “fed” through Audio Input.

I decided to analyze the harmony more carefully. I did not put both the verse and the chorus into one Audio Input fragment, because I was not sure that Suno would “place” the text correctly. So I made separate fragments (piano chords only) for the verse and the chorus, 45 seconds each. Example with bass: see “Strange Girl” v2.1. I had:

in the verse – | Am | Am | Em | Em | Dm | Dm | F | G7 | and in Lyrics only verses.

in the chorus – | C | Em/B | Gm6/Bb | A7 | Dm | Db(#5) | F/C | Gsus4, G7 | – in Lyrics choruses.

Result:

in the verse the basic tonality was preserved, but the system did not just mechanically repeat the harmony; it developed it, so the verses came out different.

in the choruses the system could not cope with the “chromatic” bass at all; it cobbled together something of its own, with changes of tonality in places, but overall with the right character.

The original piano is heard in places and developed in others. The tempo (90 bpm) is preserved too. In the end I assembled one song from 2 verse generations and 2 chorus generations. It seems to have turned out very well, because a couple of friends already want to sing it. However, the vocal part is not simple, both in range and in delivery.

Some “statistics” on this example (“Strange Girl” v2.1): there were 8 generations in total (4 verses, 4 choruses). The tempo was preserved in 7 out of 8. The harmony is harder to assess (a move to the relative key does not count as a change); at a guess, 60% was preserved.

Mixing styles

Style is not only the tempo and character of the rhythm, but also the typical harmony. I decided to “torture” Suno a little – to break both. I made 2 fragments for Audio Input. In both, the sequence is – | Dm | Dbm | Dm | Dbm |… (bass + playing chords on piano):

1st – with House Drum Loop and tempo 130 bpm (15 sec.).

2nd – with Reggae Drum Loop and tempo 90 bpm (22 sec.).

The chords are not typical for either House or Reggae. For the former, the most common would be something like | Am | G, F |, for the latter – | C, G | Am, F | or | Am | G |.

By the way, piano is the most “harmless” timbre for a fragment that conveys the key and chords to Suno, because it fits easily into almost any musical style.

Then, for the 1st fragment (House), I set Style to [Reggae, Male vocals]. The typical tempo for reggae is 80…110 bpm, but the system retained both the tempo (130) and the harmony (Dm | Dbm) of the fragment and added a characteristic bass, brass and something to the drums. Overall, it turned out to be a completely original Reggae at an accelerated tempo.

For the 2nd fragment (Reggae) I set Style to [House, Male vocals]. For House the typical tempo is 115…130 bpm, but the system retained both the tempo (90) and the harmony (Dm | Dbm), developing it slightly. It added slap bass, synths and something to the drums. The result was slow, original and not quite House.
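The mismatch in these two experiments can be written down as a small check. The tempo ranges are the typical values quoted in the text above, not an authoritative reference, and the names used here are illustrative.

```python
# Typical tempo ranges in bpm, as quoted in the article (not a formal standard).
TYPICAL_BPM = {
    "reggae": (80, 110),
    "house": (115, 130),
}

# The two Audio Input experiments: fragment tempo versus the Style that was set.
EXPERIMENTS = [
    {"fragment": "House drum loop", "bpm": 130, "style": "Reggae"},
    {"fragment": "Reggae drum loop", "bpm": 90, "style": "House"},
]


def tempo_fits(genre: str, bpm: float) -> bool:
    """True if `bpm` lies inside the typical range listed for `genre`."""
    low, high = TYPICAL_BPM[genre.lower()]
    return low <= bpm <= high


if __name__ == "__main__":
    for exp in EXPERIMENTS:
        verdict = "typical" if tempo_fits(exp["style"], exp["bpm"]) else "atypical"
        print(f'{exp["fragment"]} at {exp["bpm"]} bpm with Style '
              f'[{exp["style"]}]: {verdict} tempo for that style')
```

Both cases come out as atypical for the requested style, yet in both generations Suno kept the fragment's tempo, which suggests the audio fragment outweighs the genre's usual tempo.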

More experiments

I wanted to “embed” my own characteristic bass riff into a generation. I recorded a fragment (42 sec.): bass + ONE chord on piano + Drum Loop at a tempo of 117 bpm. I entered [Latin, Male voice, Dance, Sultry Singer] in Style and made 2 generations. The tempo and tonality were preserved in both, the harmony is different, and only in one did the bass line remain, as a break. The harmony result is understandable: if there is only one chord, then for Suno it merely sets the tonality. Example with bass: see “Strange Girl” v7.0.

I made a 6-second fragment: just 2 bars of light jazz triplet drums in 4/4 (12/8) plus a single piano note, Gb in the small octave, expecting the key to be Gb (major/minor is not defined here). I generated 6 instrumental tracks with these Styles:

[Jazz, Drums, Jazz Guitar, grand-piano]

[Heavy Rock Blues, Distortion Guitar Solo, Harmonica Solo]

[Reggae, Clean Guitar Solo, Trombone Solo]

Result:

the tempo and triplet pattern were preserved everywhere, but in some places the rhythm became noticeably more complex.

the key in 5 tracks became B major, i.e. the Gb note was treated as the V degree (the dominant) of the key, and only one track came out in minor.

the instruments mentioned were heard everywhere, and bass was added everywhere.

So a single note is perceived (apparently, on statistical grounds) as the dominant, and major seems to be more common, at least in the chosen styles. In general, if you need to hit the key more accurately, you should give a chord. The generations themselves turned out to be quite interesting.
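The arithmetic behind this observation is simple: if a lone note is read as the dominant (V degree), the tonic lies a perfect fifth, i.e. 7 semitones, below it. A minimal sketch follows, with illustrative function names and flat-based spelling of note names, so Cb is reported as its enharmonic equivalent B.

```python
NOTE_NAMES = ["C", "Db", "D", "Eb", "E", "F", "Gb", "G", "Ab", "A", "Bb", "B"]
NATURALS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def pitch_class(note: str) -> int:
    """Pitch class 0..11 for names like 'Gb', 'F#' or 'A'."""
    value = NATURALS[note[0].upper()]
    for accidental in note[1:]:
        if accidental == "b":
            value -= 1
        elif accidental == "#":
            value += 1
        else:
            raise ValueError(f"unknown accidental: {accidental!r}")
    return value % 12


def tonic_if_dominant(note: str) -> str:
    """Tonic of the key in which `note` is the V degree (a fifth below it)."""
    return NOTE_NAMES[(pitch_class(note) - 7) % 12]


if __name__ == "__main__":
    print("Gb as dominant -> tonic", tonic_if_dominant("Gb"))
```

For the Gb fragment this yields B, which matches the key heard in 5 of the 6 generations.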

I took an a cappella recording by a friend. I wrote the same text in Lyrics, and in Style put [Orchestra Strings, Harp] in one case and [Latin Acoustic Guitar] in the other. Quite decent, sometimes unexpected, versions came out. Suno very competently added violins and harp to the existing vocals in the first case, and a Spanish guitar in the second.

CONCLUSIONS

In general, the Audio Input option is a fully functional alternative to setting Style only in text. In my opinion, the quality of generation in this mode is still inferior to what Suno generates on average. One minute is not 3-4 minutes, as in a regular composition, and the system apparently allocates less time for creating and training the Model.

Two approaches

An arranger could probably make a high-quality 1-minute “starter” (“blank”, source) for Audio Input, with exactly the right drums, bass, synth, etc. Such a fragment would sit on one chord and contain “all” the timbres and textures for the verse, the chorus and the bridge, so that the system generates exactly these sounds. This can be called the Maximum approach, with the hope that, given the appropriate Style, the system will generate what we expect. (I have not checked this thoroughly on several examples, but I plan to.) It is quite possible that someone will make such “blanks” specifically to exchange with other users.

And the opposite is to give Suno the Minimum: only the tempo and key, so that the system adds the rest from a ready Model. (Roughly as with a voice dataset, where you give only information about phonemes.) It is quite possible that 2 bars of kick + one chord, or even one note, are enough (I have not tested this in detail yet, but I will).

Accordingly, in both approaches we do not combine the generation result with the original fragment; the fragment serves only as our “alternative way of specifying” Style.

The article was written on August 8, 2024. Technologies are developing rapidly, it is quite possible that in 2-3 months the functionality of Suno will be different.

Previous articles about Suno 1, 2.

My Suno Diary.

Catalog of my Suno songs.

P.S.

Some of the prompts I've collected, individual commands, and maybe even examples of generation with Audio Input, I'll publish in the Diary when I have time. But I think it is more useful for users to run their own experiments: what “worked” for me may not work for someone else, because Suno is something very capacious and constantly changing.

One of the reasons I am so interested in how Suno is built and in the copyright issues is that more than 20 years ago I developed algorithms for “morphing” a MIDI score. In OnyxArranger21 (Jasmine Music Technology, USA) any MIDI file of a finished song could be used as a style template that generated a new texture not only with an arbitrary harmony, but also with a change of time signature (even from 3/4 to 7/8) and other settings. In the program it is easy to create a file in which the melody of “Let It Be” is accompanied, following its chords, by the accompaniment of “Stairway to Heaven”. That is, Led Zeppelin “accompany” The Beatles. In effect, this is reusing a style in another composition. I did not consider such a result a masterpiece, but morphing that respects musical rules is a difficult and interesting algorithmic task.

I was asked about Udio, so I tried it a little… Amazingly expressive vocals, and cleaner than Suno's. In general, their sound quality is better. In terms of interface and convenience it is, in my opinion, inferior to Suno. In terms of functionality (parameters) it is wider than Suno. I have had no time to work with it properly yet.